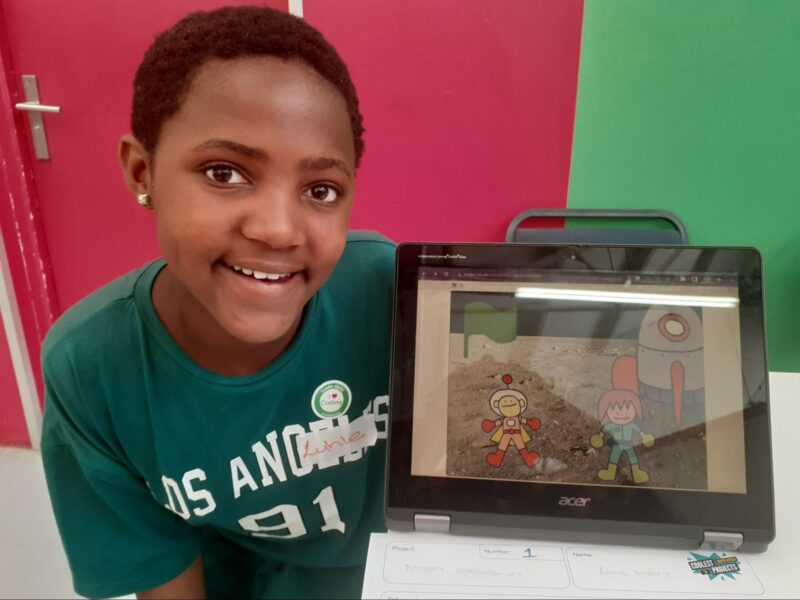

Each year, young people all over the world share and celebrate their amazing tech creations by taking part in Coolest Projects, our digital technology showcase. Our global online showcase and local in-person events give kids a wonderful opportunity to celebrate their creativity with their communities, explore other young creators’ tech projects, and gain inspiration and encouragement for their future projects.

Now, visitors to the Young V&A museum in London can also be inspired by some of the incredible creations showcased at Coolest Projects. The museum has recently reopened after a major reimagining, and some of the inspiring projects by Coolest Projects 2022 participants are now on display in the Design Gallery, ready to spark digital creativity among more young people.

Projects to solve problems

Many Coolest Projects participants showcase projects that they created to make an impact and solve a real-world problem that’s important to them, for example to help members of their local community, or to protect the environment.

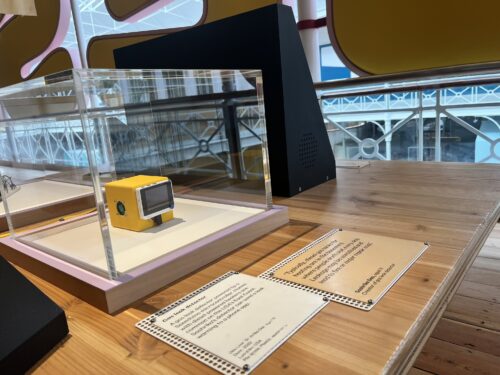

One example on display in the Young V&A gallery is EleVoc, by 15-year-old Chinmayi from India. Chinmayi was inspired to create her project after she and her family faced a frightening encounter:

“My family and I are involved in wildlife conservation. One time we were charged by elephants even though we were only passing by in a Jeep. This was my first introduction to human–animal conflict, and I wanted to find a way to solve it!” – Chinmayi

The experience prompted Chinmayi to create EleVoc, an early-warning device designed to reduce human–elephant conflict by detecting and classifying different elephant sounds and alerting nearby villages to the elephants’ proximity and behaviour.

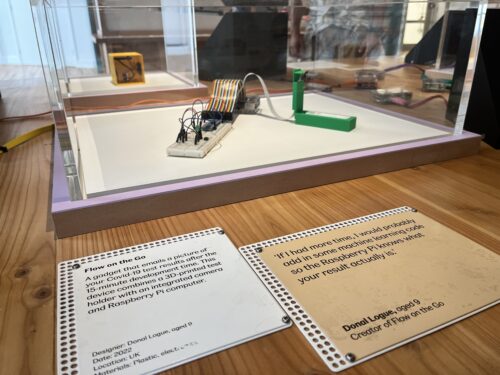

Also exhibited at the Young V&A is the hardware project Gas Leak Detector by Sashrika, aged 11, from the USA. Gas Leak Detector is a device that detects whether a fuel tank for a diesel-powered heating system is leaking and notifies householders through an app in a matter of seconds.

Sashrika knew this invention could really make a difference to people’s lives. She explained, “Typically, diesel gas tanks for heating are in the basement where people don’t visit every day. Leakage may be unnoticed and lead to fire or major repair cost.”

Projects to have fun

As well as projects designed to solve problems, Coolest Projects also welcomes projects that young people create to entertain or simply to have fun.

At the Young V&A, visitors can enjoy the fun, fast-paced game project Runaway Nose, by 10-year-old Harshit from Ireland. Runaway Nose uses facial recognition, and players have to use their nose to interact with the prompts on the screen.

Harshit shared the motivation behind his project:

“I wanted to make a fun game to get you thinking fast and that would get you active, even on a rainy day.” – Harshit

We can confirm Runaway Nose is a lot of fun, and a must-do activity for people of all ages on a visit to the museum.

Join in the celebration!

If you are in London, make sure to head to the Young V&A to see Chinmayi’s, Sashrika’s, and Harshit’s projects, and many more. We love seeing the ingenuity of the global community of young tech creators celebrated, and hope it inspires you and your young people.

With that in mind, we are excited that Coolest Projects will be back in 2024. Registrations for the global Coolest Projects online showcase will be open from 14 February to 22 May 2024, and any young creator up to age 18 anywhere in the world can get involved. We’ll also be holding in-person Coolest Projects events for young people in Ireland and the UK. Head to the Coolest Projects website to find out more.

Coolest Projects is for all young people, no matter their level of coding experience. Kids who are just getting started and would like to take part can check out the free project guides on our projects site. These offer step-by-step guidance to help everyone make a tech project they feel proud of.

To always get the latest news about all things Coolest Projects, from event updates to the fun swag coming for 2024, sign up for the Coolest Projects newsletter.

The post Celebrating young Coolest Projects creators at a London museum appeared first on Raspberry Pi Foundation.
