Generative AI (GenAI) tools like GitHub Copilot and ChatGPT are rapidly changing how programming is taught and learnt. These tools can solve assignments with remarkable accuracy. GPT-4, for example, scored an impressive 99.5% on an undergraduate computer science exam, compared to Codex’s 78% just two years earlier. With such capabilities, researchers are shifting from asking, “Should we teach with AI?” to “How do we teach with AI?”

Leo Porter and Daniel Zingaro have spearheaded this transformation through their groundbreaking undergraduate programming course. Their innovative curriculum integrates GenAI tools to help students tackle complex programming tasks while developing critical thinking and problem-solving skills.

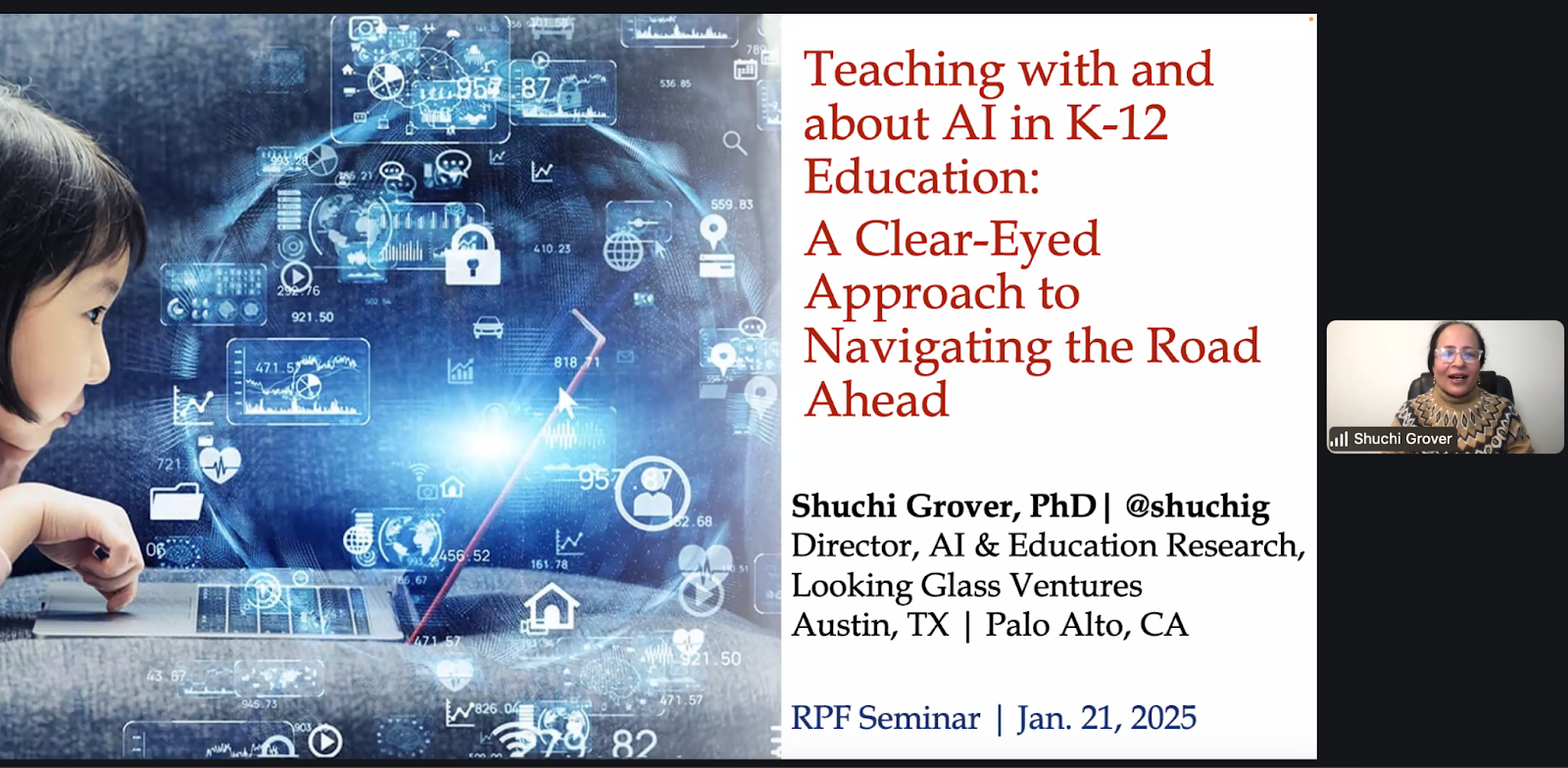

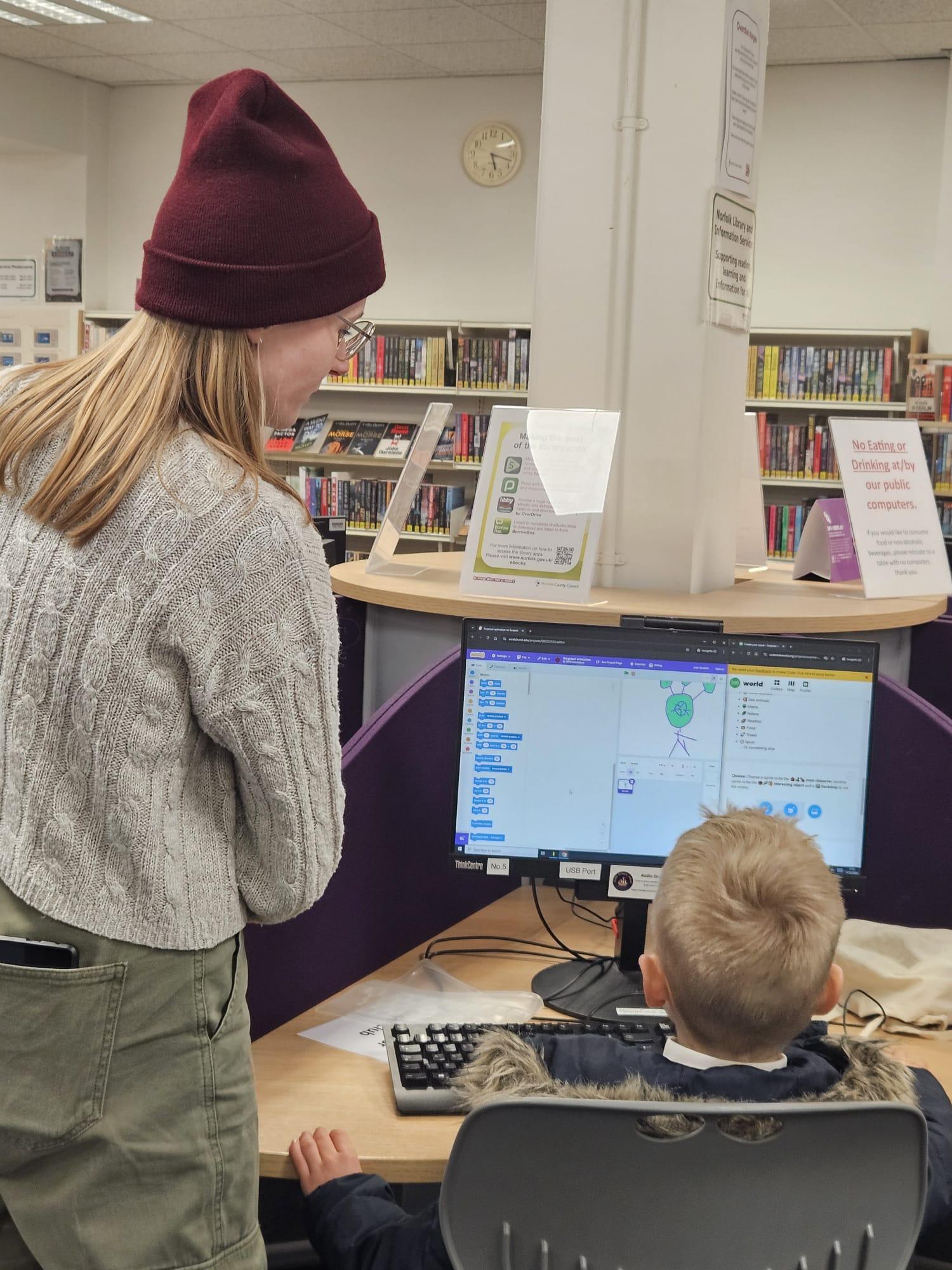

Leo and Daniel presented their work at the Raspberry Pi Foundation research seminar in December 2024. During the seminar, it became clear that there is much to learn from their work, and that their insights are particularly relevant for secondary school teachers who are thinking about using GenAI in their programming classes.

Practical applications in the classroom

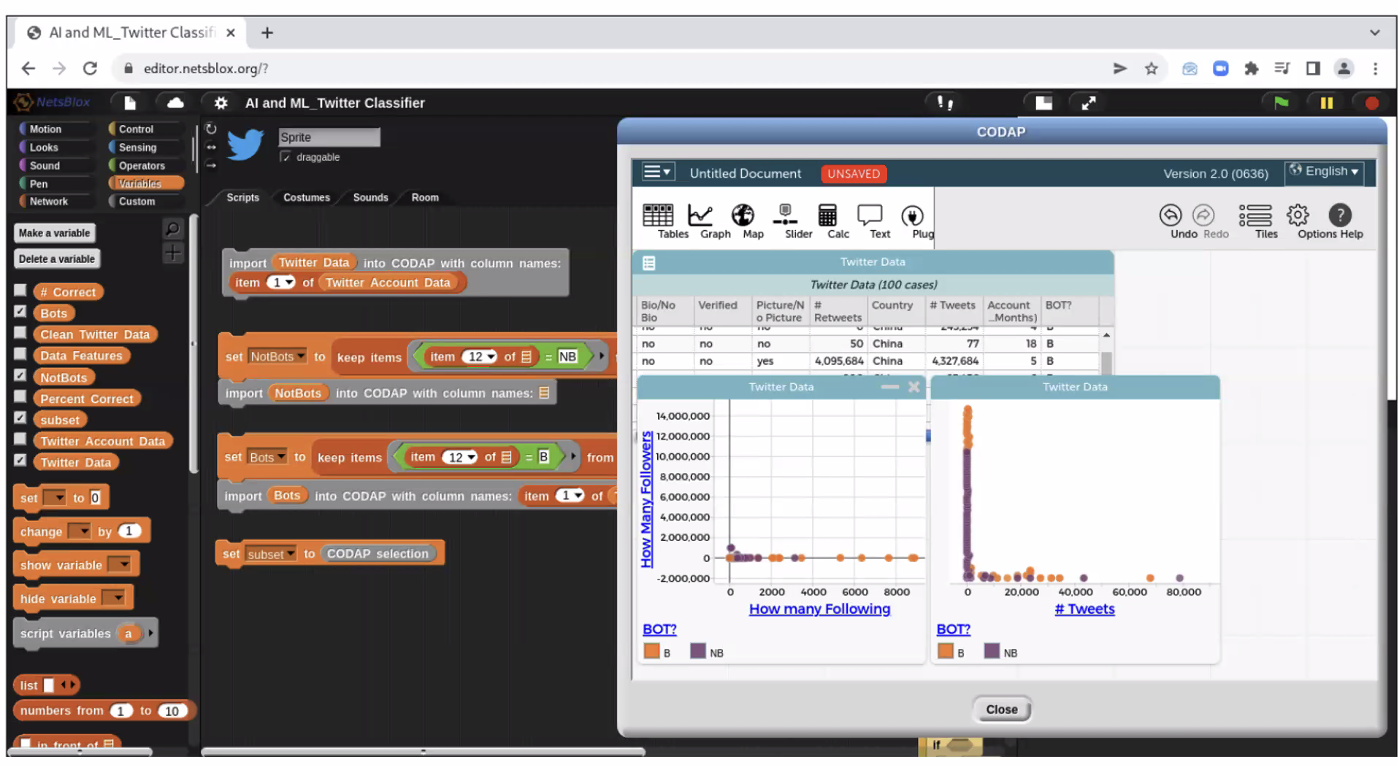

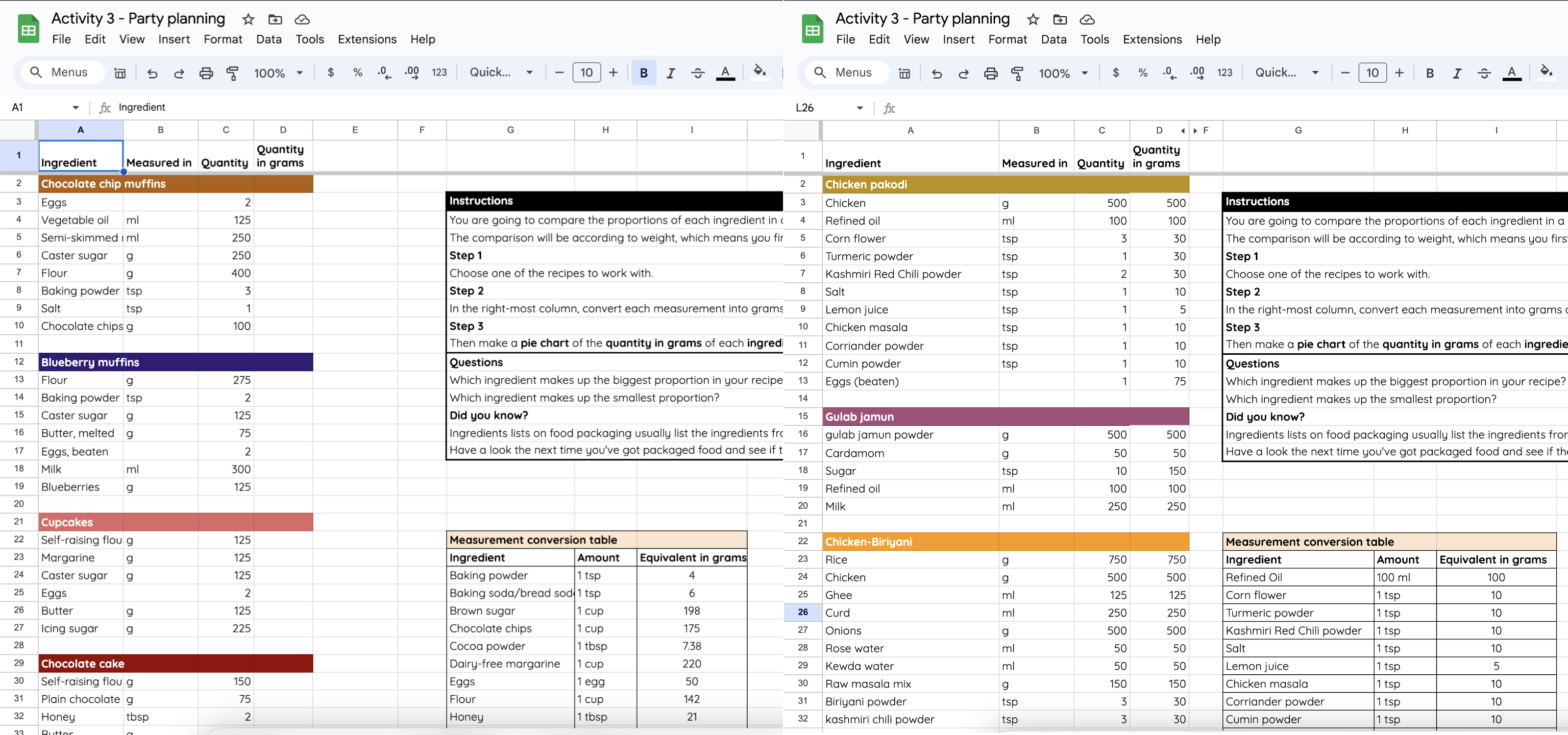

In 2023, Leo and Daniel introduced GitHub Copilot into CS1-LLM, their introductory programming course at UC San Diego, which had 550 students. The course included creative, open-ended projects that allowed students to explore their interests while applying the skills they'd learnt. The projects covered the following areas:

- Data science: Students used Kaggle datasets to explore questions related to their fields of study — for example, neuroscience majors analysed stroke data. The projects encouraged interdisciplinary thinking and practical applications of programming.

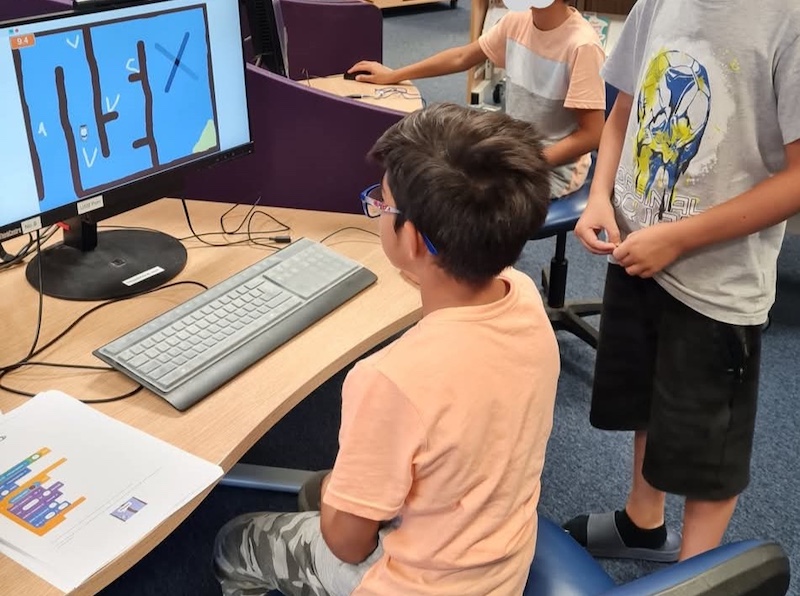

- Image manipulation: Students worked with the Python Imaging Library (PIL) to create collages and apply filters to images, showcasing their creativity and technical skills.

- Game development: A project focused on designing text-based games encouraged students to break down problems into manageable components while using AI tools to generate and debug code.
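To make the decomposition idea more concrete, here is a minimal sketch of how a text-based game might be split into input handling, game state updates, and rendering functions. The room map, commands, and function names (`parse_command`, `update_state`, `render`, `play`) are hypothetical and are not taken from the course materials. `play` takes a list of commands instead of calling `input()` so that each piece can be tested on its own.

```python
# A hypothetical text-based game, decomposed into small functions.
# The rooms and commands are invented for illustration only.

ROOMS = {
    "hall": {"north": "library", "east": "kitchen"},
    "library": {"south": "hall"},
    "kitchen": {"west": "hall"},
}


def parse_command(raw):
    """Input handling: turn raw text into a (verb, argument) pair."""
    words = raw.strip().lower().split()
    if not words:
        return ("", "")
    if len(words) == 1:
        return (words[0], "")
    return (words[0], words[1])  # extra words are ignored


def update_state(state, command):
    """Game state update: return a new state after applying a command."""
    verb, arg = command
    new_state = dict(state)  # copy, so the old state is left unchanged
    if verb == "go" and arg in ROOMS[state["room"]]:
        new_state["room"] = ROOMS[state["room"]][arg]
        new_state["message"] = ""
    elif verb == "quit":
        new_state["running"] = False
        new_state["message"] = "Goodbye!"
    else:
        new_state["message"] = "You can't do that here."
    return new_state


def render(state):
    """Rendering: describe the current state as text for the player."""
    exits = ", ".join(sorted(ROOMS[state["room"]]))
    lines = [f"You are in the {state['room']}. Exits: {exits}."]
    if state["message"]:
        lines.append(state["message"])
    return "\n".join(lines)


def play(commands):
    """Run the game on a list of commands and return each screen of output."""
    state = {"room": "hall", "running": True, "message": ""}
    output = [render(state)]
    for raw in commands:
        state = update_state(state, parse_command(raw))
        output.append(render(state))
        if not state["running"]:
            break
    return output


if __name__ == "__main__":
    print("\n".join(play(["go north", "go west", "quit"])))
```

Here the player moves into the library, has an invalid move rejected, and then quits. Because each function has a single job, a student could describe each one to Copilot in a separate prompt and check its output in isolation.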

Students consistently reported that these projects were not only enjoyable but also deepened their understanding of programming concepts. A majority (74%) found the projects helpful or extremely helpful for their learning. One student noted:

“Programming projects were fun and the amount of freedom that was given added to that. The projects also helped me understand how to put everything that we have learned so far into a project that I could be proud of.”

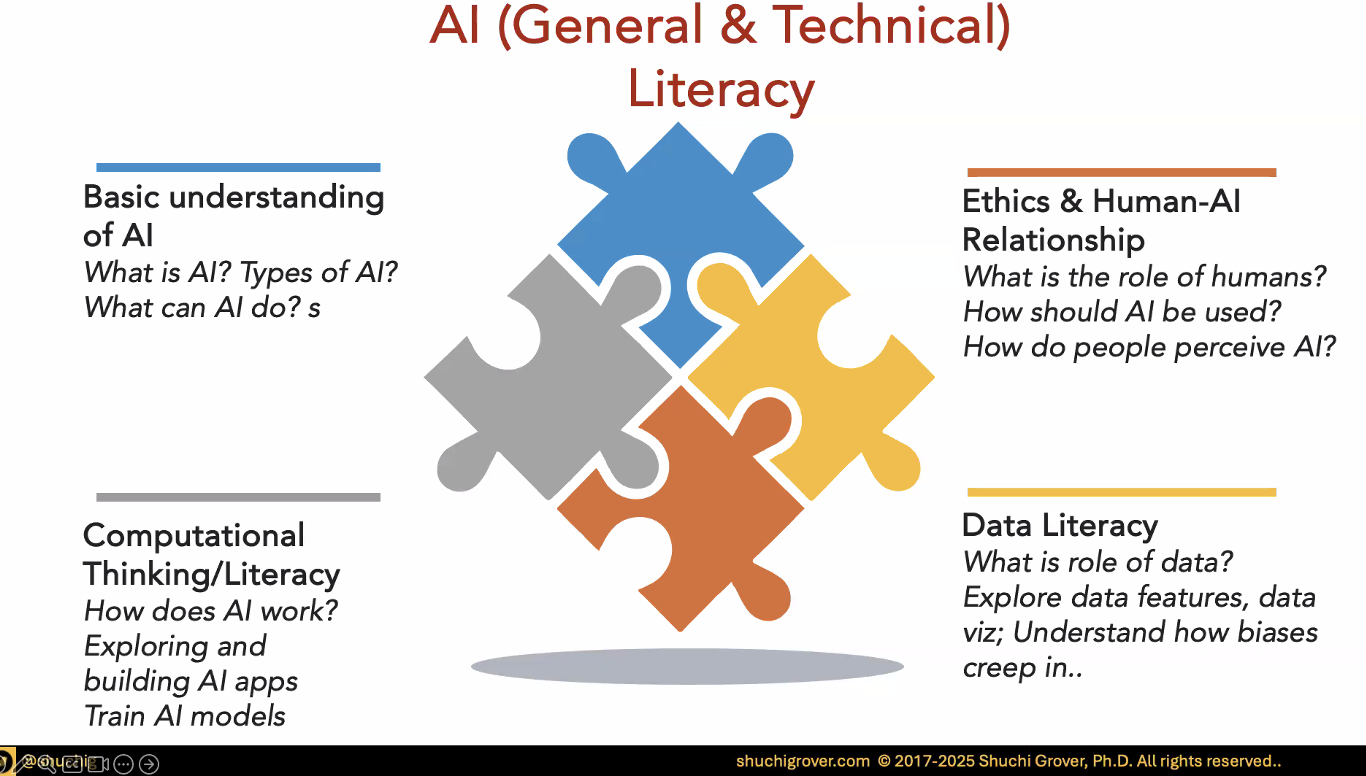

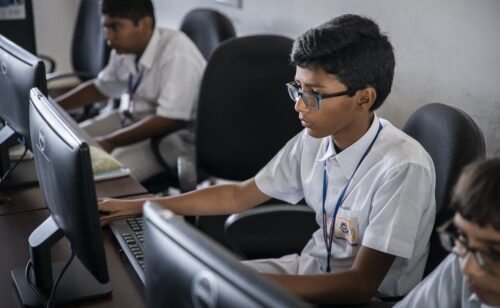

Core skills for programming with Generative AI

Leo and Daniel emphasised that teaching programming with GenAI involves fostering a mix of traditional and AI-specific skills.

Their approach centres on six core competencies:

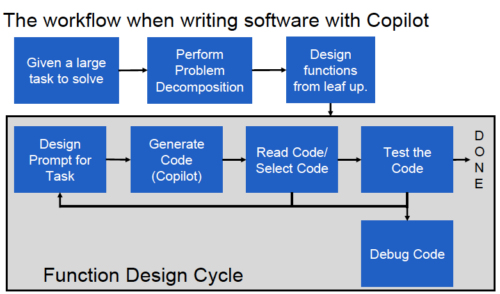

- Prompting and function design: Students learn to write precise prompts for AI tools, honing their ability to describe, for instance, a function's purpose, inputs, and outputs. This clarity improves the output from the AI tool and reinforces students' understanding of task requirements.

- Code reading and selection: AI tools can generate many different solutions to the same problem, so students need to evaluate the options critically. They are taught to identify which solution is most likely to solve their problem effectively.

- Code testing and debugging: Students practise open- and closed-box testing, learning to identify edge cases and debug code using tools like doctest and the VS Code debugger.

- Problem decomposition: Breaking down large projects into smaller functions is essential. For instance, when designing a text-based game, students might separate tasks into input handling, game state updates, and rendering functions.

- Leveraging modules: Students explore new programming domains and identify useful libraries through interactions with Copilot. This prepares them to solve problems efficiently and creatively.

- Ethical and metacognitive skills: Students engage in discussions about responsible AI use and reflect on the decisions they make when collaborating with AI tools.
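As an illustration of how prompting, code selection, and testing can fit together, the sketch below uses a docstring as the kind of precise function description a student might give Copilot, with doctest examples that cover edge cases. The function `count_long_words`, the test cases, and `candidate_a` are all hypothetical: `candidate_a` stands in for a plausible but flawed suggestion and is not real Copilot output. Running the same tests against both versions shows which one to keep.

```python
import doctest

# Hypothetical test cases, written from the function description before
# reading any suggested code. Each entry is ((sentence, min_length), expected).
CASES = [
    (("The quick brown fox", 5), 2),
    (("", 3), 0),                 # edge case: empty string
    (("Wow!!! Amazing.", 4), 1),  # edge case: punctuation attached to words
]


def candidate_a(sentence, min_length):
    # A plausible-looking suggestion with two bugs: it uses > instead of >=,
    # and it counts punctuation as part of each word's length.
    return len([w for w in sentence.split() if len(w) > min_length])


def count_long_words(sentence, min_length):
    """Return how many words in sentence are at least min_length characters long.

    Words are separated by whitespace. Punctuation at either end of a
    word is ignored when measuring its length.

    >>> count_long_words("The quick brown fox", 5)
    2
    >>> count_long_words("", 3)
    0
    >>> count_long_words("Wow!!! Amazing.", 4)
    1
    """
    count = 0
    for word in sentence.split():
        # Strip common punctuation from both ends before measuring.
        if len(word.strip(".,!?;:")) >= min_length:
            count += 1
    return count


def passes_all(func):
    """Return True if func gives the expected answer for every case."""
    return all(func(*args) == expected for args, expected in CASES)


if __name__ == "__main__":
    print("candidate_a passes:", passes_all(candidate_a))            # False
    print("count_long_words passes:", passes_all(count_long_words))  # True
    doctest.testmod()  # prints nothing when every docstring example passes
```

Writing the test cases from the description before reading any suggestion keeps the evaluation honest: `candidate_a` looks reasonable at a glance, but it fails both the boundary case (five-letter words with `min_length` set to 5) and the punctuation case.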

Adapting assessments for the AI era

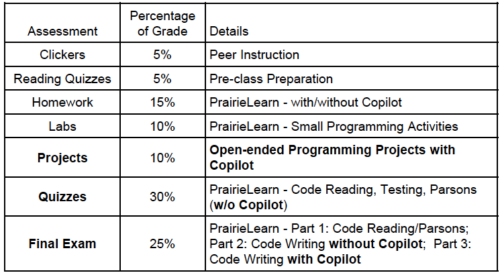

The rise of GenAI has prompted educators to rethink how they assess programming skills. In the CS1-LLM course, traditional take-home assignments were de-emphasised in favour of assessments that focused on process and understanding.

- Quizzes and exams: Students were evaluated on their ability to read, test, and debug code — skills critical for working effectively with AI tools. Final exams included both tasks that required independent coding and tasks that required use of Copilot.

- Creative projects: Students submitted projects alongside a video explanation of their process, emphasising problem decomposition and testing. This approach highlighted the importance of critical thinking over rote memorisation.

Challenges and lessons learnt

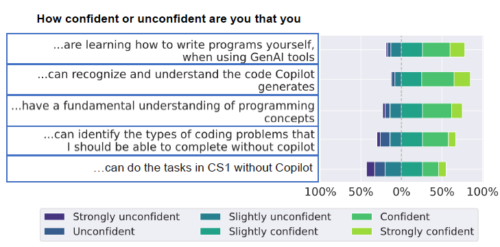

While Leo and Daniel reported that the integration of AI tools into their course has been largely successful, it has also introduced challenges. Surveys revealed that some students felt overly dependent on AI tools, expressing concerns about their ability to code independently. Addressing this will require striking a balance between leveraging AI tools and reinforcing foundational skills.

Additionally, ethical concerns around AI use, such as plagiarism and intellectual property, must be addressed. Leo and Daniel incorporated discussions about these issues into their curriculum to ensure students understand the broader implications of working with AI technologies.

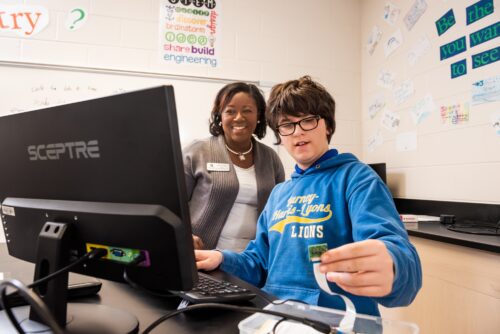

A future-oriented approach

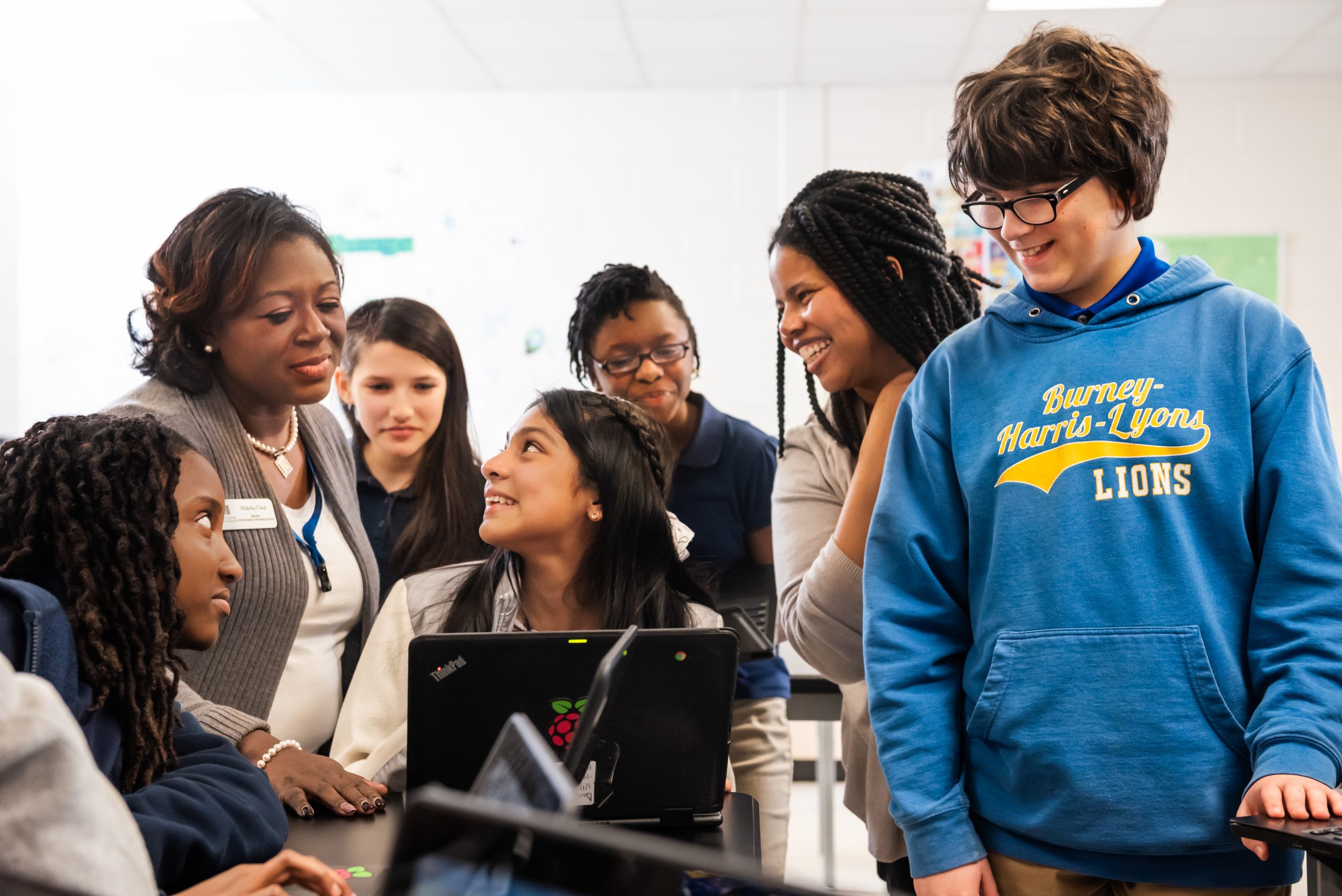

Leo and Daniel's work demonstrates that GenAI can transform programming education, making it more inclusive, engaging, and relevant. Their course attracted a diverse cohort, including many students who are traditionally underrepresented in computer science: 52% of the students were female, and 66% were not majoring in computer science. This highlights the potential of AI-powered learning to broaden participation in computer science.

By embracing this shift, educators can prepare students not just to write code but also to think critically, solve real-world problems, and effectively harness the AI innovations shaping the future of technology.

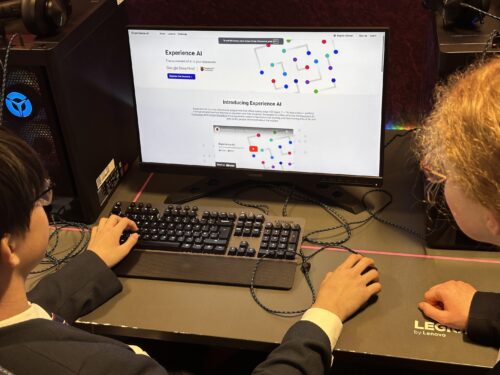

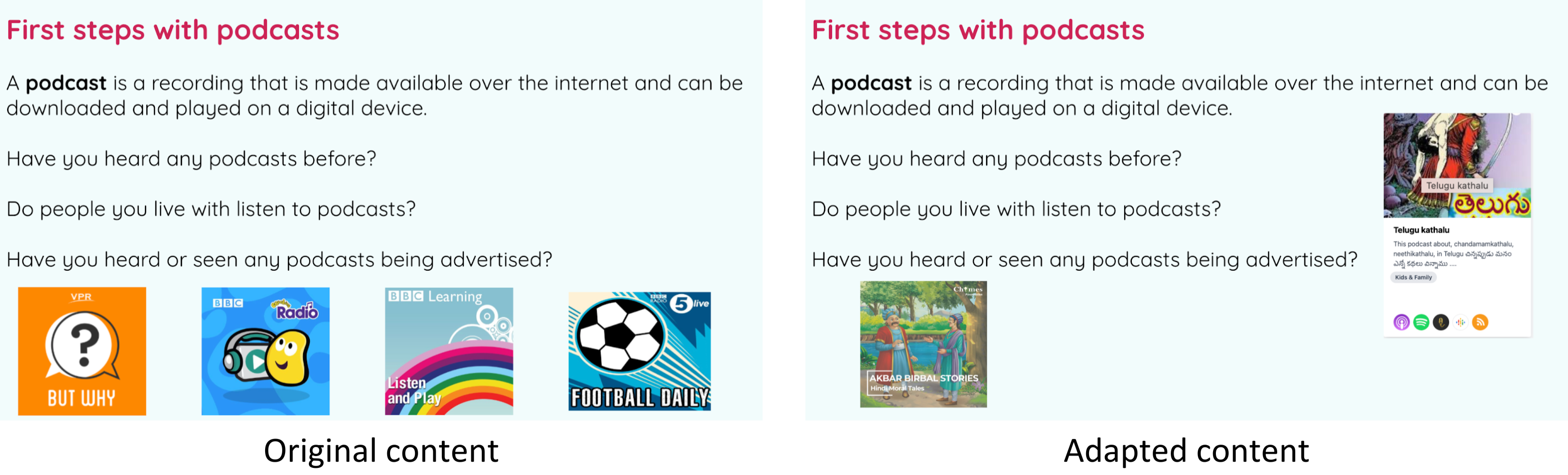

If you’re an educator interested in using GenAI in your teaching, we recommend checking out Leo and Daniel’s book, Learn AI-Assisted Python Programming, as well as their course resources on GitHub. You may also be interested in our own Experience AI resources, which are designed to help educators navigate the fast-moving world of AI and machine learning technologies.

Join us at our next online seminar on 11 March

Our 2025 seminar series is exploring how we can teach young people about AI technologies and data science. At our next seminar on Tuesday, 11 March at 17:00–18:00 GMT, we’ll hear from Lukas Höper and Carsten Schulte from Paderborn University. They’ll be discussing how to teach school students about data-driven technologies and how to increase students’ awareness of how data is used in their daily lives.

To sign up and take part in the seminar, click the button below — we’ll then send you information about joining. We hope to see you there.

The schedule of our upcoming seminars is online. You can catch up on past seminars on our previous seminars and recordings page.

The post Integrating generative AI into introductory programming classes appeared first on Raspberry Pi Foundation.