Some Ubisoft developers feel like they’ve been here before and they’re tired of it

The post Frustration Inside Ubisoft: ‘This Is Probably The Most Embarrassed I Have Felt Working Somewhere’ appeared first on Kotaku.

What a wicked game to play, to make me feel this way

The post 10-Year-Old Sims 4 Sex Mod With Full Nudity Is Still Shockingly Popular appeared first on Kotaku.

The White House doubled down after posting a digitally altered photo of Minnesota protester Nekima Levy Armstrong, dismissing it as a “meme” despite objections from her attorney and comparisons to reality-distorting propaganda. “YET AGAIN to the people who feel the need to reflexively defend perpetrators of heinous crimes in our country I share with you this message: Enforcement of the law will continue. The memes will continue. Thank you for your attention to this matter,” White House spokesperson Kaelan Dorr wrote in a post on X. The Hill reports: The statement came after Homeland Security Secretary Kristi Noem posted a photo of Armstrong’s arrest Thursday showing Armstrong with what appears to be a blank facial expression. However, the White House later posted an altered version of the same photo that shows Armstrong crying.

Armstrong’s attorney Jordan Kushner said in an interview with CNN that an agent was recording Armstrong’s arrest on their cellphone. “I’ve never seen anything like it. It’s so unprofessional,” Kushner said. “He was ordered to do it because the government was looking to make a spectacle of this case. I observed the whole thing. She was dignified, calm, rational the whole time.” Kushner went on to call the move to alter the photo “a hallmark of a fascist regime where they actually alter reality.”

Read more of this story at Slashdot.

Xbox and Playground Games are reviving the Fable series later this year on both Xbox and PlayStation

The post Fable: Everything We Know About The Open-World Fantasy RPG Reboot appeared first on Kotaku.

Watching an NBA game in the Apple Vision Pro feels like a glimpse of where sports and entertainment need to go, even if the path forward is still taking shape. Apple is clearly experimenting with what watching sports can feel like when you are no longer locked into a flat television broadcast.

I recently went onto the court at an immersive Lakers game from the confines of Ian Hamilton’s Vision Pro, which I borrowed from him in New York City. This was not a live broadcast: I watched the game on demand via the Spectrum SportsNet app, after the fact, in guest mode on his headset, wearing my own Dual Knit Band. Leaving my Quest 3 behind and spending extended time in an immersive Apple experience left me both impressed and conflicted.

Viewers are given a choice about how to watch an NBA game in headset.

You can watch the game on a floating virtual screen, which already feels cleaner and more cinematic than a traditional TV, or switch into a fully immersive 180-degree 3D view for a full two-hour, cut-together presentation of the game from start to finish. That second option is where the experience shows the most potential, but we also shouldn’t dismiss the first mode. It can be more easily shared in mixed reality with other apps and people, making the experience a bit like an IMAX version of an NBA game without any of the typical distractions. Ian showed me a Jupiter environment in his headset too, and I could’ve watched the game there, surrounded by the gigantic planet and glimmering stars. Even so, I dropped into the immersive mode for most of my time with the game.

In immersive mode, you are limited to a small set of camera perspectives and a singular timeline through the game. There are cameras mounted beneath each basket at opposite ends of the court, a ground-level center-court view, and a wider angle from up in the stands. Those angles are sufficient for following the game. Most intriguing about my time in this mode is that some of the most compelling moments had little to do with the action on the court.

The cutaways to commentators and sideline reporters stood out immediately. Interviews are presented in 3D and human scale, and that changes how you perceive the people on screen. You see their entire bodies rather than a cropped head-and-shoulders shot, and they feel more like they’re standing right there talking to you. The sense of scale is immediate and lasting. You can also tell how tall these players actually are and start noticing details you would never catch on television, like a birthmark on a shoulder or sweat collecting along an arm.

An Apple Immersive NBA broadcast feels intimate in a way traditional broadcasts are not. That intimacy is powerful, but it also highlights a challenge immersive sports production will have to solve. At one moment, feeling present on the court can be a good thing, and the next it can feel uncomfortably close. Immersive broadcasts still need to learn where that line is, and how to stay on the right side of it from moment to moment. In something like the recent Tour De Force MotoGP documentary, the immersive filmmakers had quite a bit more time to prepare around a very specific narrative, and you can feel the difference moment to moment.

For basketball, the immersive cameras provided terrific close-up views of plenty of interesting things outside the game too. Instead of watching commercials you’re watching the Laker Girls during breaks, and their performances in 3D at human scale again reinforce the difference from television. You feel as if you are standing there, close enough to appreciate movement, spacing, and physicality. During commercial breaks, you can watch the crew wipe down the court, see players and staff milling about, and catch the in-between moments that usually disappear when the feed cuts away. Those behind-the-scenes details add texture and strengthen the feeling that you are actually inside the arena, not just consuming a polished broadcast.

The experience shows more friction once active gameplay ramps up. When using the center-court camera, the action constantly moves left to right and back again. That means repeatedly turning your head to follow the play unless the feed switches to one of the basket cameras. Over time, that motion becomes tiring.

I found myself wishing for more camera options, or better yet, the ability to manually switch views during the replay. An Immersive Highlights clip separate from the full broadcast pulls together some of the best moments seen from Apple’s cameras over the course of the game, and at less than 10 minutes long, it offers a great way to see some of LeBron James’ best moments from behind the backboard without giving too much time to neck strain. Basketball broadcasts have always been built around wide shots that let you see the entire floor at once. In immersive VR at certain angles, the constant side-to-side motion means your head and neck are doing more work than they ever would in front of a TV or even at the game itself.

Even with the Dual Knit Band, the Vision Pro is heavy. Coming from extended daily use with a Meta Quest 3, I felt the Vision Pro’s weight pushing down on my face immediately, and it stayed there throughout my time. For shorter sessions, it is manageable. For longer viewing, headset weight may be the biggest thing holding this use case back, even if it isn’t the only thing.

Immersive viewing isn’t just the future of sports, concerts, and entertainment – it’s here today, to quote William Gibson, just “not evenly distributed.” The sense of presence here is too compelling to ignore. What feels less certain is how quickly the hardware evolves, how the technical implementation will improve, and how it will scale to become mainstream.

Ian’s hands-on experiences with Steam Frame suggest a much more lightweight headset that could be worn for extended periods, and he showed me how slim the Bigscreen Beyond headset is, which takes the small and light form factor to the extreme. Still, he hasn’t worn the Frame for an extended period yet, and neither Apple nor the headset manufacturers have shown any indication that Apple’s top-tier immersive programming is coming to any headset other than one with an Apple logo shown at startup.

So, much as it was in 2016 and in 2024, immersive sports right now still feel like a glimpse of the future, even if they work today. It is not a default viewing mode. What Apple is doing with Vision Pro and Apple Immersive is not a finished product. It is a preview. And as previews go, this one is strong enough to make me want more, even as it makes clear how much work remains to create a mass-market experience.

Jeffrey Snover, the driving force behind PowerShell, has retired after a career that reshaped Windows administration. The Register reports: Snover’s retirement comes after a brief sojourn at Google as a Distinguished Engineer, following a lengthy stint at Microsoft, during which he pulled the company back from imposing a graphical user interface (GUI) on administrators who really just wanted a command line from which to run their scripts. Snover joined Microsoft as the 20th century drew to a close. The company was all about its Windows operating system and user interface in those days — great for end users, but not so good for administrators managing fleets of servers. Snover correctly predicted a shift to server datacenters, which would require automated management. A powerful shell… a PowerShell, if you will.

[…] Over the years, Snover has dropped the occasional pearl of wisdom or shared memories from his time getting PowerShell off the ground. A recent favorite concerns the naming of Cmdlets and their original name in Monad: Function Units, or FUs. Snover wrote: “This abbreviation reflected the Unix smart-ass culture I was embracing at the time. Plus I was developing this in a hostile environment, and my sense of diplomacy was not yet fully operational.” Snover doubtless has many more war stories to share. In the meantime, however, we wish him well. Many admins owe Snover thanks for persuading Microsoft that its GUI obsession did not translate to the datacenter, and for lengthy careers in gluing enterprise systems together with some scripted automation.

Read more of this story at Slashdot.

This is a short video of a bengal cat that cows instead of meows. What a cutie! My cats meow. And sometimes they chitter at birds outside the window. Occasionally they’ll hack up some fur on the comforter. They don’t cow though. Neither do my dogs. My dogs do have a cow though if you don’t feed them on time. Which, according to their horrible internal clocks, is actually an hour before time. It’s dark early because it’s cloudy out, get it together.

An anonymous reader quotes a report from TechCrunch: Microsoft provided the FBI with the recovery keys to unlock encrypted data on the hard drives of three laptops as part of a federal investigation, Forbes reported on Friday. Many modern Windows computers rely on full-disk encryption, called BitLocker, which is enabled by default. This type of technology should prevent anyone except the device owner from accessing the data if the computer is locked and powered off.

But, by default, BitLocker recovery keys are uploaded to Microsoft’s cloud, allowing the tech giant — and by extension law enforcement — to access them and use them to decrypt drives encrypted with BitLocker, as with the case reported by Forbes. The case involved several people suspected of fraud related to the Pandemic Unemployment Assistance program in Guam, a U.S. island in the Pacific. Local news outlet Pacific Daily News covered the case last year, reporting that a warrant had been served to Microsoft in relation to the suspects’ hard drives.

Kandit News, another local Guam news outlet, also reported in October that the FBI requested the warrant six months after seizing the three laptops encrypted with BitLocker. […] Microsoft told Forbes that the company sometimes provides BitLocker recovery keys to authorities, having received an average of 20 such requests per year.

Read more of this story at Slashdot.

We may earn a commission from links on this page. Deal pricing and availability subject to change after time of publication.

360-degree cameras like the GoPro Max2 are designed for creators who want to capture more than what a single-lens camera, like the GoPro Hero series, can handle. Right now, the GoPro Max2 action camera is down from $499 to $399 on Amazon, marking its lowest price ever.

Compared to the Max1, the Max2 has a higher 8K resolution and 29MP stills, resulting in sharper, more detailed footage and photos, while 10-bit color, GP-Log, and up to 14FPS Raw capture with 3D Tracking focus make it appealing for pros or anyone who wants more flexibility in post-production. It shoots 360-degree spherical video, unlike the single-lens action cams like the Hero, earning it an Editors’ Choice Award from PCMag. This lets you record everything in your vicinity and reframe at any level, allowing for even more creative possibilities when editing.

With a magnesium chassis and weather protection, it’s built for adventures and high-impact environments, with waterproofing up to 5 meters, a compact build, easy-to-replace lenses, and a variety of mounting options. As part of the GoPro ecosystem, it works seamlessly with the GoPro Quik video editing app for edits and reframing, while Bluetooth mic support and voice control make it even more versatile.

Still, compared to the Hero series and its sensor design, low-light performance may be weaker, and battery life varies depending on whether you’re shooting with both lenses, the resolution, and the frame rate. Long 8K sessions will be more demanding, during which heat buildup can also happen. Slow motion also maxes out at 100 fps, and editing isn’t as slick or built for social shares as platforms like Insta360.

If your priority is immersive storytelling, post-shoot reframing, and more creative freedom, the GoPro Max2 action camera is a strong choice for pros and casual users at $100 off, and a major upgrade over the original. But if you mainly want traditional action footage, often shoot in low-light conditions, and want longer battery life, the less-niche Hero line may suffice.

Whiskerwood is out now on PC via early access, but for the most part it feels like a finished game

The post Careful, This City-Builder Starring Cute Mice And Evil Royal Cats Might Suck Away All Your Free Time appeared first on Kotaku.

If you’ve ever fallen down a Wikipedia rabbit hole or spent a pleasant evening digging through college library stacks, you know the joy of a good research puzzle. Every new source and cross-reference you find unlocks an incremental understanding of a previously unknown world, forming a piecemeal tapestry of knowledge that you can eventually look back at as a cohesive and well-known whole.

TR-49 takes this research process and operationalizes it into an engrossing and novel piece of heavily non-linear interactive fiction. Researching the myriad sources contained in the game’s mysterious computer slowly reveals a tale that’s part mystery, part sci-fi allegory, part family drama, and all-compelling alternate academic history.

The entirety of TR-49 takes place from a first-person perspective as you sit in front of a kind of Steampunk-infused computer terminal. An unseen narrator asks you to operate the machine but is initially cagey about how or why or what you’re even looking for. There’s a creepy vibe to the under-explained circumstances that brought you to this situation, but the game never descends into the jump scares or horror tropes of so many other modern titles.

Because the internet is long past its expiration date but we’re still gulping it down anyways curdled chunks and all, this is a video created by Bill McClintock (previously) featuring NSYNC’s ‘Bye Bye Bye’ mashed up with Slipknot’s ‘The Devil In I’, with a dash of Mötley Crüe to complete the recipe. Now this is not a dish I ever thought anybody would make, but look how amazing it turned out! It belongs on the cover of Bone Appleteeth magazine.

In Big Tech’s never-ending quest to increase AI adoption, Google has unveiled a meme generator. The new Google Photos feature, Me Meme, lets you create personalized memes starring a synthetic version of you.

Google describes Me Meme as “a simple way to explore with your photos and create content that’s ready to share with friends and family.” You can choose from a variety of templates or “upload your own funny picture” to use in their place.

The feature isn’t live for everyone yet, so you may not yet have access to it. (A Google representative told TechCrunch that the feature will roll out to Android and iOS users over the coming weeks.) But once it arrives, you can use it in the Google Photos app by tapping Create (at the bottom of the screen), then Me Meme. It will then ask you to choose a template and add a reference photo. There’s an option to regenerate it if you don’t like the result.

Google says Me Meme works best with well-lit, focused and front-facing portrait photos. “This feature is still experimental, so generated images may not perfectly match the original photo,” the company warns.

This article originally appeared on Engadget at https://www.engadget.com/ai/google-photos-can-now-turn-you-into-a-meme-213930935.html?src=rss

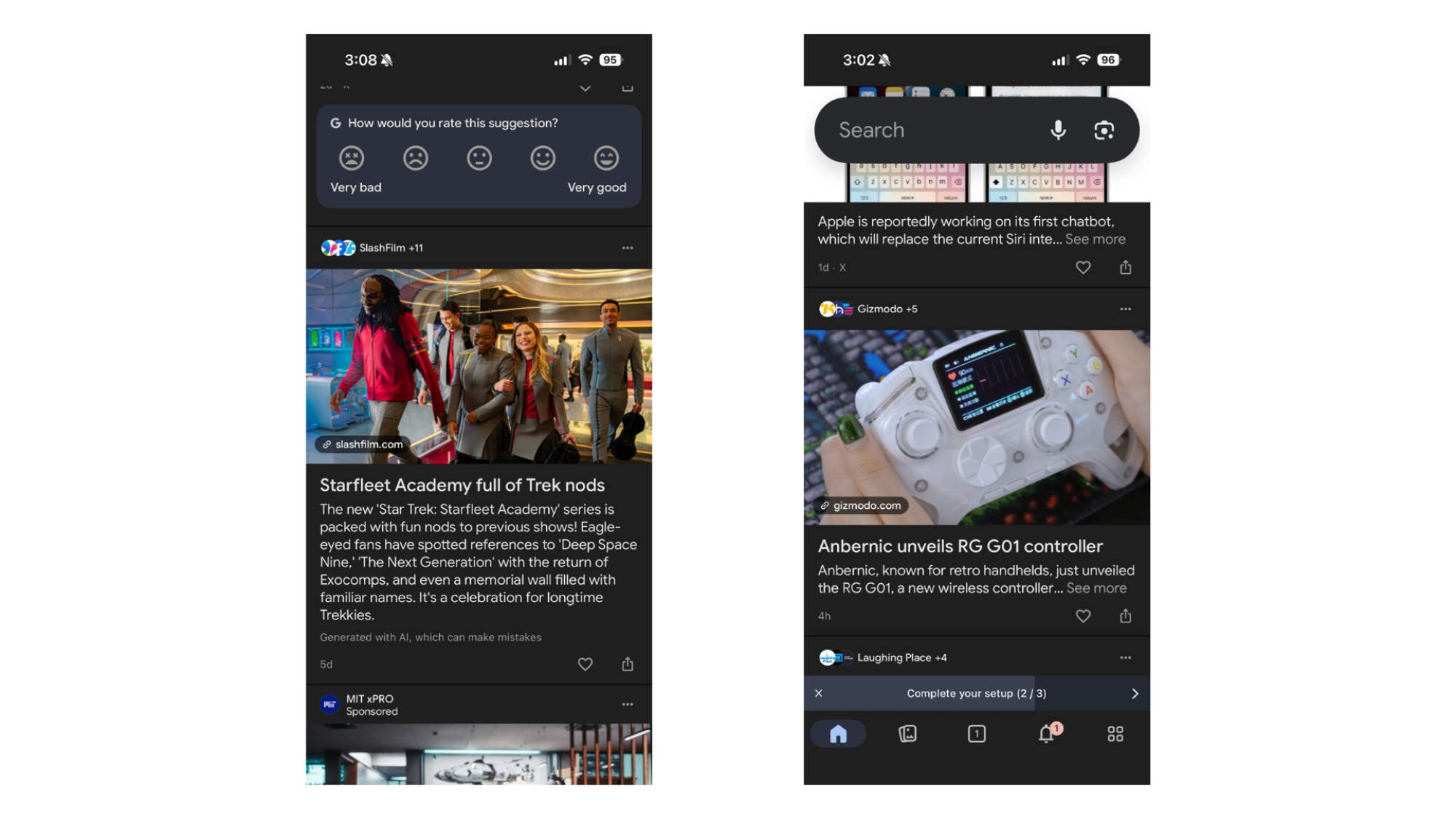

Last month, Google told online publishers that it had started testing AI-generated headlines in Google Discover, replacing stories’ carefully handcrafted titles with truncated alternatives made up by Gemini. Some journalists were predictably unhappy, but now, the company says that the AI headlines are no longer an experiment—they’re a “feature.”

Back when the testing began, the results ranged from poorly worded to straight up misinformation. For instance, one AI-generated headline promised “Steam Machine price revealed,” when the original article made no such claim. Another said “BG3 players exploit children,” which sounds serious, until you click through to the article and see that it’s about a clever way to recruit invincible party members in Baldur’s Gate 3 (which, to be fair, does involve turning child NPCs into sheep at one point).

At the time, Google said that the test was a “small UI experiment for a subset of Discover users,” and simply rearranged how users saw AI previews, which were introduced in October of last year and feature short AI summaries of articles, including an occasional AI headline. However, while that AI headline was previously hidden below the original, authored headline, the test put it up top, while getting rid of the authored headline entirely.

For a while, it seemed like Google might have been willing to back away from the AI headlines, but now the company says it’s doubling down. In a statement to The Verge, Google said that its AI headlines are no longer in testing, but are now a full-fledged feature. The company didn’t elaborate on why, but did say that the update “performs well for user satisfaction.”

When 9to5Google then reached out for more detail, the publication was told, “The overview headline reflects information across a range of sites, and is not a rewrite of an individual article headline.”

Well, that hasn’t quite been the case for me: When I first wrote about this “experiment,” I actually had yet to run into one of the AI headlines. But perusing my Google Discover feed today (to see yours, swipe right from the home screen on an Android phone, or scroll down in the Google app), I’ve finally seen some firsthand. To Google’s credit, these AI previews do seem to synthesize several sources as claimed; you can see them above the linked story. However, they still call out one article in particular, linking to it and using its header photo. That can easily lead users to think the AI-generated headline was written by the linked publication.

That can have consequences for the publication or writer if the AI gets something wrong, which a disclaimer at the bottom of these AI previews admits can happen. For instance, The Verge said it saw an AI Discover headline on a story from Lifehacker’s sister site PCMag that said, “US reverses foreign drone ban,” even though the linked story goes out of its way to say headlines that claim this are “misleading.”

The AI headlines I’ve seen personally haven’t been quite that bad, but as someone with a more than decade-long career in journalism, I do question their helpfulness. For instance, “Starfleet Academy full of Trek Nods” is much less informative than the original, “One of TNG’s Strangest Species Is Getting a Second Life In Modern Star Trek.” I guess “Star Trek show has Star Trek things” is somehow clickier or more useful to the reader than just saying what the specific Star Trek thing is?

Another example: “Anbernic unveils RG G01 Controller.” I hope you know what those letters and numbers mean, because this AI headline completely buries the context in the original headline, “Anbernic’s New Controller Has a Screen and Built-In Heartbeat Sensor, for Some Reason.”

I guess this is a future that I’ll have to get used to though. That I’m starting to see these headlines myself, despite not being part of the initial experiment, does suggest we can expect them to stick around, and to roll out to more people. If you see something that seems questionable while scrolling Google Discover, the feature has probably rolled out to you now too.

To check whether that suspicious headline was written by a human or not, try clicking the “See more” button at the bottom of the article’s description and looking for a “Generated with AI” disclaimer.

On the plus side, only about half of the articles in my Google Discover are currently using AI headlines, so not every piece of “content” is being affected. But for journalists, the move still comes at a tough time: According to Reuters, Google traffic from organic search was down by 38% on test sites in the United States between November 2024 and November 2025, and while Google Discover isn’t Search, editors write headlines the way they do for a reason. Using a robot to overwrite those decisions probably isn’t the best way to tackle eroding trust in media.

I’ve reached out to Google for comment on its AI headlines and will provide an update when I hear back.

An anonymous reader shares a report: Shares of Japanese toilet maker Toto gained the most in five years after booming memory demand excited expectations of growth in its little-known chipmaking materials operations. The stock surged as much as 11%, its steepest rise since February 2021, after Goldman Sachs analysts said Toto’s electrostatic chucks used in NAND chipmaking will likely benefit from an AI infrastructure buildout that’s tightening supplies of both high-end and commodity memory.

[…] Known for its heated toilet seats, the maker of washlets has for decades been part of the semiconductor and display supply chain via its advanced ceramic parts and films. Its electrostatic chucks — which it began mass producing in 1988 — are used to hold silicon wafers in place during chipmaking while helping to control temperature and contamination, according to the company. The company’s new domain business accounted for 42% of its total operating income in the fiscal year ended March 2025, Bloomberg-compiled data show.

Read more of this story at Slashdot.

Not every Xbox-published game will come to PS5 right away, but also maybe most of them will, probably, I think

The post Xbox Exec Struggles To Explain Why Fable Is Coming To PS5 Day One, But Not Forza Horizon 6 appeared first on Kotaku.

Meta is being sued by Solos, a rival smart glasses maker, for infringing on its patents, Bloomberg reports. Solos is seeking “multiple billions of dollars” in damages and an injunction that could prevent Meta from selling its Ray-Ban Meta smart glasses as part of the lawsuit.

Solos claims that Meta’s Ray-Ban Meta Wayfarer Gen 1 smart glasses violate multiple patents covering “core technologies in the field of smart eyewear.” While less well known than Meta and its partner EssilorLuxottica, Solos sells multiple pairs of glasses with similar features to what Meta offers. For example, the company’s AirGo A5 glasses let you control music playback and automatically translate speech into different languages, and they integrate ChatGPT for answering questions and searching the web.

Beyond the product similarities, Solos claims that Meta was able to copy its patents because Oakley (an EssilorLuxottica subsidiary) and Meta employees had insights into the company’s products and road map. Solos says that in 2015, Oakley employees were introduced to the company’s smart glasses tech, and were even given a pair of Solos glasses for testing in 2019. Solos also says that an MIT Sloan Fellow who researched the company’s products and later became a product manager at Meta brought knowledge of the company to her role. According to the logic of Solos’ lawsuit, by the time Meta and EssilorLuxottica were selling their own smart glasses, “both sides had accumulated years of direct, senior-level and increasingly detailed knowledge of Solos’ smart glasses technology.”

Engadget has asked both Meta and EssilorLuxottica to comment on Solos’ claims. We’ll update this article if we hear back.

While fewer people own Ray-Ban Meta smart glasses than use Instagram, Meta considers the wearable one of its few hardware success stories. The company is so convinced it can make smart glasses happen that it recently restructured its Reality Labs division to focus on AI hardware like smart glasses and hopefully build on its success.

This article originally appeared on Engadget at https://www.engadget.com/wearables/a-rival-smart-glasses-company-is-suing-meta-over-its-ray-ban-products-205000997.html?src=rss

Intel reported its earnings for the fourth quarter of 2025 yesterday, and the news both for the quarter and for the year was mixed: year-over-year revenue was down nearly imperceptibly, from $53.1 billion to $52.9 billion, while revenue for the quarter was down about four percent, from $14.3 billion last year to $13.7 billion this year. (That number was, nevertheless, on the high end of Intel’s guidance for the quarter, which ranged from $12.8 to $13.8 billion.)
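For readers who want to check the percentages behind those revenue figures, here is a minimal sketch of the arithmetic in Python. The helper name `pct_change` is hypothetical and used only for illustration; the dollar amounts are the ones quoted above, in billions.

```python
def pct_change(old: float, new: float) -> float:
    """Return the percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100.0


# Revenue in billions of US dollars, as quoted in the article.
annual = pct_change(53.1, 52.9)     # full-year revenue, prior year vs. 2025
quarterly = pct_change(14.3, 13.7)  # fourth-quarter revenue, prior year vs. 2025

print(f"Full year: {annual:.2f}%")
print(f"Fourth quarter: {quarterly:.2f}%")
```

Under these figures, the full-year decline works out to roughly 0.4 percent and the fourth-quarter decline to roughly 4.2 percent, consistent with "nearly imperceptibly" and "about four percent."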

Diving deeper into the numbers makes it clear exactly where money is being made and lost: Intel’s data center and AI products were up 9 percent for the quarter and 5 percent for the year, while its client computing group (which sells Core processors, Arc GPUs, and other consumer products) was down 7 percent for the quarter and 3 percent for the year.

That knowledge makes it slightly easier to understand the bind that company executives talked about on Intel’s earnings call (as transcribed by Investing.com). In short, Intel is having trouble making (and buying) enough chips to meet demand, and it makes more sense to allocate the chips it can make to the divisions that are actually making money—which means that we could see shortages of or higher prices for consumer processors, just as Intel is gearing up to launch the promising Core Ultra Series 3 processors (codenamed Panther Lake).

Global hiring remains 20% below pre-pandemic levels and job switching has hit a 10-year low, according to a LinkedIn report, and new university graduates are bearing the brunt of a labor market that increasingly favors experienced candidates over fresh talent.

In the UK, the Institute of Student Employers found that graduate hiring fell 8% in the last academic year and employers now receive 140 applications for each vacancy, up from 86 per vacancy in 2022-23. US data from the New York Federal Reserve shows unemployment among recent college graduates aged 22-27 stands at 5.8% versus 4.1% for all workers.

Recruiter Reed had 180,000 graduate job postings in 2021 but only 55,000 in 2024. In a survey of Reed clients last year, 15% said they had reduced hiring because of AI. London mayor Sadiq Khan said the capital will be “at the sharpest edge” of AI-driven changes and that entry-level jobs will be first to go.

Read more of this story at Slashdot.

GNU Guix 1.5 is out after three years, delivering Plasma 6.5, GNOME 46 on Wayland, rootless package management, and more than 12,500 new packages.