At Flash Memory Summit this week (online for the first time), Western Digital is showing off three new SSD products and has outlined the company’s areas of strategic focus in a keynote presentation.

First up, Western Digital is commercializing NVMe Zoned Namespaces (ZNS) technology with the new Ultrastar DC ZN540 datacenter SSD. We covered ZNS in depth earlier this year after the extension to the NVMe standard was ratified. Western Digital has been one of the strongest proponents of ZNS, so it’s no surprise that they’re one of the first to launch a zoned SSD product.

The ZN540 is based on a hardware platform similar to their existing traditional enterprise/datacenter SSDs like the Ultrastar DC SN640 and SN840. The ZN540 is a 2.5″ U.2 SSD using 3D TLC NAND and a Western Digital-designed SSD controller, and offers capacities up to 8TB with dual-port PCIe 3.0 support. The most significant hardware difference is a big decrease in the amount of RAM the SSD needs compared to the usual 1GB per 1TB ratio; Western Digital isn’t ready to disclose exactly how much RAM they are shipping in the ZN540, but the reduction should noticeably lower the drive’s bill of materials (BOM) cost.

The new ZN540 also renders the Ultrastar DC SN340 mostly obsolete. The SN340 was designed to get some of the benefits of a zoned SSD by using a Flash Translation Layer that works with 32kB blocks instead of the usual 4kB. That enables a DRAM reduction by a factor of eight, at the expense of much lower random write performance, especially for small block sizes. ZNS SSDs simply prohibit random writes in the first place rather than silently deliver horrible performance with extremely high write amplification, and the ZNS interface allows software to be properly informed of these limitations and provides tools to cope with them.
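As a rough illustration of where the factor of eight comes from, the sketch below estimates the size of a flat logical-to-physical mapping table at 4kB and 32kB granularity. The 4-byte entry size is our assumption, chosen because it reproduces the usual 1GB per 1TB ratio; Western Digital has not described the SN340's actual table layout. The `small_write_amplification` helper is likewise a simplified, hypothetical model that only counts read-modify-write of whole mapping units and ignores garbage collection.

```python
# Back-of-the-envelope FTL mapping table estimate.
# ASSUMPTION: a flat table with one 4-byte entry per mapped block.
# Real controllers may use different entry sizes or compressed layouts.

KIB = 1024
GIB = 1024 ** 3
TIB = 1024 ** 4


def mapping_table_bytes(capacity_bytes: int, block_bytes: int, entry_bytes: int = 4) -> int:
    """DRAM needed for one mapping entry per block of the given size."""
    entries = capacity_bytes // block_bytes
    return entries * entry_bytes


def small_write_amplification(write_bytes: int, block_bytes: int) -> float:
    """Simplified model: every touched mapping unit is rewritten in full.

    Assumes the write is aligned to the mapping unit and ignores
    garbage collection, so real drives will usually fare worse.
    """
    units_touched = -(-write_bytes // block_bytes)  # ceiling division
    return units_touched * block_bytes / write_bytes


fine = mapping_table_bytes(1 * TIB, 4 * KIB)
coarse = mapping_table_bytes(1 * TIB, 32 * KIB)

print(f"4kB mapping:  {fine / GIB:.3f} GiB of DRAM per TiB of flash")
print(f"32kB mapping: {coarse / GIB:.3f} GiB of DRAM per TiB of flash")
print(f"DRAM reduction: {fine // coarse}x")
print(f"4kB random write on 32kB mapping: {small_write_amplification(4 * KIB, 32 * KIB):.0f}x amplification")
```

Under these assumptions the same coarse granularity that saves DRAM also makes each isolated 4kB write cost a full 32kB of flash writes, which is the trade-off described above.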

The Ultrastar DC ZN540 is currently sampling to major customers. Software support for ZNS SSDs is fairly mature at the OS level in the Linux kernel and related tooling. Application-level support for zoned storage is more of a work in progress, but Western Digital and others have been hard at work. Zoned storage backends already exist for some well-known applications like the Ceph cluster filesystem and RocksDB key-value database.
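For application developers wondering what adapting to zoned storage means in practice, here is a minimal toy model of a single zone. It is a sketch of the general idea only: the `Zone` class, its method names, and the block-list representation are all hypothetical and are not the NVMe ZNS command set or any Linux API. What it does capture is the core rule that writes must land at the zone's write pointer, and that space is reclaimed by resetting the whole zone.

```python
# Toy model of one zone on a zoned SSD.
# ASSUMPTION: Zone, ZoneWriteError and the method names are illustrative
# inventions, not part of any real driver or library.


class ZoneWriteError(Exception):
    """Raised when a write violates the zone's sequential-write rule."""


class Zone:
    def __init__(self, size_blocks: int):
        self.size_blocks = size_blocks
        self.write_pointer = 0  # next block that may be written
        self.blocks = [None] * size_blocks

    def write(self, offset: int, data: list) -> None:
        """Accept a write only if it starts exactly at the write pointer."""
        if offset != self.write_pointer:
            raise ZoneWriteError(
                f"write at block {offset} rejected; write pointer is at {self.write_pointer}"
            )
        if offset + len(data) > self.size_blocks:
            raise ZoneWriteError("write would run past the end of the zone")
        self.blocks[offset:offset + len(data)] = data
        self.write_pointer += len(data)

    def append(self, data: list) -> int:
        """Write at the current write pointer and report where the data landed."""
        offset = self.write_pointer
        self.write(offset, data)
        return offset

    def reset(self) -> None:
        """Discard the zone's contents and rewind the write pointer."""
        self.blocks = [None] * self.size_blocks
        self.write_pointer = 0


zone = Zone(size_blocks=8)
zone.write(0, ["a", "b"])      # sequential write: accepted
print(zone.append(["c"]))      # lands at block 2
try:
    zone.write(5, ["x"])       # random write: refused instead of silently slowed
except ZoneWriteError as err:
    print("rejected:", err)
zone.reset()                   # the only way to overwrite is to reset the zone
```

Log-structured software such as RocksDB maps naturally onto this pattern, which is part of why it was among the first applications to get a zoned backend.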

Next up, Western Digital is introducing their first industrial-grade NVMe SSD. Western Digital’s industrial and automotive lineup currently consists of eMMC and UFS modules and SD/microSD cards. The new Western Digital IX SN530 NVMe SSD is an industrial/automotive grade version of the PC SN530, which is itself the OEM counterpart to the retail WD Blue SN550. These are DRAMless NVMe SSDs, albeit some of the best-performing DRAMless SSDs on the market. The IX SN530 will be available with capacities of 256GB to 2TB of TLC NAND, or operating as SLC NAND with capacities of 85GB to 340GB and drastically higher write endurance. One of the main target markets for the IX SN530 will be automotive applications, where the push toward self-driving cars is increasing storage capacity and performance requirements.

The TLC-based variants of the IX SN530 are sampling now, and the SLC versions will start sampling in January.

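Western Digital IX SN530 SSD Specifications

| Capacity | 85 GB | 170 GB | 340 GB | 256 GB | 512 GB | 1 TB | 2 TB |
|---|---|---|---|---|---|---|---|
| Form Factor | M.2 2280 or M.2 2230 | M.2 2280 or M.2 2230 | M.2 2280 or M.2 2230 | M.2 2280 or M.2 2230 | M.2 2280 or M.2 2230 | M.2 2280 or M.2 2230 | M.2 2280 |
| Controller | WD in-house | WD in-house | WD in-house | WD in-house | WD in-house | WD in-house | WD in-house |
| DRAM | None | None | None | None | None | None | None |
| NAND Flash | Western Digital 96L SLC | Western Digital 96L SLC | Western Digital 96L SLC | Western Digital 96L TLC | Western Digital 96L TLC | Western Digital 96L TLC | Western Digital 96L TLC |
| Sequential Read | 2400 MB/s | 2400 MB/s | 2400 MB/s | 2400 MB/s | 2400 MB/s | 2400 MB/s | 2500 MB/s |
| Burst Sequential Write | 900 MB/s | 1750 MB/s | 1950 MB/s | 900 MB/s | 1750 MB/s | 1950 MB/s | 1800 MB/s |
| Sustained Sequential Write | 900 MB/s | 1750 MB/s | 1950 MB/s | 140 MB/s | 280 MB/s | 540 MB/s | 525 MB/s |
| Random Read IOPS | 160k | 310k | 410k | 160k | 310k | 410k | 370k |
| Random Write IOPS | 180k | 330k | 350k | 85k | 150k | 350k | 300k |
| Projected Write Endurance | 6000 TB | 12000 TB | 24000 TB | 650 TB | 1300 TB | 2600 TB | 5200 TB |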
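To put the SLC and TLC figures side by side, the short sketch below works through the capacity and endurance numbers from the specifications above. Pairing each SLC model with the TLC model three times its size is our assumption, based on TLC storing three bits per cell versus one for SLC; Western Digital hasn't stated that the models share hardware. Treating 1 TB as 1024 GB and the `full_drive_writes` helper are likewise simplifications for illustration.

```python
# Compare the IX SN530 SLC and TLC variants using the listed specifications.
# ASSUMPTION: each SLC model is paired with the TLC model of triple capacity.

tlc_capacity_gb = [256, 512, 1024]   # 1 TB treated as 1024 GB for this estimate
slc_capacity_gb = [85, 170, 340]
tlc_endurance_tb = [650, 1300, 2600]
slc_endurance_tb = [6000, 12000, 24000]


def full_drive_writes(endurance_tb: float, capacity_gb: float) -> float:
    """How many times the whole drive can be filled before the rating is used up."""
    return endurance_tb * 1000 / capacity_gb


for tlc_gb, slc_gb, tlc_tb, slc_tb in zip(
    tlc_capacity_gb, slc_capacity_gb, tlc_endurance_tb, slc_endurance_tb
):
    print(
        f"{tlc_gb} GB TLC / 3 = {tlc_gb / 3:.1f} GB (listed SLC: {slc_gb} GB); "
        f"endurance {slc_tb / tlc_tb:.1f}x higher in total, "
        f"{full_drive_writes(slc_tb, slc_gb) / full_drive_writes(tlc_tb, tlc_gb):.0f}x higher per drive fill"
    )
```

The total bytes written rating goes up by a bit more than 9x, but because the SLC drives are a third of the size, the number of full drive writes they can absorb rises far more steeply.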

Since the IX SN530 will be available in capacities up to 2TB, Western Digital is also adding a 2TB model to the related WD Blue SN550 consumer NVMe SSD, extending their entry-level NVMe product line now that such high capacities are no longer just for high-end SSDs. The new WD Blue SN550 2TB model is already in production and working its way through the supply chain, so it should be available for purchase soon.

WD Blue SN550 SSD Specifications

| Capacity | 250 GB | 500 GB | 1 TB | 2 TB |
|---|---|---|---|---|
| Form Factor | M.2 2280 PCIe 3.0 x4 | M.2 2280 PCIe 3.0 x4 | M.2 2280 PCIe 3.0 x4 | M.2 2280 PCIe 3.0 x4 |
| Controller | WD in-house | WD in-house | WD in-house | WD in-house |
| DRAM | None | None | None | None |
| NAND Flash | Western Digital/SanDisk 96L 3D TLC | Western Digital/SanDisk 96L 3D TLC | Western Digital/SanDisk 96L 3D TLC | Western Digital/SanDisk 96L 3D TLC |
| Sequential Read | 2400 MB/s | 2400 MB/s | 2400 MB/s | 2600 MB/s |
| Sequential Write | 950 MB/s | 1750 MB/s | 1950 MB/s | 1800 MB/s |
| Random Read | 170k IOPS | 300k IOPS | 410k IOPS | 360k IOPS |
| Random Write | 135k IOPS | 240k IOPS | 405k IOPS | 384k IOPS |
| Warranty | 5 years | 5 years | 5 years | 5 years |
| Write Endurance | 150 TB (0.3 DWPD) | 300 TB (0.3 DWPD) | 600 TB (0.3 DWPD) | 900 TB (0.25 DWPD) |
| MSRP | $44.99 | $53.99 | $94.99 | $249.99 |

Several performance metrics for the 2TB SN550 are slower than the 1TB model and the write endurance rating didn’t scale with capacity, so the 2TB WD Blue SN550 isn’t a groundbreaking product. The initial MSRP is quite a bit higher than a DRAMless NVMe SSD should be going for, even accounting for the fact that WD tends to have the best-performing DRAMless SSDs on the market.
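The endurance and pricing points are easy to check from the specifications. The sketch below derives drive writes per day (DWPD) from the rated endurance over the 5-year warranty and works out launch price per gigabyte. The `dwpd` helper and the use of decimal units (1 TB = 1000 GB) with 365-day years are our assumptions; Western Digital's own rounding may differ slightly.

```python
# Derive DWPD and price per GB for the WD Blue SN550 lineup.
# ASSUMPTION: decimal units and 365-day years; figures come from the listed specs.


def dwpd(endurance_tb: float, capacity_tb: float, warranty_years: int = 5) -> float:
    """Drive writes per day implied by a total-bytes-written rating."""
    return endurance_tb / (capacity_tb * warranty_years * 365)


models = [
    # (label, capacity in TB, rated endurance in TB, MSRP in USD)
    ("250 GB", 0.25, 150, 44.99),
    ("500 GB", 0.5, 300, 53.99),
    ("1 TB", 1.0, 600, 94.99),
    ("2 TB", 2.0, 900, 249.99),
]

for label, capacity_tb, endurance_tb, msrp in models:
    price_per_gb = msrp / (capacity_tb * 1000)
    print(f"{label}: {dwpd(endurance_tb, capacity_tb):.2f} DWPD, ${price_per_gb:.3f}/GB")
```

By this arithmetic the 2TB model is the only one whose DWPD drops, and it carries a noticeably higher price per gigabyte than the 1TB model at these MSRPs.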

Western Digital also used their keynote presentation to give a rundown on various areas the company is focusing on as part of their strategy to be more than just a NAND and drive manufacturer.

And in fact, we didn’t get to hear much at all about their NAND flash memory itself, despite the name of the conference. Western Digital and Kioxia announced their 112-layer fifth-generation BiCS 3D NAND in January 2020, but the new WD drives announced today are still using 96-layer TLC. We did catch a few potential references to future generations of 3D NAND: they have above 100 layers in production now and will reach 200 layers “pretty soon”, they’ll be moving the peripheral circuits to be above and below the memory rather than alongside (following in the footsteps of Micron, Intel and Hynix), 2Tbit dies will be coming at some point, and I/O speeds will go from 400MT/s to 2GT/s over four generations. Since those were all passing mentions, we’re hesitant to take any of it as a solid indication of what to expect from their sixth-generation 3D NAND, and we certainly don’t have any indication of when that will be going into production or hitting the market.

Aside from the Zoned Storage work we’ve already covered, Western Digital mentioned several areas of ongoing development. They are a big proponent of the RISC-V CPU architecture and have open-sourced some RISC-V core designs already, but we don’t have a clear picture of which Western Digital products, if any, have already started using RISC-V CPU cores. NVMe over Fabrics is one of the most important datacenter storage technologies, and Western Digital is participating through their OpenFlex storage systems and the RapidFlex NVMeoF controller technology they acquired last year from Kazan Networks.

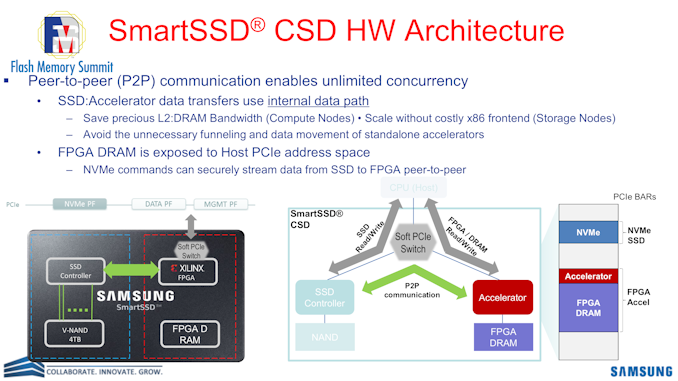

Western Digital is talking about computational storage, but only in the broadest terms—reiterating all the tantalizing possibilities, but not yet announcing any specific hardware development plans. In the area of security, Western Digital highlighted their membership in the OpenTitan project for developing open-source hardware root of trust technology. This is driven by the industry consensus that features like Secure Boot aren’t just useful for protecting the boot process of your operating system, but for verifying all the intelligent components in a system that handle sensitive data.

Source: AnandTech – Western Digital at FMS 2020: Zoned SSDs, Automotive NVMe And More