If you’re looking to do some upgrades, here’s an easy path to doing so.

The post AMD Puts Its Ryzen 7 7700X on Clearance at Nearly 40% Off, the Lowest Price for 8-Core and 16-Thread Unlocked Desktop CPU appeared first on Kotaku.

There were no idle hands at Sharpa’s CES booth. The company’s humanoid may have been the busiest bot at the show, autonomously playing ping-pong, dealing blackjack games and taking selfies with passersby. On display wasn’t just the robot and its smarts, but also SharpaWave, a highly dexterous 1:1 scale human hand.

The hand has 22 active degrees of freedom, according to the company, allowing for precise and intricate finger movements. It mirrored my gestures as I wiggled my hand in front of its camera, getting everything mostly right, which was honestly pretty cool. Each fingertip contains a minicamera and over 1,000 tactile pixels so it can pick up objects with the appropriate amount of delicacy for the task at hand, like plucking a playing card from a deck and placing it gently on the table.

Sharpa’s robot was a pretty good ping-pong player, too. We’ve seen ping-pong robots plenty of times before, but these typically come in the form of a disembodied robotic arm, not one that’s humanoid from the waist up. The company’s products are meant to be general-purpose, with the ability to handle a wide range of jobs, and its humanoid wore a lot of hats at CES to drive the point home.

This article originally appeared on Engadget at https://www.engadget.com/ai/sharpas-ping-pong-playing-blackjack-dealing-humanoid-is-working-overtime-at-ces-2026-150000488.html?src=rss

Abstract of a paper on NBER: We construct an international panel data set comprising three distinct yet plausible measures of government indebtedness: the debt-to-GDP, the interest-to-GDP, and the debt-to-equity ratios. Our analysis reveals that these measures yield differing conclusions about recent trends in government indebtedness. While the debt-to-GDP ratio has reached historically high levels, the other two indicators show either no clear trend or a declining pattern over recent decades. We argue for the development of stronger theoretical foundations for the measures employed in the literature, suggesting that, without such grounding, assertions about debt (un)sustainability may be premature.

Read more of this story at Slashdot.

Revealed in its latest prototype at CES 2026, the IXI autofocusing glasses specifically target

The KDE Project released today KDE Gear 25.12.1 as the first maintenance update to the latest KDE Gear 25.12 series of this collection of open-source apps for the KDE Plasma desktop environment and other platforms.

The writer and comedian Megan Koester got her first writing job, reviewing Internet pornography, from a Craigslist ad she responded to more than 15 years ago. Several years after that, she used the listings website to find the rent-controlled apartment where she still lives today. When she wanted to buy property, she scrolled through Craigslist and found a parcel of land in the Mojave Desert. She built a dwelling on it (never mind that she’d later discover it was unpermitted) and furnished it entirely with finds from Craigslist’s free section, right down to the laminate flooring, which had previously been used by a production company.

“There’s so many elements of my life that are suffused with Craigslist,” says Koester, 42, whose Instagram account is dedicated, at least in part, to cataloging screenshots of what she has dubbed “harrowing images” from the site’s free section; on the day we speak, she’s wearing a cashmere sweater that cost her nothing, besides the faith it took to respond to an ad with no pictures. “I’m ride or die.”

Koester is one of untold numbers of Craigslist aficionados, many of them in their thirties and forties, who not only still use the old-school classifieds site but also consider it an essential, if anachronistic, part of their everyday lives. It’s a place where anonymity is still possible, where money doesn’t have to be exchanged, and where strangers can make meaningful connections: for romantic pursuits, straightforward transactions, and even to cast unusual creative projects, including experimental TV shows like The Rehearsal on HBO and Amazon Freevee’s Jury Duty. Unlike flashier online marketplaces such as Depop (and its parent company, Etsy) or Facebook Marketplace, Craigslist doesn’t use algorithms to track users’ moves and predict what they want to see next. It doesn’t offer public profiles, rating systems, or “likes” and “shares” to dole out like social currency; as a result, Craigslist effectively disincentivizes clout-chasing and virality-seeking, behaviors that are often rewarded on platforms like TikTok, Instagram, and X. It’s a utopian vision of a much earlier, far more earnest Internet.

Enjoy immersive, smooth gameplay for all your favorite titles.

The post Samsung Goes All-In on Odyssey Gaming Monitors, 32″ G50D Series Hits an All-Time Low at 42% Off appeared first on Kotaku.

The My Mario toys are being marketed toward parents with children too young to pick up a Switch.

The post Nintendo Markets Mario Toys With Mom Whose Thumb Is A Medical Miracle Or AI Slop appeared first on Kotaku.

You can pinpoint the exact minute of the high-water mark for tech-based enthusiasm: January 9, 2007, 9:41 AM PST, the moment Apple CEO Steve Jobs introduced the iPhone to the world.

Cell phones weren’t new, and neither were cell phones with touch screens, but this one was different: so high-tech it seemed like it couldn’t be real, but so perfectly designed, it felt inevitable. And people were hyped. Not just tech nerds: normal people. The crowd at the 2007 Macworld Conference & Expo broke into rapturous applause when Jobs showed off the iPhone’s multi-touch (an ovation for a software feature!) because it seemed like Jobs was touching a better future.

The iPhone, people said, was like something out of Star Trek. But unlike communicators or tricorders, it was obtainable (if you had $500) evidence of a future where technology would finally free us from the drudgery of our lives so we could boldly go wherever. It doesn’t matter where.

Steve Jobs mentioned Star Trek as an inspiration for the iPhone all the time; apparently the show is quite popular among tech people. Gene Roddenberry created Star Trek, and thus was the spiritual father of the iPhone. He spent the 1960s lounging poolside in Los Angeles, dreaming of a post-scarcity tomorrow where the wise, brave men of the Federation kept the Romulans at bay and there were hot alien chicks on every Class-M planet. At the same time, the future’s real prophet, Philip K. Dick, was huddled in a dank Oakland apartment, a stone’s throw from Silicon Valley, popping amphetamines like breath mints and feverishly typing dystopian visions of corporate surveillance states and nightmare techno-realities into his Hermes Rocket typewriter.

Roddenberry’s Federation promised technology would help humanity evolve beyond its baser instincts. Dick saw technology amplifying our worst impulses.

So what happened? How did we go from a Roddenberry future where each new product release seemed like another step closer to collective utopia to our Dick-esque present, where the first question we ask of any new technology is “How is this going to hurt me?”

Visionary heads of start-ups like to blather about “paradigm shifts” and “world-changing technology,” but people don’t get excited for tech products that are going to, say, cure cancer. Most of life (for pampered Westerners, anyway) is dealing with routine annoyances, and tech promises a way out. Remember printing MapQuest directions before leaving the house? It was a pain in the ass. People were excited for the iPhone because it solved the MapQuest problem and so many other small, intimate problems, like “I can’t instantly send a photo to my friend” or “I get bored while I’m riding the bus.” Products that do this flourish, and ones that don’t are discarded like a Juicero.

It’s hard to overstate how great the iPhone was back in 2007 in terms of solving annoyances. Buying one meant you no longer had to carry a notepad, camera, laptop, MP3 player, GPS device, flashlight, or alarm clock. It was all crammed into a single black mirror. But speaking of Black Mirror …

“We’re in an era of incremental updates, not industry-defining breakthroughs,” says Heather Sliwinski, founder of tech public relations firm Changemaker Communications. “Today’s new iPhone offers a slightly better camera, marginally different dimensions or AI features that no one is asking for. Those aren’t updates that go viral or justify consumers shelling out thousands of dollars for a device that’s only slightly better than what they already own.”

In economics, “marginal utility” is the additional satisfaction or benefit a consumer gets from consuming one more unit of a good or service. The marginal utility leap between a flip phone and the first iPhone was huge. But economics teaches us that marginal utility diminishes with each additional unit consumed. Each new iPhone release provided progressively less additional satisfaction compared to what users already had. Slightly faster chips, slightly better cameras, USB-C instead of Lightning, titanium instead of aluminum—who cares?

If we were merely bored with tech products, it would be one thing. But increasingly, the devices we bought to make our lives easier or more enjoyable are making them harder and worse.

“When you buy a new tech product today, you’re not just buying one physical product. You’re committing to downloading another app, creating another account and managing another subscription,” Sliwinski says. “Consumers are exhausted by the endless management that comes with each new device.”

In economics, you’d call that “diseconomies of scale”: what happens when a business becomes so large its bureaucracy costs outweigh efficiency gains. In personal terms, it’s when the time and energy it takes to sync, charge, and coordinate your “time-saving” device makes you the middle manager of your own life.

Then there’s the kipple. In Do Androids Dream of Electric Sheep?, Philip K. Dick defines “kipple” as useless objects that accumulate: “junk mail or match folders after you use the last match or gum wrappers or yesterday’s homeopape.” That drawer full of orphaned power cords and connectors, your broken earbuds, the extra game controllers, the Roku, Chromecast, and old Fitbit are physical kipple, but the virtual kipple is worse. “Personally, I have at least four different apps that I need to download and manage just to live in my apartment complex: smart lock system, community laundry, rent payments, maintenance requests,” Sliwinski says.

According to Dick, kipple doesn’t just accumulate; it metastasizes, growing constantly until the Star Trek lifestyle you envisioned becomes a Dick-esque swamp of dependencies, and the future goes from being a place you want to live to a place where you’re trapped.

The door refused to open. It said, “Five cents, please.” He searched his pockets. No more coins; nothing. “I’ll pay you tomorrow,” he told the door. ― Philip K. Dick, Ubik

“Corporations have spent years trying to manufacture excitement around relatively low-importance features instead of genuinely useful developments, and consumers have learned to recognize that pattern,” says Kaveh Vahdat, founder of RiseOpp, a fractional CMO and SEO firm based in San Francisco.

Nowhere does this consumer indifference seem greater than with AI. “Consumers are testing Sora or testing Grok and all of that, but there’s really not been a single use case or product for AI that I think consumers are excited about,” says Sliwinski.

This will not stop tech companies. Even without excitement, artificial intelligence is everywhere in tech, from toothbrushes to baby strollers. (I think PKD would have found the AI stroller darkly funny: it’s self-driving, but it won’t work if you put a baby in it.) “There’s a lot of buzz around AI but we’re missing the ‘so what?’”

Beyond “so what?”, consumers have started asking “How will this hurt me?” “Is AI going to encourage my child to take their own life? Is it going to steal my job? Is it destroying everything pure about humanity?”

Tech companies don’t seem to be scaling back on AI or doing an effective job of explaining its benefits. If the recent past is any indicator, when they can’t make our lives easier, they’ll try to imprison us instead, employing psychologists, neuroscientists, and “growth hackers” specifically to make products harder to put down. The innovation isn’t in new products that make life easier, but in encouraging addiction through variable reward schedules, social validation metrics, parasocial relationships, and other dark arts, until eventually we end up like the half-lifers in Ubik: husks in cryopods, living in a manufactured reality where we still have to pay for the doors to open. That’s the PKD take, anyway.

“Maybe 10 to 20 years down the road we will have another huge step change like the iPhone that can condense all these different devices that we’re using or apps that we’re using, but the tech isn’t there yet,” Sliwinski says.

In Star Trek, humanity doesn’t abandon scarcity. Technology eventually makes scarcity indefensible, and that’s only possible after a planet-wide war. From that Roddenberry-esque perspective, enshittification is what happens when old economic systems try to survive in a world where technology keeps eroding their justification, and each tiny “I don’t care” iteration of tech products is a small step closer to Star Trek’s promised land of holodecks, abundance, and hot aliens.

Meta released an open source language learning app for Quest 3 that combines mixed reality passthrough and AI-powered object recognition.

Called Spatial Lingo: Language Practice, the app not only aims to help users practice their English or Spanish using objects in their own room, but also to give developers a framework to make their own apps.

Meta calls Spatial Lingo a “cutting-edge showcase app” meant to transform your environment into an interactive classroom by overlaying translated words onto real-world objects, a capability that relies on AI and Quest’s Passthrough Camera API.

The app’s AI not only focuses on object detection for vocab building, but also features a 3D companion to guide you, which Meta says will offer encouragement and feedback as you practice speaking.

It also listens to your voice, evaluates your responses, and helps you master pronunciation in real time, Meta says.

Spatial Lingo is also open source, letting developers use its code, contribute, or build their own new Unity-based experiences.

Developers looking to use Spatial Lingo can find it over on GitHub. You can also find it for free on the Horizon Store for Quest 3 and 3S.

I’m usually pretty wary of most language learning apps since many of them look to maximize engagement, or otherwise appeal to what a language learner thinks they need rather than what the answer actually is: hours and hours of dedicated multimedia consumption combined with speaking practice with native speakers. I say this as a native English speaker who speaks both German and Italian.

Spatial Lingo isn’t promising fluency, though; it’s primarily a demo that comes with some pretty cool building blocks, and only secondarily a nifty entry point into the basics of common nouns in English or Spanish. That said, it might actually be pretty useful for beginners who not only want to see the word they’re trying to memorize, but also get some feedback on how to pronounce it. It’s a neat little thing that maybe someone could take and make into an even neater big thing.

That said, the highest-quality language learning you could hope for should invariably involve a native speaker (or AI indistinguishable from a native speaker) who not only points to a cat and can say “el gato,” but uses the word in full sentences so you can also get a feel for the syntax and flow of a language: things that are difficult to teach directly, and are typically absorbed naturally during the language acquisition process.

Now that’s the sort of XR language learning app that would make me raise an eyebrow.

The post Meta’s Language Learning App for Quest Combines Mixed Reality and AI appeared first on Road to VR.

Meta is rolling out the Conversation Focus feature to the Early Access program of its smart glasses in the US & Canada.

Announced at Connect 2025 back in September, Conversation Focus is an accessibility feature that amplifies the voice of the person in front of you.

It’s the result of more than six years of research into “perceptual superpowers” at Meta. Unlike the hearing aid feature of devices like Apple’s AirPods Pro 2, Meta’s Conversation Focus is designed to be highly directional, amplifying only the voice directly in front of you.

Conversation Focus on Meta smart glasses.

Conversation Focus is available in Early Access on Ray-Ban Meta and Oakley Meta HSTN glasses in the US and Canada.

To join the Early Access program, visit this URL in the US or Canada.

Once your glasses have the feature, you can activate it at any time using “Hey Meta, start conversation focus,” or (perhaps more practically) you can assign it as the long-press action for the touchpad on the side of the glasses.

Save 20% on this ‘piece of junk’ Star Wars Millennium Falcon set from LEGO for a limited time.

The post LEGO Restocks the Star Wars Millennium Falcon at a Record Low for Another Clearance Run appeared first on Kotaku.

The world’s oceans absorbed yet another record-breaking amount of heat in 2025, continuing an almost unbroken streak of annual records since the start of the millennium and fueling increasingly extreme weather events around the globe. More than 90% of the heat trapped by humanity’s carbon emissions ends up in the oceans, making ocean heat content one of the clearest indicators of the climate crisis’s trajectory.

The analysis, published in the journal Advances in Atmospheric Sciences, drew on temperature data collected across the oceans and collated by three independent research teams. The measurements cover the top 2,000 meters of ocean depth, where most heat absorption occurs. The amount of heat absorbed is equivalent to more than 200 times the total electricity used by humans worldwide.

This extra thermal energy intensifies hurricanes and typhoons, produces heavier rainfall and greater flooding, and results in longer marine heatwaves that decimate ocean life. The oceans are likely at their hottest in at least 1,000 years and heating faster than at any point in the past 2,000 years.

Read more of this story at Slashdot.

American automakers who got overenthusiastic about electric vehicles continue to pay the price—literally. Yesterday, General Motors told investors that building and selling fewer EVs will cost the company $6 billion. Still, things could be worse—last month, rival Ford said it would write down $19.5 billion as a result of its failed EV bet.

GM is not actually abandoning its EV portfolio, even as it reduces shifts at some plants and repurposes others—like the one in Orion, Michigan—into assembling combustion-powered pickups and SUVs instead of EVs. The electric crossovers, SUVs, and pickups from Cadillac, Chevrolet, and GMC will remain on sale, with the rebatteried Chevy Bolt joining their ranks this year.

But GM says it expects to sell many fewer EVs than once planned. For one thing, the US government abolished the clean vehicle tax credit, which cut the price of an American-made EV by up to $7,500. That government has also told automakers it no longer cares if they sell plenty of inefficient vehicles. Add to that the extreme hostility shown by car dealers toward having to sell EVs in the first place and one can see why GM has decided to retreat, even if we might not sympathize.

Hackers continue to find ways to sneak malicious extensions into the Chrome web store—this time, the two offenders are impersonating an add-on that allows users to have conversations with ChatGPT and DeepSeek while on other websites and exfiltrating the data to threat actors’ servers.

On the surface, the two extensions identified by Ox Security researchers look pretty benign. The first, named “Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI,” has a Featured badge and 2.7K ratings with over 600,000 users. “AI Sidebar with Deepseek, ChatGPT, Claude and more” appears verified and has 2.2K ratings with 300,000 users.

However, these add-ons are actually sending AI chatbot conversations and browsing data directly to threat actors’ servers. This means that hackers have access to plenty of sensitive information that users share with ChatGPT and DeepSeek as well as URLs from Chrome tabs, search queries, session tokens, user IDs, and authentication data. Any of this can be used to conduct identity theft, phishing campaigns, and even corporate espionage.

Researchers found that the extensions impersonate legitimate Chrome add-ons developed by AITOPIA that add a sidebar to any website with the ability to chat with popular LLMs. The malicious capabilities stem from a request for consent for “anonymous, non-identifiable analytics data.” Threat actors are using Lovable, a web development platform, to host privacy policies and infrastructure, obscuring their processes.

Researchers also found that if you uninstalled one of the extensions, the other would open in a new tab in an attempt to trick users into installing that one instead.

If you’ve added AI-related extensions to Chrome, go to chrome://extensions/ and look for the malicious impersonators. Hit Remove if you find them. As of this writing, the extensions identified by Ox no longer appear in the Chrome Web Store.

As I’ve written about before, malicious extensions occasionally evade detection and gain approval from browser extension stores by posing as legitimate add-ons, even earning “Featured” and “Verified” tags. Some threat actors playing the long game will convert extensions to malware several years after launch. This means you can’t blindly trust ratings and reviews, even if they’ve been accrued over time.

To minimize risk, you should always vet browser extensions carefully (even those that appear legit) for obvious red flags, like misspellings in the description and a large number of positive reviews accumulated in a short time. Head to Google or Reddit to see if anyone has identified the add-on as malicious or found any issues with the developer or source. Make sure you’re downloading the right extension—threat actors often try to confuse users with names that appear similar to popular add-ons.

Finally, you should regularly audit your extensions and remove those that aren’t essential. Go to chrome://extensions/ to see everything you have installed.

NASA has decided to bring the Crew-11 astronauts home a month earlier than originally planned due to a “medical concern” with one of them. This is the first time in its history that the space agency is cutting a mission short due to a medical issue, but it didn’t identify the crew member or divulge the exact situation and its severity. The astronauts will be heading back to Earth in the coming days. NASA Administrator Jared Isaacman said the agency will be releasing more details about their flight back home within 48 hours.

The agency previously postponed an International Space Station (ISS) spacewalk scheduled for January 8, citing a medical concern with a crew member that appeared the day before. NASA’s chief health and medical officer, James “JD” Polk, said the affected astronaut is “absolutely stable” and that this isn’t a case of an emergency evacuation. The ISS has a “robust suite of medical hardware” onboard, he said, but not enough for a complete workup to determine a diagnosis. Without a proper diagnosis, NASA doesn’t know if the astronaut’s health could be negatively affected by the environment aboard the ISS. That is why the agency is erring on the side of caution.

Crew-11 left for the space station on August 1 and was supposed to come back to Earth on or around February 20. After they leave the station, only three people will remain: two cosmonauts and one astronaut, who will be in charge of all the experiments currently being conducted on the orbiting lab. The team’s replacement, Crew-12, was supposed to head to the ISS in mid-February, but NASA is considering sending the astronauts to the station earlier than that.

This article originally appeared on Engadget at https://www.engadget.com/science/space/nasa-is-ending-crew-11-astronauts-mission-a-month-early-140000750.html?src=rss

Dolby may have announced Dolby Vision 2 a few months ago, but the company gave the new platform its first big reveal at CES 2026. I got the chance to see the improvements in person for the first time, thanks to a variety of demos and Q&A sessions. Dolby Vision 2 will be available this year, but initially, it will be limited. As such, I’ve compiled the info on where the image engine will be available first, and what’s likely to come next in terms of where and how you can use it. But first, let’s quickly summarize what Dolby Vision 2 will even do for your TV.

Dolby Vision 2 is Dolby’s next-generation image engine that the company announced in September. The new standard will do several things to improve picture quality on your TV, including content recognition that optimizes your TV based on what and where you’re watching. This first element will improve scenes that many viewers complain are too dark, compensate for ambient lighting and apply motion adjustments for live sports and gaming.

Dolby Vision 2 will also deliver new tone mapping for improved color reproduction. I witnessed this firsthand in various demos at CES, and for me this is the biggest difference between the current Dolby Vision and DV2.

There’s also a new Authentic Motion feature that will provide the optimal amount of smoothing so that content appears more “authentically cinematic,” according to Dolby. This means getting rid of unwanted judder, but stopping short of the so-called soap opera effect.

Essentially, Dolby is taking advantage of all of the capabilities of today’s TVs, harnessing the improvements to display quality and processing power that companies have developed in the decade since Dolby Vision first arrived.

The biggest Dolby Vision 2 news at CES was the first three TV makers that have pledged support for the new standard. Hisense is bringing it to its 2026 RGB MiniLED TVs, including the UX, UR9 and UR8. The company also plans to add it to more MiniLED TVs with an OTA update. TCL’s 2026 X QD-Mini LED TV Series and C Series will support Dolby Vision 2 via a future update. It will be available on TP Vision’s 2026 Philips OLED TVs, including the OLED811 and OLED911 series as well as the flagship OLED951.

There are sure to be other companies that announce Dolby Vision 2 support in 2026. Sony doesn’t announce its new TVs at CES anymore, so that’s just one of the bigger names that has yet to reveal its hand. Any upcoming TVs that seek to leverage the full suite of tools that Dolby Vision 2 offers will need an ambient light sensor, as that’s one of the key facets of Dolby’s upgrade.

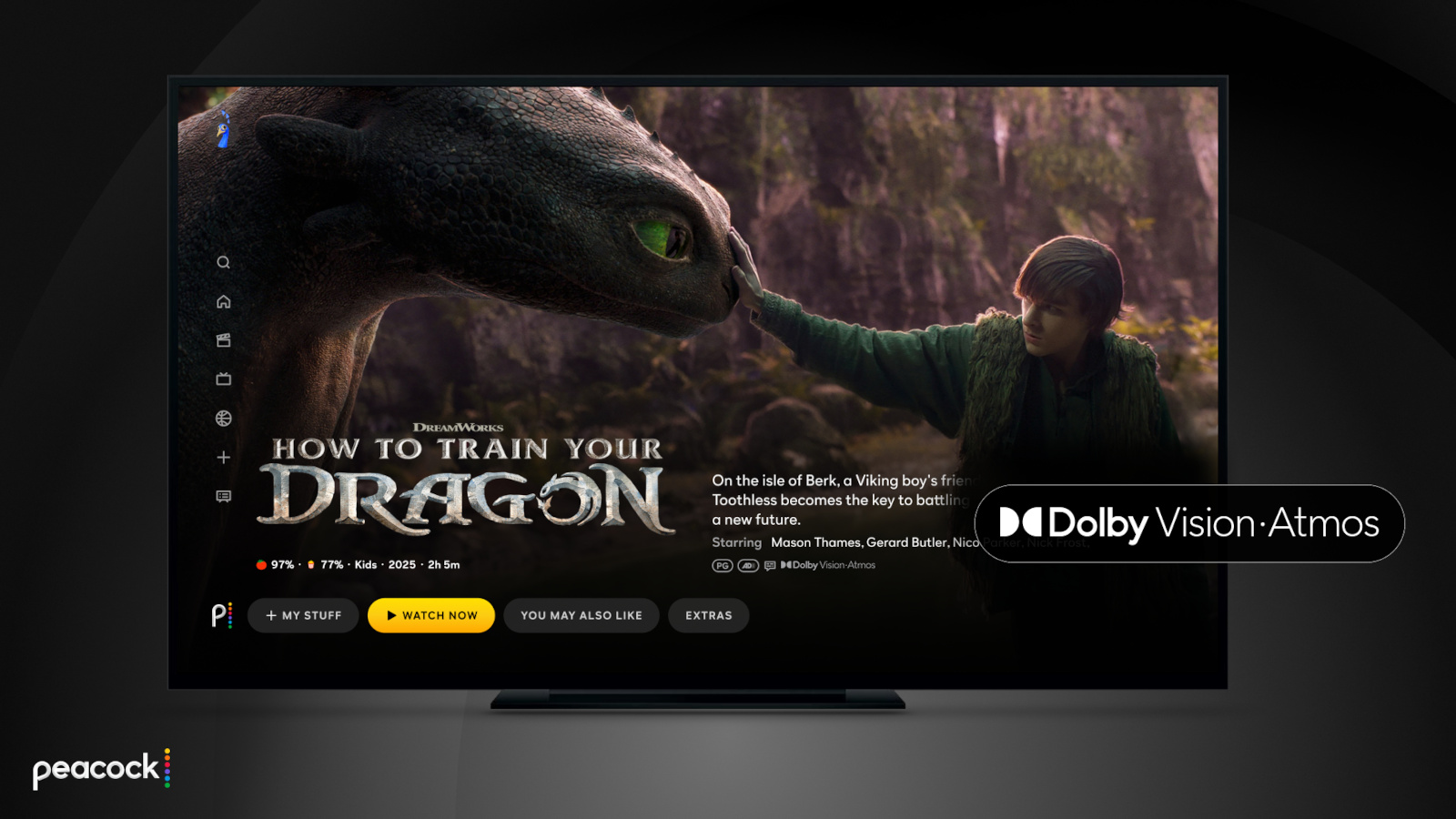

The other big piece of Dolby Vision 2 news at CES 2026 was the first streaming service that will support the platform. Peacock grabbed that honor, and so far it’s the only streamer to pledge support. However, several services support the current version of Dolby Vision, including Netflix, Disney+, Apple TV+, Amazon Prime Video, HBO Max and Paramount+. Like the additional TV support that’s sure to be announced throughout the year, I expect more streaming services will jump on board soon as well.

This article originally appeared on Engadget at https://www.engadget.com/home/home-theater/dolby-vision-2-is-coming-this-year-heres-what-you-need-to-know-140000034.html?src=rss

The Divinity maker elaborated on controversial comments about gen AI from last year.

The post Baldur’s Gate 3 Maker Says Next RPG Could Have Gen AI Assets As Long As They’re Created With ‘Data We Own’ appeared first on Kotaku.