One of the most efficient and stylish tower fans available.

The post Dyson Is Going Nuts with Its Tower Fan, Now Selling for Peanuts Ahead of Prime Day appeared first on Kotaku.

Not to be confused with AMD’s Platform Security Processor (PSP), Google’s PSP Security Protocol (PSP) for in-transit encryption of TCP network connections is now ready for the mainline kernel. This initial PSP encryption support for network connections is set to arrive with the upcoming Linux 6.18 kernel…

This charger powers all your devices, including your laptop.

The post If Your Laptop Needs Power, This 145W Portable Power Bank Is Selling for Peanuts Ahead of October Prime Day appeared first on Kotaku.

This sub-$200 laptop is flying off the shelves on Amazon.

The post 3x Cheaper Than Apple Headphones, This HP Laptop with Windows 11 Is Flying Off the Shelves appeared first on Kotaku.

PDFs remain a common way to share documents because they preserve formatting across devices and platforms. Sometimes, however, you end up with several separate PDFs that would be easier to handle as a single file. Perhaps you’re sending a client multiple contracts or keeping all your scanned bills in one place. Whatever the reason, combining PDFs is simple once you know which tools to use. Adobe Acrobat offers the most direct approach, but there are plenty of free alternatives to consider as well.

Adobe Acrobat has long been the default solution for editing and managing PDFs. If you already use the desktop app, you can merge files without relying on any third-party tools. Start by opening Acrobat and selecting the Tools tab. From there, choose Combine Files. You’ll see an option to add your documents. Usefully, this doesn’t limit you to just PDFs either. Word files, Excel sheets and image formats are all supported, too.

Once the files appear in the workspace, you can rearrange them by dragging them into the order you want. If there are pages you don’t need, they can be removed before finalizing. Acrobat also gives you the option to turn off automatic bookmarks if you prefer a cleaner output. When everything looks correct, click Combine and the program will generate a single PDF that you can save anywhere on your device.

If you don’t have the desktop version, Adobe also provides a free online tool. The web-based version works in any modern browser. You simply go to Adobe’s merge tool page and drag your PDFs into the upload window. The interface lets you change the order before confirming. Once you click Merge, the combined document is processed and offered as a download.

The service supports up to 100 individual files or 1,500 total pages, which is more than enough for most everyday needs.

If you prefer not to use Adobe or are working with smaller projects, several free services handle PDF merging in a browser. I Love PDF is one of the more popular choices. Using I Love PDF is nice and simple too: you upload your documents, arrange them as needed and download the finished file.

However, there are limits to keep in mind: I Love PDF’s free tier allows up to 25 files or 100 MB per merge, but it remains a quick option when you don’t want to install extra software.
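If you regularly bump into these caps, a quick pre-flight check before uploading can save you a failed merge. The Python sketch below encodes the limits as reported above (Adobe’s online tool: 100 files or 1,500 pages; I Love PDF’s free tier: 25 files or 100 MB). It treats each “or” as two independent caps, which is an assumption, and the class and function names are invented for illustration. It takes page counts and file sizes as inputs rather than reading the PDFs itself, and the services may change their limits at any time.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class MergeLimits:
    """Per-merge caps for an online PDF tool; None means no cap is stated."""
    max_files: int
    max_pages: Optional[int] = None
    max_total_mb: Optional[float] = None


# Figures as reported in this article; the services may change them at any time.
# Assumption: each "X or Y" limit is treated as two independent caps.
ADOBE_ONLINE = MergeLimits(max_files=100, max_pages=1500)
ILOVEPDF_FREE = MergeLimits(max_files=25, max_total_mb=100.0)


@dataclass(frozen=True)
class PdfInfo:
    name: str
    pages: int
    size_mb: float


def limit_problems(files: Sequence[PdfInfo], limits: MergeLimits) -> List[str]:
    """Return the reasons a batch exceeds the caps (an empty list means it fits)."""
    problems = []
    if len(files) > limits.max_files:
        problems.append(f"{len(files)} files exceeds the {limits.max_files}-file limit")
    total_pages = sum(f.pages for f in files)
    if limits.max_pages is not None and total_pages > limits.max_pages:
        problems.append(f"{total_pages} pages exceeds the {limits.max_pages}-page limit")
    total_mb = sum(f.size_mb for f in files)
    if limits.max_total_mb is not None and total_mb > limits.max_total_mb:
        problems.append(f"{total_mb:.1f} MB exceeds the {limits.max_total_mb:g} MB limit")
    return problems


# Example: 30 three-page scanned bills of 1.5 MB each.
bills = [PdfInfo(f"bill-{i:02d}.pdf", pages=3, size_mb=1.5) for i in range(30)]
print(limit_problems(bills, ADOBE_ONLINE))   # → []
print(limit_problems(bills, ILOVEPDF_FREE))  # → ['30 files exceeds the 25-file limit']
```

In this example the batch fits Adobe’s online tool but would need to be split into two merges on I Love PDF’s free tier.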

Sejda is another widely recommended tool. It works similarly, but places an emphasis on security and deletes files after processing. You can upload multiple PDFs, make changes such as reordering or removing pages and then merge them into one file. The free version of Sejda comes with task limits, but for occasional use, it covers the basics well.

Foxit also offers its own online merge service that requires little more than adding files, clicking merge and downloading the result. These web-based solutions are fast and convenient, though they are best suited to smaller, non-sensitive projects, given the need to upload documents to a server.

For those who prefer keeping documents offline, open source and freeware options are available. PDFsam Basic is a long-standing desktop application that runs on Windows, macOS and Linux. Unlike online tools, everything happens locally, which is good if you have concerns about privacy.

After installing the program, you open the Merge module, select your files and choose whether to include features such as bookmarks or a table of contents. Once you’re satisfied with the settings, the program merges the documents into a single file stored directly on your computer.

Windows users can also turn to PDF24 Creator. It adds a virtual printer and a toolbox with options for editing. Within the toolbox, you select Merge Files and then arrange them in the preview window. After confirming, the program saves the combined PDF to your system.

Both PDFsam and PDF24 are free, lightweight and reliable, making them strong alternatives if you need an offline solution without subscribing to Adobe Acrobat.

If you’re on a Mac, be aware that you don’t necessarily need third-party software. Apple’s Preview app, which comes pre-installed on macOS, has a built-in way to combine PDFs.

Open one of your files in Preview, then enable the thumbnail sidebar from the View menu. With the sidebar visible, you can drag another PDF directly into it, dropping the new document either before or after the existing pages. Once the arrangement looks correct, choose Export as PDF from the File menu to create the combined version. It’s a simple solution that requires no downloads and works well for basic needs.

The best option depends on how often you work with PDFs and whether privacy is a concern. Adobe Acrobat provides the most features and the smoothest workflow, but it requires a subscription for desktop use. The free online version is fine for occasional merging, especially if you want something quick and easy.

Services like I Love PDF, Sejda and Foxit offer comparable convenience, though file size limits may restrict larger projects. Desktop tools such as PDFsam Basic and PDF24 Creator are better suited for anyone who wants to keep files local and avoid upload restrictions. Mac users benefit from the built-in Preview app, which covers the basics without requiring extra software.

This article originally appeared on Engadget at https://www.engadget.com/computing/how-to-combine-pdf-files-120046452.html?src=rss

Last week’s Apple event meant we were in for a barrage of reviews this week. We spent lots of time putting the iPhone 17 family, including the new iPhone Air, through its paces. We also tested the AirPods Pro 3 and Apple Watch Series 11 that were unveiled just over a week ago. In non-Apple reviews, there’s in-depth analysis of a premium Chromebook and the latest Tamagotchi device. Read on to catch up on everything you might’ve missed over the last few weeks.

Apple replaced the iPhone 16 Plus with the ultra-thin iPhone Air, but the new phone is more than just a gimmick. As senior reviews writer Sam Rutherford observed, the company opted for sleekness with a purpose, and it did so without sacrificing too much battery life. “While Apple might not want to say so just yet, I’m willing to bet that this device will also be the template for an upcoming foldable iPhone,” he concluded.

We’ve become bored with Apple’s trickle-down scheme for the regular iPhone over the years. The company has repeatedly opted to bring features from the Pro line down to these devices. This time, it finally gave the iPhone 17 a 120Hz display, and you won’t have to wait for the best camera updates. “Even if you’re coming from the iPhone 16, you’ll reap the benefits of the overhauled display and improved cameras,” I wrote. “I don’t say any of that lightly as I’m not a person who recommends getting a new phone every year.”

The Pro and Pro Max versions of the iPhone have always held a camera advantage over the regular model, and that’s still true. But the main differences now also include better thermal management, an aluminum unibody case and an optional 6.9-inch display. “This year’s iPhone lineup is forcing me to re-think the idea of a Pro phone,” managing editor Cherlynn Low said. “Is it one that looks and feels expensive or is it one that’s slightly more durable and maybe doesn’t appear as stylish?”

If Apple had only improved both the active noise cancellation (ANC) and sound performance of the AirPods Pro 3, it would’ve been a decent upgrade. However, the company went well beyond that with the additions of Live Translation and heart-rate tracking. But the best part about this model is the strong possibility that the company isn’t done with it yet. “If recent history is any indication, the company will continue to add new features to this third-generation version,” I said. “I highly doubt that Apple is finished exploiting the power of the H2 chip, so it’s just getting started with what the AirPods Pro 3 can do.”

The best smartwatch for iPhone owners keeps getting better. With upgrades to design, battery life, health monitoring and more, the Series 11 is a big refresh for the wearable device. However, it might not be the best option for most people. “With the Watch SE 3, you’ll still be able to access a wide range of health and fitness features like wrist temperature monitoring, sleep score, emergency SOS, fall and crash detection and more,” Cherlynn concluded. “It’s a compelling option at an appealing price.”

Performance varies widely among Chromebooks, but Acer’s Chromebook Plus Spin 514 offers power, longevity and utility. The main downside is that you’ll have to pay a premium for it. “At $700, we’re pushing the top of what anyone should spend on a Chromebook,” deputy editor Nathan Ingraham wrote. “While the more powerful chip and long battery life will be worth it for some people, Acer itself is providing some strong competition with its standard Chromebook Plus 514 which came out this summer.”

If taking care of a virtual pet is more your speed these days, weekend editor Cheyenne McDonald spent some time tending to her digital flock. “All in all, Tamagotchi Paradise feels fuller than 2023’s Uni, especially as the latter existed at its launch before all the downloadable content started coming in,” she said. “There’s a lot of fun to be had with this one, so long as you’re open to a little (okay, a lot of) change.”

This article originally appeared on Engadget at https://www.engadget.com/engadget-review-recap-iphone-17-lineup-airpods-pro-3-apple-watch-series-11-and-more-120014319.html?src=rss

This Harry Potter LEGO set is selling like hotcakes at this price.

The post LEGO Is Offloading the Harry Potter Hogwarts Castle for Early Prime Day, Now Selling at a Steal appeared first on Kotaku.

Mickey Mouse’s first movie Steamboat Willie entered the public domain in 2024.

Now one of America’s largest personal injury firms is suing Disney, reports the Associated Press, “in an effort to get a ruling that would allow it to use Steamboat Willie in advertisements…”

[The law firm said] it had reached out to Disney to make sure the entertainment company wouldn’t sue them if they used images from the animated film for their TV and online ads. Disney’s lawyers responded by saying they didn’t offer legal advice to third parties, according to the lawsuit. Morgan & Morgan said it was filing the lawsuit to get a decision because it otherwise feared being sued by Disney for trademark infringement if it used Steamboat Willie.

“Without waiver of any of its rights, Disney will not provide such advice in response to your letter,” Disney’s attorneys wrote in their letter (adding “Very truly yours…”). A local newscast showed a glimpse of the letter, along with a few seconds of the ad (which ends with Minnie Mouse pulling out a cellphone to call for a lawyer…)

Attorney John Morgan tells the newscast that Disney’s legal team “is playing cute, and so we’re just trying to get a yes or no answer. They wrote us back a bunch of mumbo-jumbo that made no sense, didn’t answer the question. We tried it again, they didn’t answer the question…” (The newscast adds that the case isn’t expected to go to court for at least a year.)

Read more of this story at Slashdot.

Code was open-sourced this week and posted to the Linux kernel mailing list as a “request for comments” (RFC) for a multi-kernel architecture. The proposal could allow multiple independent kernel instances to coexist on a single physical machine, with each kernel running on dedicated CPU cores while sharing the underlying hardware resources. This could also enable more complex use cases, such as real-time (RT) kernels running on select CPU cores…

Over the past few years, Google engineers have been reimplementing Android’s Binder driver in the Rust programming language. Binder is a critical part of Android for inter-process communication (IPC), and now, with Linux 6.18, it looks like the Rust rewrite will be upstreamed…

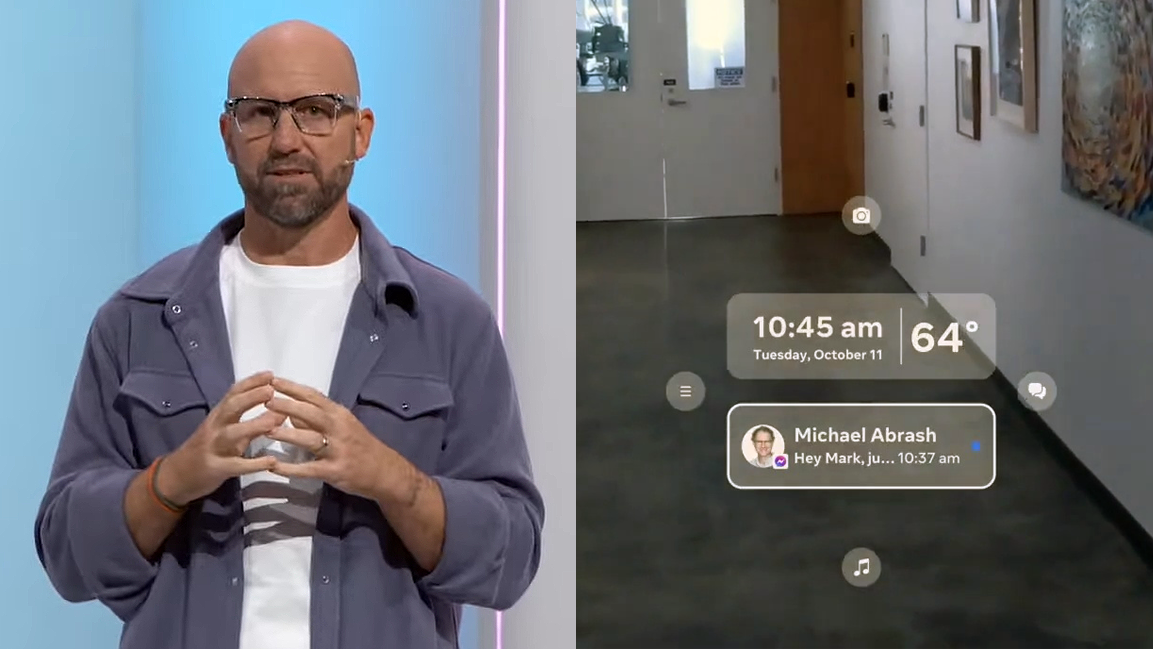

When Meta finally unveiled its newest smart glasses, CEO Mark Zuckerberg “drew more snickers than applause,” wrote the New York Times. (Mashable points out a video call failing onstage followed by an unsuccessful recipe demonstration.)

Meta chief technology officer Andrew Bosworth later explained the funny reason their demo didn’t work, reports TechCrunch, while answering questions on Instagram:

“When the chef said, ‘Hey, Meta, start Live AI,’ it started every single Ray-Ban Meta’s Live AI in the building. And there were a lot of people in that building,” Bosworth explained. “That obviously didn’t happen in rehearsal; we didn’t have as many things,” he said, referring to the number of glasses that were triggered… The second part of the failure had to do with how Meta had chosen to route the Live AI traffic to its development server to isolate it during the demo. But when it did so, it did this for everyone in the building on the access points, which included all the headsets. “So we DDoS’d ourselves, basically, with that demo,” Bosworth added… Meta’s dev server wasn’t set up to handle the flood of traffic from the other glasses in the building — Meta was only planning for it to handle the demos alone.

The issue with the failed WhatsApp call, on the other hand, was the result of a new bug. The smart glasses’ display had gone to sleep at the exact moment the call came in, Bosworth said. When Zuckerberg woke the display back up, it didn’t show the answer notification to him. The CTO said this was a “race condition” bug… “We’ve never run into that bug before,” Bosworth noted. “That’s the first time we’d ever seen it. It’s fixed now, and that’s a terrible, terrible place for that bug to show up.” He stressed that, of course, Meta knows how to handle video calls, and the company was “bummed” about the bug showing up here… “It really was just a demo fail and not, like, a product failure,” he said.
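Bosworth’s explanation describes a lost-event race: the incoming-call notification arrived at the exact moment the display went to sleep, and waking the display didn’t bring it back. The Python sketch below is a hypothetical toy model (every class, method and event name is invented), not Meta’s code. Rather than using real threads, it replays the unlucky ordering deterministically and shows one common kind of fix: keep the pending call as state that is redrawn on wake, instead of treating it as a one-shot event that is dropped if the display isn’t on.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BuggyHud:
    """Toy HUD: a call prompt is drawn only if the display is awake when the call arrives."""
    awake: bool = True
    drawn: List[str] = field(default_factory=list)

    def sleep(self) -> None:
        self.awake = False

    def on_incoming_call(self, caller: str) -> None:
        if self.awake:
            self.drawn.append(f"Answer call from {caller}?")
        # Bug: while asleep, the event is silently dropped.

    def wake(self) -> None:
        self.awake = True  # Nothing re-checks for calls that arrived while asleep.


@dataclass
class FixedHud(BuggyHud):
    """Same HUD, but the incoming call is kept as state and redrawn on wake."""
    pending_call: Optional[str] = None

    def on_incoming_call(self, caller: str) -> None:
        self.pending_call = caller  # Remember the call even if nothing is drawn yet.
        super().on_incoming_call(caller)

    def wake(self) -> None:
        super().wake()
        if self.pending_call is not None:
            self.drawn.append(f"Answer call from {self.pending_call}?")


def replay(hud: BuggyHud, events) -> List[str]:
    """Apply (method_name, *args) events in order and return what was drawn."""
    for name, *args in events:
        getattr(hud, name)(*args)
    return hud.drawn


# The unlucky ordering: the display sleeps just as the call comes in.
RACE = [("sleep",), ("on_incoming_call", "Alice"), ("wake",)]
print(replay(BuggyHud(), RACE))  # → []
print(replay(FixedHud(), RACE))  # → ['Answer call from Alice?']
```

On real hardware the ordering of these events is nondeterministic, which is why a bug like this can go unseen in testing and then appear at the worst possible moment.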

Thanks to Slashdot reader fjo3 for sharing the news.

Read more of this story at Slashdot.

This month, Shuttle Computers launched the SPCNV03, an ultra-compact edge computer based on the NVIDIA Jetson Orin Nano. It delivers up to 40 TOPS of AI performance in a fanless aluminum chassis and carries EMC, Safety, and RoHS certifications for use in robotics, automation, video analytics, and smart city applications. The SPCNV03 is built on […]

The consumer advocacy nonprofit PIRG (Public Interest Research Group) is now petitioning Microsoft to reconsider pulling support for Windows 10 in 2025, since “as many as 400 million perfectly good computers that can’t upgrade to Windows 11 will be thrown out.” In a petition addressed to Microsoft CEO Satya Nadella, the group warned the October 14 end of free support could cause “the single biggest jump in junked computers ever, and make it impossible for Microsoft to hit their sustainability goals.”

About 40% of PCs currently in use can’t upgrade to Windows 11, even if users want to… Less than a quarter of electronic waste is recycled, so most of those computers will end up in landfills.
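The two PIRG figures can be cross-checked with simple arithmetic. This assumes, though the source doesn’t say so, that the 400 million and the 40% describe the same installed base, and that the under-a-quarter recycling rate would apply to these machines as well:

```latex
\begin{aligned}
\text{implied PCs in use} &\approx \frac{400\ \text{million}}{0.40} = 1.0\ \text{billion} \\
\text{not recycled, if all are discarded} &> \tfrac{3}{4} \times 400\ \text{million} = 300\ \text{million}
\end{aligned}
```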

Consumer Reports also recently urged Microsoft not to “strand millions of customers.” And now more groups are pushing back, according to a post from the blog Windows Central:

The Restart Project co-developed the “End of 10” toolkit, which is designed to support Windows 10 users who can’t upgrade to Windows 11 after the operating system hits its end-of-support date.

They also note that a Paris-based company called Back Market plans to sell Windows 10 laptops refurbished with Ubuntu Linux or ChromeOS Flex. (“We refuse to watch hundreds of millions of perfectly good computers end up in the trash as e-waste,” explains their web site.) Back Market’s ad promises an “up-to-date, secure operating system — so instead of paying for a new computer you don’t need, you can help us give this one a brand new life.”

Right now Windows 10 holds 71.9% of Microsoft’s market share, with Windows 11 at 22.95%, according to figures from StatCounter cited by the blog Windows Central. And HP and Dell “recently indicated that half of the global PCs are still running Windows 10,” according to another Windows Central post…

Read more of this story at Slashdot.

An anonymous reader shared this article from the Washington Post:

A student taking an online quiz sees a button appear in their Chrome browser: “homework help.” Soon, Google’s artificial intelligence has read the question on-screen and suggests “choice B” as the answer. The temptation to cheat was suddenly just two clicks away Sept. 2, when Google quietly added a “homework help” button to Chrome, the world’s most popular web browser. The button has been appearing automatically on the kinds of course websites used by the majority of American college students and many high-schoolers, too. Pressing it launches Google Lens, a service that reads what’s on the page and can provide an “AI Overview” answer to questions — including during tests.

Educators I’ve spoken with are alarmed. Schools including Emory University, the University of Alabama, the University of California at Los Angeles and the University of California at Berkeley have alerted faculty to how the button appears in the URL box of course sites and to their limited ability to control it.

Chrome’s cheating tool exemplifies Big Tech’s continuing gold rush approach to AI: launch first, consider consequences later and let society clean up the mess. “Google is undermining academic integrity by shoving AI in students’ faces during exams,” says Ian Linkletter, a librarian at the British Columbia Institute of Technology who first flagged the issue to me. “Google is trying to make instructors give up on regulating AI in their classroom, and it might work. Google Chrome has the market share to change student behavior, and it appears this is the goal.”

Several days after I contacted Google about the issue, the company told me it had temporarily paused the homework help button — but also didn’t commit to keeping it off. “Students have told us they value tools that help them learn and understand things visually, so we’re running tests offering an easier way to access Lens while browsing,” Google spokesman Craig Ewer said in a statement.

Read more of this story at Slashdot.

A patch queued into the PCI subsystem’s “next” branch ahead of the Linux 6.18 merge window will uniformly expose each device’s PCI Device Serial Number via sysfs for easy programmatic parsing…
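Once such an attribute exists, reading it is just a matter of walking the PCI device directories in sysfs. The Python sketch below assumes the attribute is a file named `serial_number` under each device in `/sys/bus/pci/devices`; that name is a hypothetical placeholder, so check the merged patch or the kernel’s sysfs ABI documentation for the real one. It returns each value as a raw string, since the exact output format isn’t described here, and skips devices that lack the attribute (older kernels, or hardware without a serial number).

```python
from pathlib import Path
from typing import Dict

# HYPOTHETICAL attribute name; verify against the actual patch / sysfs ABI docs.
SERIAL_ATTR = "serial_number"


def read_pci_serials(devices_dir: str = "/sys/bus/pci/devices",
                     attr: str = SERIAL_ATTR) -> Dict[str, str]:
    """Map PCI addresses (e.g. '0000:01:00.0') to the raw serial-number string.

    Devices that lack the attribute, or whose attribute can't be read,
    are skipped rather than treated as errors.
    """
    serials: Dict[str, str] = {}
    root = Path(devices_dir)
    if not root.is_dir():
        return serials  # Not Linux, or sysfs not mounted.
    for dev in sorted(root.iterdir()):
        try:
            serials[dev.name] = (dev / attr).read_text().strip()
        except OSError:
            continue  # Missing attribute, permission denied, etc.
    return serials


if __name__ == "__main__":
    for address, serial in read_pci_serials().items():
        print(address, serial)
```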

Meta Ray-Ban Display is undeniably impressive technology, but it has notable flaws, while the Meta Neural Band works so well it feels like magic.

If you somehow missed it: Meta Ray-Ban Display is the company’s first smart glasses product with a display of any kind. Unveiled at Connect 2025 this week, the product will hit physical US retailers on September 30, priced at $800 with Meta’s long-in-development sEMG wristband, called Meta Neural Band, in the box.

You can read about the specifications, features, and availability of Meta Ray-Ban Display here. But what is it actually like to use? At Meta Connect 2025, I found out.

Meta invited UploadVR to Connect 2025 and provided accommodation for two nights. But it did not provide us with a Meta Ray-Ban Display demo ahead of the keynote. Outlets with much smaller reach than the leading XR news site received private behind-closed-doors demos before the product was unveiled, but UploadVR did not. Last year, we didn’t get a demo of the Orion AR glasses prototype at all, but developer Alex Coulombe shared his impressions on our site.

My time with the glasses and wristband took place much later in the day, alongside other attendees at Meta’s communal hardware demo area, where I had less time to explore what the devices are capable of, and was constrained by being shepherded along a route as part of a group.

Whatever Meta’s reason for not including UploadVR in the pre-keynote private demos, that’s partially why this article is coming to you later than other sources. I flew across the Atlantic to attend Connect. By the time of my informal demo, I had spent all day writing up my Horizon Hyperscape impressions and the keynote announcements, and it was 4am in my home time zone. Afterwards I slept, and upon waking it was time to cover the developer keynote announcements. After this I tried Meta Ray-Ban Display for a second time, and then headed to the airport for the 10-hour flight home.

While some of the other hands-on impressions of Meta Ray-Ban Display I’ve read and watched seem to follow a similar format, with repeated phrases that suggest distributed talking points, the following are my unfiltered opinions. I wasn’t given a private walkthrough, so I’m not burdened with any priming for my thoughts.

Other than the cost, the primary tradeoff of adding a display to smart glasses is that it also adds weight and bulk, especially to maintain acceptable battery life.

Meta Ray-Ban Display weighs 69 grams, compared to the 52 grams of the regular Ray-Ban Meta glasses, and 45 grams of the non-smart Ray-Ban equivalent. It’s also noticeably bulkier. The rims are thicker, and the temples even more so.
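Putting those weights side by side as percentages (simple arithmetic on the figures above):

```latex
\begin{aligned}
69\,\text{g} - 52\,\text{g} &= 17\,\text{g}, & \tfrac{17}{52} &\approx 0.33 \;\Rightarrow\; \text{about } 33\% \text{ heavier than Ray-Ban Meta} \\
69\,\text{g} - 45\,\text{g} &= 24\,\text{g}, & \tfrac{24}{45} &\approx 0.53 \;\Rightarrow\; \text{about } 53\% \text{ heavier than non-smart Ray-Bans}
\end{aligned}
```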

With the regular Ray-Ban Meta glasses, people unfamiliar with them almost never clock that you’re wearing smart glasses. The temples are slightly thicker than usual, but the frames are essentially the same. It’s only the camera that gives them away. With Meta Ray-Ban Display, it’s clear that you’re not wearing regular glasses. And I don’t mean due to the display.

The clickbait YouTube thumbnails you may have seen are fake — none of the nearby Connect attendees I asked could tell whether I even had the display on or not (Meta says the display has just 2% light leakage). But the noticeably increased thickness of the entire frame suggests that something’s not normal. These glasses are unavoidably chunky.

How much that matters will vary greatly from person to person. For some people it won’t matter at all; these days, thick-framed glasses can even be a fashion choice. For others it will be a total dealbreaker. I’ll be extremely curious to see how much the bulk affects sales and retention, since the problem should only get worse with eventual true AR glasses.

When it comes to comfort, few people not employed by Meta have had enough time with these glasses to say whether they’re comfortable enough for all-day wear. For me, in the two 15-20 minute sessions they felt perfectly fine, and I felt no discomfort at all. But this isn’t enough time to make a true assessment.

UploadVR plans to purchase a unit for a review next month – we haven’t heard anything about getting a unit from Meta – and we’ll wear the HUD glasses until the battery runs out to answer the comfort question more definitively.

Like the regular Ray-Ban Meta glasses, you can control Meta Ray-Ban Display with Meta AI by using your voice, or use the button and touchpad on the side for basic controls like capturing images or videos and playing or pausing music. But unlike any other smart glasses to date, it also comes with an sEMG wristband in the box, Meta Neural Band.

Meta Neural Band works by sensing the activation of the muscles in your wrist which drive your finger movements, a technique called surface electromyography (sEMG).

sEMG enables precise finger tracking with very little power draw, and without the need to be in view of a camera.
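As a rough illustration of the signal-processing idea, a classic first step with sEMG is to compute the root-mean-square (RMS) envelope of the raw electrical signal and flag windows where muscle activity rises above a resting baseline. This is a generic textbook technique, not Meta’s method, which it hasn’t published and which presumably involves multiple electrodes and trained models. In the Python sketch below, the window size, threshold and synthetic signal are arbitrary choices for the example.

```python
import math
from typing import List, Sequence


def rms_envelope(signal: Sequence[float], window: int) -> List[float]:
    """RMS of the signal over consecutive, non-overlapping windows.

    Trailing samples that don't fill a whole window are ignored.
    """
    return [
        math.sqrt(sum(x * x for x in signal[start:start + window]) / window)
        for start in range(0, len(signal) - window + 1, window)
    ]


def active_windows(signal: Sequence[float], window: int, threshold: float) -> List[int]:
    """Indices of windows whose RMS exceeds the threshold, i.e. 'muscle active'."""
    return [i for i, level in enumerate(rms_envelope(signal, window)) if level > threshold]


# Synthetic trace: 100 samples at rest, a 100-sample burst of activity, then rest again.
rest = [0.05, -0.05] * 50
burst = [1.0, -1.0] * 50
trace = rest + burst + rest
print(active_windows(trace, window=50, threshold=0.3))  # → [2, 3]
```

RMS is used rather than a plain average because raw EMG swings positive and negative around zero, so its mean stays near zero even during strong contractions.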

Meta Neural Band should get around 18 hours of battery life, Meta claims, and it has an IPX7 water rating.

In its current form, Meta Neural Band is set up to detect four finger gestures:

Meta also plans to release a firmware update in December that will let you finger-trace letters on a physical surface, such as your leg, to enter text. It sounds straight out of science fiction, and The Verge’s Victoria Song says it works “shockingly well”. But I wasn’t able to try it.

The Meta Neural Band gestures control a floating fixed heads-up display (HUD) visible only to your right eye, positioned slightly below and to the right of center.

This fixed HUD covers around 20 degrees of your vision. To understand how wide that is, extend your right arm fully straight and then turn just your hand 90 degrees inward, keeping the rest of your arm straight. To understand how tall, do the same but turn your hand upwards. I apologize for the physical discomfort you just experienced, but you’ve now learned how little of your right eye’s vision Meta’s HUD occupies.

This clip from Meta shows the 3 tabs of the system interface, and how you swipe between them.

Meta’s system interface for the HUD looks much like that of a smartwatch, which makes sense given the 600×600 resolution. It has 3 tabs, which you horizontally scroll between:

The problem is that, given the form factor and input system, this is all just too much. It’s too finicky, and takes too many gestures to do what you want. The interface almost certainly could, and very much so should, be significantly streamlined.

The current home tab feels like a waste of space when you don’t have any notifications, showing just the time and a Meta AI button. You should invoke Meta AI through a custom finger gesture, not a UI button, and the home tab should instead have shortcuts to all the other key functionality of the glasses.

Interestingly, all the way back at Connect 2022, Meta showed a prototype demo clip of exactly this. The prototype HUD had a singular tab, with the date, time, weather and latest notification in the center, and shortcuts to the camera, messages, music, and more apps above, to the right, below, and to the left respectively. I’d much prefer to have this in smart glasses than what Meta has today. What happened to it?

Meta Connect 2022 demo of a prototype of the sEMG wristband and HUD. Why doesn’t the shipping product’s interface look like this?

A lot of the friction here will eventually be solved with the integration of eye-tracking. Instead of needing to swipe around menus, you’ll be able to just look at what you want and pinch, akin to the advantages of a touchscreen over arrow keys on a phone. But for now, it feels like using MP3 players before the iPod, or smartphones before the iPhone. sEMG is obviously going to be a huge part of the future of computing. But I strongly suspect it will only be one half of the interaction answer, with eye tracking making the whole.

The other problem with navigating and interacting on Meta Ray-Ban Display is that it’s sluggish, with frequent lag across both of my sessions, which involved two separate units. The interface looked sluggish at times during Mark Zuckerberg and Andrew Bosworth’s keynote demo too.

To be clear, the problem here is the glasses, not the wristband. I know that because the wristband provided immediate haptic feedback when a gesture was recognized, and whenever lag occurred, the frame rate of animations slowed down too.

Meta confirmed to UploadVR that Meta Ray-Ban Display is powered by Qualcomm’s original Snapdragon AR1 Gen 1 chipset, the exact same as used in 2023’s Ray-Ban Meta glasses, their new refresh, and both Oakley Meta smart glasses. I specifically asked whether this was a misprint, given that Qualcomm announced the new higher-end AR1+ chip back in June. But Meta confirmed that it was not a typo. The glasses really are using a two year old chip – and it shows.

(If you’re in the VR space: does this remind you of anything?)

The Meta Neural Band itself picked up every gesture, every time, with a 100% success rate in my time with it. It works so well that it’s hard to believe it’s real. The volume adjustment gesture, for example, where you pinch and twist an imaginary knob, feels like magic. But the glasses Meta Neural Band is paired with today let it down.

Meta Neural Band in Black (left) and Sand (right).

While I’ve heard some people complain that the Meta Neural Band felt too tight, I suspect that their Meta handlers were prioritizing making sure that it performed as well as possible over comfort. In my second demo I adjusted mine to the same tightness I would for my Fitbit, and found the gesture recognition to remain flawless. It just works, and its woven mesh material felt very comfortable.

Speaking of Fitbit, there’s clearly enormous potential here for Meta to evolve the wristband to do more than just sEMG. During the Connect keynote, the company announced Garmin Watch integration for all its smart glasses. But for Meta Ray-Ban Display, why can’t the Meta Neural Band itself collect fitness metrics? I imagine this will be a big focus of successive generations.

The image delivered to your right eye by Meta Ray-Ban Display is sharp and clear, albeit translucent, with higher angular resolution than Apple Vision Pro. But the fact that your left eye sees nothing is a major flaw.
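Angular resolution here means pixels per degree. Combining the 600×600 panel with the roughly 20 degree field of view mentioned earlier gives a rough figure, but the result depends on whether that 20 degrees is measured per side or across the diagonal, which this article does not specify. The diagonal case below uses a small-angle approximation:

```latex
\begin{aligned}
\text{20}^\circ\ \text{per side:} &\quad \frac{600\ \text{px}}{20^\circ} = 30\ \text{px/deg} \\
\text{20}^\circ\ \text{diagonal:} &\quad \theta_{\text{side}} \approx \frac{20^\circ}{\sqrt{2}} \approx 14.1^\circ,
\qquad \frac{600\ \text{px}}{14.1^\circ} \approx 42\ \text{px/deg}
\end{aligned}
```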

If you’re a seasoned VR user, you’ve likely run into a frustrating bug, or played a mod, where a user interface element, shader, or effect only renders in one eye. In smart glasses, it feels just as bad.

A lot of people have asked me whether you could use Meta Ray-Ban Display to watch videos on the go. My answer is that while the glasses already let you watch videos sent to you on Instagram and WhatsApp, I wouldn’t want to. It’s not something I want to do with any monocular display.

Throughout both my Meta Ray-Ban Display sessions, when the display was on I experienced eyestrain. The binocular mismatch induced by a monocular display is just downright uncomfortable. And to be clear, I’ve experienced this before with other monocular glasses – it isn’t unique to Meta’s technology.

I’d want to use Meta Ray-Ban Display to briefly check notifications without needing to look down, and for occasionally glancing at a navigation route. But I definitely wouldn’t want to keep the display up for longer than this, and that rules out use cases like video calling.

Reading and watching impressions of Meta Ray-Ban Display from other outlets and influencers, I’ve been surprised at how many don’t explore the monocular issue at all, beyond briefly mentioning it as a minor tradeoff.

Could I just be more sensitive to binocular rivalry than most people? Maybe. But I also overheard other attendees demoing the glasses voice the same sentiment.

“I’ve actually heard that all day”, an event staffer guiding the demo groups remarked, regarding the visually uncomfortable feeling of the display only showing to one eye.

The problem here is that Meta’s CTO Andrew Bosworth is absolutely correct to point out that the components for a binocular display system would be more than twice as expensive as a monocular one, since it also requires implementing disparity correction. It would also drive up the bulk and weight even further.

Based on my on-background conversations with people in the LCOS and waveguide supply chains, I’d estimate that had Meta gone with a binocular design, including the extra battery and disparity correction required, the product would probably have cost at least $1,200 and weighed around 85 grams.

And yet, all that said, I’d still argue it would have been worth it. Meta Ray-Ban Display is by its nature an early adopter product, and its form factor will only appeal to those who don’t care about its bulk anyway. I may be wrong, but I suspect the early adopters of HUD glasses will gravitate to binocular options once they’re available. Which of the major players will be the first to offer one?

In many ways, the Meta Ray-Ban Display glasses remind me of the Meta Quest Pro headset. It too was held back by having an outdated chip and only one of a key component (in its case a color camera, in Meta Ray-Ban Display’s case a display).

Don’t get me wrong here. I could imagine Meta Ray-Ban Display being immensely useful for checking notifications and directions on the go. But it’s tantalizingly close to being so much more. If it were binocular, had eye tracking, and was driven by a slightly more powerful chip, I think I’d want to use it all day long.

But we’re not there yet, and the product I tried was finicky and monocular.

In the present, as a pair, the glasses and wristband deliver equal parts frustration and delight. But they also fill me with excitement for the future. The Meta Neural Band works so well it feels like magic, and once it’s paired with better glasses, I strongly suspect Meta could have its iPod moment.

Its iPhone moment, on the other hand, will have to wait for true AR.

An anonymous reader shared this report from the Washington Post:

Finnish tech firm Bluefors, a maker of ultracold refrigerator systems critical for quantum computing, has purchased tens of thousands of liters of Helium-3 from the moon — spending “above $300 million” — through a commercial space company called Interlune. The agreement, which has not been previously reported, marks the largest purchase of a natural resource from space.

Interlune, a company founded by former executives from Blue Origin and an Apollo astronaut, has faced skepticism about its mission to become the first entity to mine the moon (which is legal thanks to a 2015 law that grants U.S. space companies the rights to mine on celestial bodies). But advances in its harvesting technology and the materialization of commercial agreements are gradually making this undertaking sound less like science fiction. Bluefors is the third customer to sign up, with an order of up to 10,000 liters of Helium-3 annually for delivery between 2028 and 2037…

Helium-3 is lighter than the Helium-4 gas featured at birthday parties. It’s also much rarer on Earth. But moon rock samples from the Apollo days hint at its abundance there. Interlune has placed the market value at $20 million per kilogram (about 7,500 liters). “It’s the only resource in the universe that’s priced high enough to warrant going out to space today and bringing it back to Earth,” said Rob Meyerson [CEO of Interlune and former president of Blue Origin]…

[H]eat, even in small doses, can cause qubits to produce errors. That’s where Helium-3 comes in. Bluefors makes the cooling technology that allows the computer to operate — producing chandelier-type structures known as dilution refrigerators. Their fridges, used by quantum computer leader IBM, contain a mixture of Helium-3 and Helium-4 that pushes temperatures below 10 millikelvins (or minus-460 degrees Fahrenheit)… Existing quantum computers have been built with more than a thousand qubits, he said, but a commercial system or data center would need a million or more. That could require perhaps thousands of liters of Helium-3 per quantum computer. “They will need more Helium-3 than is available on planet Earth,” said Gary Lai [a co-founder and chief technology officer of Interlune, who was previously the chief architect at Blue Origin]. Most Helium-3 on Earth, he said, comes from the decay of tritium (an isotope of hydrogen) in nuclear weapons stockpiles, but between 22,000 and 30,000 liters are made each year…

“We estimate there’s more than a million metric tons of Helium-3 on the moon,” Meyerson said. “And it’s been accumulating there for 4 billion years.” Now, they just need to get it.

Interlune CEO Meyerson tells the Post, “It’s really all about establishing a resilient supply chain for this critical material,” adding that in the long term he could also see Helium-3 being used for other purposes, including fusion energy.

Read more of this story at Slashdot.

A large U.S.-made bomb left over from World War II was discovered at a construction site, reports the Associated Press:

Police said the bomb was 1.5 meters (nearly 5 feet) in length and weighed about 1,000 pounds (450 kilograms). It was discovered by construction workers in Quarry Bay, a bustling residential and business district on the east side of Hong Kong island… [A police official] said that because of “the exceptionally high risks associated with its disposal,” approximately 1,900 households involving 6,000 individuals were “urged to evacuate swiftly.” The operation to deactivate the bomb began late Friday and lasted until around 11:30 a.m. Saturday. No one was injured in the operation.

Bombs left over from World War II are discovered from time to time in Hong Kong. The city was occupied by Japanese forces during the war, when it became a base for the Japanese military and shipping. The United States, along with other Allied forces, targeted Hong Kong in air raids to disrupt Japanese supply lines and infrastructure.

“Bombs from the war have triggered evacuations and emergency measures around the globe in recent months,” reports CBS News:

Earlier this month, a 500-pound bomb was discovered in Slovakia’s capital during construction work, prompting evacuations. In August, large parts of Dresden, Germany, were evacuated so experts could defuse an unexploded World War II bomb found during clearance work for a collapsed bridge. In June, over 20,000 people were evacuated from Cologne after three unexploded U.S. bombs from the war were found… In March, a World War II bomb was found near the tracks of Paris’ Gare du Nord station. In February, more than 170 bombs were found near a children’s playground in northern England. And in October 2024, a World War II bomb exploded at a Japanese airport.

Read more of this story at Slashdot.