Between CES at the beginning of the month, a series of presentations at Storage Field Day last week and a quarterly earnings report this week, we’ve heard from just about every division of Western Digital about their latest priorities, strategy and roadmaps. Here are the highlights.

Hard Drive Tech: Energy-Assisted Recording, Improved Actuators and Suspension

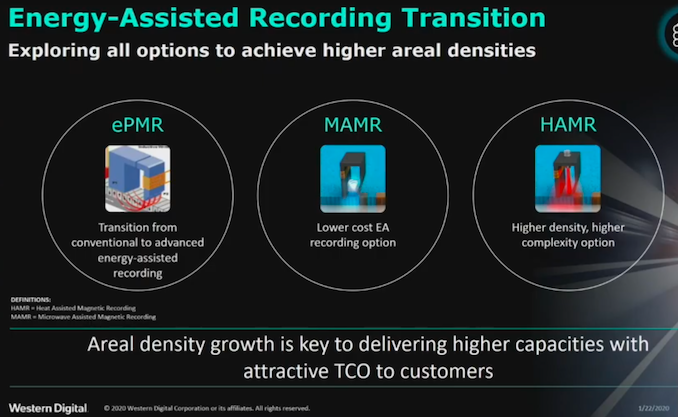

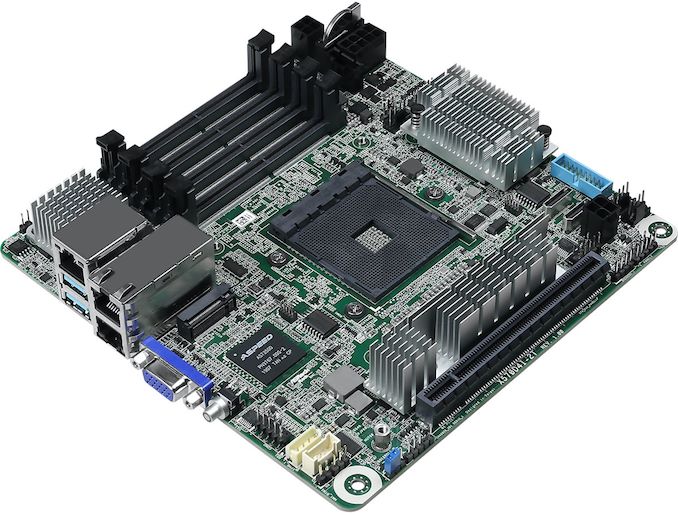

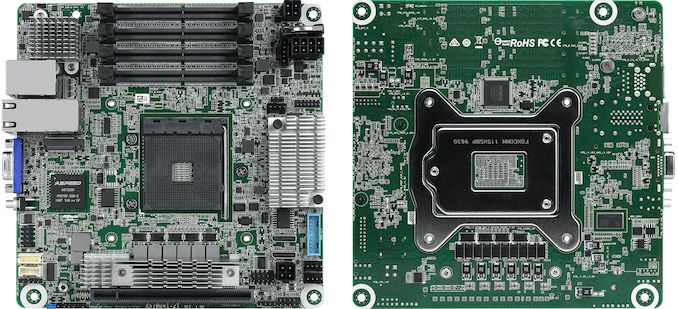

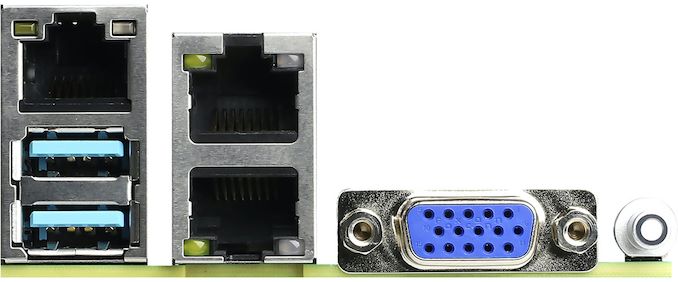

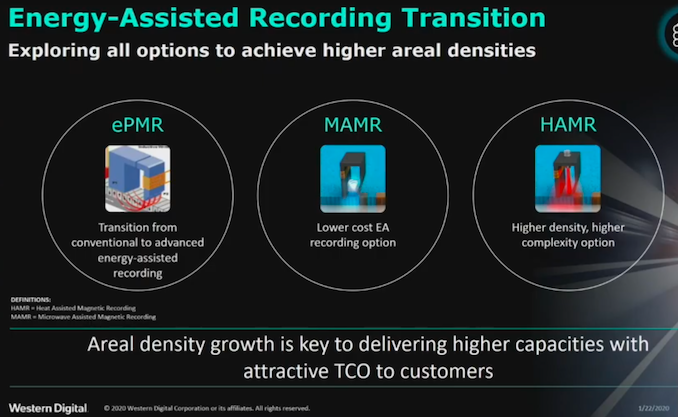

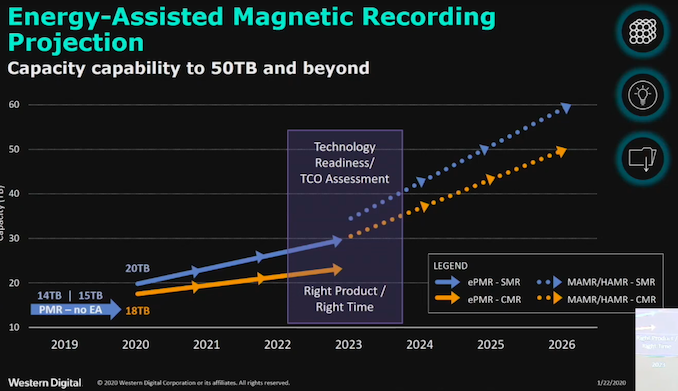

Western Digital’s development of hard drive technology is advancing on several fronts to push the limits of their high-capacity enterprise HDDs. Helium is old news, and used in all their drives larger than 10TB. Lately, the most attention has been focused on Heat Assisted Magnetic Recording (HAMR) and Microwave Assisted Magnetic Recording (MAMR), both of which fall under the heading of energy-assisted recording. Western Digital is still a few years away from deploying either HAMR or MAMR, but their upcoming generation of hard drives takes the first steps in that direction.

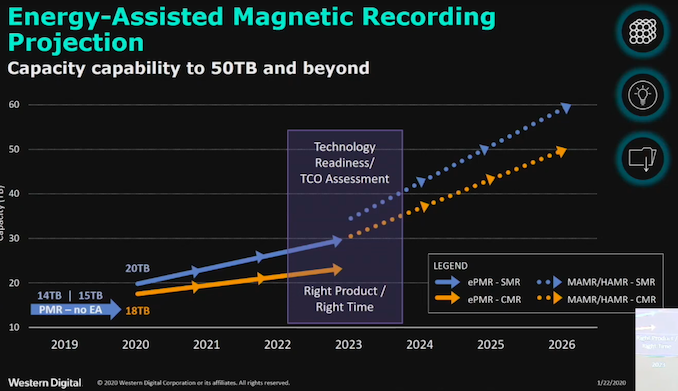

This year, WDC is starting to deliver their latest generation of high-capacity enterprise hard drives which were announced in 2019: the 16TB and 18TB Ultrastar DC HC550 and the 20TB Ultrastar DC HC640 with shingled magnetic recording (SMR). All of these new models will feature WDC’s first energy-assisted recording technology which they have dubbed ePMR. This is still a fairly ill-defined transitional feature, but it is based on some of the parts needed to implement MAMR. WDC’s roadmaps have them sticking with ePMR for a few more years before fully implementing either HAMR or MAMR technology.

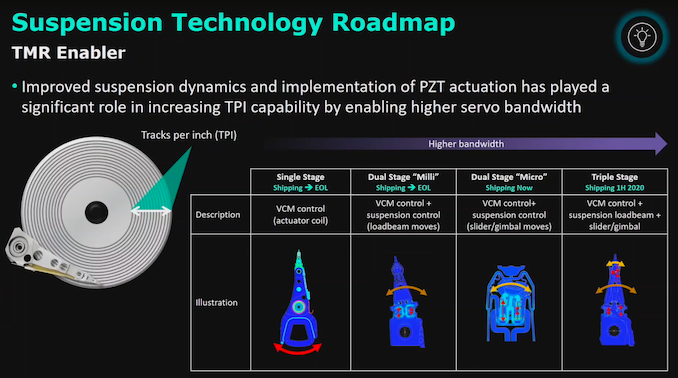

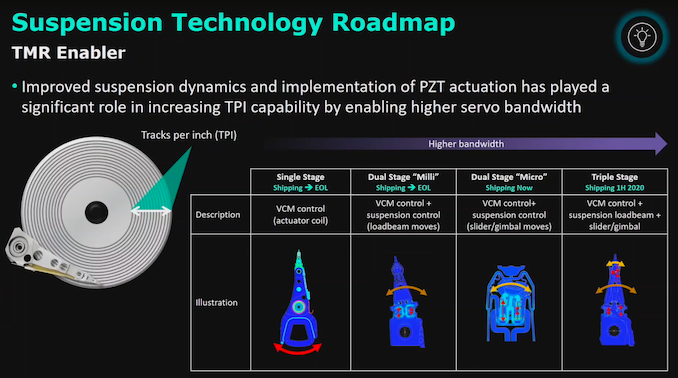

The technology to position hard drive heads has also been improving. The new generation of capacity enterprise HDDs will be Western Digital’s first to use three-stage actuators for faster and more precise seeks. Very roughly, this is akin to giving the arms elbow and wrist joints. This is not to be confused with multi-actuator drives that allow the heads for some platters to move independently from the heads for other platters. Seagate has been making more noise about dual-actuator hard drives and their potential to significantly improve the IOPS/TB figures that have been in decline, but Western Digital is also working on multi-actuator drives; they just haven’t shared plans for bringing them to market.

3D NAND: Price And Layer Count Rising

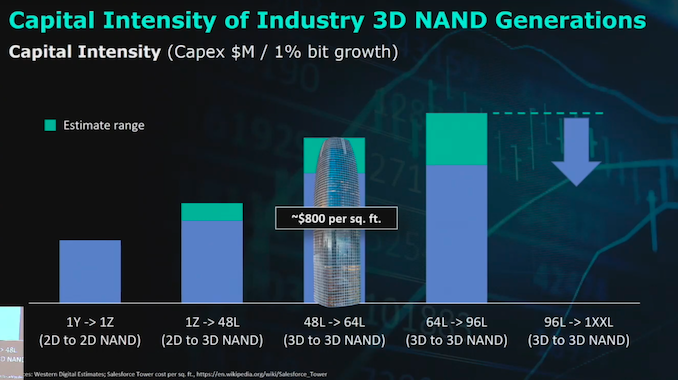

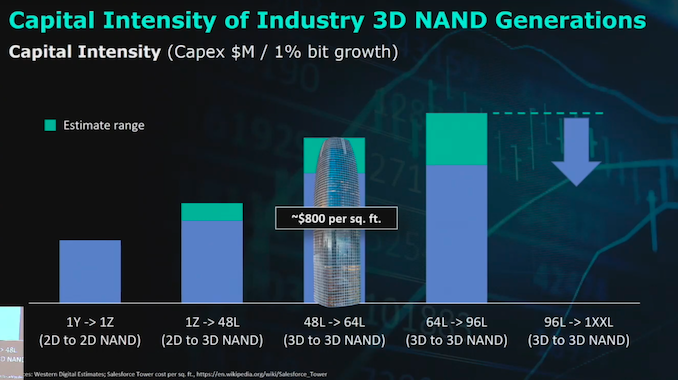

The flash memory side of Western Digital is currently focused more on delivering incremental improvements than on introducing major technological changes. Low flash memory prices in 2018 and 2019 caused WDC and competitors to take it slow during the 64L to 96L transition, and WDC planned to make the next generation less capital-intensive after several generations of increasing transition costs.

Demand for flash memory has now caught up with supply and is expected to significantly outgrow supply later this year, in part due to the CapEx cuts across most of the industry. Western Digital doesn’t expect to significantly increase their wafer output this year, but the transition to BiCS5 will help increase bit output somewhat. Western Digital and Kioxia have brought online their new K1 fab in Kitakami, Iwate prefecture, Japan, joining their several fabs in Yokkaichi, Mie prefecture. However, for now the extra fab capacity simply gives them the slack necessary to transition other fabs to BiCS5 and it is not yet helping increase total wafer output.

The fifth generation of Western Digital/Kioxia BiCS 3D NAND has now officially been revealed as a 112-layer design, a modest increase of roughly 17% in layer count over the 96-layer BiCS4. The two companies have also been working to improve density in several ways other than increasing layer count, so the memory array density of BiCS5 is more like a 20% improvement over BiCS4, which is still not exactly revolutionary. Western Digital and Kioxia have started limited production and sampling of BiCS5 parts, but BiCS4 is still the overwhelming majority of their NAND bit production and BiCS5 won’t start ramping up seriously until the second half of 2020.

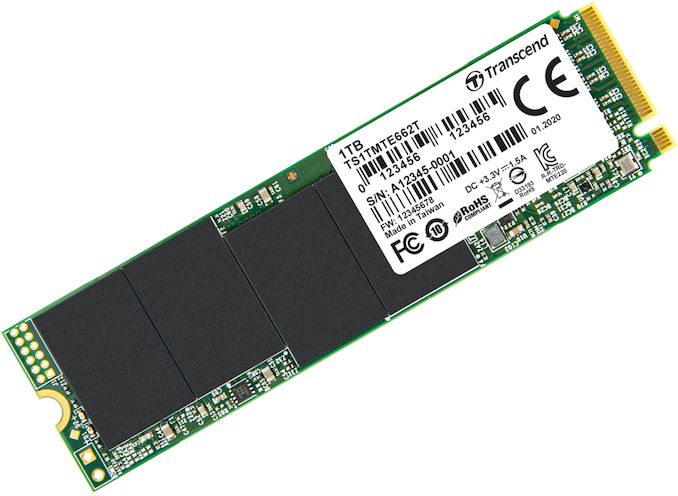

Consumer Storage: WD_BLACK for Gamers

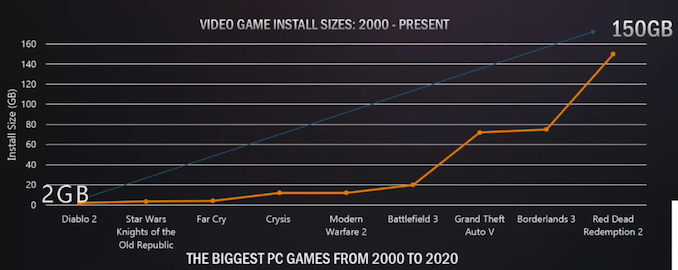

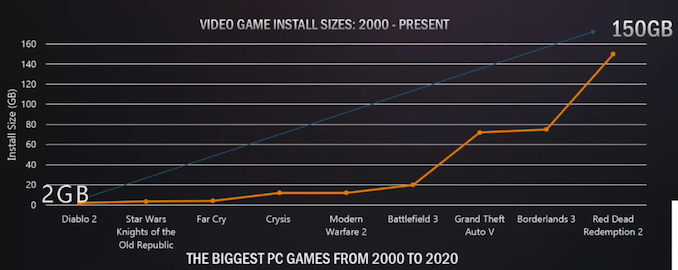

Western Digital’s consumer strategy seems to be mostly business as usual: nothing interesting is happening with their SATA products, and their consumer NVMe drives might not see any further updates until they move to PCIe 4.0, which WD hasn’t said much about. The main exception to the stagnation is their focus on products for gamers. WDC views gamers as a more reliable and less price-sensitive customer base than consumers as a whole. Gamers are certainly one of the largest segments of consumers that still have strong growth in their local storage needs.

Western Digital now has a broad range of gamer-oriented products under their WD Black brand, which has migrated to a new look. Their SN750 NVMe SSD and all the external drives for gamers are now styled as WD_BLACK, and share many of their visual design elements.

With three main families of external WD_BLACK drives plus the internal NVMe SSD and a few extra variants, the WD_BLACK brand is pretty broad. This has somewhat diluted the meaning of WD Black as referring only to the highest performance products in each category, but it means WD has plenty to offer both console and PC gamers. With a new generation of consoles arriving late this year, WD estimates the console storage market to be a multi-exabyte opportunity for 2020 alone.

Datacenter Storage: Almost Ready for SMR to Take Off?

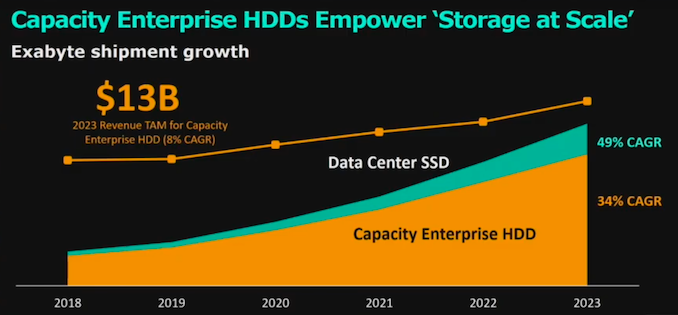

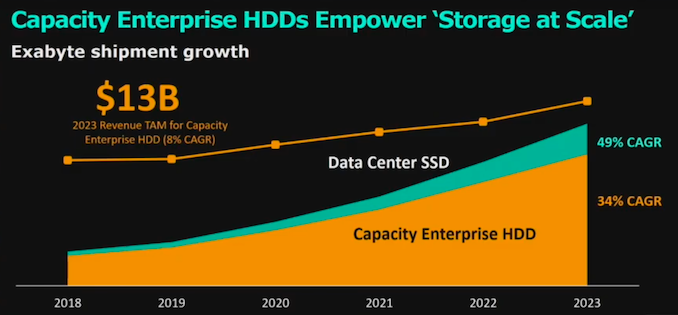

SSDs are an important and lucrative part of Western Digital’s datacenter and enterprise storage lineup, but in terms of bits shipped, their high-capacity hard drives are still way ahead. Both markets are still experiencing healthy growth, and the hard drives won’t be fading into irrelevance anytime soon.

Western Digital’s hard drive R&D is focused almost exclusively on serving their enterprise and datacenter customers. The flash memory business isn’t quite so narrowly focused, but the server market is definitely where Western Digital would prefer to be selling most of their NAND.

Currently, Western Digital’s market share for enterprise SSDs is just under 10%, and their ability to expand was a bit limited during the last quarter due to supply chain issues with components other than their NAND flash. With that issue now out of the way, WDC hopes to double their market share over the next year, with a target of 20%. They have scored several new design wins recently for their enterprise NVMe drives, and one of their new customers is a major hyperscale cloud service provider. Even if they fall short of their market share target, they’ll be making a lot more money in this segment over the next year as prices rise.

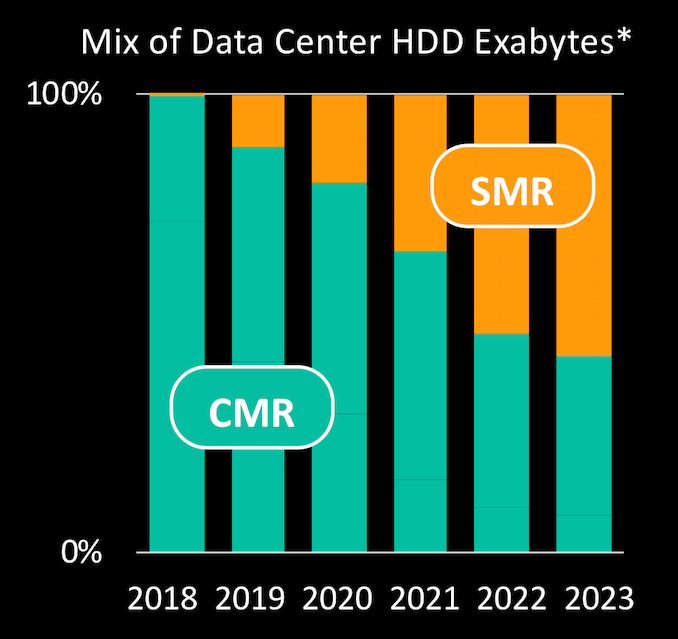

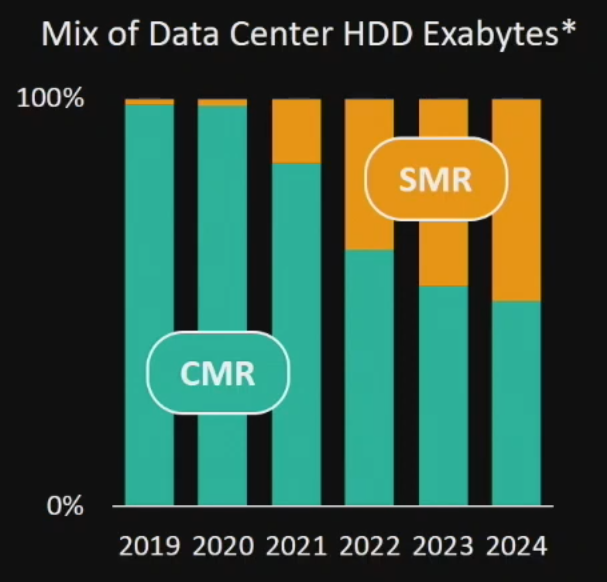

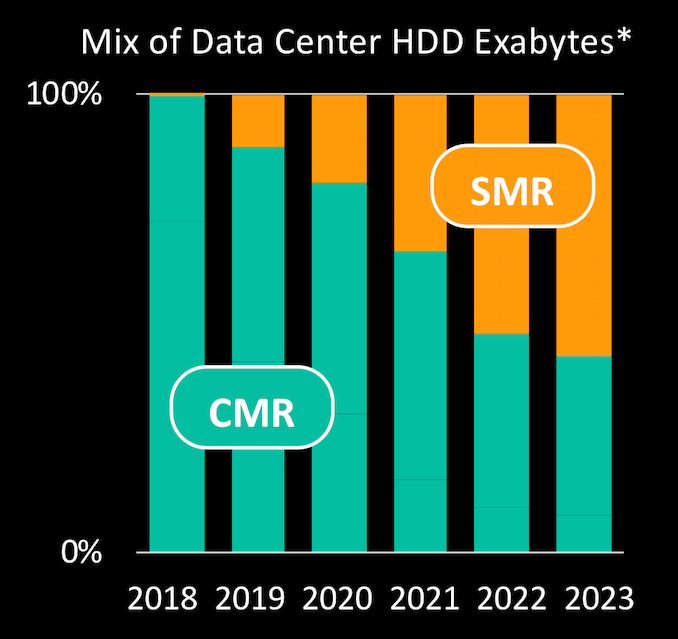

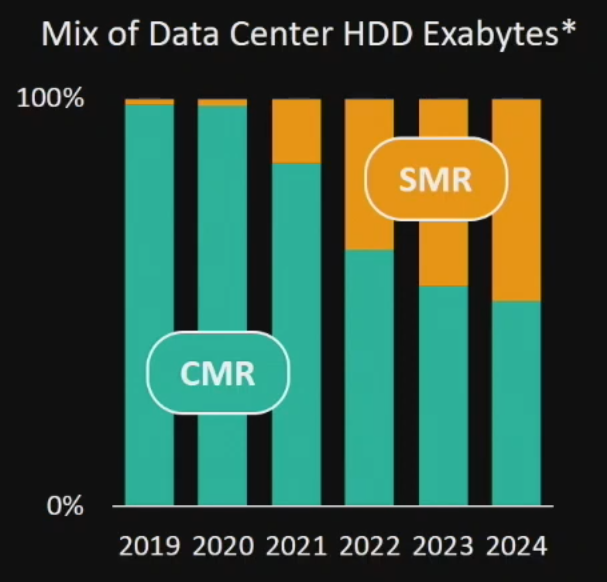

Left: April 2019 projections. Right: January 2020 projections.

SMR hard drives have been available for years, but have not yet seen mass adoption in the datacenter. The performance downsides of SMR are significant, and when SMR only makes the difference between 18TB and 20TB, it’s a tough sell. As recently as last spring, Western Digital was projecting that SMR hard drives would make up a non-trivial fraction of datacenter hard drive exabytes shipped starting in 2019, with growth toward ~50% market share by around 2023. Now Western Digital’s updated projections acknowledge that SMR went basically nowhere in 2019 and will do the same for 2020, but they are adamant that it will begin to take off in 2021. Several things need to change about the current situation before that can happen.

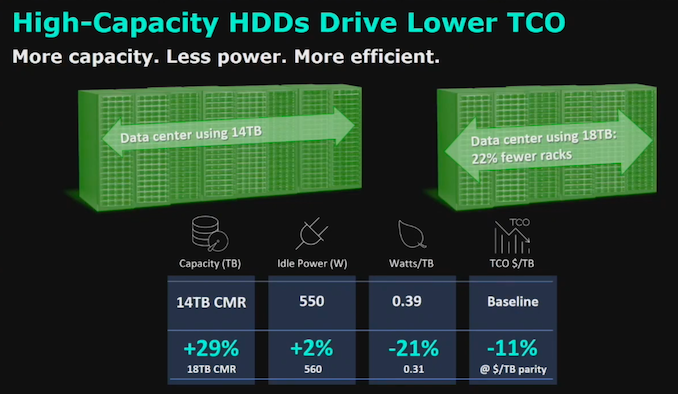

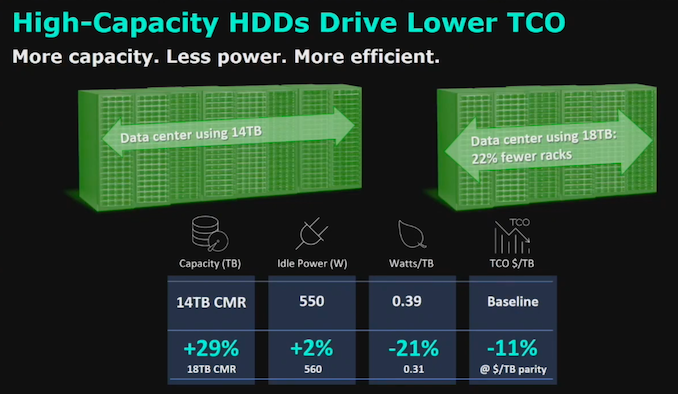

First, SMR hard drives need to have compelling advantages. Western Digital promises that the capacity gap between CMR and SMR drives will widen in the coming years, though they stop short of promising that SMR will have a significant advantage in $/TB. Even at comparable $/TB, larger drives can provide a lower TCO for large deployments by reducing the number of servers needed to hit a certain capacity point. This is an argument Western Digital has been making to push their larger CMR drives as well:

WDC’s estimates for how their 18TB HDDs can offer lower TCO than 14TB at the same $/TB
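
To make the shape of that argument concrete, here is a minimal sketch of the server-count arithmetic. The deployment size (100 PB raw) and the 60 drive bays per server are made-up inputs chosen for illustration, not figures from WDC's estimate, and `servers_needed` is a hypothetical helper name.

```python
import math


def servers_needed(target_tb: float, drive_tb: float, drives_per_server: int) -> int:
    """Servers required to reach target_tb of raw capacity."""
    drives = math.ceil(target_tb / drive_tb)
    return math.ceil(drives / drives_per_server)


# Hypothetical deployment: 100 PB raw capacity, 60 drive bays per server.
TARGET_TB = 100_000
BAYS_PER_SERVER = 60

for drive_tb in (14, 18):
    count = servers_needed(TARGET_TB, drive_tb, BAYS_PER_SERVER)
    print(f"{drive_tb}TB drives: {count} servers")
```

With these assumed inputs the 14TB build needs 120 servers and the 18TB build needs 93. Every server avoided also avoids its chassis, power and rack space, which is where a TCO saving can come from even when the drives themselves cost the same per terabyte.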

The other big change necessary for SMR to succeed is that the performance penalties need to become more manageable. SMR drives are fundamentally unable to support random writes. They need to buffer and write an entire track at a time, and tracks that overlap can only be rewritten in order. Drive-Managed SMR HDDs let the host system pretend those restrictions don’t exist by using large write caches. Western Digital believes the best way to get good performance out of SMR drives is to instead do Host-Managed SMR where software is aware of the restrictions on writes within a shingled zone. This obviously requires major changes to the software stack, and according to WDC this is what’s been holding back adoption of SMR in the datacenter. Western Digital has been putting in a lot of work to help prepare the software ecosystem for SMR hard drives, and they believe that by 2021 they will have major customers ready to roll out SMR-aware software as part of a mass deployment of SMR drives.
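
The write restriction a host-managed drive exposes can be pictured with a toy model of a single zone. This is only an illustrative sketch, not a real drive or kernel API: the `Zone` class, its method names and the block counts are all hypothetical. It captures the core rule that a write must land exactly at the zone's write pointer, and that reclaiming space means resetting the whole zone.

```python
class Zone:
    """Toy model of one sequential-write zone (all sizes in blocks)."""

    def __init__(self, start: int, size: int) -> None:
        self.start = start
        self.size = size
        self.write_pointer = start  # next block that may be written

    def write(self, lba: int, num_blocks: int) -> None:
        # Host-managed rule: writes must begin exactly at the write pointer.
        if lba != self.write_pointer:
            raise ValueError(
                f"non-sequential write at {lba}, expected {self.write_pointer}"
            )
        if lba + num_blocks > self.start + self.size:
            raise ValueError("write crosses the end of the zone")
        self.write_pointer += num_blocks

    def reset(self) -> None:
        # Discards everything in the zone so it can be rewritten from the start.
        self.write_pointer = self.start


zone = Zone(start=0, size=1024)
zone.write(0, 8)   # accepted: starts at the write pointer
zone.write(8, 8)   # accepted: continues sequentially
try:
    zone.write(0, 8)  # rejected: an in-place overwrite is a random write
except ValueError as err:
    print("rejected:", err)
```

Software that is aware of this rule can lay out its data as append-only streams per zone, which is the kind of change to the stack that the paragraph above describes.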

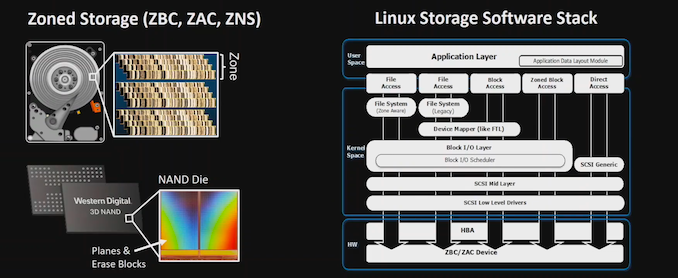

Zoned Storage Initiative

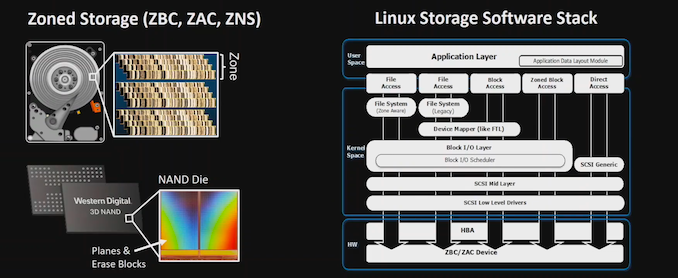

The data access pattern restrictions imposed by SMR hard drives are eerily similar to some of the fundamental challenges of working with NAND flash memory’s small page vs huge erase block structure. For the past year, Western Digital has been promoting their Zoned Storage Initiative that seeks to address both of these challenges at once and allow applications to deal generically with zoned storage devices, be they SSDs or SMR HDDs. The ATA and SCSI command sets already have extensions for host-managed SMR. WDC has been helping NVMe develop the Zoned Namespace (ZNS) extension to provide a similar interface to SSDs.

For hard drives the benefits of SMR are small but due to get bigger. For SSDs, switching to a zoned model can allow for drastically smaller overprovisioning ratios and onboard DRAM, so drives can be cheaper while offering similar performance on many workloads. Properly host-managed IO can also significantly reduce write amplification, allowing for higher effective write endurance.

Western Digital is already shipping the Ultrastar SN340 NVMe SSD that provides some of those advantages without using the upcoming ZNS extension, by having the Flash Translation Layer work with 32kB blocks rather than 4kB. Like a drive-managed SMR HDD, this means the SN340 will allow the host to issue random write commands, but performance for those will suck. Thus, the SN340 is targeted only at the most read-intensive workloads. A ZNS SSD would more likely deal with zone sizes on the order of tens of megabytes rather than 32kB, and would require the host system to ensure its writes are sequential within each zone to avoid the horrible random write performance.
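
A back-of-the-envelope sketch shows why a coarser mapping granularity trades DRAM for random write performance. The 4-byte mapping entry and the 4 TiB capacity are assumptions for illustration (the SN340's actual internals are not described here), and both function names are hypothetical. The second function models only the simplest worst case, an isolated small write with no caching or coalescing.

```python
KIB = 1024
GIB = 1024 ** 3
TIB = 1024 ** 4


def mapping_table_bytes(capacity_bytes: int, unit_bytes: int, entry_bytes: int = 4) -> int:
    """Size of a flat logical-to-physical table with one entry per mapping unit."""
    return (capacity_bytes // unit_bytes) * entry_bytes


def worst_case_rmw_factor(write_bytes: int, unit_bytes: int) -> float:
    """Flash bytes rewritten per host byte for one isolated, aligned small write."""
    return max(unit_bytes, write_bytes) / write_bytes


capacity = 4 * TIB  # hypothetical drive capacity
for unit in (4 * KIB, 32 * KIB):
    table_gib = mapping_table_bytes(capacity, unit) / GIB
    factor = worst_case_rmw_factor(4 * KIB, unit)
    print(f"{unit // KIB}kB units: {table_gib} GiB table, {factor}x rewrite for a 4kB write")
```

Under these assumptions the table shrinks by 8x when moving from 4kB to 32kB units, while an isolated 4kB random write may force eight times as much data to be rewritten, which is consistent with positioning such a drive for read-intensive workloads.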

The NVMe Zoned Namespace extension is likely to be ratified this year. Western Digital’s software work in this space has been all open-source, focusing on support in the Linux kernel and developing the necessary tools and libraries for a zoned storage ecosystem.

Source: AnandTech – Western Digital Roadmap Updates: Energy Assisted Recording, Multi-Stage Actuators, Zoned Storage