While NVIDIA’s annual GPU Technology Conference has been extensively dialed back and the bulk of NVIDIA’s announcements tabled for another day, as it turns out, the company still has an announcement up their sleeve this week. And a gaming-related announcement, no less. This morning NVIDIA is finally taking the wraps off of their DLSS 2.0 technology, which the company is shipping as a major update to their earlier AI-upscaling tech.

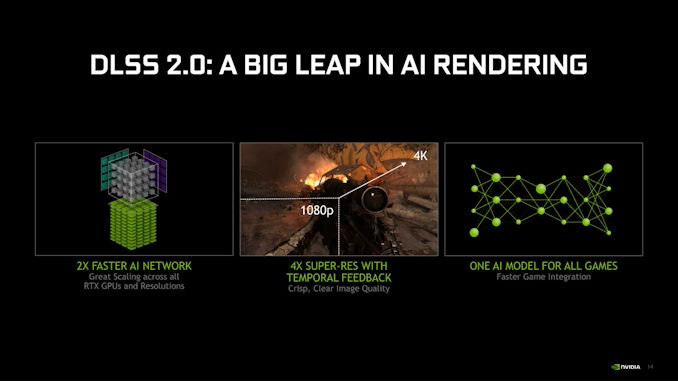

Responding to both competitive pressure and the realization of their own technology limitations, the latest iteration of NVIDIA’s upscaling technology is a rather significant overhaul of the technique. While NVIDIA is still doing AI upscaling at a basic level, DLSS 2.0 is no longer a pure upscaler; NVIDIA is now essentially combining it with temporal anti-aliasing. The result, NVIDIA is promising, is both better image quality than DLSS 1.0 and faster integration within individual games, as per-game training has been done away with.

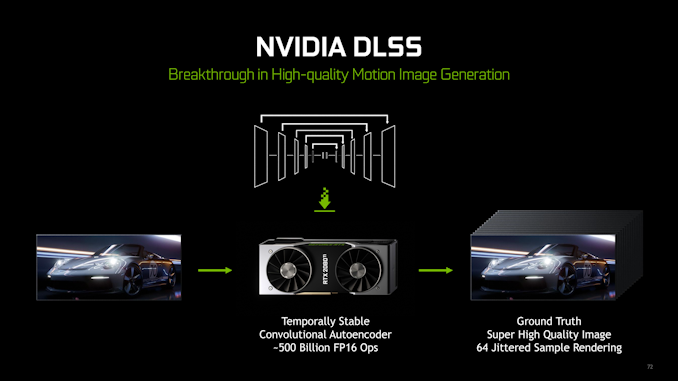

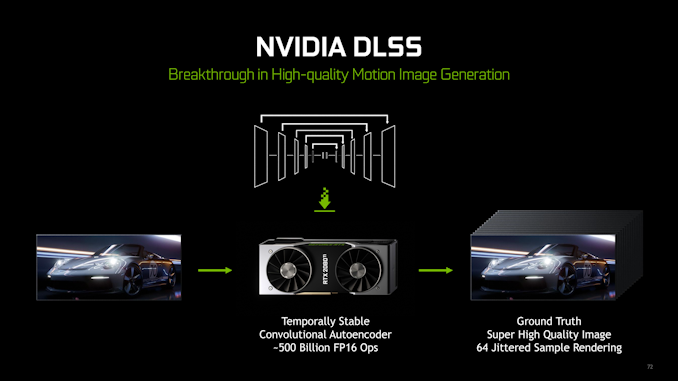

As a quick refresher, Deep Learning Super Sampling (DLSS) was originally released around the launch of the Turing (GeForce RTX 20 series) generation in the fall of 2018. DLSS was NVIDIA’s first major effort to use their rapidly growing experience in AI programming and AI hardware to apply the technology to image quality in video games. With all of their GeForce RTX cards shipping with tensor cores, what better way to put them to use than to improve image quality in games in a semi-abstracted manner? It was perhaps a bit of a case of a hammer in search of a nail, but the fundamental idea was reasonable, especially as 4K monitors get cheaper and GeForce RTX 2080 Tis do not.

Unfortunately, DLSS 1.0 never quite lived up to its promise. NVIDIA took a very image-centric approach to the process, relying on an extensive training program that involved creating a different neural network for each game at each resolution, training the networks on what a game should look like by feeding them ultra-high resolution, 64x anti-aliased images. In theory, the resulting networks should have been able to recognize how a more detailed world should work, and produce cleaner, sharper images accordingly.

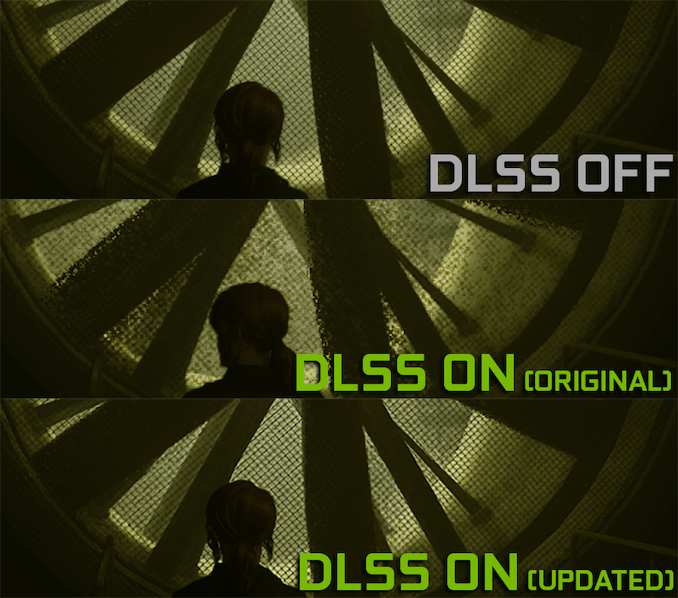

Sometimes this worked well. More often the results were mixed. NVIDIA primarily pitched the technology as a way to reduce the rendering costs of higher resolutions – that is, rendering a game at a lower resolution and then upscaling – with a goal of matching a game’s native resolution with temporal anti-aliasing. The end results would sometimes meet or beat this goal, and at other times an image would still be soft and lacking detail, revealing its lower-resolution origins. And all the while it took a lot of work to add DLSS to a game: every game and every resolution supported required training yet another neural network. Meanwhile, a simple upscale + sharpening filter could deliver a not-insignificant increase in perceived image quality with only a fraction of the work and GPU usage.

Enter DLSS 2.0

While DLSS 1.0 was pure, in retrospect it was perhaps a bit naïve. As NVIDIA plainly states now, DLSS 1.0 was hard to work with because it hinged on the idea that video games are deterministic – that everything would behave in a pre-defined and predictable manner. In reality games aren’t deterministic, and even if AI characters do the same thing every time, second-order effects like particles can be off doing their own thing. As a result it was difficult to train DLSS 1.0 networks, which needed this determinism to improve, let alone apply them to games.

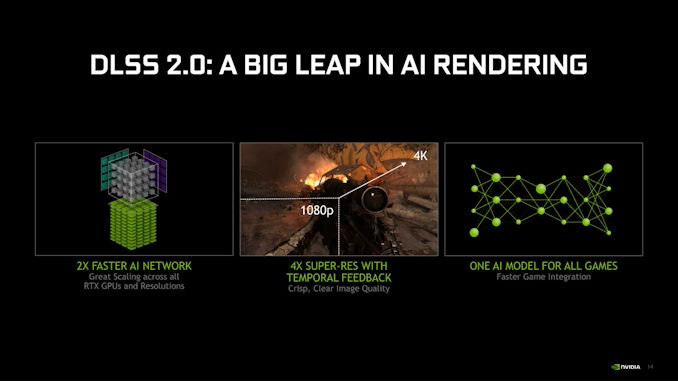

So for their second stab at AI upscaling, NVIDIA is taking a different tack. Instead of relying on individual, per-game neural networks, NVIDIA has built a single generic neural network that they are optimizing the hell out of. And to make up for the information lost by giving up per-game networks, the company is integrating real-time motion vector information from the game itself, a fundamental aspect of temporal anti-aliasing (TAA) and similar techniques. The net result is that DLSS 2.0 behaves a lot more like a temporal upscaling solution, which makes it dumber in some ways, but also smarter in others.

The single biggest change here is of course the new generic neural network. Looking to remove the expensive per-game training and the many (many) problems that non-deterministic games presented in training, NVIDIA has moved to a single generic network for all games. This newer neural network is based on a fully synthetic training set rather than individual games, which in turn is fully deterministic, allowing NVIDIA to extensively train the new network in exactly the fashion they need for it to iterate and improve over generations. According to NVIDIA, this new network is also faster to execute on the GPU, reducing the overhead from using DLSS to begin with.

Besides eliminating per-game training times and hassling developers on determinism, the other upshot for NVIDIA is that the generic network gives them more resolution scaling options. NVIDIA can now upscale frames by up to 4x in resolution – from 1080p input to 4K – both allowing DLSS 2.0 to be used with a wider range of input/output resolutions, and allowing it to be more strongly used, for lack of a better term. DLSS 1.0, by contrast, generally targeted a 2x upscale.

This new flexibility also means that NVIDIA is now offering multiple DLSS quality modes, trading off the internal rendering resolution (and thus image quality) for more performance. Those modes are performance, balanced, and quality.

Otherwise, the actual network training process hasn’t entirely changed. NVIDIA is still training against 16K images, with the goal of teaching the neural network as much about quality as possible. And this is still being executed as neural networks via the tensor cores as well, though I’m curious to see if DLSS 2.0 pins quite as much work to the tensor cores as 1.0 did before it.

The catch to DLSS 2.0, however, is that this still requires game developer integration, and in a much different fashion. Because DLSS 2.0 relies on motion vectors to re-project the prior frame and best compute what the output image should look like, developers now need to provide those vectors to DLSS. As many developers are already doing some form of temporal AA in their games, this information is often available within the engine, and merely needs to be exposed to DLSS. Nonetheless, it means that DLSS 2.0 still needs to be integrated on a per-game basis, even if the per-game training is gone. It is not a pure, end-of-chain post-processing solution like FXAA or image sharpening combined with upscaling.
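To illustrate why those motion vectors matter, here is a minimal sketch of generic TAA-style history reprojection. It is not NVIDIA's algorithm: DLSS 2.0 feeds this kind of data into a neural network, whereas this example uses a plain fixed-weight blend. The function name `reproject_and_blend`, the `alpha` weight, and the integer per-pixel `(dx, dy)` motion format are all assumptions made for illustration.

```python
def reproject_and_blend(prev_frame, curr_frame, motion, alpha=0.1):
    """Blend the current frame with the motion-reprojected previous frame.

    prev_frame, curr_frame: 2D lists of floats (grayscale), same size.
    motion: 2D list of (dx, dy) integer pixel offsets describing how far the
            content at each current pixel moved since the previous frame.
    alpha: weight of the current frame; the rest comes from history.
    (Hypothetical helper for illustration, not part of any DLSS API.)
    """
    height = len(curr_frame)
    width = len(curr_frame[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = motion[y][x]
            px, py = x - dx, y - dy  # where this content was last frame
            if 0 <= px < width and 0 <= py < height:
                history = prev_frame[py][px]
                out[y][x] = alpha * curr_frame[y][x] + (1.0 - alpha) * history
            else:
                # No valid history (content came from off-screen): current only.
                out[y][x] = curr_frame[y][x]
    return out


# A bright pixel moves one step to the right between frames.
prev_frame = [[0.0, 1.0, 0.0, 0.0]]
curr_frame = [[0.0, 0.0, 1.0, 0.0]]

# With correct motion vectors, history lines up with the current frame.
aligned = reproject_and_blend(prev_frame, curr_frame, [[(1, 0)] * 4], alpha=0.5)

# With no motion information, the old position bleeds through as a ghost.
ghosted = reproject_and_blend(prev_frame, curr_frame, [[(0, 0)] * 4], alpha=0.5)
```

In this toy case the aligned result keeps the pixel fully bright at its new position, while the version without motion data smears it across both positions. That is the basic reason a temporal technique cannot work as a pure end-of-chain filter: it needs the engine to say where things moved.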

Past that, it should be noted that NVIDIA is still defining DLSS resolutions the same way they were before, which is to say, they are talking about the output resolution rather than the input resolution. So 1080p Quality mode, for example, would generally mean the internal rendering resolution is roughly one-half the output resolution (about 44% of the pixels), or 1280×720 being upscaled to 1920×1080. And Performance mode, I’m told, would be a 4x upscale.
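The arithmetic behind these figures is easy to check. The sketch below assumes that upscale factors are expressed as a ratio of pixel counts, which is consistent with 1080p to 4K being described as 4x. The helper names `pixel_ratio` and `internal_resolution` are hypothetical, and no scale factor is assumed for Balanced mode, since none has been given.

```python
import math


def pixel_ratio(in_res, out_res):
    """Output pixels produced per rendered input pixel."""
    in_w, in_h = in_res
    out_w, out_h = out_res
    return (out_w * out_h) / (in_w * in_h)


def internal_resolution(out_res, pixel_scale):
    """Internal render resolution for an output resolution and pixel-count scale.

    pixel_scale is the ratio of output pixels to rendered pixels, so each
    axis shrinks by sqrt(pixel_scale).
    """
    out_w, out_h = out_res
    axis_scale = math.sqrt(pixel_scale)
    return round(out_w / axis_scale), round(out_h / axis_scale)


# 1080p -> 4K quadruples the pixel count (2x per axis).
print(pixel_ratio((1920, 1080), (3840, 2160)))   # 4.0
# 720p -> 1080p is 2.25x the pixels (1.5x per axis).
print(pixel_ratio((1280, 720), (1920, 1080)))    # 2.25
# Going the other way: what gets rendered for a given output and scale.
print(internal_resolution((3840, 2160), 4.0))    # (1920, 1080)
print(internal_resolution((1920, 1080), 2.25))   # (1280, 720)
```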

Meanwhile, it goes without saying that the subject of image upscaling and enhancements has been a hot topic since the introduction of DLSS, as well as AMD’s more recent efforts to counter it with Radeon Image Sharpening. So NVIDIA is hitting the ground running, as it were, on promoting DLSS 2.0.

In fact “promotion” is perhaps the key word for today. While NVIDIA is only finally announcing DLSS 2.0 today and outlining how it works, the company has already been shipping it to game developers for a bit. Both Deliver Us the Moon and Wolfenstein: Youngblood are already shipping with DLSS 2.0. And now that NVIDIA is happy with the state of the now field-tested technology, they are moving on to rolling it out to gamers and game developers as a whole, including integrating it into Unreal Engine 4.

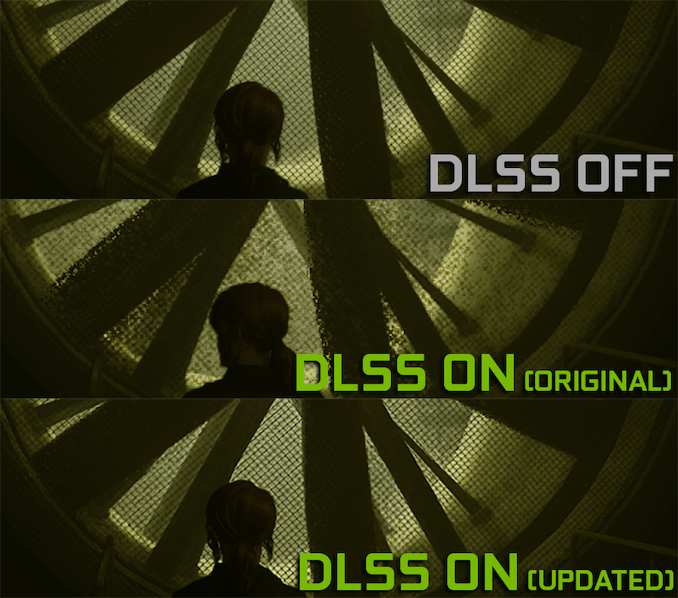

Along with the aforementioned games, both Control and MechWarrior 5 are getting DLSS 2.0 updates. Control in particular will be an interesting case, as it’s the only game in this set that also had a DLSS 1.x implementation, meaning that it can be used as a control to judge the image quality differences. Even NVIDIA is going that far to demonstrate some of the quality improvements.

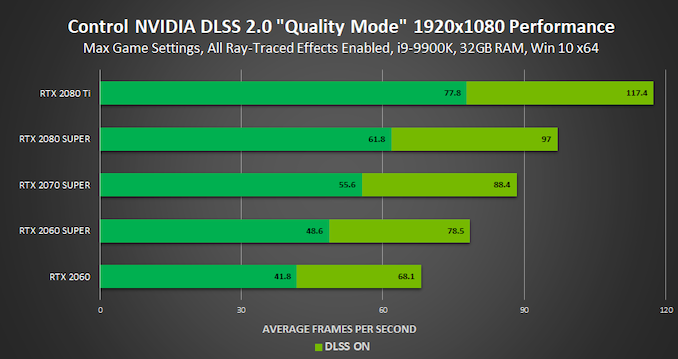

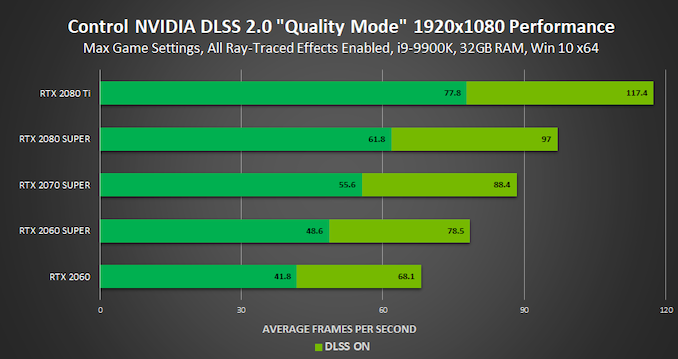

As for performance, NVIDIA is generally promising performance figures similar to those of DLSS 1.0. This means the comparable Quality mode may be a bit slower than DLSS 1.0 in games like Control, but overall that Quality mode and its one-half rendering resolution should deliver significant speed gains over native resolution games. All the while the resulting image quality should be better than what DLSS 1.0 could deliver. NVIDIA is even touting DLSS 2.0 as offering better image quality than native resolution games, though setting aside for the moment the subjective nature of image quality, it may not be quite an apples-to-apples comparison depending on what post-processing effects developers are using (e.g. replacing a very blurry TAA filter with DLSS 2.0).

At any rate, DLSS 2.0 is officially available as of today. Updates for Control and MechWarrior 5 will be shipping this week, and if NVIDIA gets its way, more games will soon follow.

Source: AnandTech – NVIDIA Intros DLSS 2.0: Ditches Per-Game Training, Adds Motion Vectors for Better Quality