Over the last few years, we’ve seen a lot of new technologies in the mobile market trying to address the problem of gathering depth information with a camera system. There have been various solutions from different companies, ranging from IR dot-projectors and IR cameras (structured light) and stereoscopic camera systems to the more recent dedicated time-of-flight sensors. One big issue with these implementations is that they all use quite exotic hardware that can significantly increase a device’s bill of materials, as well as influence its industrial design choices.

Airy3D is a smaller, newer company that to date has only been active on the software front, providing various imaging solutions to the market. The company is now ready to transition to a hybrid business model, describing itself as a hardware-enabled software company.

The company’s main claim to fame right now is the “DepthIQ” platform, a hardware-software solution that promises to bring high-quality depth sensing to single cameras at a much lower cost than any other alternative.

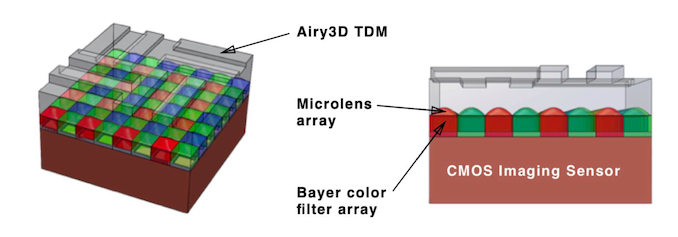

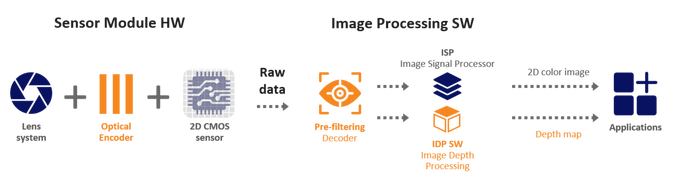

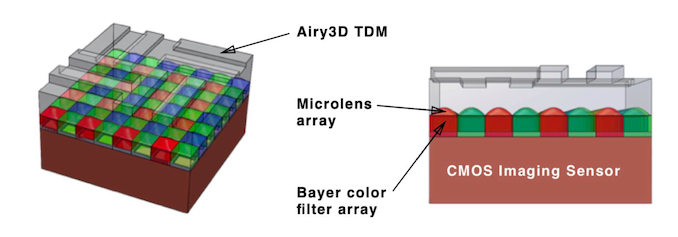

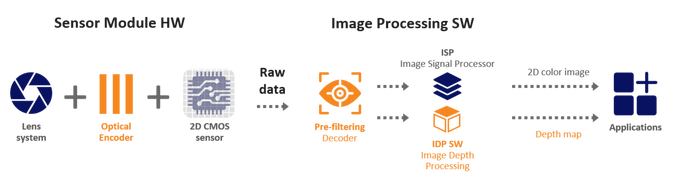

At the heart of Airy3D’s innovation is an added piece of hardware to existing sensors in the market, called a transmissive diffraction mask, or TDM. This TDM is an added transmissive layer manufactured on top of the sensor, shaped with a specific profile pattern, that is able to encode the phase and direction of light that is then captured by the sensor.

The TDM in essence imposes a diffraction pattern (Talbot effect) on the resulting picture that differs based on the distance of a captured object. The neat thing that Airy3D is able to do here is employ advanced software algorithms that decode this pattern and transform the raw 2D image capture into a 3D depth map, as well as a 2D image with the diffraction pattern compensated out.
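For a sense of the length scales involved in the Talbot effect, here is a minimal sketch that computes the Talbot length, the distance behind a periodic grating at which its pattern repeats. It uses the standard textbook formulas for light in free space. The grating period and wavelength below are purely illustrative assumptions; Airy3D has not disclosed the TDM's actual period or its height above the pixels, and a real sensor stack would also involve the refractive index of the materials.

```python
import math


def talbot_length_um(period_um: float, wavelength_um: float) -> float:
    """Talbot length (Rayleigh's exact expression) in micrometres.

    Only defined when the wavelength does not exceed the grating period.
    """
    ratio = wavelength_um / period_um
    if ratio > 1.0:
        raise ValueError("wavelength must not exceed the grating period")
    return wavelength_um / (1.0 - math.sqrt(1.0 - ratio ** 2))


def talbot_length_paraxial_um(period_um: float, wavelength_um: float) -> float:
    """Paraxial approximation 2*a^2/lambda, valid when period >> wavelength."""
    return 2.0 * period_um ** 2 / wavelength_um


if __name__ == "__main__":
    # Hypothetical values for illustration only, not Airy3D's design figures.
    period = 2.0       # grating period in micrometres (assumed)
    wavelength = 0.5   # wavelength in micrometres (assumed)
    print(f"exact:    {talbot_length_um(period, wavelength):.2f} um")
    print(f"paraxial: {talbot_length_paraxial_um(period, wavelength):.2f} um")
```

The two results diverge as the period approaches the wavelength, which is one reason the simple approximation should not be read as a description of the actual TDM geometry.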

Airy3D’s role in the manufacturing chain of a DepthIQ-enabled camera module is designing the TDM grating, which it then licenses out to sensor manufacturers, cooperating with them as they integrate it into their sensors during production. In essence, the company would partner with any of the big sensor vendors such as Sony Semiconductor, Samsung LSI or Omnivision in order to produce a complete solution.

I was curious whether the company had any limits in terms of the resolution the TDM can be manufactured at, since many of today’s camera sensors employ 0.8µm pixel pitches and we’re even starting to see 0.7µm sensors coming to market. The company sees no issues in scaling the TDM grating down to 0.5µm – so there’s still a ton of leeway for future sensor generations for years to come.

Adding a transmissive layer on top of the sensor naturally doesn’t come for free, and there is a loss in sharpness. The company is quoting MTF sharpness reductions of around 3.5%, as well as a reduction in the sensitivity of the sensor due to the TDM, in the range of 3-5% across the spectral range.
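To put the quoted sensitivity figures in photographic terms, the small sketch below converts a fractional light loss into stops of exposure and into the exposure-time multiplier needed to compensate. It assumes the 3-5% figure applies uniformly to the captured signal; the function names are illustrative and not part of any Airy3D tooling. Under that assumption the loss works out to roughly 0.04 to 0.07 stops.

```python
import math


def stops_lost(sensitivity_loss: float) -> float:
    """Exposure shortfall in stops for a fractional light loss (0.05 = 5%)."""
    if not 0.0 <= sensitivity_loss < 1.0:
        raise ValueError("sensitivity_loss must be in [0, 1)")
    return -math.log2(1.0 - sensitivity_loss)


def exposure_compensation(sensitivity_loss: float) -> float:
    """Multiplier on exposure time (or gain) that restores the original signal."""
    if not 0.0 <= sensitivity_loss < 1.0:
        raise ValueError("sensitivity_loss must be in [0, 1)")
    return 1.0 / (1.0 - sensitivity_loss)


if __name__ == "__main__":
    # The 3% and 5% endpoints are the range quoted by the company.
    for loss in (0.03, 0.05):
        print(
            f"{loss:.0%} loss: {stops_lost(loss):.3f} stops, "
            f"x{exposure_compensation(loss):.3f} exposure to compensate"
        )
```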

Camera samples without, and with the TDM

The company shared with us some samples of a camera system using the same sensor, once without the TDM, and once with the TDM employed. Both pictures use the exact same exposure and ISO settings. In terms of sharpness, I wouldn’t say there are any major, immediately noticeable differences, but we do see that the image with the TDM employed is darker, a result of the reduced quantum efficiency (QE) of the sensor.

The software processing is said to be light-weight compared to other depth-sensing solutions, and can be done on a CPU, GPU, DSP or even a small FPGA.

The depth discernment the solution is able to achieve from a single image capture is quite astounding, and there’s essentially no limit to the resolution that can be achieved, as it scales with the sensor resolution.

More complex depth sensing solutions can add anywhere from $15 to $30 to the BOM of a device. Airy3D expects the technology to see its biggest adoption in the low- and mid-range, as the higher end is usually able to absorb the cost of other solutions and is also unlikely to be willing to make any sacrifice in image quality on the main camera sensors. A cheaper device, for example, would be able to have depth-sensing face ID unlocking with just a simple front camera sensor, which would represent notable cost savings.

Airy3D says they have customers lined up for the technology, and see a lot of potential for it in the future. It’s an extremely interesting way to achieve depth sensing given it’s a passive hardware solution that integrates into an existing camera sensor.

Source: AnandTech – Airy3D’s DepthIQ: A Cheap Camera Depth Sensing Solution