Western Digital originally launched their Red lineup of hard disk drives for network-attached storage devices back in 2012. The product stack later expanded to serve professional NAS units with the Red Pro. These drives have traditionally offered very predictable performance characteristics, thanks to their use of conventional magnetic recording (CMR). More recently, with the advent of shingled magnetic recording (SMR), WD began offering drive-managed SMR drives in the direct-attached storage (DAS) space for consumers, and host-managed SMR drives for datacenters.

Towards the middle of 2019, WD silently introduced WD Red hard drives (2-6TB capacities) based on drive-managed SMR. There was no fanfare or press release, and the drives' arrival in the market went unnoticed by the tech press. Almost a year after the drives appeared on shelves, complaints from customers dissatisfied with the performance of the SMR drives in their NAS units grew too loud for WD to ignore. In fact, as soon as we heard about the widespread use of SMR in certain WD Red capacities, we took those drives off our recommended HDDs list.

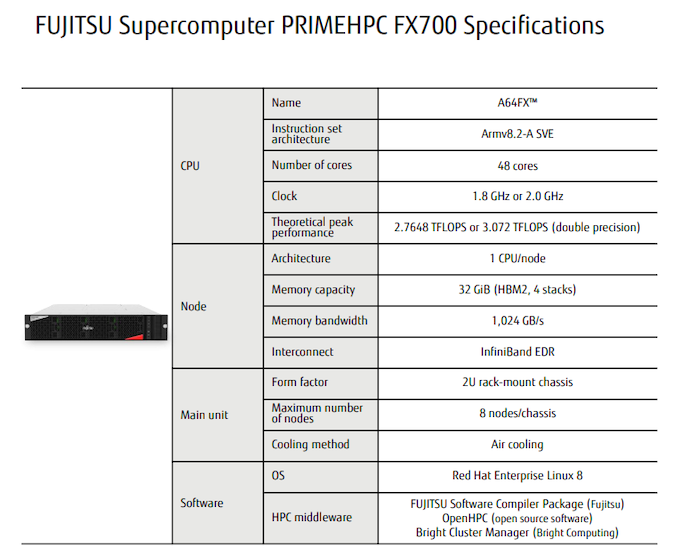

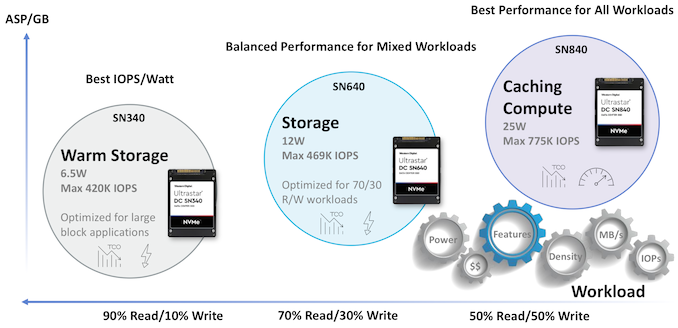

After starting to make amends towards the end of April 2020, Western Digital has now gone one step further and cleaned up its NAS drive branding to make it clear which drives are SMR-based. In the reorganized Red portfolio, the vanilla WD Red family becomes a pure SMR lineup. Meanwhile, a new brand, the Red Plus, will encompass the 5400 RPM CMR hard drives that the WD Red brand was previously known for. Lastly, the Red Pro lineup remains unchanged, with 7200 RPM CMR drives for high-performance configurations.

WD NAS Hard Drives for Consumer / SOHO / SMB Systems (Source: Western Digital Blog)

While Western Digital (and consumers) should never have ended up in this situation in the first place, it’s nonetheless an important change to WD’s lineup that restores some badly needed clarity to their product lines. The technical and performance differences between CMR and SMR drives are significant, and using the two interchangeably in the Red line – a lineup that previously didn’t contain any SMR drives at all – was always going to be a problem.

In particular, a look at various threads on NAS forums indicates that most owners of these SMR Red drives ran into problems with certain RAID and ZFS operations. Even a typical consumer NAS with just 1-8 bays may see RAID rebuilds, RAID expansions, and regular scrubbing operations. The nature of drive-managed SMR makes it unsuitable for these workloads: sustained writes, such as those during a rebuild or expansion, can exhaust the drive's internal cache, at which point the drive has to rewrite entire shingled bands and throughput can drop sharply.

It was also never clear what WD hoped to achieve by using SMR for lower-capacity drives. Certain capacity points, such as the 2TB and 4TB, have one fewer platter in the SMR version than in the CMR version, which should lower production costs. But WD should have weighed those savings against the hit to drive performance in certain NAS configurations, and the resulting damage to the reputation of Red drives among consumers.

In any case, it seems probable that the lower-capacity SMR WD Red drives were launched largely as a beta test ahead of the eventual launch of SMR-based high-capacity drives. From a marketing perspective, launching these drives under a different brand (say, Red Archive) instead of polluting the WD Red name would perhaps have been the better choice.

As SMR became entrenched in the consumer space, it was perhaps inevitable that NAS drives using the technology would appear in the market. However, in the process, WD has missed a golden opportunity to educate consumers on the situations where SMR drives make sense in NAS units.

For our part, while the updated branding is a significant improvement, we do not fully agree with WD’s claim that SMR Reds are suitable for SOHO NAS units. This may lead non-tech-savvy consumers to use them in RAID configurations, including in commercial off-the-shelf (COTS) NAS units such as those from QNAP and Synology. Our recommendation is to use these SMR Reds for archival purposes (an alternative to tape backups for the home – not that consumers are doing tape backups today!), or in WORM (Write-Once Read-Many) scenarios with a parity-less configuration such as RAID1 or RAID10. It is not advisable to subject these drives to RAID rebuilds or scrubbing operations, and ZFS is out of the question. The upside, at least, is that users contemplating ZFS are, in most cases, tech-savvy enough to know the pitfalls of SMR for their application.

All said, as we wrote earlier, WD has one of the better SMR implementations in the DAS space. But direct-attached storage usage gives SMR drives plenty of idle time to handle their ‘garbage-collection’ needs. Consumer NAS workloads, including background activity that the user does not explicitly trigger, may not leave the drives the same breathing room.

Consumers who would have considered the WD Red lineup prior to the SMR fiasco can now focus on the Red Plus drives. We do not advise consumers to buy the vanilla Red (SMR) unless they are aware of what they are signing up for. To that end, consumers need to become well-educated about the use cases for such drives. Seagate’s 8TB Archive HDD was launched in 2015, but didn’t meet with much success in the consumer market for that very reason (and had to be repurposed for DAS applications). The HDD vendors’ marketing teams have their work cut out for them if high-capacity SMR drives for consumer NAS systems are on their product roadmaps.

Source: AnandTech – Western Digital Announces Red Pro Plus HDDs, Cleans Up Red SMR Mess with Plus Branding