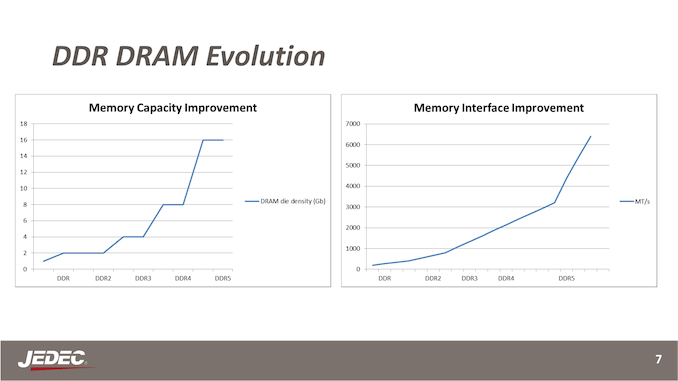

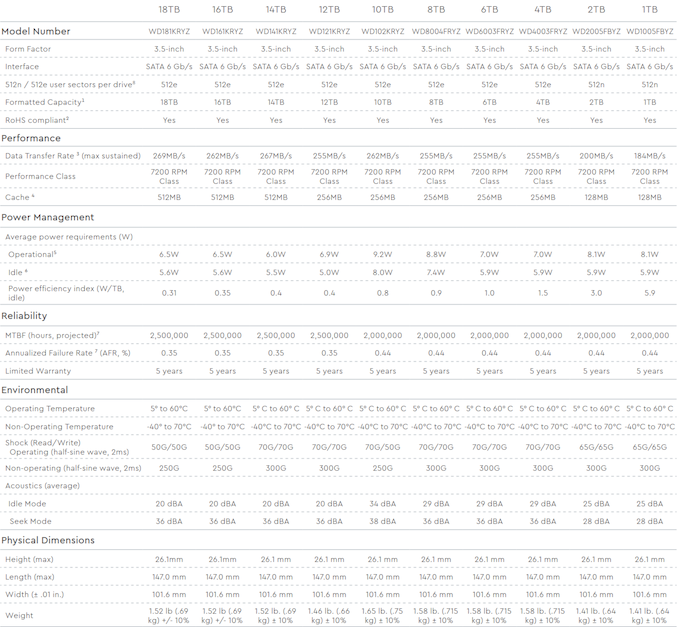

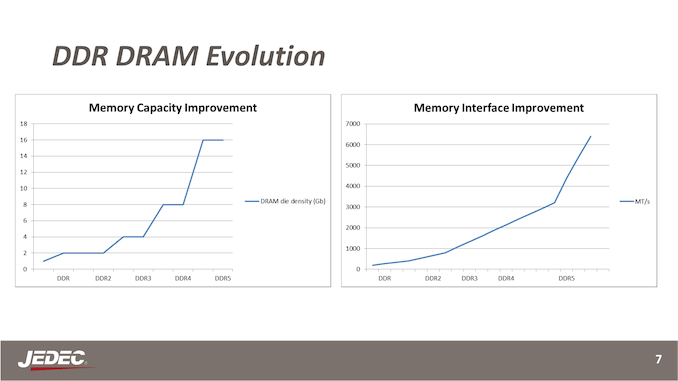

Marking an important milestone in computer memory development, today the JEDEC Solid State Technology Association is releasing the final specification for its next mainstream memory standard, DDR5 SDRAM. The latest iteration of the DDR standard that has been driving PCs, servers, and everything in-between since the late 90s, DDR5 once again extends the capabilities of DDR memory, doubling the peak memory speeds while greatly increasing memory sizes as well. Hardware based on the new standard is expected in 2021, with adoption starting at the server level before trickling down to client PCs and other devices later on.

Originally planned for release in 2018, the DDR5 specification is arriving a bit behind JEDEC’s original schedule, but that doesn’t diminish the importance of the new memory specification. Like every iteration of DDR before it, the primary focus for DDR5 is once again on improving memory density as well as speeds. JEDEC is looking to double both, with maximum memory speeds set to reach at least 6.4Gbps while the capacity for a single, packed-to-the-rafters LRDIMM will eventually be able to reach 2TB. All the while, there are several smaller changes to either support these goals or to simplify certain aspects of the ecosystem, such as on-DIMM voltage regulators as well as on-die ECC.

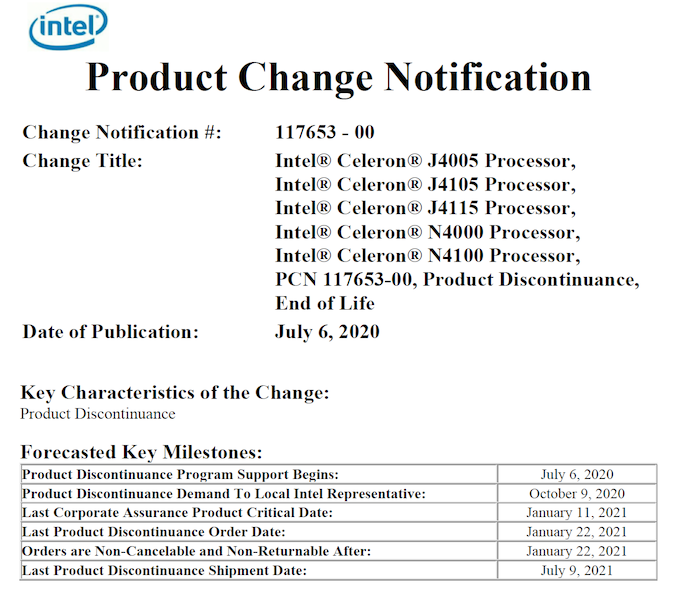

| JEDEC DDR Generations | DDR5 | DDR4 | DDR3 | LPDDR5 |
|---|---|---|---|---|
| Max Die Density | 64 Gbit | 16 Gbit | 4 Gbit | 32 Gbit |
| Max UDIMM Size | 128 GB | 32 GB | 8 GB | N/A |
| Max Data Rate | 6.4 Gbps | 3.2 Gbps | 1.6 Gbps | 6.4 Gbps |
| Channels | 2 | 1 | 1 | 1 |
| Width (Non-ECC) | 64-bits (2×32) | 64-bits | 64-bits | 16-bits |
| Banks (Per Group) | 4 | 4 | 8 | 16 |
| Bank Groups | 8/4 | 4/2 | 1 | 4 |
| Burst Length | BL16 | BL8 | BL8 | BL16 |
| Voltage (Vdd) | 1.1v | 1.2v | 1.5v | 1.05v |
| Vddq | 1.1v | 1.2v | 1.5v | 0.5v |

Going Bigger: Denser Memory & Die-Stacking

We’ll start with a brief look at capacity and density, as this is the most straightforward change to the standard compared to DDR4. Designed to span several years (if not longer), DDR5 will allow for individual memory chips up to 64Gbit in density, which is 4x higher than DDR4’s 16Gbit density maximum. Combined with die stacking, which allows up to 8 dies to be stacked as a single chip, a 40-element LRDIMM can reach an effective memory capacity of 2TB. Or for the more humble unbuffered DIMM, this would mean we’ll eventually see DIMM capacities reach 128GB for your typical dual rank configuration.
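The 2TB and 128GB figures fall out of simple multiplication. The sketch below assumes that 32 of the LRDIMM’s 40 elements carry data and the remaining 8 carry ECC, and that the dual-rank UDIMM is built from x8 chips (8 per 64-bit rank, 16 in total); both layouts are illustrative assumptions rather than anything the specification mandates.

```python
GBIT_PER_GBYTE = 8


def package_capacity_gb(die_gbit: int, dies_per_stack: int) -> float:
    """Capacity of one DRAM package (one 'chip' on the DIMM), in GB."""
    return die_gbit * dies_per_stack / GBIT_PER_GBYTE


def dimm_capacity_gb(die_gbit: int, dies_per_stack: int, data_packages: int) -> float:
    """Usable DIMM capacity in GB; ECC packages are not counted."""
    return package_capacity_gb(die_gbit, dies_per_stack) * data_packages


# Assumption: of the LRDIMM's 40 packages, 32 hold data and 8 hold ECC,
# and every package is an 8-high stack of 64 Gbit dies.
lrdimm_gb = dimm_capacity_gb(die_gbit=64, dies_per_stack=8, data_packages=32)

# Assumption: a dual-rank, non-ECC UDIMM built from x8 chips,
# i.e. 8 single-die packages per 64-bit rank, 16 in total.
udimm_gb = dimm_capacity_gb(die_gbit=64, dies_per_stack=1, data_packages=16)

# Same UDIMM layout with DDR4's 16 Gbit maximum die, for comparison.
ddr4_udimm_gb = dimm_capacity_gb(die_gbit=16, dies_per_stack=1, data_packages=16)

print(f"DDR5 LRDIMM: {lrdimm_gb:.0f} GB")      # 2048 GB = 2 TB
print(f"DDR5 UDIMM:  {udimm_gb:.0f} GB")       # 128 GB
print(f"DDR4 UDIMM:  {ddr4_udimm_gb:.0f} GB")  # 32 GB
```

The DDR4 line reproduces the 32 GB maximum UDIMM size from the table, which is a useful sanity check on the assumed layout.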

Of course, the DDR5 specification’s peak capacities are meant for later in the standard’s lifetime, when chip manufacturing catches up to what the spec allows. To start things off, memory manufacturers will be building their DIMMs from 8Gbit and 16Gbit chips, the densities attainable today. So while the speed improvements from DDR5 will be fairly immediate, the capacity improvements will be more gradual as manufacturing densities improve.

Going Faster: One DIMM, Two Channels

The other half of the story for DDR5 is about once again increasing memory bandwidth. Everyone wants more performance (especially with DIMM capacities growing), and unsurprisingly, this is where a lot of work was put into the specification in order to make this happen.

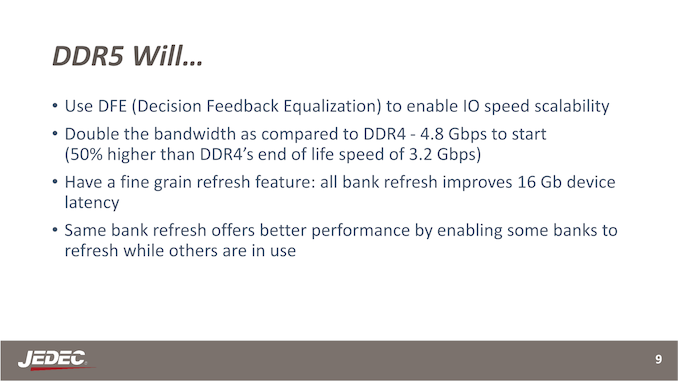

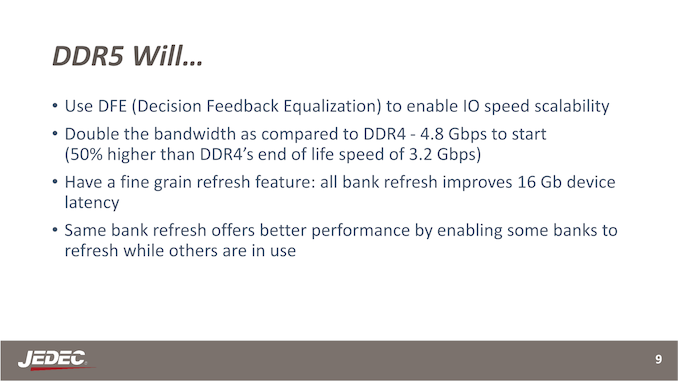

For DDR5, JEDEC is looking to start things off much more aggressively than usual for a DDR memory specification. Typically a new standard picks up from where the last one left off, as with the DDR3 to DDR4 transition, where DDR3 officially stopped at 1.6Gbps and DDR4 started from there. For DDR5, however, JEDEC is aiming much higher, with the group expecting to launch at 4.8Gbps, some 50% faster than the official 3.2Gbps max speed of DDR4. And in the years afterwards, the current version of the specification allows for data rates up to 6.4Gbps, doubling the official peak of DDR4.

Of course, sly enthusiasts will note that DDR4 already goes above the official maximum of 3.2Gbps (sometimes well above), and it’s likely that DDR5 will eventually go a similar route. The underlying goal, regardless of specific figures, is to double the amount of bandwidth available today from a single DIMM. So don’t be too surprised if SK Hynix indeed hits their goal of DDR5-8400 later this decade.
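Translating per-pin data rates into per-DIMM bandwidth makes the "double the bandwidth" goal concrete. This is a back-of-the-envelope sketch assuming a 64-bit non-ECC data path per DIMM for both generations and ignoring all protocol overhead; it gives theoretical peaks only.

```python
def peak_dimm_bandwidth_mbs(mt_per_s: int, data_bits: int = 64) -> int:
    """Theoretical peak bandwidth of one DIMM in MB/s (decimal units).

    mt_per_s:  millions of transfers per second per data pin, so the
               article's "3.2Gbps" corresponds to 3200 (DDR4-3200).
    data_bits: non-ECC data width; 64 for both DDR4 and DDR5 (DDR5
               splits it into two independent 32-bit channels).
    """
    return mt_per_s * data_bits // 8


baseline = peak_dimm_bandwidth_mbs(3200)  # official DDR4 maximum
for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800),
                   ("DDR5-6400", 6400), ("DDR5-8400", 8400)]:
    mbs = peak_dimm_bandwidth_mbs(rate)
    print(f"{name}: {mbs / 1000:.1f} GB/s, {mbs / baseline:.2f}x DDR4-3200")
```

Under these assumptions DDR5-4800 lands at 38.4 GB/s (1.5x DDR4-3200’s 25.6 GB/s) and DDR5-6400 at 51.2 GB/s, exactly double.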

Underpinning these speed goals are changes at both the DIMM and the memory bus in order to feed and transport so much data per clock cycle. The big challenge, as always for DRAM speeds, comes from the lack of progress in DRAM core clock rates. Dedicated logic is still getting faster, and memory busses are still getting faster, but the capacitor-and-transistor-based DRAM underpinning modern memory still can’t clock higher than a few hundred megahertz. So in order to get more from a DRAM die – to maintain the illusion that the memory itself is getting faster and to feed the actually faster memory busses – more and more parallelism has been required. And DDR5 for its part ups the ante once more.
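One way to see why parallelism is unavoidable is to divide the per-pin data rate by the prefetch depth, the number of bits each pin pulls from the core per internal access. The sketch below uses the 8n prefetch of DDR3 and DDR4 and the 16n prefetch of DDR5; it is a simplified model that ignores bank-group interleaving, and the 400 MHz core ceiling passed to `prefetch_needed` is an assumed round figure, not a spec value.

```python
DDR_PREFETCH = {"DDR3": 8, "DDR4": 8, "DDR5": 16}


def core_rate_mhz(mt_per_s: int, prefetch: int) -> float:
    """Rate (MHz) at which the DRAM core must supply one prefetch-sized
    chunk per data pin so the I/O can sustain mt_per_s transfers per pin."""
    return mt_per_s / prefetch


def prefetch_needed(mt_per_s: int, max_core_mhz: int) -> int:
    """Smallest power-of-two prefetch keeping the core at or below max_core_mhz."""
    n = 1
    while mt_per_s / n > max_core_mhz:
        n *= 2
    return n


for gen, rate in [("DDR3", 1600), ("DDR4", 3200), ("DDR5", 6400)]:
    print(f"{gen}-{rate}: {DDR_PREFETCH[gen]}n prefetch, "
          f"core ~{core_rate_mhz(rate, DDR_PREFETCH[gen]):.0f} MHz")
```

In this model DDR4-3200 and DDR5-6400 both keep the core near 400 MHz; doubling the bus rate is paid for entirely by doubling the prefetch, which is what `prefetch_needed(6400, 400)` returns (16).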

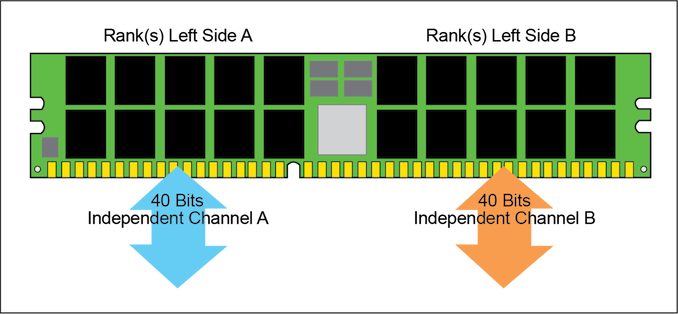

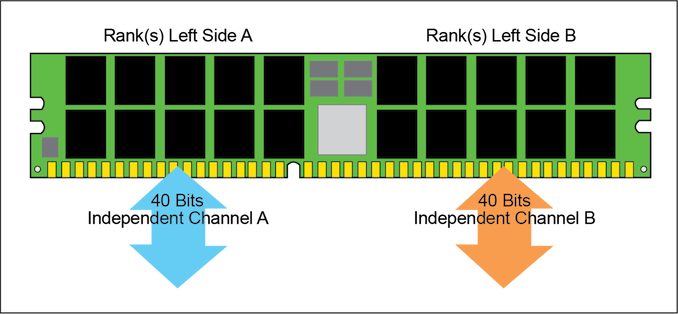

The big change here is that, similar to what we’ve seen in other standards like LPDDR4 and GDDR6, a single DIMM is being broken down into 2 channels. Rather than one 64-bit data channel per DIMM, DDR5 will offer two independent 32-bit data channels per DIMM (or 40-bit when factoring in ECC). Meanwhile the burst length for each channel is being doubled from 8 transfers (BL8) to 16 transfers (BL16), meaning that each 32-bit (4-byte) channel will deliver 64 bytes per operation. Compared to a DDR4 DIMM, then, a DDR5 DIMM running at twice the rated memory speed (identical core speeds) will deliver two 64-byte operations in the time it takes a DDR4 DIMM to deliver one, doubling the effective bandwidth.
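The "two 64-byte operations in the time of one" claim can be checked directly: bytes per burst is channel width times burst length, and burst duration is burst length divided by the transfer rate. A minimal model, assuming non-ECC widths and ignoring command and turnaround overheads:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    width_bits: int    # data bits per channel, excluding ECC
    burst_length: int  # transfers per burst: BL8 -> 8, BL16 -> 16
    mt_per_s: int      # millions of transfers per second per pin

    def bytes_per_burst(self) -> int:
        return self.width_bits * self.burst_length // 8

    def burst_time_ps(self) -> int:
        # One transfer lasts 1 / (mt_per_s * 1e6) s, i.e. 1e6 / mt_per_s ps.
        return self.burst_length * 1_000_000 // self.mt_per_s


# Value: (one channel's parameters, number of channels per DIMM).
dimms = {
    "DDR4-3200": (Channel(width_bits=64, burst_length=8, mt_per_s=3200), 1),
    "DDR5-6400": (Channel(width_bits=32, burst_length=16, mt_per_s=6400), 2),
}

for name, (ch, n_channels) in dimms.items():
    print(f"{name}: {n_channels} x {ch.bytes_per_burst()} B "
          f"every {ch.burst_time_ps()} ps")
```

Both bursts move 64 bytes in 2.5 ns; the DDR5 DIMM simply has two channels doing it side by side.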

Overall, 64 bytes remains the magic number for memory operations, as this is the size of a standard cache line. A larger burst length on DDR4-style memory would have resulted in 128-byte operations, which is too big for a single cache line and would, at best, have resulted in efficiency/utilization losses whenever a memory controller didn’t want two lines’ worth of sequential data. By comparison, since DDR5’s two channels are independent, a memory controller can request 64 bytes from separate locations, making it a better fit for how processors actually work and avoiding the utilization penalty.
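The utilization penalty is easy to quantify with a toy model: every burst moves a fixed, aligned block, and a second cache line gets a free ride only if it is the next request and sits in the same block. The sketch compares a hypothetical 64-bit channel at BL16 (128 bytes per burst) with a DDR5-style 32-bit channel at BL16 (64 bytes per burst); the request patterns are made up for illustration.

```python
def bus_efficiency(line_addrs, access_bytes, line_bytes=64):
    """Fraction of bytes moved on the bus that the controller actually asked for.

    line_addrs:   cache-line-aligned byte addresses, in request order.
    access_bytes: bytes delivered by one burst (the access granularity).
    A burst fetches the whole access_bytes-aligned block containing the
    requested line; the next request rides on that burst for free only if it
    is a different line inside the same block.
    """
    if not line_addrs:
        raise ValueError("need at least one request")
    transferred = 0
    open_block = None
    served = set()  # lines already delivered by the current burst
    for addr in line_addrs:
        block = addr // access_bytes
        if block == open_block and addr not in served:
            served.add(addr)  # already on the bus, no new burst needed
        else:
            transferred += access_bytes
            open_block = block
            served = {addr}
    return len(line_addrs) * line_bytes / transferred


scattered = [i * 4096 for i in range(8)]   # unrelated lines, one per 4 KiB page
sequential = [i * 64 for i in range(8)]    # a streaming access pattern

for label, reqs in (("scattered", scattered), ("sequential", sequential)):
    print(label,
          "128 B bursts:", bus_efficiency(reqs, 128),
          "64 B bursts:", bus_efficiency(reqs, 64))
```

With scattered requests the 128-byte burst wastes half of every transfer (efficiency 0.5), while the 64-byte burst stays at 1.0; only a perfectly sequential stream lets the larger burst break even.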

The net impact for a standard PC desktop then would be that instead of today’s DDR4 paradigm of two DIMMs filling two channels for a 2x64bit setup, a DDR5 system will functionally behave as a 4x32bit setup. Memory will still be installed in pairs – we’re not going back to the days of installing 32-bit SIMMs – but now the minimum configuration is for two of DDR5’s smaller channels.

This structural change also has some knock-on effects elsewhere, particularly to maximize usage in these smaller channels. DDR5 introduces a finer-grained bank refresh feature, which will allow for some banks to refresh while others are in use. This gets the necessary refresh (capacitor recharge) out of the way sooner, keeping latencies in check and making unused banks available sooner. The maximum number of bank groups is also being doubled from 4 to 8, which will help to mitigate the performance penalty from sequential memory access.
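To illustrate why finer-grained refresh helps, the sketch counts how many banks stay usable while a refresh is in progress. It uses the DDR5 maximum of 8 bank groups with 4 banks each from the table above, and assumes a same-bank refresh blocks one bank index in every bank group at once; treat that mapping as an assumption for illustration, since the article only says some banks refresh while others stay in use.

```python
from itertools import product

BANK_GROUPS = 8      # DDR5 maximum, from the table above
BANKS_PER_GROUP = 4

ALL_BANKS = frozenset(product(range(BANK_GROUPS), range(BANKS_PER_GROUP)))


def busy_all_bank_refresh():
    """All-bank refresh: every bank is blocked until the refresh finishes."""
    return set(ALL_BANKS)


def busy_same_bank_refresh(bank_index):
    """Assumed same-bank refresh: bank `bank_index` in every bank group."""
    return {(group, bank_index) for group in range(BANK_GROUPS)}


def available(busy):
    """Banks that can still serve reads and writes during the refresh."""
    return ALL_BANKS - busy


print("all-bank refresh, banks available:", len(available(busy_all_bank_refresh())))
for i in range(BANKS_PER_GROUP):
    print(f"same-bank refresh of bank {i}, banks available:",
          len(available(busy_same_bank_refresh(i))))
```

Under this model an all-bank refresh leaves nothing to work with, while each same-bank refresh leaves 24 of 32 banks available, and cycling through the four bank indices still refreshes every bank.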

Rapid Bus Service: Decision Feedback Equalization

In contrast to finding ways to increase the amount of parallelization within a DRAM DIMM, increasing the bus speed is both simpler and harder: the idea is simple in concept but hard in execution. At the end of the day, to double DDR’s memory speeds, DDR5’s memory bus needs to run at twice the rate of DDR4’s.

There are several changes to DDR5 to make this happen, but surprisingly, there aren’t any massive, fundamental changes to the memory bus such as QDR or differential signaling. Instead, JEDEC and its members have been able to hit their targets with a slightly modified version of the DDR4 bus, albeit one that has to run at tighter tolerances.

The key driver here is the introduction of decision feedback equalization (DFE). At a very high level, DFE is a means to reduce inter-symbol interference by using feedback from the memory bus receiver to provide better equalization. And better equalization, in turn, allows for the cleaner signaling needed for DDR5’s memory bus to run at higher transfer rates without everything going off the rails. Meanwhile this is further helped by several smaller changes in the standard, such as the addition of new and improved training modes to help DIMMs and controllers compensate for minute timing differences along the memory bus.
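The core idea of DFE fits in a few lines: once a symbol has been decided, the receiver knows how much of that symbol will leak into the next samples and subtracts it before making the next decision. The toy model below uses NRZ symbols, a hypothetical three-tap channel, known tap weights, and no noise; real DDR5 receivers implement DFE in mixed-signal circuitry with trained taps, so this only shows the principle.

```python
def channel(bits, taps):
    """Hypothetical discrete-time channel with post-cursor ISI.

    Bits map to NRZ symbols (+1/-1); each received sample is the current
    symbol times taps[0] plus earlier symbols weighted by taps[1:].
    """
    symbols = [1.0 if b else -1.0 for b in bits]
    samples = []
    for n in range(len(symbols)):
        y = sum(h * symbols[n - k] for k, h in enumerate(taps) if n - k >= 0)
        samples.append(y)
    return samples


def slicer(samples):
    """Plain threshold decision with no equalization."""
    return [1 if y >= 0 else 0 for y in samples]


def dfe(samples, feedback_taps):
    """Decision feedback equalizer: subtract the ISI predicted from symbols
    already decided, then slice. feedback_taps[i] cancels the symbol i+1
    positions back."""
    decided = []  # past decisions as +1.0 / -1.0
    for y in samples:
        z = y
        for k, h in enumerate(feedback_taps, start=1):
            if len(decided) >= k:
                z -= h * decided[-k]
        decided.append(1.0 if z >= 0 else -1.0)
    return [1 if d > 0 else 0 for d in decided]


# Assumed channel: the two post-cursors (0.6 + 0.5) outweigh the main
# cursor (1.0), so some bit patterns land on the wrong side of the threshold.
TAPS = [1.0, 0.6, 0.5]
bits = [0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0]
rx = channel(bits, TAPS)

print("slicer errors:", sum(a != b for a, b in zip(slicer(rx), bits)))
print("DFE errors:   ", sum(a != b for a, b in zip(dfe(rx, TAPS[1:]), bits)))
```

The bare slicer misreads every bit that follows two opposite-polarity bits, while the DFE recovers the whole sequence. The catch, and a reason for DDR5’s improved training modes, is that DFE depends on accurate tap weights and correct past decisions.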

Simpler Motherboards, More Complex DIMMs: On-DIMM Voltage Regulation

Along with the core changes to density and memory speeds, DDR5 also once again improves on DDR memory’s operating voltages. At-spec DDR5 will operate with a Vdd of 1.1v, down from 1.2v for DDR4. Like past updates this should improve the memory’s power efficiency relative to DDR4, although the power gains thus far aren’t being promoted as heavily as they were for DDR4 and earlier standards.
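A rough sense of the savings comes from the standard CMOS switching-power relation P = C·V²·f. Holding capacitance and frequency fixed (a big simplification, since DDR5 runs faster and leakage is ignored), the voltage step alone gives:

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Switching power ratio P_new / P_old under P = C * V^2 * f,
    holding capacitance C and frequency f fixed."""
    return (v_new / v_old) ** 2


steps = {"DDR3 -> DDR4": (1.2, 1.5), "DDR4 -> DDR5": (1.1, 1.2)}
for name, (v_new, v_old) in steps.items():
    r = dynamic_power_ratio(v_new, v_old)
    print(f"{name}: {r:.2f}x switching power ({(1 - r) * 100:.0f}% lower)")
```

Under this model the 1.2v to 1.1v step cuts switching power by roughly 16%, compared with roughly 36% for DDR3’s 1.5v to DDR4’s 1.2v, which is consistent with the power gains being promoted less heavily this time around.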

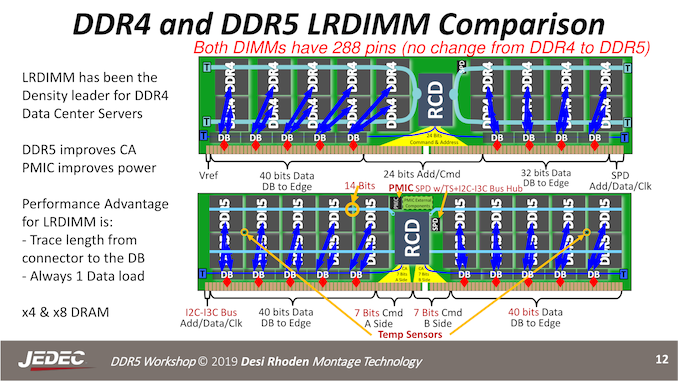

JEDEC is also using the introduction of the DDR5 memory standard to make a fairly important change to how voltage regulation works for DIMMs. In short, voltage regulation is being moved from the motherboard to the individual DIMM, leaving DIMMs responsible for their own voltage regulation needs. This means that DIMMs will now include an integrated voltage regulator, and this goes for everything from UDIMMs to LRDIMMs.

JEDEC is dubbing this “pay as you go” voltage regulation, and is aiming to improve/simplify a few different aspects of DDR5 with it. The most significant change is that by moving voltage regulation onto the DIMMs themselves, voltage regulation is no longer the responsibility of the motherboard. Motherboards in turn will no longer need to be built for the worst-case scenario – such as driving 16 massive LRDIMMs – simplifying motherboard design and reining in costs to a degree. Of course, the flip side of this argument is that it moves those costs over to the DIMM itself, but then system builders are at least only having to buy as much voltage regulation hardware as they have DIMMs, and hence the PAYGO philosophy.

According to JEDEC, the on-DIMM regulators will also allow for better voltage tolerances in general, improving DRAM yields. And while no specific promises are being made, the group is also touting the potential for this change to (further) reduce DDR5’s power consumption relative to DDR4.

As the implementation details for these voltage regulators will be up to the memory manufacturers, JEDEC hasn’t said too much about them. But it sounds like there won’t be a one-size-fits-all solution between clients and servers, so client UDIMMs and server (L)RDIMMs will have separate regulators/PMICs, reflecting their power needs.

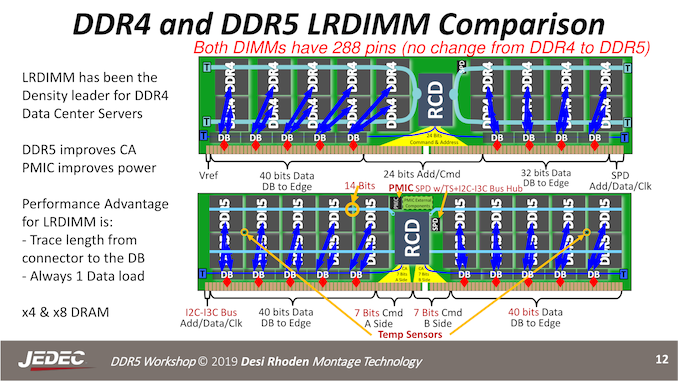

DDR5 DIMMs: Still 288 Pins, But Changed Pinouts

Finally, as already widely demonstrated by earlier vendor prototypes, DDR5 will keep DDR4’s 288-pin count. This mirrors the DDR2 to DDR3 transition, where the pin count was likewise kept identical at 240 pins.

Don’t expect to use DDR5 DIMMs in DDR4 sockets, however. While the pin count isn’t changing, the pinout is, in order to accommodate DDR5’s new features – and in particular its dual channel design.

The big change here is that the command and address bus is being shrunk and partitioned, with the pins being reallocated to the data bus for the second memory channel. Instead of a single 24-bit CA bus, DDR5 will have two 7-bit CA busses, one for each channel. Seven bits is well under half the width of the old bus, of course, so things are becoming a bit more complex for memory controllers in exchange.

Sampling Now, Adoption Starts in the Next 12-18 Months

Wrapping things up for today’s announcement, like other JEDEC specification releases, today is less of a product launch and more about the development committee setting the standard loose for its members to use. The major memory manufacturers, who have been participating in the DDR5 development process since the start, have already developed prototype DIMMs and are now looking at wrapping things up to bring their first commercial hardware to market.

The overall adoption curve for DDR5 is expected to be similar to earlier DDR standards. That is to say that JEDEC expects DDR5 to start showing up in devices in 12 to 18 months as hardware is finalized, and increase from there. And while the group doesn’t give specific product guidance, they have been very clear that they expect servers to once again be the driving force behind early adoption, especially with the major hyperscalers. Neither Intel nor AMD has officially announced platforms that will use the new memory, but at this point that’s only a matter of time.

Meanwhile, expect DDR5 to have as long a lifecycle as DDR4, if not a bit longer. Both DDR3 and DDR4 have enjoyed roughly seven-year lifecycles, and DDR5 should enjoy the same degree of stability. And while seeing out several years with perfect clarity isn’t possible, at this point JEDEC is thinking that if anything DDR5 will end up with a longer shelf-life than DDR4, thanks to the ongoing maturation of the technology industry. Of course, this is the same year that Apple has dropped Intel for its CPUs, so by 2028 anything is possible.

At any rate, expect to see the major memory manufacturers continue to show off their prototype and commercial DIMMs as DDR5 gets ready to launch. With adoption set to kick off in earnest in 2021, it sounds like next year should bring some interesting changes to the server market, and eventually the client desktop market as well.


Source: AnandTech – DDR5 Memory Specification Released: Setting the Stage for DDR5-6400 And Beyond