NVIDIA’s second GTC of 2020 is taking place this week, and as has quickly become a tradition, one of CEO Jensen Huang’s “kitchenside chats” kicks off the event. As the de facto replacement for GTC Europe, the fall virtual GTC is a bit of a lower-key event relative to the Spring edition, but it’s still one that is seeing some NVIDIA hardware introduced to the world.

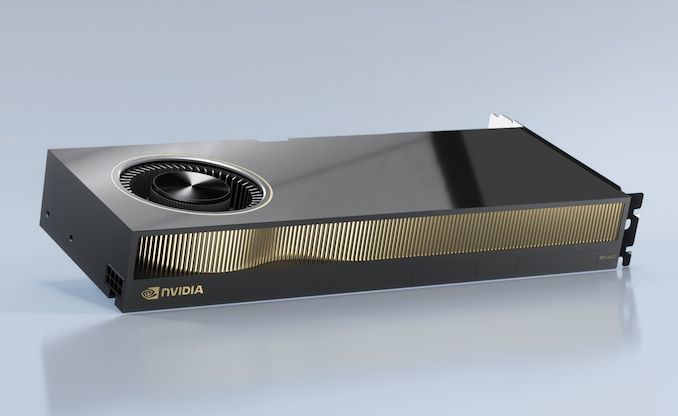

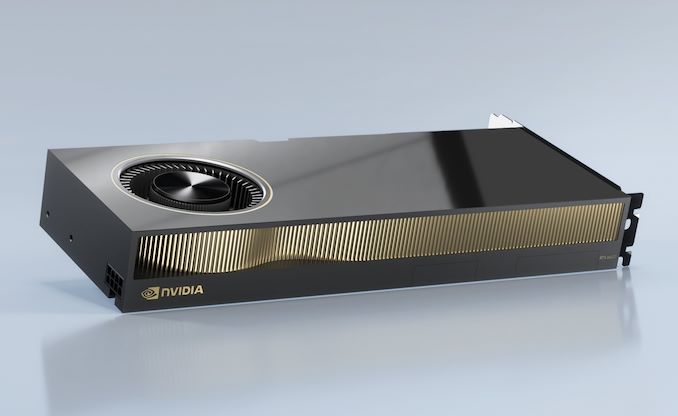

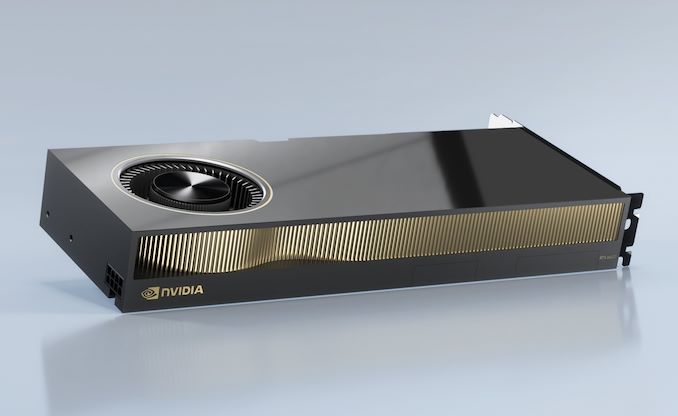

Starting things off, we have a pair of new video cards from NVIDIA – and a launch that seemingly indicates that NVIDIA is getting ready to overhaul its professional visualization branding. Being announced today and set to ship at the end of the year is the NVIDIA RTX A6000, NVIDIA’s next-generation, Ampere-based professional visualization card. The successor to the Turing-based Quadro RTX 8000/6000, the A6000 will be NVIDIA’s flagship professional graphics card, offering everything under the sun as far as NVIDIA’s graphics features go, and chart-topping performance to back it up. The A6000 will be a Quadro card in everything but name, literally.

NVIDIA Professional Visualization Card Specification Comparison

| | A6000 | A40 | RTX 8000 | GV100 |
|---|---|---|---|---|
| CUDA Cores | 10752 | 10752 | 4608 | 5120 |
| Tensor Cores | 336 | 336 | 576 | 640 |
| Boost Clock | ? | ? | 1770MHz | ~1450MHz |
| Memory Clock | 16Gbps GDDR6 | 14.5Gbps GDDR6 | 14Gbps GDDR6 | 1.7Gbps HBM2 |
| Memory Bus Width | 384-bit | 384-bit | 384-bit | 4096-bit |
| VRAM | 48GB | 48GB | 48GB | 32GB |
| ECC | Partial (DRAM) | Partial (DRAM) | Partial (DRAM) | Full |
| Half Precision | ? | ? | 32.6 TFLOPS | 29.6 TFLOPS |
| Single Precision | ? | ? | 16.3 TFLOPS | 14.8 TFLOPS |
| Tensor Performance | ? | ? | 130.5 TFLOPS | 118.5 TFLOPS (FP16) |
| TDP | 300W | 300W | 295W | 250W |
| Cooling | Active | Passive | Active | Active |
| NVLink | 1x NVLink3, 112.5GB/sec | 1x NVLink3, 112.5GB/sec | 1x NVLink2, 50GB/sec | 2x NVLink2, 100GB/sec |
| GPU | GA102 | GA102 | TU102 | GV100 |
| Architecture | Ampere | Ampere | Turing | Volta |
| Manufacturing Process | Samsung 8nm | Samsung 8nm | TSMC 12nm FFN | TSMC 12nm FFN |
| Launch Price | ? | ? | $10,000 | $9,000 |
| Launch Date | 12/2020 | Q1 2021 | Q4 2018 | March 2018 |

The first professional visualization card to be launched based on NVIDIA’s new Ampere architecture, the A6000 will have NVIDIA hitting the market with its best foot forward. The card uses a fully-enabled GA102 GPU – the same chip used in the GeForce RTX 3080 & 3090 – and with 48GB of memory, is packed with as much memory as NVIDIA can put on a single GA102 card today. Notably, the A6000 is using GDDR6 here and not the faster GDDR6X used in the GeForce cards, as 16Gb density RAM chips are not available for the latter memory at this time. As a result, despite being based on the same GPU, there are going to be some interesting performance differences between the A6000 and its GeForce siblings, as it has traded memory bandwidth for overall memory capacity.
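To put a rough number on that bandwidth-for-capacity trade, peak memory bandwidth is simply the per-pin data rate multiplied by the bus width. The short sketch below applies that to the A6000's figures from the table above. The GeForce RTX 3090's 19.5Gbps GDDR6X data rate is not part of NVIDIA's announcement and is included here only as a point of comparison; the function name is ours.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate (Gbps) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


cards = {
    # name: (per-pin data rate in Gbps, memory bus width in bits)
    "RTX A6000 (GDDR6)": (16.0, 384),          # from the spec table
    "GeForce RTX 3090 (GDDR6X)": (19.5, 384),  # assumption: not stated in this article
}

for name, (rate, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(rate, width):.0f} GB/s")
```

These are theoretical peaks only; with ECC enabled, some of the A6000's DRAM bandwidth is set aside for error correction, so the usable figure would be lower still.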

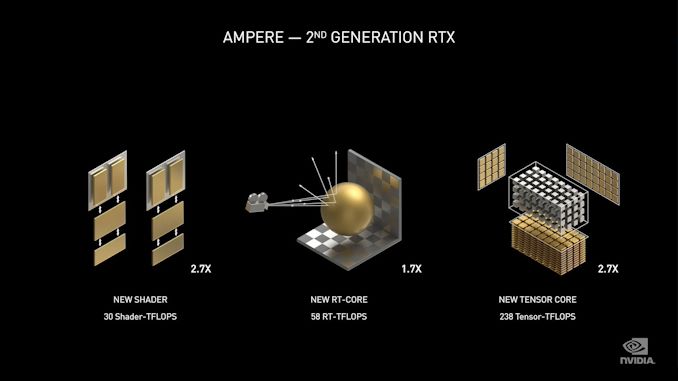

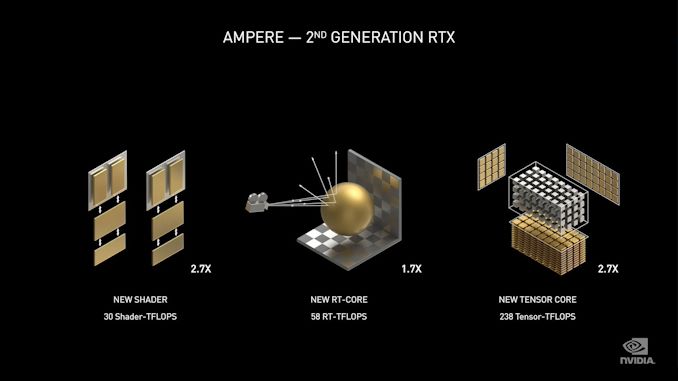

In terms of performance, NVIDIA is promoting the A6000 as offering nearly twice the performance (or more) of the Quadro RTX 8000 in certain situations, particularly tasks taking advantage of the significant increase in FP32 CUDA cores or the similar performance increase in RT core throughput. Unfortunately, NVIDIA has either yet to lock down the specifications for the card or is opting against announcing them at this time, so we don’t know what the clockspeeds and resulting performance in FLOPS will be. Notably, the A6000 only has a TDP of 300W, 50W lower than the GeForce RTX 3090, so I would expect this card to be clocked lower than the 3090.
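In the meantime, the usual back-of-the-envelope formula gives a sense of the range: peak FP32 throughput is two operations (one fused multiply-add) per CUDA core per clock. The sketch below checks that formula against the two older cards in the table, then evaluates it for the A6000 at a few boost clocks. Those A6000 clocks are purely hypothetical placeholders of ours, not NVIDIA figures.

```python
def fp32_tflops(cuda_cores: int, boost_clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, counting one FMA (2 floating point ops) per core per clock."""
    return 2 * cuda_cores * boost_clock_mhz * 1e6 / 1e12


# Sanity check against the published numbers in the spec table.
print(f"Quadro RTX 8000: {fp32_tflops(4608, 1770):.1f} TFLOPS")
print(f"Quadro GV100:    {fp32_tflops(5120, 1450):.1f} TFLOPS")

# Hypothetical A6000 boost clocks (assumptions; NVIDIA has not disclosed the real value).
for clock_mhz in (1400, 1600, 1800):
    print(f"A6000 @ {clock_mhz}MHz: {fp32_tflops(10752, clock_mhz):.1f} TFLOPS")
```

Even at the low end of that guessed range, the doubled FP32 core count is what makes NVIDIA's "nearly twice the performance" claim plausible for FP32-bound work.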

Otherwise, as we saw with the GeForce cards launched last month, Ampere itself is not a major technological overhaul of the previous Turing architecture. So while newer and significantly more powerful, there are not many new marquee features to be found on the card. Along with the expanded number of data types supported in the tensor cores (particularly BFloat16), the other changes most likely to be noticed by professional visualization users are decode support for the new AV1 codec, as well as PCI-Express 4.0 support, which will give the cards twice the bus bandwidth when used with AMD’s recent platforms.

Like the current-generation Quadro, the upcoming card also gets ECC support. NVIDIA has never listed GA102 as offering ECC on its internal pathways – this is traditionally limited to their big, datacenter-class chips – so this is almost certainly partial support via “soft” ECC, which offers error correction against the DRAM and DRAM bus by setting aside some DRAM capacity and bandwidth to function as ECC. The cards also support a single NVLink connector – now up to NVLink 3 – allowing for a pair of A6000s to be bridged together for more performance and to share their memory pools for supported applications. The A6000 also supports NVIDIA’s standard frame lock and 3D Vision Pro features with their respective connectors.

For display outputs, the A6000 ships with a quad-DisplayPort configuration, which is typical for NVIDIA’s high-end professional visualization cards. This generation, however, that leaves the A6000 in a bit of an odd spot, since DisplayPort 1.4 is slower than the HDMI 2.1 standard also supported by the GA102 GPU. I would expect that it’s possible for the card to drive an HDMI 2.1 display with a passive adapter, but this is going to be reliant on how NVIDIA has configured the card and if HDMI 2.1 signaling will tolerate such an adapter.
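For a sense of how large the gap between the two display standards is, the sketch below computes the usable payload rate of each link from its lane count, per-lane signaling rate, and line coding. The link parameters come from the public DisplayPort 1.4 (HBR3) and HDMI 2.1 (FRL) figures rather than anything NVIDIA has said about the A6000, and the calculation ignores Display Stream Compression, so treat it as an illustration only.

```python
def payload_gbps(lanes: int, lane_rate_gbps: float, data_bits: int, coded_bits: int) -> float:
    """Usable link bandwidth in Gbps after line-coding overhead (data_bits of payload per coded_bits sent)."""
    return lanes * lane_rate_gbps * data_bits / coded_bits


links = {
    # name: (lanes, raw Gbps per lane, payload bits, coded bits)
    "DisplayPort 1.4 (HBR3, 8b/10b)": (4, 8.1, 8, 10),   # assumption: spec maximum
    "HDMI 2.1 (FRL, 16b/18b)": (4, 12.0, 16, 18),        # assumption: spec maximum
}

for name, params in links.items():
    print(f"{name}: {payload_gbps(*params):.2f} Gbps")
```

This says nothing about whether a passive adapter would work; it only shows why an uncompressed HDMI 2.1 signal at full rate cannot fit in a DisplayPort 1.4 link.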

Finally, the A6000 will be the first of today’s video cards to ship. According to NVIDIA, the card will be available in the channel as an add-in card starting in mid-December – just in time to make a 2020 launch. The card will then start showing up in OEM systems in early 2021.

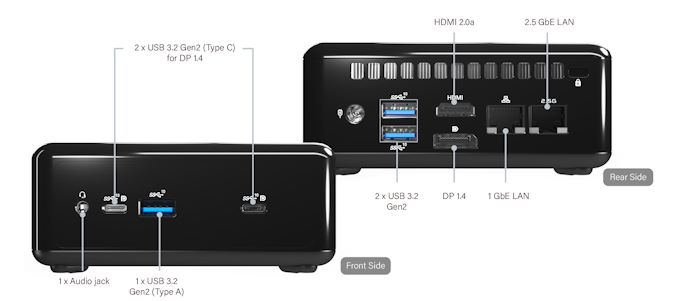

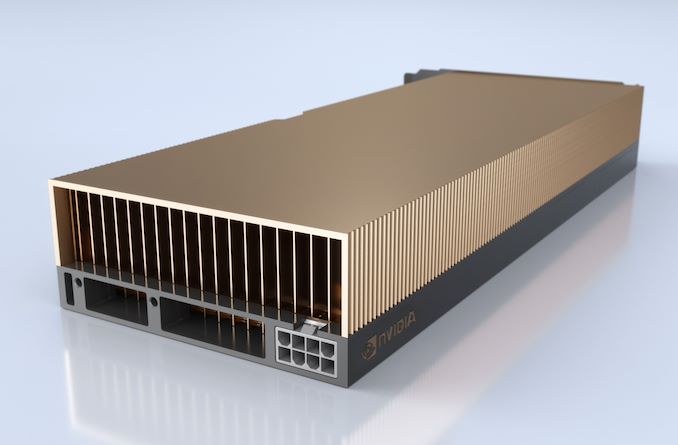

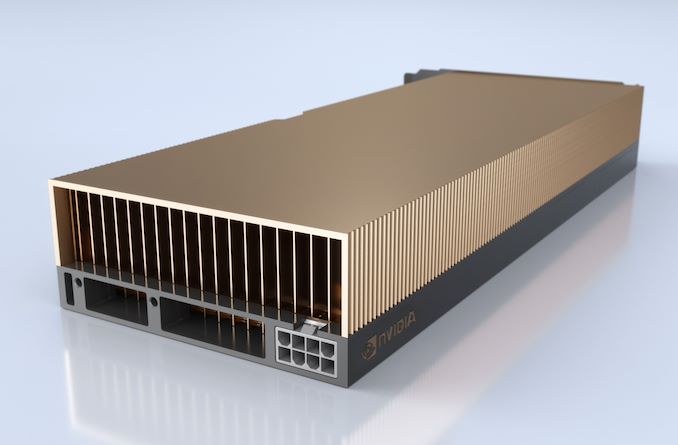

NVIDIA A40 – Passive ProViz

Joining the new A6000 is a very similar card designed for passive cooling, the NVIDIA A40. Based on the same GA102 GPU as the A6000, the A40 offers virtually all of the same features as the active-cooled A6000, just in a purely passive form factor suitable for use in high density servers.

By the numbers, the A40 is a similar flagship-level graphics card, using a fully enabled GA102 GPU. It’s not quite a twin to the A6000, but other than the cooling difference, the only other change under the hood is the memory configuration. Whereas the A6000 uses 16 Gbps GDDR6, A40 clocks it down to 14.5 Gbps. Otherwise NVIDIA has not disclosed expected GPU clockspeeds, but with a 300W TDP, we’d expect them to be similar to the A6000.

Overall NVIDIA is no stranger to offering passively cooled cards; however it’s been a while since we last saw a passively cooled high-end Quadro card. Most recently, NVIDIA’s passive cards have been aimed at the compute market, with parts like the Tesla T4 and P40. The A40, on the other hand, is a bit different and a bit more ambitious, and a reflection of the blurring lines between compute and graphics in at least some of NVIDIA’s markets.

The most notable impact here is the inclusion of display outputs, something that was never on NVIDIA’s compute cards for obvious reasons. The A40 includes three DisplayPort outputs (one fewer than the A6000), giving the server-focused card the ability to directly drive a display. In explaining the inclusion of display I/O in a server part, NVIDIA said that they’ve had requests from users in the media and broadcast industry, who have been using servers in places like video trucks, but still need display outputs.

Ultimately, this serves as something of an additional feature differentiator between the A40 and NVIDIA’s official PCIe compute card, the PCIe A100. As the A100 lacks any kind of video display functionality (the underlying GA100 GPU was designed for pure compute tasks), the A40 is the counterpoint to that product, offering something with very explicit video output support both within and outside of the card. And while it’s not specifically aimed at the edge compute market, where the T4 still reigns supreme, make no mistake: the A40 is still capable of being used as a compute card. Though lacking in some of A100’s specialty features like Multi-Instance GPU (MIG), the A40 is fully capable of being provisioned as a compute card, including support for the Virtual Compute Server vGPU profile. So the card is a potential alternative of sorts to the A100, at least where FP32 throughput might be of concern.

Finally, like the A6000, the A40 will be hitting the streets in the near future. Designed to be sold primarily through OEMs, NVIDIA expects it to start showing up in servers in early 2021.

Quadro No More?

For long-time observers, perhaps the most interesting development from today’s launch is what’s not present: NVIDIA’s Quadro branding. Despite being aimed at their traditional professional visualization market, the A6000 is not being branded as a Quadro card, a change that was made at nearly the last minute.

Perhaps because of that last-minute change, NVIDIA hasn’t issued any official explanation for their decision. At face value it’s certainly an odd one, as the Quadro brand is one of NVIDIA’s longest-lived brands, second only to GeForce itself. NVIDIA still controls the lion’s share of the professional visualization market as well, so there seems to be little reason for NVIDIA to shake up a very stable market.

With all of that said, there are a couple of factors in play that may be driving NVIDIA’s decision. First and foremost is that the company has already retired one of its other product brands in the last couple of years: Tesla. Previously used for NVIDIA’s compute accelerators, Tesla was retired and never replaced, leaving us with the likes of the NVIDIA T4 and A100. Of course, Tesla is something of a special case, as the name has increasingly become synonymous with the electric car company, despite in both cases being selected as a reference to the famous scientist. Quadro, by comparison, has relatively little (but not zero) overlap with other business entities.

But perhaps more significant than that is the overall state of NVIDIA’s professional businesses. An important cornerstone of NVIDIA’s graphics products, professional visualization is a fairly stable market – which is to say it’s not a major growth market in the way that gaming and datacenter compute have been. As a result, professional visualization has been getting slowly subsumed by NVIDIA’s compute parts, especially in the server space where many products can be provisioned for either compute or graphics needs. In all these cases, both Quadro and NVIDIA’s former Tesla lineup have come to represent NVIDIA’s “premium” offerings: parts that get access to the full suite of NVIDIA’s hardware and software features, unlike the consumer GeForce products which have certain high-end features withheld.

So it may very well be that NVIDIA doesn’t see a need for a specific Quadro brand for much longer, because the markets for Quadro (professional visualization) and Tesla (computing) are one and the same. Though the two differ in their specific needs, they still use the same NVIDIA hardware, and frequently pay the same high NVIDIA prices.

At any rate, it will be interesting to see where NVIDIA goes from here. Even with the overlap in audiences, branding segmentation has its advantages at times. And with NVIDIA now producing GPUs that lack critical display capabilities (GA100), it seems like making it clear what hardware can (and can’t) be used for graphics is going to remain important going forward.

Source: AnandTech – Quadro No More? NVIDIA Announces Ampere-based RTX A6000 & A40 Video Cards For Pro Visualization