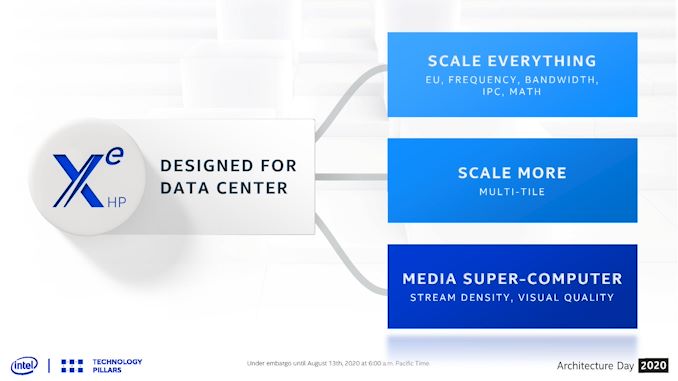

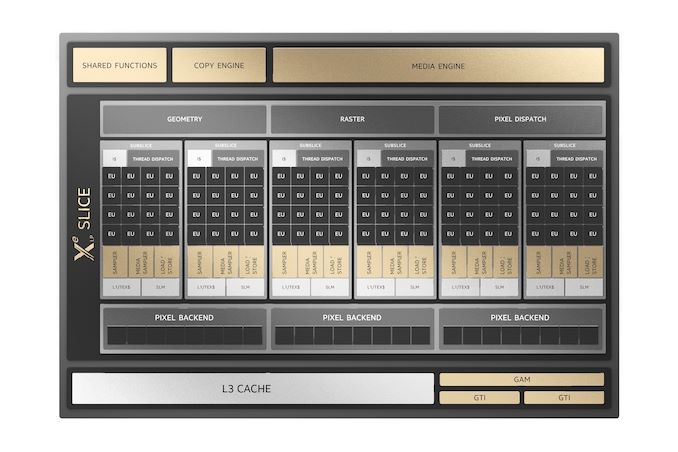

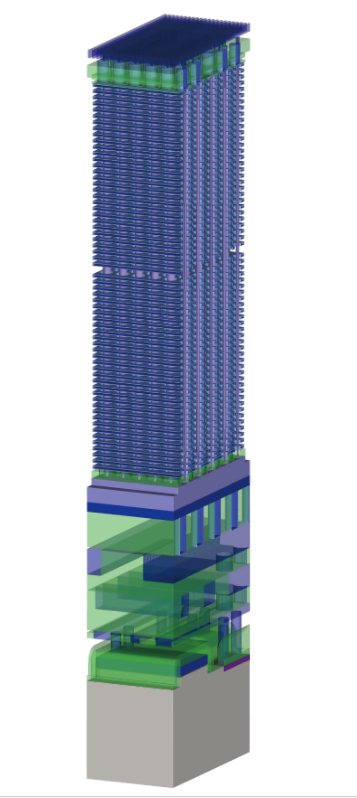

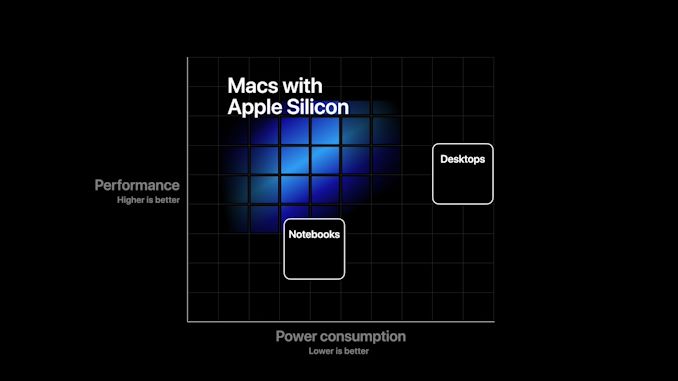

The enterprise market is a key element of Intel’s next-generation Xe graphics strategy. For commercial enterprises that need serious compute resources, Intel’s Xe-HP products are expected to compete against NVIDIA’s Ampere and AMD’s CDNA. As we’ve already seen in teaser photos, the HP design leverages both scale-up and scale-out through a multi-tile strategy paired with high-bandwidth memory.

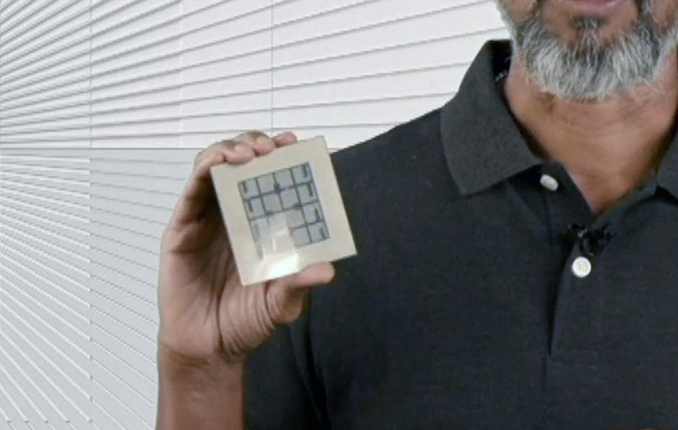

As far as we’re aware, Intel’s timeline for Xe-HP targets general availability sometime in 2021. Early silicon, built on Intel’s 10nm Enhanced SuperFin process, is in-house and working, and has been demonstrated to the press in a first-party video transcode benchmark. The top offering is a quad-tile solution, with a reported peak performance somewhere in the 42+ TFLOP (FP32) range in NEO/OpenCL-based video transcode. That would be more than twice the 19.5 TFLOPs of peak FP32 throughput NVIDIA quotes for its Ampere A100.

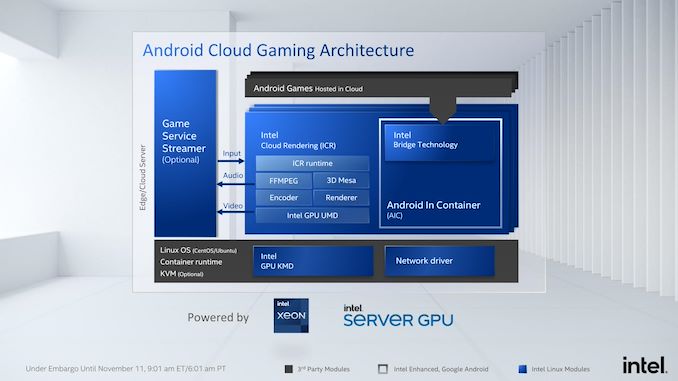

In an announcement today, Intel says that it is already offering its dual-tile variant to select customers, though not directly. Intel will offer access to Xe-HP through its development cloud infrastructure, DevCloud. Approved partners will be able to spin up instances on Intel’s service, then compile and run Xe-HP compatible software in order to gauge both performance and code adaptability for their workflows.

Normally, when a hardware provider offers a cloud-based program for unreleased products, there is a tendency to suspect that the real hardware isn’t behind it, and that the back-end is instead something like a series of FPGAs emulating what customers think they’re using. This is part of the problem with non-transparent cloud services. However, Intel has confirmed to us that the hardware on the back-end is indeed its Xe-HP silicon, running over a custom PCIe interface and powered through its Xeon infrastructure.

One common element of new silicon is finding bugs and edge cases. All hardware vendors do their own validation testing, but in recent years Intel has made the case that its customers, due to the scale of their workloads and deployments, can test far deeper and wider than Intel does, by a factor of 20-50x. That, however, requires those partners to have hardware in hand, perhaps as early engineering samples at lower frequencies. By using DevCloud, some of those big partners can attempt those workflows in preparation for a bigger direct shipment and optimize the whole process.

Intel did not state what the requirements were to get access to Xe-HP in the cloud. I suspect that if you have to ask, then you probably don’t qualify. In other news, Intel’s Xe-LP solution, Iris Xe MAX, is available in DevCloud for public access.

Source: AnandTech – Intel’s Xe-HP Now Available to Select Customers