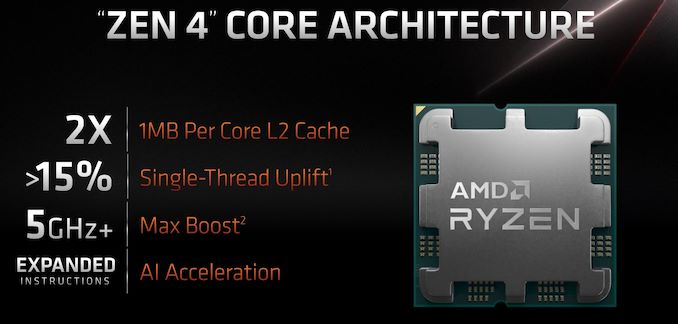

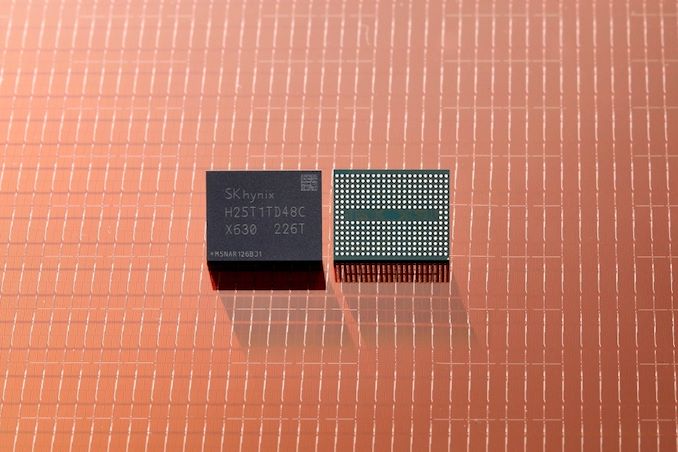

During AMD’s ‘together we advance_PCs’ event, the company unveiled its latest Zen 4-based Ryzen 7000 processors to the world, as well as its AM5 platform and its X670E, X670, B650E, and B650 chipsets. AMD also announced what it calls AMD EXPO, a new technology for overclocking DDR5 memory. In conjunction with this announcement, AMD has partnered with memory manufacturers including ADATA, Corsair, G.Skill, GeIL, and Kingston Technology to bring DDR5 kits optimized for AMD Ryzen 7000 to the market, with 15 (or more) of these set to be available on launch day, September 27th.

AMD EXPO stands for EXtended Profiles for Overclocking and is designed to give users high-end memory overclocking when paired with AMD’s Ryzen 7000 series processors. It fills the same role as Intel’s preexisting X.M.P (Extreme Memory Profile) technology, found on most consumer-level memory kits designed for desktop Intel platforms, but as an open standard with an emphasis on providing the best settings for AMD platforms.

AMD EXPO Technology: Like X.M.P, but Optimized for Ryzen 7000

The premise of AMD EXPO is that it will be a one-click DDR5 overclocking function for AM5 motherboards. AMD claims that EXPO-overclocked memory kits will offer up to 11% higher gaming performance at 1080p, although it hasn’t explained how it arrived at this figure. AMD did, however, state that it expects (at least) 15 DDR5 kits with AMD EXPO at launch on September 27th, with speeds of up to DDR5-6400.

On the surface, AMD EXPO is essentially an X.M.P profile designed specifically for AMD’s Ryzen 7000 (Zen 4) processors. AMD hasn’t gone into finer detail on how it differs from X.M.P, beyond the fact that it will be free of royalties and licensing fees.

G.Skill Trident Z5 Neo DDR5 memory with AMD EXPO certification

It is worth noting that DDR5 memory with X.M.P profiles will be supported on Ryzen 7000 platforms. However, AMD EXPO adds an additional layer of ‘compatibility’ with AMD systems, as EXPO DIMMs will be optimized for use on AMD platforms (whereas X.M.P kits are chiefly optimized for Intel platforms).

AMD EXPO does come with one caveat: AMD itself classes it as overclocking, and according to its footnotes, using it voids the warranty.

The footnote on the AMD EXPO landing page states as follows:

Overclocking and/or undervolting AMD processors and memory, including without limitation, altering clock frequencies / multipliers or memory timing / voltage, to operate outside of AMD’s published specifications will void any applicable AMD product warranty, even when enabled via AMD hardware and/or software. This may also void warranties offered by the system manufacturer or retailer. Users assume all risks and liabilities that may arise out of overclocking and/or undervolting AMD processors, including, without limitation, failure of or damage to hardware, reduced system performance and/or data loss, corruption or vulnerability. GD-106

As with applying X.M.P profiles on Intel platforms, using AMD EXPO will technically void the warranty. That seems odd given that this is AMD’s own technology, designed to give adopters of Ryzen 7000 and AM5 an additional performance boost through certification. Overclocking is always done at the user’s risk, but with a certification program such as AMD EXPO, it is strange that AMD recommends optimized memory for its platform while also voiding the processor’s warranty when that memory’s profile is enabled.

AMD EXPO: 15 Different DDR5 Kits Available At Launch

As we previously mentioned, AMD says that 15 DDR5 kits with AMD EXPO support should be ready when Ryzen 7000 launches on September 27th. These include ADATA Caster RGB and Lancer RGB models, GeIL EVO V models, and Kingston Technology Fury Beast models in both standard and RGB-enabled versions.

G.Skill Flare X5 Memory in black with AMD EXPO certification

Corsair and G.Skill sent us information on what they are launching alongside Ryzen 7000 on September 27th. Starting with G.Skill, it announced three new DDR5 series for Ryzen 7000: the Trident Z5 Neo RGB, the regular Trident Z5 Neo, and the Flare X5. The flagship of its AMD EXPO line-up is the Trident Z5 Neo, with four DDR5-6000 variants set for launch, each with different latencies and capacities, as outlined in the table below.

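G.Skill AMD EXPO DDR5 Memory (as of 08/30)

| Memory | Frequency | Timings (CL-tRCD-tRP-tRAS) | Capacity |
|---|---|---|---|
| Trident Z5 Neo + RGB Neo | DDR5-6000 | 30-38-38-96 | 2 x 16 GB |
| Trident Z5 Neo + RGB Neo | DDR5-6000 | 30-40-40-96 | 2 x 32 GB |
| Trident Z5 Neo + RGB Neo | DDR5-6000 | 32-38-38-96 | 2 x 16 / 2 x 32 GB |
| Trident Z5 Neo + RGB Neo | DDR5-6000 | 36-36-36-96 | 2 x 16 GB |
| Flare X5 | DDR5-5600 | 28-34-34-89 | 2 x 16 / 2 x 32 GB |
| Flare X5 | DDR5-5600 | 30-36-36-89 | 2 x 16 / 2 x 32 GB |
| Flare X5 | DDR5-5600 | 36-36-36-89 | 2 x 16 / 2 x 32 GB |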

The Trident Z5 Neo and Trident Z5 Neo RGB share the same specifications, but the RGB version adds a customizable LED lightbar. The top SKU from G.Skill with AMD EXPO at launch will be the DDR5-6000 CL30-38-38-96 kit, which comes in a 2 x 16 GB (32 GB) capacity. The Flare X5 replaces the older DDR4 Flare X series and features a lower-profile heatsink just 33 mm tall, making it a better fit for systems where a large tower cooler leaves too little clearance for taller, more aggressive heatsink designs such as the Trident Z5 Neo’s.
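The timings in the G.Skill table are counted in memory clock cycles, so kits running at different speeds can’t be compared by CL alone. A common back-of-the-envelope conversion is latency (ns) = cycles × 2000 / data rate (MT/s), because DDR memory transfers data twice per clock. The sketch below applies that conversion to the G.Skill kits; the helper name `timings_to_ns` is hypothetical, and this is our own illustration rather than a G.Skill or AMD tool.

```python
# Hypothetical helper (not a vendor tool): converts the cycle-based timings
# printed on a DDR5 kit (CL-tRCD-tRP-tRAS) into nanoseconds.
# DDR transfers twice per clock, so one clock lasts 2000 / data_rate ns
# when data_rate is given in MT/s (e.g. 6000 for DDR5-6000).

def timings_to_ns(data_rate_mts: int, timings: str) -> list[float]:
    """Return each dash-separated timing converted to ns, rounded to 0.01."""
    return [round(int(t) * 2000 / data_rate_mts, 2) for t in timings.split("-")]


# G.Skill AMD EXPO kits from the table: (series, data rate in MT/s, timings).
KITS = [
    ("Trident Z5 Neo", 6000, "30-38-38-96"),
    ("Trident Z5 Neo", 6000, "30-40-40-96"),
    ("Trident Z5 Neo", 6000, "32-38-38-96"),
    ("Trident Z5 Neo", 6000, "36-36-36-96"),
    ("Flare X5", 5600, "28-34-34-89"),
    ("Flare X5", 5600, "30-36-36-89"),
    ("Flare X5", 5600, "36-36-36-89"),
]

if __name__ == "__main__":
    for name, rate, timings in KITS:
        cas_ns = timings_to_ns(rate, timings)[0]  # first value is CL
        print(f"{name} DDR5-{rate} {timings}: CAS latency {cas_ns} ns")
```

Run as a script, this reports a CAS latency of 10.0 ns for both the flagship Trident Z5 Neo DDR5-6000 CL30 kit and the Flare X5 DDR5-5600 CL28 kit. It is only a first-word latency estimate; it ignores bandwidth, secondary timings, and memory controller behavior.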

New Corsair Dominator Platinum RGB DDR5 for AMD Ryzen 7000

Turning to Corsair’s AMD EXPO-certified memory, it has two new DDR5 families, both designed specifically for AMD and Ryzen 7000: the premium Dominator Platinum RGB DDR5 and Vengeance DDR5, the latter available in both RGB and non-RGB versions. The top SKU from Corsair is the Dominator Platinum RGB 32 GB (2 x 16 GB) kit at DDR5-6000 with latencies of CL30-36-36-76. The Dominator Platinum RGB DDR5 for AMD EXPO will also be available in 64 GB (2 x 32 GB) kits, in DDR5-5600 CL36 and DDR5-5200 CL40 varieties.

The Corsair Vengeance RGB with AMD EXPO profiles will reach up to DDR5-6000 CL30 but will also be available as DDR5-5600 CL36 and DDR5-5200 CL40. At the time of writing, the non-RGB Vengeance for AM5 will max out at DDR5-5600 CL36, with a DDR5-5200 CL40 option also available, in both 2 x 16 GB (32 GB) and 2 x 32 GB (64 GB) kits.
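Where CL mostly governs latency, the data rate mainly sets theoretical peak bandwidth. The sketch below estimates it for the Corsair speed grades above, under assumptions that are ours rather than Corsair’s or AMD’s: each DDR5 DIMM has a 64-bit data bus (two 32-bit subchannels, ECC bits excluded), and a two-DIMM kit on AM5 runs one DIMM per channel in dual-channel mode. The function name `peak_bandwidth_gbs` is hypothetical.

```python
# Hypothetical helper: theoretical peak DDR5 bandwidth on AM5.
# Assumptions (ours, not from AMD or Corsair): 64-bit data bus per DIMM
# (two 32-bit subchannels, ECC excluded) = 8 bytes per transfer, and a
# 2-DIMM kit runs one DIMM per channel, i.e. dual channel.

BYTES_PER_TRANSFER = 8  # 64-bit data bus per DIMM


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 2) -> float:
    """Theoretical peak bandwidth in decimal GB/s across all channels."""
    return data_rate_mts * BYTES_PER_TRANSFER * channels / 1000


if __name__ == "__main__":
    # Speed grades offered across Corsair's AMD EXPO kits.
    for rate in (6000, 5600, 5200):
        print(f"DDR5-{rate}: {peak_bandwidth_gbs(rate):.1f} GB/s peak (dual channel)")
```

Under those assumptions, DDR5-6000 works out to 96.0 GB/s, DDR5-5600 to 89.6 GB/s, and DDR5-5200 to 83.2 GB/s. These are theoretical ceilings; measured bandwidth will be lower.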

The AMD EXPO DDR5 memory kits will launch at the same time as AMD’s Ryzen 7000 desktop processors and AMD X670E and X670 motherboards: September 27th. None of the memory vendors have provided us with any pricing at the time of writing.

Source: AMD, Corsair, & G.Skill

Source: AnandTech – AMD EXPO Memory Technology: One Click Overclocking Profiles For Ryzen 7000