Without much fanfare, Western Digital this week introduced its first dual actuator hard drive, a 20TB drive that is designed to offer SATA SSD-like sequential read/write performance. The Ultrastar DC HS760 drive is meant to increase IOPS-per-terabyte performance for hyperscale cloud datacenters and will compete against Seagate’s dual actuator Exos 2X family of HDDs. Meanwhile, Western Digital’s offering will also mark the latest deployment of the company’s OptiNAND technology.

The dual actuator Ultrastar DC HS760 HDD builds upon the company’s single actuator Ultrastar DC HC560 drive, which uses nine 2.2TB ePMR (energy-assisted perpendicular magnetic recording) platters. With the HS760, however, WD adds a second actuator, essentially splitting the drive into two quasi-independent drives, with each half having domain over 4.5 platters (9 surfaces). By doubling the number of independent actuators, Western Digital claims that the HS760 is able to double sequential read/write speeds and increase random read/write performance by 1.7 times versus single actuator drives.
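The capacity split can be checked with a few lines of arithmetic. This is a minimal sketch using the platter figures quoted above. It assumes two recording surfaces per platter (which the "4.5 platters (9 surfaces)" figure implies) and treats 9 × 2.2TB = 19.8TB as the raw figure behind the 20TB label, which is an assumption about rounding.

```python
# Capacity split of a dual actuator drive, using the figures from the text.
PLATTERS = 9
TB_PER_PLATTER = 2.2
SURFACES_PER_PLATTER = 2  # assumption: both sides of each platter are recorded
ACTUATORS = 2


def actuator_split(platters, tb_per_platter, surfaces_per_platter, actuators):
    """Return (raw TB, surfaces per actuator, TB per actuator)."""
    total_tb = platters * tb_per_platter
    surfaces = platters * surfaces_per_platter
    return total_tb, surfaces / actuators, total_tb / actuators


total, surf, per = actuator_split(PLATTERS, TB_PER_PLATTER, SURFACES_PER_PLATTER, ACTUATORS)
print(f"raw capacity: {total:.1f} TB")      # 19.8 TB, sold as a 20TB drive
print(f"surfaces per actuator: {surf:g}")   # 9
print(f"TB per actuator: {per:.1f}")        # 9.9, i.e. roughly a 10TB drive per half
```

Each actuator therefore serves roughly the capacity of a 10TB drive, which is why the comparisons below are made against pairs of 10TB devices.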

While the company has yet to upload a datasheet for its dual actuator HDD, we are looking at sequential throughput rates of around 582 MB/s, which, interestingly enough, is a tad faster than SATA SSDs, which top out at around 550 MB/s on SATA-III. It’s worth noting, though, that as is typical for enterprise-focused hard drives, Western Digital is using Serial Attached SCSI (SAS) here, so it won’t be possible to hook the drive up to a SATA host.
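The ~550 MB/s SATA SSD ceiling follows from SATA-III's link encoding. SATA-III signals at 6 Gbit/s with 8b/10b encoding, so ten line bits carry eight data bits, and the remaining gap to real-world drive speeds is protocol overhead. The sketch below works that out in SI units (1 MB = 10^6 bytes). The per-actuator figure assumes the reported 582 MB/s splits evenly between the two halves, which is an assumption rather than a published number.

```python
# SATA-III: 6 Gbit/s line rate, 8b/10b encoding (8 data bits per 10 line bits).
SATA3_LINE_RATE_MBIT_S = 6000
HS760_SEQ_MB_S = 582  # sequential rate reported in the text


def sata3_payload_mb_per_s() -> float:
    """Theoretical SATA-III payload bandwidth in MB/s, before protocol overhead."""
    payload_mbit_s = SATA3_LINE_RATE_MBIT_S * 8 / 10
    return payload_mbit_s / 8  # 8 bits per byte


# Assumption: both actuators contribute equally to sequential throughput.
per_actuator = HS760_SEQ_MB_S / 2

print(sata3_payload_mb_per_s())  # 600.0
print(per_actuator)              # 291.0
```

Even the theoretical 600 MB/s payload ceiling leaves little headroom above 582 MB/s, while SAS links are not constrained by it.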

Since the two actuators inside Western Digital’s Ultrastar DC HS760 HDD work independently, the unit presents itself as two logical unit number (LUN) devices, and both logical hard drives are independently addressable. This means that datacenters will have to introduce certain software tweaks (i.e., these drives are not drop-in compatible with infrastructure designed for single actuator HDDs). In exchange for the added complexity on the software and configuration side, data center operators are promised not only the aforementioned higher performance levels, but also a setup that is 37% more energy efficient in terms of IOPS-per-Watt than two 10TB devices. In essence, hyperscalers are getting many of the benefits of two current-generation 10TB HDDs in a product that takes up the space of just a single drive.
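Because the drive appears as two LUNs, host software has to decide which LUN receives which data. Below is a minimal sketch of one possible policy, hash-based placement. The `Lun` and `DualLunDrive` classes are hypothetical and model each LUN as an in-memory dictionary. A real deployment would address the LUNs as separate SCSI logical units or OS block devices, and this is not Western Digital's recommended software stack.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Lun:
    """In-memory stand-in for one independently addressable LUN (hypothetical model)."""
    lun_id: int
    capacity_tb: float
    objects: dict = field(default_factory=dict)


class DualLunDrive:
    """Hypothetical host-side wrapper that treats one dual actuator drive as two LUNs."""

    def __init__(self, capacity_tb_per_lun: float = 10.0):
        self.luns = [Lun(0, capacity_tb_per_lun), Lun(1, capacity_tb_per_lun)]

    def lun_for(self, key: str) -> Lun:
        # SHA-256 is deterministic, so a key maps to the same LUN on every run.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return self.luns[digest[0] % len(self.luns)]

    def put(self, key: str, value: bytes) -> int:
        lun = self.lun_for(key)
        lun.objects[key] = value
        return lun.lun_id

    def get(self, key: str):
        return self.lun_for(key).objects.get(key)


drive = DualLunDrive()
for i in range(1000):
    drive.put(f"object-{i}", b"payload")

counts = [len(lun.objects) for lun in drive.luns]
print(counts)  # a roughly even split between LUN 0 and LUN 1
```

Spreading keys across both LUNs lets both actuators service requests concurrently. A naive scheme that fills LUN 0 before touching LUN 1 would tend to leave one actuator idle and forfeit much of the performance gain.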

The key advantage of Western Digital’s Ultrastar DC HS760 20TB over single-actuator hard drives of the same capacity is significantly higher performance on an IOPS-per-TB basis. Typical enterprise-grade 3.5-inch HDDs with capacities between 8TB and 16TB offer random performance of 6 to 10 IOPS per terabyte, which is enough to maintain datacenter quality of service (QoS). But at 20TB, random performance drops to less than 5 IOPS per terabyte, which forces hyperscalers to introduce various mitigations to ensure that these drives meet their QoS requirements.
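IOPS per terabyte is simply a drive's random IOPS divided by its capacity. Because a single actuator's random IOPS is bounded by seek and rotational latency, it stays roughly flat as capacity grows, so the ratio falls. This is a simplification. The sketch uses a hypothetical per-drive figure of 90 random IOPS (not a published spec), chosen so the results land inside the ranges quoted above, and applies Western Digital's claimed 1.7x random-performance uplift for the dual actuator case.

```python
def iops_per_tb(random_iops: float, capacity_tb: float) -> float:
    """Random IOPS delivered per terabyte of capacity."""
    return random_iops / capacity_tb


# HYPOTHETICAL per-drive random IOPS, for illustration only.
SINGLE_ACTUATOR_IOPS = 90.0
DUAL_ACTUATOR_GAIN = 1.7  # Western Digital's claimed uplift for two actuators

# Simplification: the same mechanical IOPS spread over more terabytes.
for capacity in (10, 20):
    ratio = iops_per_tb(SINGLE_ACTUATOR_IOPS, capacity)
    print(f"{capacity} TB, single actuator: {ratio:.2f} IOPS/TB")

dual = iops_per_tb(SINGLE_ACTUATOR_IOPS * DUAL_ACTUATOR_GAIN, 20)
print(f"20 TB, dual actuator:   {dual:.2f} IOPS/TB")
```

Under these assumptions, the second actuator lifts a 20TB drive from below 5 IOPS/TB back into the 6 to 10 IOPS/TB band that smaller drives occupy.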

Such mitigations include implementing command queuing and latency-bounded I/O (LBIO) in firmware, using lower-capacity drives, reducing usable capacity per drive, or adding sophisticated caching methods. All of these methods increase upfront costs, total cost of ownership, or both. Therefore, hyperscalers need drives that can physically increase their IOPS-per-terabyte performance, and dual actuator HDDs are a natural answer. As an added bonus, these hard drives also offer twice the sequential read/write speed of single-actuator HDDs.
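The "reducing usable capacity per drive" mitigation has a directly calculable cost: to meet an IOPS-per-TB floor, a drive can expose at most `IOPS / target` terabytes, and the rest is stranded. This is a minimal sketch in which both the 6 IOPS/TB target and the 90 IOPS single-actuator figure are hypothetical. The dual actuator case again applies Western Digital's claimed 1.7x uplift.

```python
def max_usable_tb(random_iops: float, capacity_tb: float, target_iops_per_tb: float) -> float:
    """Largest capacity a drive can expose while still meeting an IOPS-per-TB target."""
    return min(capacity_tb, random_iops / target_iops_per_tb)


CAPACITY_TB = 20.0
TARGET = 6.0                    # HYPOTHETICAL QoS floor, in IOPS per TB
SINGLE_IOPS = 90.0              # HYPOTHETICAL single actuator random IOPS
DUAL_IOPS = SINGLE_IOPS * 1.7   # Western Digital's claimed random-performance uplift

for label, iops in (("single", SINGLE_IOPS), ("dual", DUAL_IOPS)):
    usable = max_usable_tb(iops, CAPACITY_TB, TARGET)
    stranded = CAPACITY_TB - usable
    print(f"{label}: usable {usable:.1f} TB, stranded {stranded:.1f} TB")
```

With these illustrative numbers, the single actuator drive strands a quarter of its capacity to hit the target, while the dual actuator drive can expose all 20TB.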

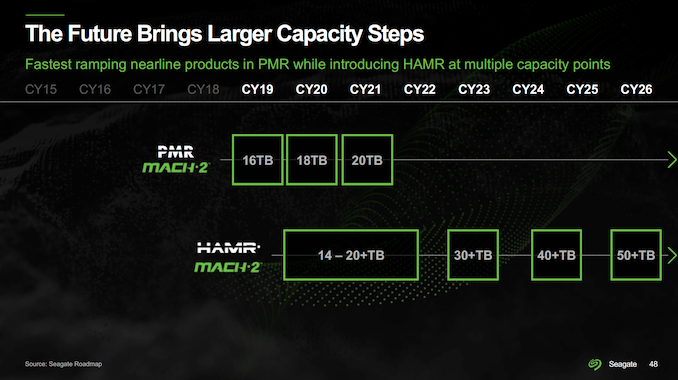

As noted above, Western Digital is not the only company to offer dual actuator HDDs; Seagate has been doing so for years now. But Western Digital’s Ultrastar DC HS760 has an advantage over its rivals in the form of OptiNAND technology, which pairs an integrated iNAND UFS embedded flash drive (EFD) with firmware tweaks. OptiNAND is meant to increase the capacity, reliability, and performance of HDDs. While Western Digital has yet to disclose performance numbers for its Ultrastar DC HS760 drives, it is at least evident that its 20TB drive will offer more capacity than Seagate’s competing 18TB Exos 2X18.

Otherwise, given that the HS760 is aimed primarily at hyperscalers, Western Digital is treating the drive as a product for a limited audience. Although the drive is listed on the company’s website, for example, there is no public pricing, and buyers will have to make a sales inquiry. So actual unit pricing on the new drive will vary depending on factors such as order volumes and agreements between Western Digital and its clients.

Western Digital’s Ultrastar DC HS760 HDD will be covered by a five-year warranty, with each LUN rated for a 500 TB annual workload.
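The per-LUN workload rating can be turned into drive-level and daily budgets. This sketch assumes three things: the two per-LUN ratings simply add up for the whole drive, each LUN holds roughly 10TB, and the rating counts data both read and written (as Western Digital's HDD workload ratings generally do). None of these figures is an official drive-level spec.

```python
LUNS = 2
TB_PER_LUN_PER_YEAR = 500   # rating quoted in the text
WARRANTY_YEARS = 5
LUN_CAPACITY_TB = 10        # assumption: roughly half of the 20TB drive


def workload_budget(luns, tb_per_lun_year, years, lun_capacity_tb):
    """Return (drive TB/year, drive TB over warranty, TB/day per LUN, full passes/year per LUN)."""
    drive_per_year = luns * tb_per_lun_year
    over_warranty = drive_per_year * years
    per_lun_per_day = tb_per_lun_year / 365
    passes_per_lun_year = tb_per_lun_year / lun_capacity_tb
    return drive_per_year, over_warranty, per_lun_per_day, passes_per_lun_year


per_year, total, per_day, passes = workload_budget(
    LUNS, TB_PER_LUN_PER_YEAR, WARRANTY_YEARS, LUN_CAPACITY_TB
)
print(per_year)          # 1000 TB per year for the whole drive
print(total)             # 5000 TB (5 PB) over the five-year warranty
print(f"{per_day:.2f}")  # about 1.37 TB per LUN per day
print(passes)            # 50.0 full passes over each LUN per year
```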

Source: AnandTech – Western Digital Unveils Dual Actuator Ultrastar DC HS760 20TB HDD