Qualcomm this morning is taking the wraps off its next-generation Snapdragon 4-class mobile SoC, the aptly named Snapdragon 4 Gen 2. The company’s new entry-level SoC for smartphones and other devices incorporates hardware updates that should modestly boost performance for the most cost-sensitive segment of the mobile market. It also brings Qualcomm’s overall Snapdragon product stack into closer alignment by supporting more recent 5G standards and by fabbing the chip on a newer process node.

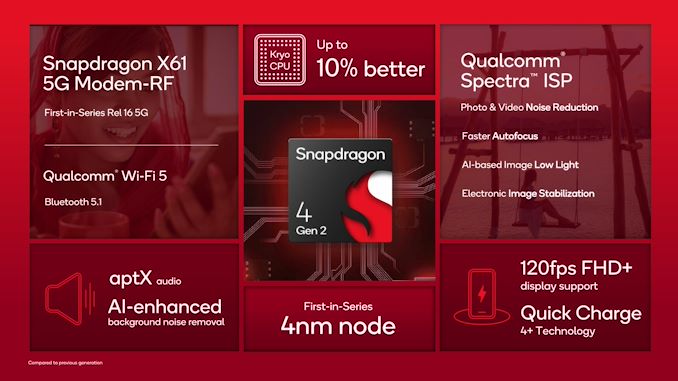

First and foremost, the top line on performance is that Qualcomm is touting a 10% boost in CPU performance, thanks to higher CPU core clockspeeds. The Snapdragon 4 Gen 2 uses the same 2+6 CPU core configuration as its predecessor, the Snapdragon 4 Gen 1, with 2 Cortex-A78-derived Kryo CPU cores paired with 6 Cortex-A55-derived cores. Thanks to a node shrink, Qualcomm has boosted the top clockspeeds on each set of cores by about 10%, bringing the A78 cores up to 2.2GHz, and the A55 cores up to 2.0GHz. So the 10% CPU performance gain should be fairly consistent in both single and multi-threaded workloads.
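To make the clockspeed math explicit, here is a small Python sketch that computes the per-cluster frequency uplift from the peak clocks Qualcomm lists for the 4 Gen 1 and 4 Gen 2. The helper name `clock_uplift_pct` is hypothetical, and treating the clock increase as a stand-in for the performance increase assumes performance scales linearly with frequency, which real workloads only approximate.

```python
# Peak CPU clocks (GHz) from the spec table: (Cortex-A78 cluster, Cortex-A55 cluster).
CLOCKS_GHZ = {
    "Snapdragon 4 Gen 1": (2.0, 1.8),
    "Snapdragon 4 Gen 2": (2.2, 2.0),
}


def clock_uplift_pct(old_ghz: float, new_ghz: float) -> float:
    """Percentage increase in peak clockspeed going from old_ghz to new_ghz.

    Hypothetical helper; assumes performance tracks frequency linearly.
    """
    return (new_ghz - old_ghz) / old_ghz * 100.0


old = CLOCKS_GHZ["Snapdragon 4 Gen 1"]
new = CLOCKS_GHZ["Snapdragon 4 Gen 2"]
for cluster, o, n in zip(("Cortex-A78", "Cortex-A55"), old, new):
    print(f"{cluster}: {o:.1f} -> {n:.1f} GHz (+{clock_uplift_pct(o, n):.1f}%)")
```

Run as-is, this reports a 10% bump for the Cortex-A78 cluster and roughly 11% for the Cortex-A55 cluster, in line with Qualcomm’s "about 10%" figure.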

Architecturally, there’s little to say about these cores that hasn’t been said already. They are reasonably performant CPU cores, though their age and modest clockspeeds limit their performance potential. The ultra-budget nature of the Snapdragon 4 series means this will be the last part of Qualcomm’s stack to move to any kind of Armv9 core. Interestingly, though, the 4 Gen 2 does get a bit of a leg up over the 6 Gen 1 when it comes to the A55 cores: the 6G1’s small cores only boost to 1.8GHz, despite being fabbed on the same process node. So this will narrow the CPU performance gap between those parts a bit (at least until the obligatory 6 Gen 2 lands).

Meanwhile, Qualcomm is staying mum on GPU performance. The company stopped giving their GPUs external product numbers a few years ago, and for the Snapdragon 4 release they are not even issuing performance expectations. So we aren’t expecting any gains here, especially as the chip is still meant to drive HD/FHD devices.

Qualcomm Snapdragon 4-Series SoCs

| SoC | Snapdragon 4 Gen 2 (SM4450) | Snapdragon 4 Gen 1 (SM4375) | Snapdragon 480 (SM4350) |
|---|---|---|---|
| CPU | 2x CA78 @ 2.2GHz, 6x CA55 @ 2.0GHz | 2x CA78 @ 2.0GHz, 6x CA55 @ 1.8GHz | 2x CA76 @ 2.0GHz, 6x CA55 @ 1.8GHz |
| GPU | Adreno | Adreno | Adreno 619 |
| DSP | Hexagon | Hexagon | Hexagon 686 |
| ISP/Camera | Spectra (2x 12-bit): 1x 108MP, or 32MP with ZSL, or 16+16MP with ZSL | Spectra (3x 12-bit): 1x 108MP, or 32MP with ZSL, or 25+13MP with ZSL, or 3x 13MP with ZSL | Spectra 345 (3x 12-bit): 1x 64MP, or 25+13MP, or 3x 13MP |
| Encode/Decode | 1080p60 H.264, H.265, VP9 (decode-only) | 1080p60 H.264, H.265, VP9 (decode-only) | 1080p60 H.264, H.265, VP9 (decode-only) |
| Memory | 2x 16-bit @ 3200MHz LPDDR5X (25.6GB/s), or 2x 16-bit @ 2133MHz LPDDR4X (17.0GB/s) | 2x 16-bit @ 2133MHz LPDDR4X (17.0GB/s) | 2x 16-bit @ 2133MHz LPDDR4X (17.0GB/s) |
| Storage | UFS 3.1 (2-lane) | UFS 2.2, eMMC 5.1 | UFS 2.2, eMMC 5.1 |
| Integrated Modem | X61 integrated; LTE: DL = 800Mbps, UL = 210Mbps; 5G NR Sub-6 (100MHz): DL = 2500Mbps, UL = 900Mbps | X51 integrated; LTE: DL = 800Mbps, UL = 210Mbps; 5G NR Sub-6 + mmWave (100MHz): DL = 2500Mbps, UL = 900Mbps | X51 integrated; LTE: DL = 800Mbps, UL = 210Mbps; 5G NR Sub-6 + mmWave (100 + 200MHz): DL = 2500Mbps, UL = 660Mbps |
| Wi-Fi/BT | Wi-Fi 5 (2×2), Bluetooth 5.1 | Wi-Fi 5 (2×2), Bluetooth 5.2 | Wi-Fi 5 (2×2), Bluetooth 5.1 |
| Mfc. Process | Samsung 4nm | TSMC 6nm | Samsung 8nm LPP |

As noted earlier, Qualcomm has moved its entry-level SoC to a Samsung 4nm process (given the timing, 4LPP, we’d assume). That means that Qualcomm’s entire current product stack (Snapdragons 8G2, 7+G2, 6G1, and 4G2) is made on a 4nm node of some flavor. Of the two foundries’ 4nm processes, Samsung’s is the less performant, but it’s almost certainly the cheaper one, which is a boon for these entry-level, price-constrained parts. Academically, I’m curious just how small the Snapdragon 4 die is on this process, as it should be rather tiny, but that’s not a detail Qualcomm normally shares.

Otherwise, this latest Snapdragon 4-class SoC is a bit of a give-and-take release as compared to the 4 Gen 1. On the memory side of matters, the new SoC incorporates Qualcomm’s newer controller design, bringing LPDDR5X support to a 4-class chip for the first time. Like other Qualcomm SoCs, the chip can run that memory at up to LPDDR5X-6400 speeds. Meanwhile, LPDDR4X remains supported as well, at the usual LPDDR4X-4266 data rate. The memory bus is still a total of 32-bits wide, so you’re looking at a peak memory bandwidth of 25.6GB/second with LPDDR5X, a full 50% more bandwidth than what the 4 Gen 1 could provide.
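The bandwidth figures follow from simple arithmetic: bus width in bytes times the data rate. The Python sketch below reproduces them, assuming the table’s 3200MHz and 2133MHz figures are double-data-rate I/O clocks (hence 6400 and 4266 MT/s) and using decimal gigabytes. `peak_bandwidth_gbs` is a hypothetical helper, and the results are theoretical peaks, not sustained throughput.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s.

    bytes per transfer (bus width / 8) x transfers per second (MT/s x 1e6).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps * 1e6 / 1e9


# Two 16-bit channels give a 32-bit memory bus in total.
BUS_WIDTH_BITS = 2 * 16

# Assumption: DDR signaling, so transfer rate = 2x the listed clock.
lpddr5x = peak_bandwidth_gbs(BUS_WIDTH_BITS, 6400)  # LPDDR5X-6400 (3200MHz clock)
lpddr4x = peak_bandwidth_gbs(BUS_WIDTH_BITS, 4266)  # LPDDR4X-4266 (2133MHz clock)

print(f"LPDDR5X-6400: {lpddr5x:.2f} GB/s")
print(f"LPDDR4X-4266: {lpddr4x:.2f} GB/s")
print(f"Uplift: {(lpddr5x / lpddr4x - 1) * 100:.0f}%")
```

The LPDDR4X result comes out to about 17.06GB/s, which the table lists as 17.0GB/s; the LPDDR5X configuration works out to roughly 1.5x that, matching the 50% figure above.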

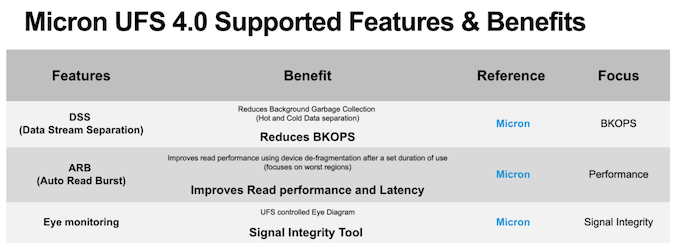

The storage controller has also been updated to support UFS 3.1, finally bringing Qualcomm’s low-end chip up to a relatively modern version of the flash storage standard. Compared to the UFS 2.2 spec supported by the previous chip, UFS 3.1 roughly doubles the bandwidth per lane, so in a two-lane configuration the 4G2 can theoretically transfer as much as 2.9GB/second of data, though Qualcomm isn’t making any performance claims. Notably, eMMC storage support is absent from Qualcomm’s specification sheet, indicating that support for that now well-outdated interface standard is finally riding off into the sunset.
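The 2.9GB/second figure can be reproduced the same way. The sketch below assumes per-lane signaling rates of about 5.8Gbit/s for UFS 2.2 (M-PHY HS-Gear3) and about 11.6Gbit/s for UFS 3.1 (HS-Gear4); these rates are our assumption, not numbers from Qualcomm, and `raw_link_rate_gbs` is a hypothetical helper. The result is a raw link rate that ignores line-coding and protocol overhead, so real-world sequential transfers will be noticeably slower.

```python
# Assumed approximate per-lane signaling rates in Gbit/s (not Qualcomm figures).
LANE_RATE_GBPS = {
    "UFS 2.2": 5.8,   # roughly M-PHY HS-Gear3
    "UFS 3.1": 11.6,  # roughly M-PHY HS-Gear4
}


def raw_link_rate_gbs(lane_rate_gbps: float, lanes: int) -> float:
    """Raw link rate in GB/s: lanes x Gbit/s per lane / 8 bits per byte.

    Ignores line coding and protocol overhead, so usable throughput is lower.
    """
    return lanes * lane_rate_gbps / 8


for spec, rate in LANE_RATE_GBPS.items():
    print(f"{spec}, 2 lanes: {raw_link_rate_gbs(rate, 2):.2f} GB/s raw")
```

Under these assumed rates, a two-lane UFS 3.1 link lands at 2.9GB/s, versus 1.45GB/s for two lanes of UFS 2.2.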

As for Qualcomm’s imaging ISPs and related hardware, the 4 Gen 2 takes a step backwards by removing the third camera pipeline, so it will only support up to two cameras. The Spectra ISPs themselves are seemingly unchanged, with Qualcomm still using its 12-bit designs. Because of that change, the SoC’s overall imaging capabilities have shifted a bit, even in a two-camera configuration: the resolutions supported in dual-camera zero shutter lag (ZSL) mode are now balanced, with the SoC supporting up to 16+16MP rather than 25+13MP.

Though with fewer camera modules to support, Qualcomm is touting better camera performance. In particular, Qualcomm says that the new SoC offers faster autofocus support than the 4 Gen 1, as well as an updated electronic image stabilization feature. The 4 Gen 2 also includes an “AI-enhanced” low light feature, and multi-camera temporal filtering (MCTF) support has been baked into the SoC for noise reduction.

The other major hardware improvement for the SoC is an upgrade to a Snapdragon X60-series class modem. New to Qualcomm’s product lineup is the Snapdragon X61 modem, a downscaled version of the X62/X65 modems seen in other chips. Relative to the outgoing 4 Gen 1 SoC and its X51 modem, the X61 supports the newer Release 16 version (aka 5G Phase 2) of the 3GPP standard family.

Release 16 is now coming up on 3 years old, so this is a needed update to keep up with 5G network development. Though because the primary benefits of Release 16 are more for the network operations side than the consumer side, phone buyers don’t often see much from it besides some improvements in coverage. In the case of the 4 Gen 2, the actual bandwidth figures are unchanged from the 4 Gen 1, which means downloads and uploads top out at 2.5Gbps and 900Mbps respectively.

mmWave support has also remained off of Qualcomm’s spec sheets this time around. On the 4 Gen 1 it ended up being an unlisted feature of sorts – the hardware was there in case any handset vendor wanted to use it, but Qualcomm didn’t advertise it – and it’s unclear whether Qualcomm even bothered with the hardware for this new silicon.

Also of note: Bluetooth 5.2 support has disappeared from Qualcomm’s specifications. Whereas the 4 Gen 1 supported BT 5.2, the 4 Gen 2 is listed across Qualcomm’s documentation as supporting only BT 5.1. This is notable not only because it’s a regression, but because Bluetooth Low Energy Audio is a 5.2 feature, and arguably the defining feature of 5.2. At this point it’s unclear why Qualcomm would want to remove the feature from its low-end SoC (I suspected royalties, but the LC3 codec for LE Audio is part of the BT license). Otherwise, the Wi-Fi half of Qualcomm’s radio system remains unchanged, with Wi-Fi 5 support.

Wrapping things up, Qualcomm tells us that several of the usual suspects will be adopting the Snapdragon 4 Gen 2, including Redmi and vivo. Handsets based on the SoC are expected to be announced in the second half of this year.

Source: AnandTech – Qualcomm Unveils Snapdragon 4 Gen 2: Modest Modernization For Low-End Mobiles