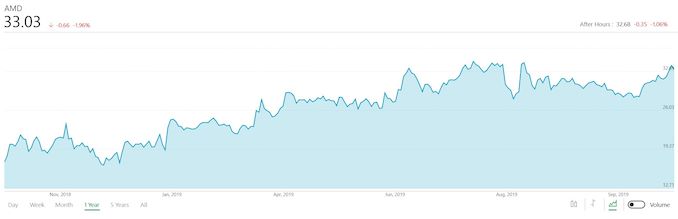

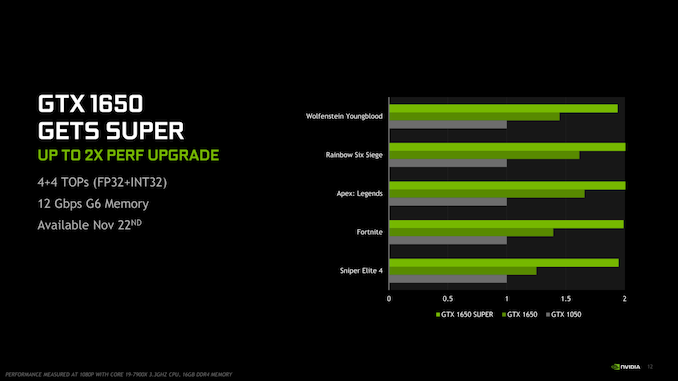

Alongside today’s GeForce GTX 1660 Super launch, NVIDIA is also taking the wraps off one more GeForce Super card. Having already given a Super mid-generation refresh to most of their lineup, they will now be giving it to one of their last untouched product lineups, the GTX 1650 series. The resulting product, the GeForce GTX 1650 Super, promises to be an interesting card when it actually launches next month on November 22nd, as NVIDIA will be aiming significantly higher than the original GTX 1650 that it supplants. And it will be just in time to do combat with AMD’s Radeon RX 5500 series.

NVIDIA GeForce Specification Comparison

| | GTX 1660 | GTX 1650 Super | GTX 1650 | GTX 1050 Ti |
|---|---|---|---|---|
| CUDA Cores | 1408 | 1280 | 896 | 768 |
| ROPs | 48 | 32 | 32 | 32 |
| Core Clock | 1530MHz | 1530MHz | 1485MHz | 1290MHz |
| Boost Clock | 1785MHz | 1725MHz | 1665MHz | 1392MHz |
| Memory Clock | 8Gbps GDDR5 | 12Gbps GDDR6 | 8Gbps GDDR5 | 7Gbps GDDR5 |
| Memory Bus Width | 192-bit | 128-bit | 128-bit | 128-bit |
| VRAM | 6GB | 4GB | 4GB | 4GB |
| Single Precision Perf. | 5 TFLOPS | 4.4 TFLOPS | 3 TFLOPS | 2.1 TFLOPS |
| TGP | 120W | 100W | 75W | 75W |
| GPU | TU116 (284 mm²) | TU116 (284 mm²) | TU117 (200 mm²) | GP107 (132 mm²) |
| Transistor Count | 6.6B | 6.6B | 4.7B | 3.3B |
| Architecture | Turing | Turing | Turing | Pascal |
| Manufacturing Process | TSMC 12nm “FFN” | TSMC 12nm “FFN” | TSMC 12nm “FFN” | Samsung 14nm |
| Launch Date | 03/14/2019 | 11/22/2019 | 04/23/2019 | 10/25/2016 |
| Launch Price | $219 | TBA | $149 | $139 |

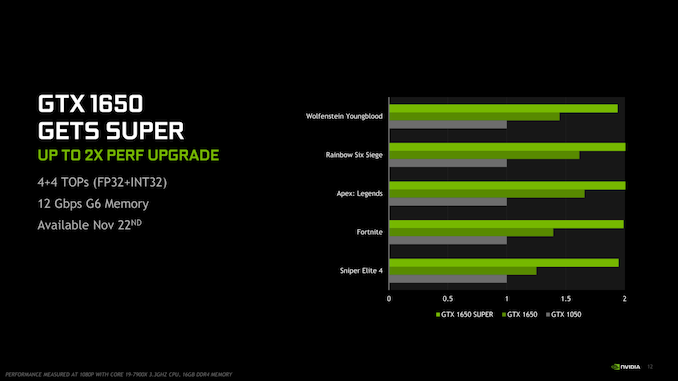

Like the other Super cards this year, the GTX 1650 Super is intended to be a mid-generation kicker for the GeForce family. However, unlike the other Super cards, NVIDIA is giving the GTX 1650 Super a much bigger jump in performance. With a planned increase in GPU throughput of 46%, and paired with faster 12Gbps GDDR6 memory, the new card should, relatively speaking, be much farther ahead of the GTX 1650 than the GTX 1660 Super launching today is ahead of the GTX 1660.

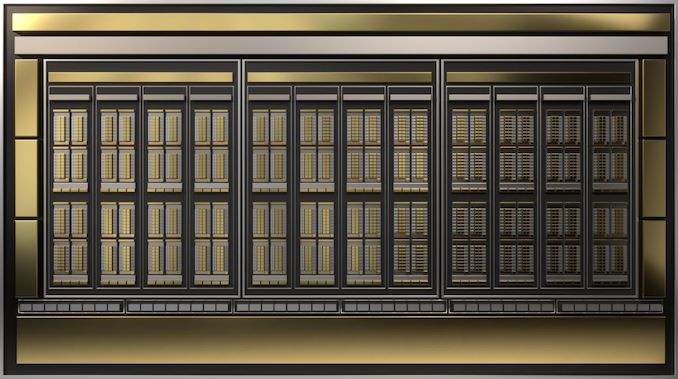

The single biggest change here is the GPU. While NVIDIA is calling the card a GTX 1650, in practice it’s more like a GTX 1660 LE; NVIDIA has brought in the larger, more powerful TU116 GPU from the GTX 1660 series to fill out this card. There are cost and power consequences to this, but the payoff is that it gives NVIDIA a lot more SMs and CUDA Cores to work with. Coupled with that is a small bump in clockspeeds, which pushes the on-paper shader/compute throughput numbers up by just over 46%.
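For readers who want to check the on-paper math, here is a minimal sketch of the usual peak-throughput estimate. It assumes the conventional formula of one fused multiply-add (two FLOPs) per CUDA core per clock at the rated boost clock; the function and variable names are purely illustrative, and the inputs come from the spec table above.

```python
# Spec values taken from the comparison table; names are illustrative only.
CARDS = {
    "GTX 1650 Super": {"cuda_cores": 1280, "boost_mhz": 1725},
    "GTX 1650": {"cuda_cores": 896, "boost_mhz": 1665},
}


def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming 2 FLOPs (one FMA) per core per clock."""
    flops_per_second = cuda_cores * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


super_tflops = fp32_tflops(**CARDS["GTX 1650 Super"])
base_tflops = fp32_tflops(**CARDS["GTX 1650"])
uplift = super_tflops / base_tflops - 1.0

print(f"GTX 1650 Super: {super_tflops:.2f} TFLOPS")
print(f"GTX 1650:       {base_tflops:.2f} TFLOPS")
print(f"On-paper uplift: {uplift:.1%}")
```

Worked from the unrounded clocks, this estimate lands at about 48%; dividing the rounded table figures (4.4 TFLOPS by 3 TFLOPS) gives a little under 47%, which is where the 46% figure comes from. Either way, real cards rarely sit exactly at their rated boost clock, so treat this as a ceiling rather than a prediction.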

Such a large jump in GPU throughput also requires a lot more memory bandwidth to feed the beast. As a result, just like the GTX 1660 Super, the GTX 1650 Super is getting the GDDR6 treatment as well. Here NVIDIA is using slightly slower (and lower-power) 12Gbps GDDR6, which will be attached to the GPU via a neutered 128-bit memory bus. Still, this one change will give the GTX 1650 Super 50% more memory bandwidth than the vanilla GTX 1650, very close to its increase in shader throughput.
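The bandwidth figure falls straight out of the table: peak memory bandwidth is the per-pin data rate multiplied by the bus width, divided by eight to convert bits to bytes. The sketch below uses a hypothetical helper name and the data rates and bus widths listed above.

```python
def bandwidth_gb_per_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate (Gbps) x bus width / 8."""
    return data_rate_gbps * bus_width_bits / 8


gtx_1650_super_bw = bandwidth_gb_per_s(12, 128)  # 12Gbps GDDR6, 128-bit
gtx_1650_bw = bandwidth_gb_per_s(8, 128)         # 8Gbps GDDR5, 128-bit
gtx_1660_bw = bandwidth_gb_per_s(8, 192)         # 8Gbps GDDR5, 192-bit

gain = gtx_1650_super_bw / gtx_1650_bw - 1.0

print(f"GTX 1650 Super: {gtx_1650_super_bw:.0f} GB/s")
print(f"GTX 1650:       {gtx_1650_bw:.0f} GB/s")
print(f"GTX 1660:       {gtx_1660_bw:.0f} GB/s")
print(f"Gain over GTX 1650: {gain:.0%}")
```

By this arithmetic, the narrower bus with faster memory ends up with the same peak bandwidth as the vanilla GTX 1660's 192-bit GDDR5 configuration.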

Do note, however, that not all aspects of the GPU are being scaled out to the same degree. In particular, the GTX 1650 Super will still only have 32 ROPs, with the rest of TU116’s ROPs getting cut off along with its spare memory channels. This means that while the GTX 1650 Super will have 46% more shader performance, it will only have 4% more ROP throughput for pushing pixels. Counterbalancing this to a degree will be the big jump in memory bandwidth, which will keep those 32 ROPs well-fed, but at the end of the day the GPU is getting an uneven increase in resources, and gaming performance gains are likely to reflect this.
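To put a number on that imbalance, the sketch below estimates peak pixel fill rate under the simplifying assumption that each ROP writes one pixel per clock at the rated boost clock. The helper name is hypothetical, and the ROP counts and clocks come from the spec table.

```python
def pixel_fill_gpix_per_s(rops: int, boost_mhz: float) -> float:
    """Peak pixel fill rate in Gpixels/s, assuming 1 pixel per ROP per clock."""
    return rops * boost_mhz * 1e6 / 1e9


super_fill = pixel_fill_gpix_per_s(32, 1725)  # GTX 1650 Super
base_fill = pixel_fill_gpix_per_s(32, 1665)   # GTX 1650

rop_gain = super_fill / base_fill - 1.0

print(f"GTX 1650 Super: {super_fill:.1f} Gpix/s")
print(f"GTX 1650:       {base_fill:.1f} Gpix/s")
print(f"ROP throughput gain: {rop_gain:.1%}")
```

With the ROP count unchanged, the only gain comes from the boost clock, which works out to roughly 3.6% and rounds to the 4% quoted above.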

The drawback to all of this, then, is power consumption. While the original GTX 1650 is a 75 Watt card – making it the fastest thing that can be powered solely by a PCIe slot – the Super-sized card will be a 100 Watt card. This gives up the original GTX 1650’s unique advantage, and it means builders looking for even faster 75W cards won’t get their wish, but it’s the power that pays the cost of the GTX 1650 Super’s higher performance. Traditionally, NVIDIA has held pretty steadfast at 75W for their xx50 cards, so I’ll be curious to see what this means for consumer interest and sales; but then again at the end of the day, despite the name, this is closer to a lightweight GTX 1660 than it is a GTX 1650.

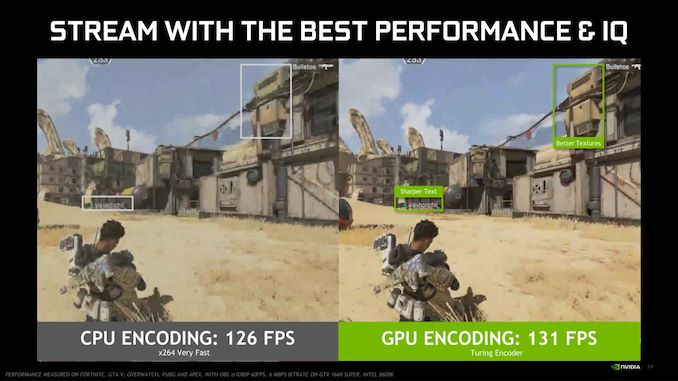

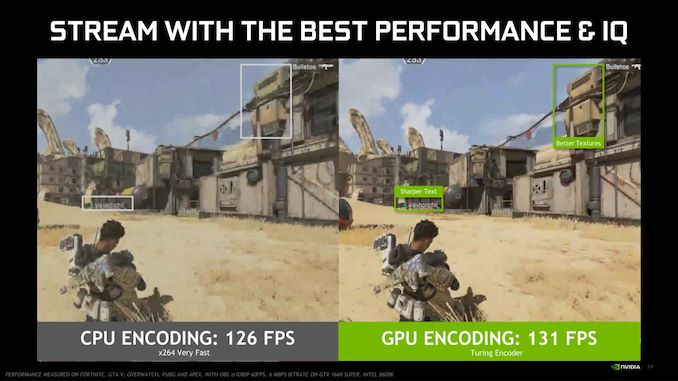

Speaking of hardware features, besides giving NVIDIA a good deal more in the way of GPU resources to play with, the switch from the TU117 GPU to the TU116 GPU will also have one other major ramification that some users will want to pay attention to: video encoding. Unlike TU117, which got the last-generation Volta NVENC video encoder block for die space reasons, TU116 gets the full-fat Turing NVENC video encoder block. Turing’s video encode block has been turning a lot of heads for its level of quality – while not archival grade, it’s competitive with x264 medium – which is important for streamers. The older encoder also led to TU117 and the GTX 1650 being a disappointment in some circles, as an otherwise solid video card was made far less useful for video encoding. So with the GTX 1650 Super, NVIDIA is resolving this in a roundabout way, thanks to the use of the more powerful TU116 GPU.

Moving on, the GTX 1650 Super is set to launch on November 22nd. And, while NVIDIA does not directly call out AMD in its product descriptions, the card’s configuration and timing make a very compelling case that this is meant to be NVIDIA’s answer to AMD’s impending Radeon RX 5500. The first Navi 14-based video card is set to launch to retail sometime this quarter, and in their promotional material, AMD has been comparing it to the vanilla GTX 1650. So adding a GTX 1650 Super card allows NVIDIA to get ahead, in a fashion, by making available another (relatively) cheap card that, knowing NVIDIA, they expect to outperform what AMD has in the works. Of course the proof is in the pudding, so to speak, and at this point we’re waiting on both AMD and NVIDIA to actually launch their respective products before we can see how the competing cards actually stack up.

The other major wildcard here will be pricing. While NVIDIA is announcing the full specifications of the GTX 1650 Super today, they are withholding pricing information. This admittedly isn’t unusual for NVIDIA (they rarely release it more than a few days in advance), but in this case in particular, both NVIDIA and AMD seem to be playing a bit of a game of chicken. Neither side has announced where their card will be priced, and it would seem that each is waiting on the other to go first so that they can counter with the best possible position for their respective card. Though with NVIDIA’s card not set to launch for another month, and AMD’s launch date more indeterminate still, we’re all going to be waiting for a while regardless.

At any rate, we’ll have more to talk about over the next month or so as the GTX 1650 Super and the rest of this holiday season’s video cards start hitting store shelves. So stay tuned.

Source: AnandTech – NVIDIA Announces GeForce GTX 1650 Super: Launching November 22nd