While the initial fervor over low-level graphics APIs has died down quite a bit since they first hit the scene in the middle of the last decade, API development is still alive and well. In fact, in many ways it’s better than ever – now that these APIs are accepted and stable, developers on both sides of the aisle can sink their teeth into the new options provided, and plot where to go in the coming years. All the while, OSes like Windows 7 are gone (but not forgotten), and a new generation of consoles is on the horizon. So in many ways, the next couple of years are when everything that has been put into motion over the last decade will finally come to fruition, as the baseline for graphics programming increasingly shifts to these low-level APIs.

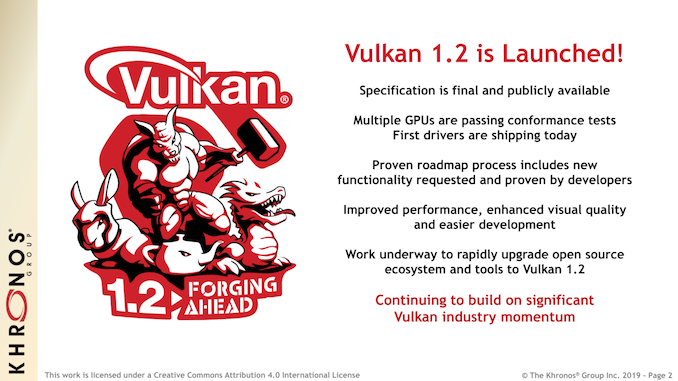

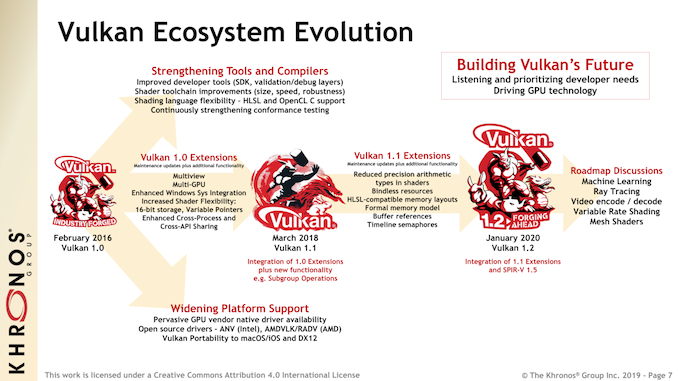

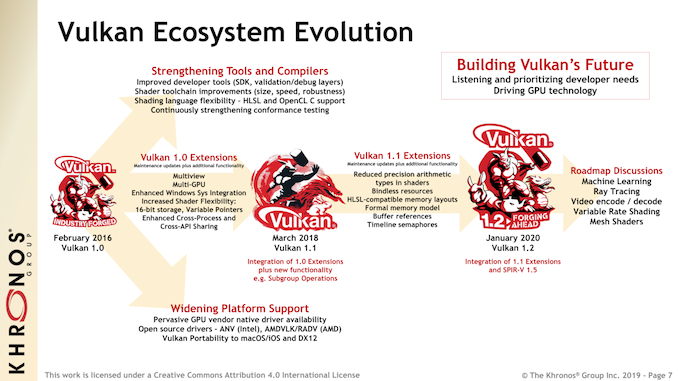

The work to do so is never done, of course. Even ignoring developments in graphics hardware itself, there is still plenty going on just in terms of programming. How to better extract the benefits of low-level programming, how to support new developer paradigms, how to ensure cross-platform compatibility, and so on are all active topics, especially within the Khronos consortium. The institution behind all APIs “Open” launched its own low-level graphics API, Vulkan, back in 2016, and has continued to iterate upon it ever since. 2018 saw Vulkan 1.1, and today is the formal launch of Vulkan 1.2.

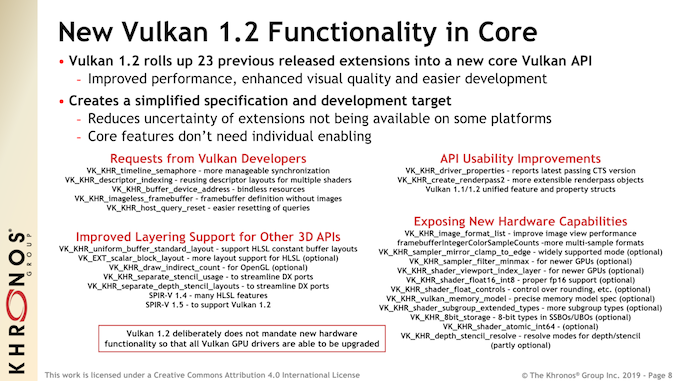

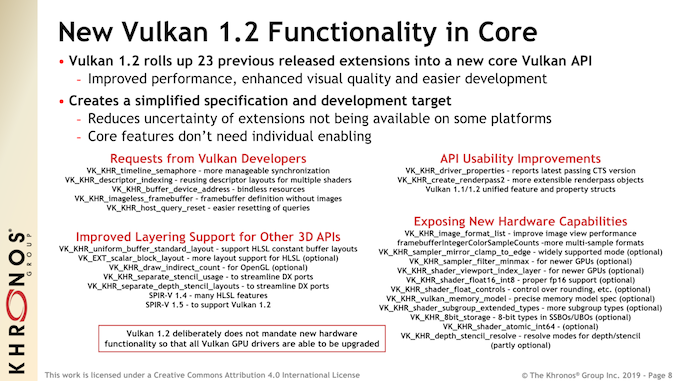

Like most Khronos projects, Vulkan is based on a constant progression of new ideas being suggested, implemented, tested, and finally rolled into the specification proper. As a result, Vulkan is never “done” – there’s always a new extension around the corner – but the sync points that are major releases represent a very important step in the development process. It’s here where extensions finally get their wings, in a sense, and get promoted into the core specification, ensuring their functionality and availability to programmers across all platforms with Vulkan support. So for Vulkan 1.2, today’s update sees the promotion of 23 extensions released in the last couple of years into the core API specification, with widespread availability set to quickly follow.

For better or worse, Vulkan 1.2 is very much a programmer-focused release. The new functionality is significant, as any programmer who has had the (mis)fortune of playing with semaphores can tell you, but today’s release isn’t about new hardware features. In fact, Vulkan 1.2 doesn’t mandate any new hardware functionality whatsoever, so it’s purely an in-place API upgrade that can be deployed on any hardware that supports Vulkan 1.1. To be sure, Khronos achieves this in part by making several of the new features optional – things like FP16 shaders – but there isn’t any big, new feature anchoring Vulkan 1.2.

This makes it very much a quality-of-life improvement for programmers (and platform owners), all of whom are getting better ways of doing things faster – they just aren’t necessarily getting ways to do new things. For the gaming crowds out there, marquee feature additions such as ray tracing, variable rate shading, and mesh shaders will eventually come, but Vulkan 1.2 is not that kind of release.
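To make the “in-place upgrade with optional features” point concrete, here is a minimal sketch of the check an application might run before relying on something like FP16 shaders. It assumes Vulkan’s usual packing of a version into a 32-bit integer (major above bit 22, a 10-bit minor, a 12-bit patch, with the variant field of newer headers left at zero). `shaderFloat16` is the name of the optional feature bit in Vulkan 1.2; the function names and the plain dictionary standing in for the driver’s feature report are hypothetical, and Python is used only for illustration.

```python
def make_version(major, minor, patch=0):
    """Pack a version the way Vulkan's version macros do (variant assumed 0)."""
    return (major << 22) | (minor << 12) | patch


def decode_version(packed):
    """Unpack (major, minor, patch) from a packed version, assuming variant 0."""
    return (packed >> 22, (packed >> 12) & 0x3FF, packed & 0xFFF)


API_VERSION_1_2 = make_version(1, 2, 0)


def can_use_fp16(device_api_version, features):
    """Hypothetical gate: require core 1.2 AND the optional shaderFloat16 bit.

    `features` is a plain dict standing in for whatever the driver reports;
    a missing key is treated as "not supported".
    """
    return device_api_version >= API_VERSION_1_2 and bool(
        features.get("shaderFloat16", False)
    )


if __name__ == "__main__":
    device = make_version(1, 2, 131)
    print(decode_version(device))
    print(can_use_fp16(device, {"shaderFloat16": True}))
```

The design point is that both checks are needed: a 1.2 driver on older hardware can legitimately report the feature as absent, which is exactly how the release avoids mandating new hardware.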

Of Semaphores and Shading Languages

As I mentioned earlier, Vulkan 1.2 is largely a quality-of-life release for programmers. Low-level graphics programming is hard, and best practices have continued to evolve over the last four years on how to better use Vulkan and similar APIs without being a John Carmack-caliber programmer.

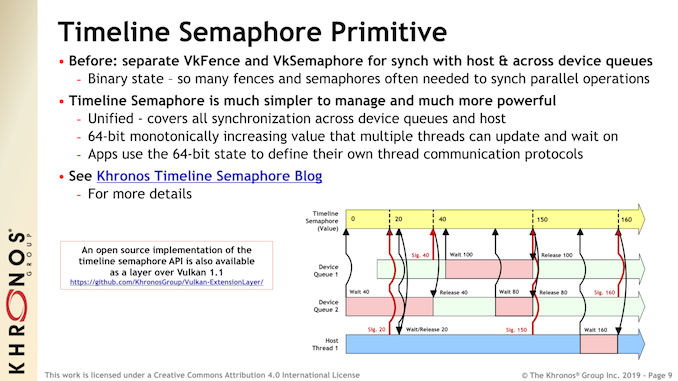

What’s the biggest feature addition for Vulkan 1.2 then? Timeline Semaphores.

In truth, I’ve rewritten this section three times over trying to explain at a high level what semaphores are, and why they’re so important to Vulkan. But semaphores are a distinctly computer science topic, and thus are a distinctly programmer (as opposed to user) topic when talking about Vulkan 1.2.

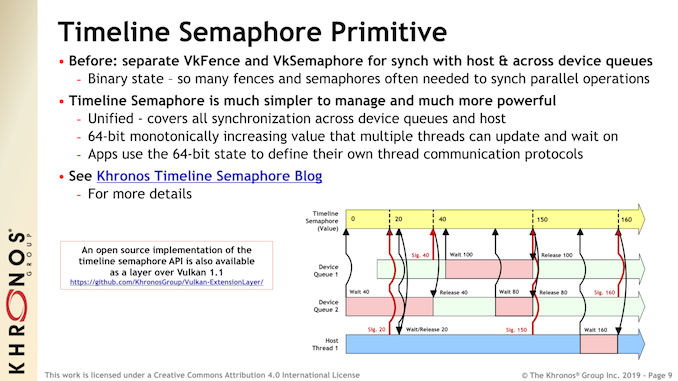

Nonetheless, timeline semaphores are a major development for the API. In a nutshell, semaphores are a way to control access to shared resources and synchronize work across devices and queues; a piece of data that indicates when it’s safe to operate on the resources it guards. Vulkan has supported semaphores since its release (VkSemaphore), but as Khronos readily admits, Vulkan’s previous semaphore mechanism kind of sucked. Binary semaphores aren’t very flexible – and in some ways are closer to the good ol’ mutex – and while they certainly work, they can be inefficient.

The solution then is a more robust semaphore mechanism, and that is the timeline semaphore. I won’t attempt to outdo Khronos’s own blog post on the matter, but the advancement here is offering a much larger value for semaphores – 64 bits instead of 1 bit – and then making these new semaphores visible from hosts and devices alike. The end result is that the amount of work programmers have to do to synchronize parallel operations should go down, and similarly the amount of execution time wasted on multiple levels of simple semaphores will be reduced.
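For readers who want something more concrete, below is a toy, host-side model of the idea, written in Python purely for illustration. It is not the Vulkan API (there, a timeline semaphore is requested through `VkSemaphoreTypeCreateInfo` with `VK_SEMAPHORE_TYPE_TIMELINE`, and the host waits and signals via `vkWaitSemaphores` and `vkSignalSemaphore`). The class and function names here are hypothetical. The sketch only tries to capture the semantics described above: a single 64-bit counter that can only move forward, on which any number of waiters can block until it reaches the value they care about.

```python
import threading


class TimelineSemaphore:
    """Toy model: a monotonically increasing 64-bit counter with blocking waits."""

    MAX_VALUE = 2**64 - 1

    def __init__(self, initial_value=0):
        self._value = initial_value
        self._cond = threading.Condition()

    @property
    def value(self):
        with self._cond:
            return self._value

    def signal(self, value):
        """Advance the counter; the new value must be strictly greater."""
        with self._cond:
            if not (self._value < value <= self.MAX_VALUE):
                raise ValueError("timeline values must strictly increase")
            self._value = value
            self._cond.notify_all()

    def wait(self, value, timeout=None):
        """Block until the counter reaches `value`; returns False on timeout."""
        with self._cond:
            return self._cond.wait_for(lambda: self._value >= value, timeout)


def run_pipeline():
    """Order three stages with ONE semaphore instead of one per dependency."""
    timeline = TimelineSemaphore()
    log = []

    def stage(name, wait_value, signal_value):
        timeline.wait(wait_value)
        log.append(name)
        timeline.signal(signal_value)

    # Threads are started in the "wrong" order on purpose.
    threads = [
        threading.Thread(target=stage, args=("present", 2, 3)),
        threading.Thread(target=stage, args=("render", 1, 2)),
        threading.Thread(target=stage, args=("upload", 0, 1)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log, timeline.value


if __name__ == "__main__":
    print(run_pipeline())
```

With binary semaphores, the same three-stage chain would need a separate semaphore for each hand-off; here, each stage simply names the point on the timeline it depends on and the point it produces.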

Again, the significance of this isn’t in new features, but rather in efficiency for the hardware and the programmer alike. One of the central goals of Vulkan is to enable multithreaded work submission – something that could never be properly solved in OpenGL – so improved semaphores are one more means of making that task easier. It’s not a feature that will ever be on a game box or in an interview, but if you happen to work around a graphics programmer, perhaps you’ll hear a bit less cursing when it comes to multithreaded programming.

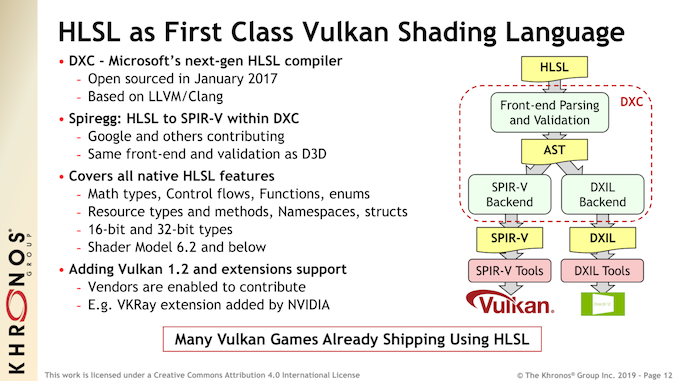

Moving on, the other big focus area for Vulkan 1.2 is portability, both coming into and going out of Vulkan. The API’s development body has been working on the matter of expanded shader language support for a few years now, and with Vulkan 1.2 we’re finally seeing the fruits of their labor with High-Level Shader Language (HLSL) support.

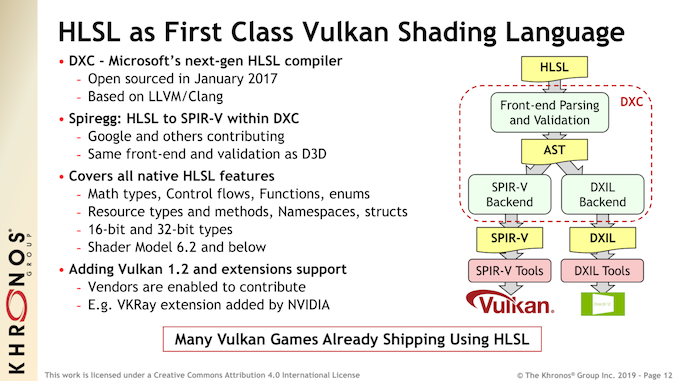

HLSL, as a refresher, is Microsoft’s shader language, which is used for DirectX. Like so many things Khronos versus Microsoft, it sits opposite Khronos’s own open shader language, GLSL. For obvious reasons, Khronos favors GLSL since they have control over it, but the group is also a pragmatic one: most of the PC space (and even a good chunk of the console space) is ruled by HLSL, and while GLSL isn’t going anywhere, it’s in everyone’s best interests to maximize compatibility with HLSL as well.

The net result is that for Vulkan 1.2, Khronos has achieved full HLSL support, making it a “first class” shading language within Vulkan, right up there with GLSL. Thanks in large part to Microsoft open-sourcing their own HLSL compiler (DXC) a few years back, Vulkan 1.2 can support HLSL shader model 6.2 and below, essentially covering all modern hardware outside of ray tracing features. Under the hood, this is all being powered by Vulkan’s native intermediate representation format, SPIR-V, with HLSL being compiled down to SPIR-V code for further use.

The significance of adding HLSL support is two-fold. The first is that it allows for easier porting and cross-platform development of games between Microsoft platforms – DirectX 12 and the Xbox console family – and everything else Vulkan supports. So whether this means porting a DX12 game to Vulkan or writing your shaders once in HLSL and being able to hit Vulkan PCs and the Xbox all in one go, Vulkan can now handle this situation without having to rewrite (or even heavily re-optimize) a bunch of shaders. The second is that even if portability isn’t the goal, a developer who just likes HLSL for their own reasons can now use it as a native, full-featured shader language within Vulkan.

Maximum Portability

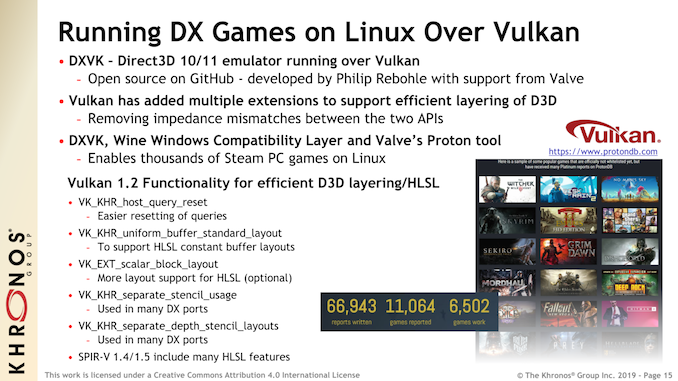

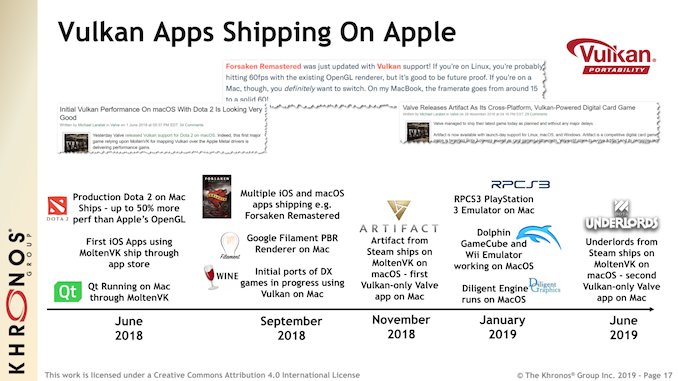

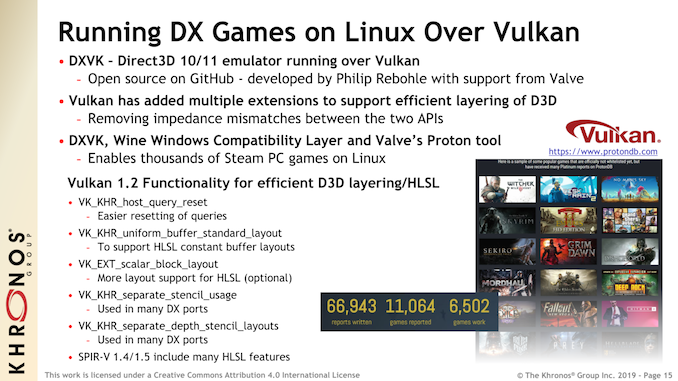

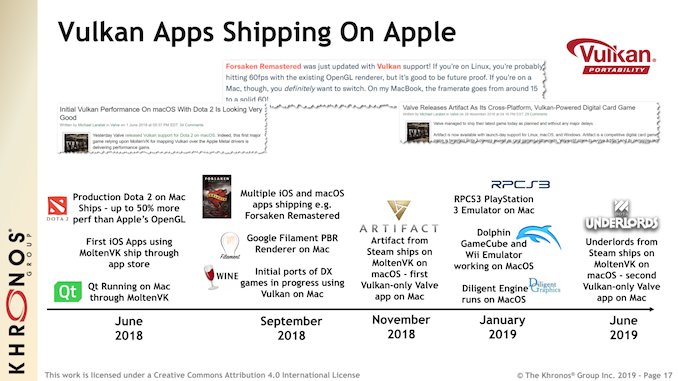

In fact, portability as a whole remains one of the big, driving goals for Khronos and the Vulkan board. While the dream of a truly universal API has taken an unfortunate hit, thanks in large part to Apple going entirely proprietary with Metal, Vulkan’s big backers have opted to put their energy into various portability efforts to bridge these gaps, at least where it makes sense. The net result is projects such as DXVK, a translation layer that implements Direct3D on top of Vulkan and is the key enabler of Valve’s Proton compatibility tool. Or to use the Apple example, the MoltenVK runtime library, which allows Vulkan to be used on top of Metal. In both cases Vulkan is being used to provide portability, either as a common target to run proprietary code, or as a common API to run common code on a proprietary platform.

Finally, in a quick note on Vulkan progression in general, Khronos is also using the Vulkan 1.2 launch to make note of where Vulkan use stands with professional application developers. It’s a relationship that can be tenuous at times. Professional applications were one of the first uses for OpenGL, and while gaming gets more attention, they are arguably still among the more important uses for the API. For that reason, the developers behind these applications were always going to be slow to make the transition away from tried & true OpenGL to Vulkan, but that time has finally come.

Driving this are a few different factors. The biggest one, perhaps, is Vulkan adding support for more legacy OpenGL features such as hardware line acceleration. That may sound trivial in an age where GPUs are pushing billions of pixels, but highly refined CAD programs and the like are built on top of exactly these features. And of course, this doesn’t include actual next-generation Vulkan features such as multi-threading, compute, and future hardware features. OpenGL itself is at a dead end – it’s unlikely to see any further feature development – so as new hardware features like ray tracing become available, Vulkan will be the path forward for legacy OpenGL users.

Vulkan 1.2: Shipping Today, Conformance Today, Drivers Today

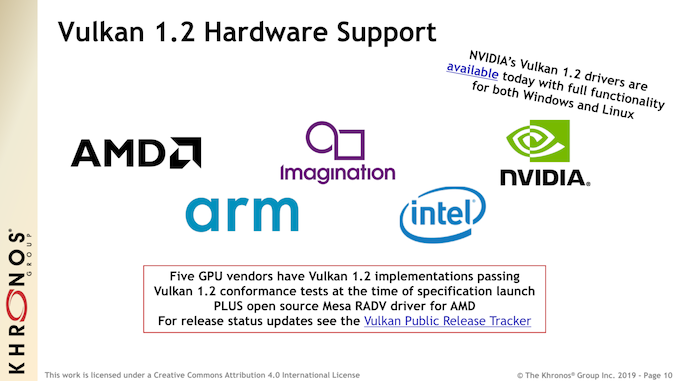

Wrapping things up, as with previous Vulkan releases, Khronos has played things very conservatively with respect to their development process and when the new specification is being launched. Rather than announcing the new specification and letting hardware vendors catch up, Khronos has worked in tandem with the hardware developers to try to launch Vulkan 1.2 in as usable a state as possible.

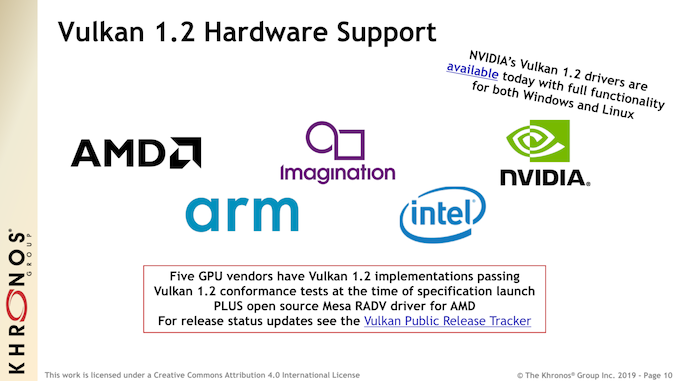

To that end, the Vulkan 1.2 conformance tests are already done, and five vendors – the three PC vendors plus Imagination and Arm – already have 1.2 implementations that pass the conformance tests. In fact, NVIDIA will be doing one better than that, and will have Vulkan 1.2 drivers ready today as a developer beta. So while it will still take some time for Vulkan 1.2 to start showing up in commercial (or at least production-ready) software, the consortium and its members are hitting the ground running.

Source: AnandTech – Vulkan 1.2 Specification Released: Refining For Efficiency & Development Simplicity