A large shop in Japan has barren shelves and is running low on gaming PCs as hardware around the world gets more expensive

The post AI-Fueled PC Gaming Crisis Has One Store Begging For People’s Old Rigs appeared first on Kotaku.

Lumus, the company that developed the waveguide optic used in Meta’s Ray-Ban Display smart glasses, says it has achieved a 70° field-of-view in a new design revealed this week at CES 2026. This conveniently matches the 70° field-of-view of Meta’s ‘Orion’ prototype, which Meta achieved only by using novel materials.

Back in 2024, Meta revealed its first AR glasses prototype, codenamed Orion. One of the prototype’s big innovations was its ability to squeeze a 70° field-of-view into such a small form-factor. This was made possible with the use of unique waveguide optics made with silicon carbide, a novel material that enabled the wider field-of-view thanks to its greater refractive index.

In 2025, Meta talked about the challenges of manufacturing silicon carbide waveguides affordably and at scale. While the company said progress was being made, it conceded that the work was ongoing.

“We’ve successfully shown that silicon carbide can flex across electronics and photonics. It’s a material that could have future applications in quantum computing. And we’re seeing signs that it’s possible to significantly reduce the cost. There’s a lot of work left to be done, but the potential upside here is huge,” the company said at the time.

But now Lumus, the company that developed the waveguides in Meta’s Ray-Ban Display glasses, says it has achieved a 70° field-of-view in its glass waveguides. The company claims it’s the “world’s first geometric waveguide to surpass a 70° FOV.”

The company announced that it is showing the new ZOE waveguide this week at CES 2026. Renders provided by Lumus show its latest prototype fitted with the ZOE optics (though it’s worth noting that Lumus’ prototypes typically do not include on-board battery, compute, or tracking hardware, which would add bulk to any real product based on ZOE).

My gut tells me it probably isn’t a coincidence that Lumus has been aiming for a 70° field-of-view, which just happens to match what Meta achieved with its Orion prototype. Most likely, the company was tasked (implicitly or maybe even directly) with doing exactly that—proving that its waveguides could reach the 70° benchmark without using silicon carbide.

Beyond simply achieving a 70° field-of-view as a proof-of-concept, Lumus says the ZOE optic is made with the same process as its other glass waveguides. That’s a big deal, because the company has already proven that such waveguides can be manufactured at scale, thanks to the use of its waveguides in Ray-Ban Display, Meta’s first smart glasses with a display.

That means Lumus’ ZOE waveguide is most definitely on the shortlist for what Meta could use in its first pair of wide field-of-view AR glasses, which the company said it hopes to bring to market before 2030.

Granted, field-of-view isn’t everything. When it comes to optics, everything is a tradeoff. Increased field-of-view can impact brightness, pixels per degree (PPD), and various visual artifacts. Without being able to see the new ZOE optic for myself, it’s hard to say whether Lumus has something truly new here or has simply boosted field-of-view at the expense of other qualities.

I expect I’ll have a chance to see the ZOE optic later this year at AWE 2026 where I usually meet with Lumus to see their latest developments. In the meantime, I’ve also reached out to the company to learn more about how it reached the 70° field-of-view and what tradeoffs it did or didn’t have to make to get there.

The post Meta Waveguide Provider Claims “world’s first” 70° FoV Waveguide appeared first on Road to VR.

The generative-AI tool Grok has been found to be producing images of undressed minors

The post Elon Musk’s Bot Lies About Locking Deepfake Nudity Factory Behind A Paywall appeared first on Kotaku.

People online spent a lot of time arguing about representation in the first and second games, but Warhorse’s co-founder isn’t too concerned

The post *Kingdom Come Deliverance 2* Boss Says Only ‘Terminally Online Culture Warriors’ Care About Controversies appeared first on Kotaku.

Also: a mystery game is set to appear at the first Xbox showcase of the year

The post Would You Pay Over $1,000 For A Steam Machine? appeared first on Kotaku.

Wyll was rewritten in the development phase, but that’s not the only reason he has less involvement than other companions

The post Larian Explains Why *Baldur’s Gate 3’s* Most Underserved Companion Felt Shortchanged appeared first on Kotaku.

This persistent rumor won’t go away, despite mountains of evidence proving it to be false

The post The Conspiracy That Todd Howard Hates *Fallout: New Vegas* Is Absurd appeared first on Kotaku.

The RPG got some flak for characters like Gale and Halsin coming on too strong

The post After *Baldur’s Gate 3*, Larian Wants Companions To Stop Trying To Immediately Have Sex With You appeared first on Kotaku.

You’re saving a nice $30 with this deal, plus enjoying free delivery with your purchase.

The post Samsung Galaxy Buds 3 FE (2025) Drops to All-Time Low With Its First Cut of the Year While AirPods Go Back to Near Full Price appeared first on Kotaku.

What entices someone to visit a virtual museum rather than a physical one? ArtQuest VR might have an answer.

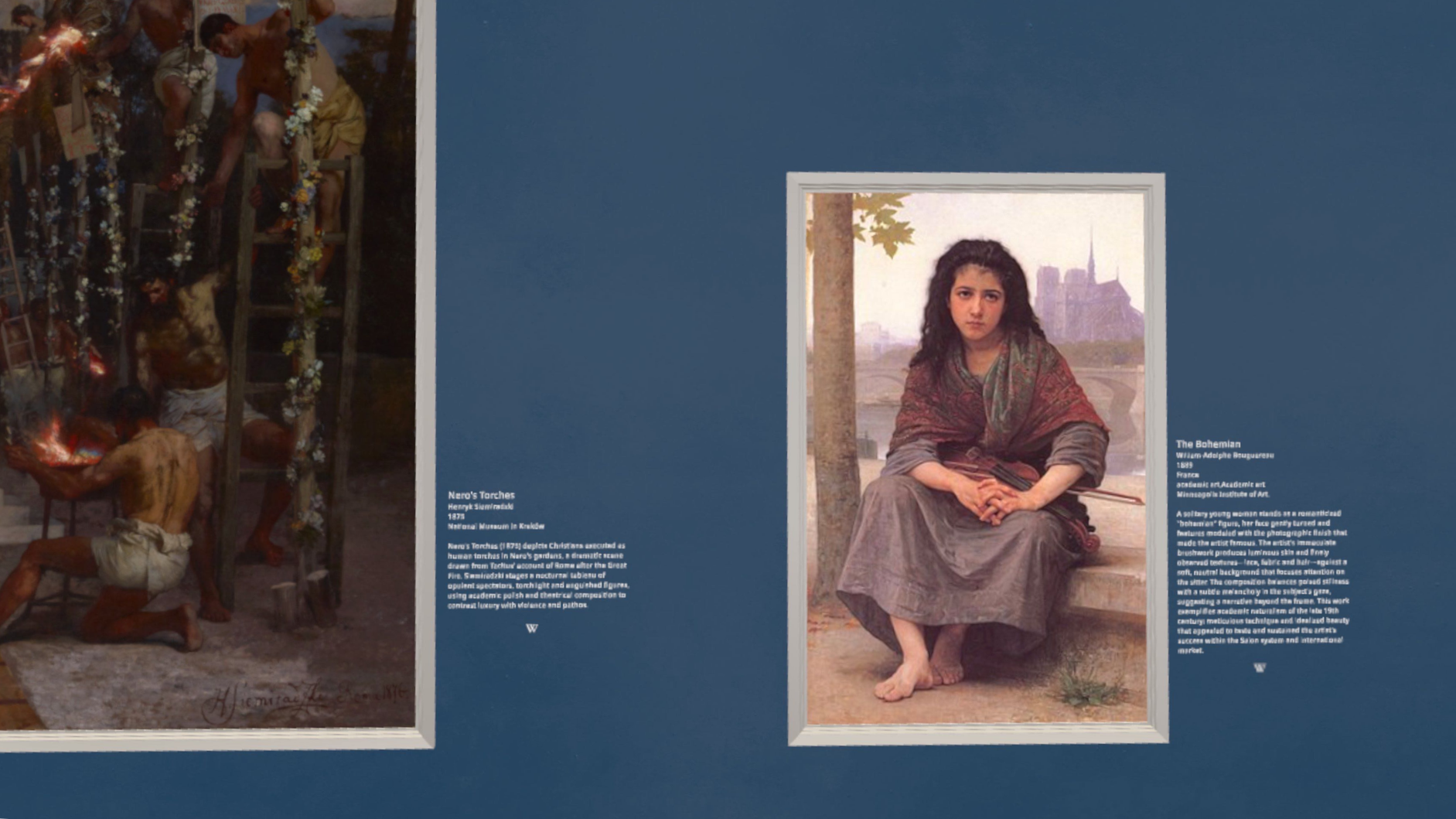

ArtQuest VR is a museum app allowing users to visit halls of paintings presented in true scale. Pulling from collections of famous museums around the world, visitors can enjoy exhibits arranged by artist, movement, or preset collection.

Opening ArtQuest VR directs you to your first gallery and presents a menu for exhibit navigation. The museum has options for choosing what art you want to see, gallery customization, and movement.

You can change the color and materials of the main wall, floor, and frames of the art you’re looking at. Adjustments can be made to frame thickness with a drop-down menu for framing styles. There is also an option for a text-to-speech voice to narrate each painting’s description and information with five different voices offered.

Customizing the gallery

Moving around can be done either smoothly by pushing the left joystick or with “blink” teleportation using the right joystick. The only turning option currently is snap turning. You can also use the menu to recalibrate your height so each painting is positioned at eye level.

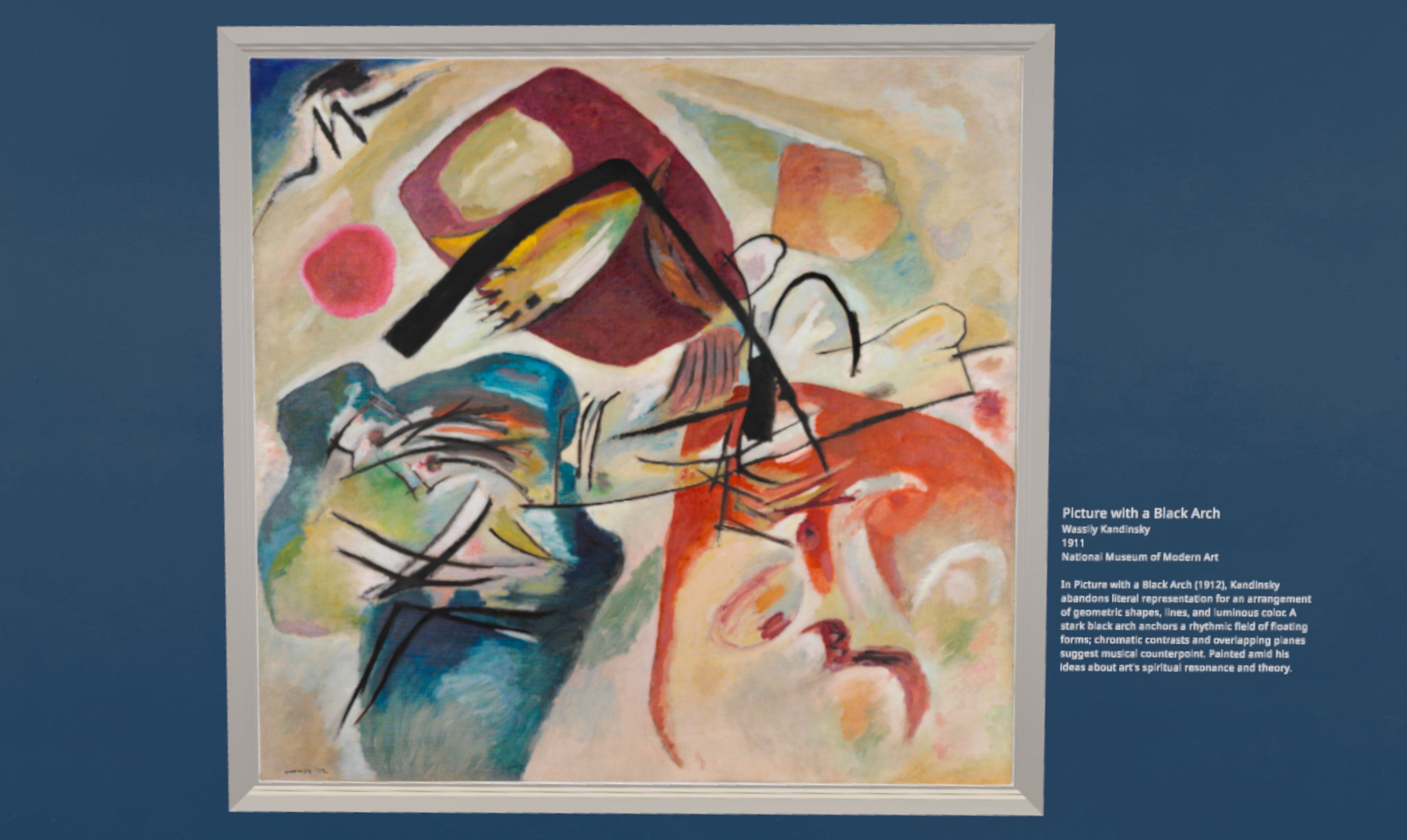

I examined a painting by Wassily Kandinsky titled “Picture With A Black Arch”.

The height offset feature in ArtQuest allows visitors to elevate their stance, as if borrowing a ladder to view paintings from a higher vantage. I floated upwards along the canvas and examined the painting. “Picture With A Black Arch” is awash with quick brushstrokes and geometric shapes. What made the artist paint this? What did the accompanying description of “musical counterpoint” mean here?

I pantomimed painting in the air along with the artist himself, tracing my hand over the dark outlines first. I’m a painter myself, so I recognized that thick brushstrokes meant a pause or applied pressure on the canvas. Thinner strokes meant a more delicate hand. Short, harsh lines meant faster application, especially several in a group at once. These particular brushstrokes all lean left, indicating Kandinsky painted with his right hand. I traced the marks in the air while listening to my favorite orchestral music.

What I found were hand movements that seemed to dance in the air with purposeful direction. It felt just like someone directing an orchestra while painting on a canvas. Checking a Google cultural site later that listed more information about the artwork, I remain pretty convinced that’s what Kandinsky was doing.

Virtual museums can be hard to build. You immediately discover the architecture surrounding the art relates to the pieces within. These digital spaces benefit from thoughtful immersive design. That means ambient sounds and building for how someone will walk around the space you’ve designed. How about a lobby to pause and reflect on what’s been seen? Neither ambient audio nor a lobby is present in ArtQuest VR, and I’d love to see these added.

The advantage of ArtQuest VR, though a bit lonely without other visitors and plain in presentation, is that I can go and see a near-entire collection of Van Gogh or Matisse, and I don’t have to download gigabytes of information to do it nor compete with anyone else for the perfect spot. The app has the feel of a spatial website and a functioning museum with an exclusive collection of work.

Accessing the collection menu

ArtQuest VR’s architecture is simple with a slight neoclassical style and descriptions that appear sourced from Wikipedia. The neoclassical architecture matches Wikipedia’s site design, but it still feels like something is missing without the ambient sound. You simply pop into the gallery once the app is open. At least one art collection featured missing textures. As I browsed the contemporary art wing, a recent Banksy piece returned an ugly floating pink square to indicate the sourced artwork was no longer there. A picture featuring a mural by Shepard Fairey rotated itself in the wrong direction after sitting right-side-up for a few seconds.

I noted some additional bugs when attempting to access my Quest menu, and I sometimes teleported too far into the wall when moving between galleries. The most notable issue, perhaps, is that paintings don’t appear at high resolution until your face is practically centimeters from the artwork. Also, duplicate paintings sometimes appear in a gallery with no explanation why.

It’s a widely held myth that art is about perfection and not the journey it takes to get there. If this were true, museums wouldn’t show the early work of artists they feature. Viewing famous paintings chronologically in an app such as ArtQuest VR can show how art is just as much about failure as it is about success. Each artist has their own story in how they reach that success, and it’s up to each visitor to reflect on that and how they can adapt this lesson to their lives.

How can ArtQuest VR keep building on this? Every museum visitor is looking for something when they visit. Can VR bring them the very human effort of outdoing oneself through practice, improvement and sudden inspiration? That’s not always present in the room with us in a physical museum. Seeing things from new angles is precisely VR’s power, and there’s an opportunity for an app like ArtQuest to help see more context around a specific piece of art each time someone walks through the front door.

Co-op and more return in the next RPG from the maker of Baldur’s Gate 3

The post 15 Things We Just Learned About *Divinity* appeared first on Kotaku.

Having a charger that you can take everywhere with you is super useful.

The post Anker’s 24,000mAh Power Bank Hits Its First Record Low of the New Year After Skipping Holiday Sales appeared first on Kotaku.

Larian says it ‘would have loved to’ bring the RPG to Nintendo’s console

The post *Baldur’s Gate 3* Director Addresses Missing Switch 2 Port: ‘It Wasn’t Our Decision’ appeared first on Kotaku.

If you’re looking to do some upgrades, here’s an easy path to doing so.

The post AMD Puts Its Ryzen 7 7700X on Clearance at Nearly 40% Off, the Lowest Price for 8-Core and 16-Thread Unlocked Desktop CPU appeared first on Kotaku.

Enjoy immersive, smooth gameplay for all your favorite titles.

The post Samsung Goes All-In on Odyssey Gaming Monitors, 32″ G50D Series Hits an All-Time Low at 42% Off appeared first on Kotaku.

The My Mario toys are being marketed toward parents with children too young to pick up a Switch

The post Nintendo Markets *Mario* Toys With Mom Whose Thumb Is A Medical Miracle Or AI Slop appeared first on Kotaku.

Meta released an open source language learning app for Quest 3 that combines mixed reality passthrough and AI-powered object recognition.

Called Spatial Lingo: Language Practice, the app not only aims to help users practice their English or Spanish using objects in their own room, but also give developers a framework to make their own apps.

Meta calls Spatial Lingo a “cutting-edge showcase app” meant to transform your environment into an interactive classroom by overlaying translated words onto real-world objects, using AI and Quest’s Passthrough Camera API.

The app’s AI not only focuses on object detection for vocab building, but also features a 3D companion to guide you, which Meta says will offer encouragement and feedback as you practice speaking.

It also listens to your voice, evaluates your responses, and helps you master pronunciation in real time, Meta says.

Spatial Lingo is also open source, letting developers use its code, contribute, or build their own new Unity-based experiences.

Developers looking to use Spatial Lingo can find it over on GitHub. You can also find it for free on the Horizon Store for Quest 3 and 3S.

I’m usually pretty wary of most language learning apps since many of them look to maximize engagement, or otherwise appeal to what a language learner thinks they need rather than what the answer actually is: hours and hours of dedicated multimedia consumption combined with speaking practice with native speakers. I say this as a native English speaker who speaks both German and Italian.

Spatial Lingo isn’t promising fluency though; it’s primarily a demo that comes with some pretty cool building blocks—secondary to its use as a nifty ingress point into the basics of common nouns in English or Spanish. That, and it actually might also be pretty useful for beginners who not only want to see the word they’re trying to memorize, but get some feedback on how to pronounce it. It’s a neat little thing that maybe someone could take and make into an even neater big thing.

That said, the highest quality language learning you could hope for should invariably involve a native speaker (or AI indistinguishable from a native speaker) who can not only point to a cat and say “el gato”, but also use the word in full sentences so you can get a feel for the syntax and flow of a language. These are things that are difficult to teach directly and are typically absorbed naturally during the language acquisition process.

Now that’s the sort of XR language learning app that would make me raise an eyebrow.

The post Meta’s Language Learning App for Quest Combines Mixed Reality and AI appeared first on Road to VR.

Meta is rolling out the Conversation Focus feature to the Early Access program of its smart glasses in the US & Canada.

Announced at Connect 2025 back in September, Conversation Focus is an accessibility feature that amplifies the voice of the person in front of you.

It’s the result of more than six years of research into “perceptual superpowers” at Meta. Unlike the hearing aid feature of devices like Apple’s AirPods Pro 2, Meta’s Conversation Focus is designed to be highly directional, amplifying only the voice directly in front of you.

Conversation Focus on Meta smart glasses.

Conversation Focus is available in Early Access on Ray-Ban Meta and Oakley Meta HSTN glasses in the US and Canada.

To join the Early Access program, visit this URL in the US or Canada.

Once your glasses have the feature, you can activate it at any time using “Hey Meta, start conversation focus,” or (perhaps more practically) you can assign it as the long-press action for the touchpad on the side of the glasses.

Save 20% on this ‘piece of junk’ Star Wars Millennium Falcon set from LEGO for a limited time.

The post LEGO Restocks the Star Wars Millennium Falcon at a Record Low for Another Clearance Run appeared first on Kotaku.

The Divinity maker elaborated on controversial comments about gen AI from last year

The post *Baldur’s Gate 3* Maker Says Next RPG Could Have Gen AI Assets As Long As They’re Created With ‘Data We Own’ appeared first on Kotaku.