The intel-speed-select tool, which lives within the Linux kernel source tree and offers some control over Intel Speed Select Technology (SST) and the management of clock frequencies / performance behavior, will finally allow limited non-root usage…

Author Archives: Xordac Prime

Zorin OS 18 Crosses 2 Million Downloads, Cementing Its Appeal to New Linux Users

Zorin OS has reached an important milestone. The team behind the popular Linux distribution has announced that Zorin OS 18 has surpassed two million downloads, underscoring the growing interest in Linux as a practical alternative to mainstream operating systems.

ChaosBSD Is A New BSD For “Broken Drivers, Half-Working Hardware, Vendor Trash” Test Bed

A new BSD on the block is ChaosBSD that intends to serve as a testing distribution for unfinished and broken drivers not suitable for upstreaming to FreeBSD proper…

Linux 6.19-rc6 Bringing Sound Fixes For ROG Xbox Ally X & Various Laptops

With the Linux 6.19-rc6 kernel release due out later today there will be a number of sound fixes/workarounds to note from the ASUS ROG Xbox Ally X gaming handheld to several newer laptops seeing fixes for their audio support…

Retailers Rush to Implement AI-Assisted Shopping and Orders

This week Google “unveiled a set of tools for retailers that helps them roll out AI agents,” reports the Wall Street Journal:

The new retail AI agents, which help shoppers find their desired items, provide customer support and let people order food at restaurants, are part of what Alphabet-owned Google calls Gemini Enterprise for Customer Experience. Major retailers, including home improvement giant Lowe’s, the grocer Kroger and pizza chain Papa Johns say they are already using Google’s tools to help prepare for the incoming wave of AI-assisted shopping and ordering…

Kicking off the race among tech giants to get ahead of this shift, OpenAI released its Instant Checkout feature last fall, which lets users buy stuff directly through its chatbot ChatGPT. In January, Microsoft announced a similar checkout feature for its Copilot chatbot. Soon after OpenAI’s release last year, Walmart said it would partner with OpenAI to let shoppers buy its products within ChatGPT.

But that’s just the beginning, reports the New York Times, with hundreds of start-ups also vying for the attention of retailers:

There are A.I. start-ups that offer in-store cameras that can detect a customer’s age or gender, robots that manage shelves on their own and headsets that give store workers access to product information in real time… The scramble to exploit artificial intelligence is happening across the retail spectrum, from the highest echelons of luxury goods to the most pragmatic of convenience stores.

7-Eleven said it was using conversational A.I. to hire staff at its convenience stores through an agent named Rita (Recruiting Individuals Through Automation). Executives said that they no longer had to worry about whether applicants would show up to interviews and that the system had reduced hiring time, which had taken two weeks, to less than three days.

The article notes that at the National Retail Federation conference, other companies showing their AI advancements included Applebee’s, IHOP, the Vitamin Shoppe, Urban Outfitters, Rag & Bone, Kendra Scott, Michael Kors and Philip Morris.

Read more of this story at Slashdot.

GNOME 49.3 Desktop Released with More Improvements and Bug Fixes

The GNOME Project today released GNOME 49.3 as the third point release of the latest GNOME 49 “Brescia” desktop environment series, with various bug fixes and improvements.

53% of Crypto Tokens Launched Since 2021 Have Failed, Most in 2025

“More than half of all cryptocurrencies ever launched are now defunct,” reports CoinDesk, citing a new analysis by cryptocurrency data aggregator CoinGecko.

And most of those failures occurred in 2025:

The study looked at token listings on GeckoTerminal between mid-2021 and the end of 2025. Of the nearly 20.2 million tokens that entered the market during that period, 53.2% are no longer actively traded. A staggering 11.6 million of those failures happened in 2025 alone — accounting for 86.3% of all token deaths over the past five years.

One key driver behind the surge in dead tokens was the rise of low-effort memecoins and experimental projects launched via crypto launchpads like pump.fun, CoinGecko analyst Shaun Paul Lee said. These platforms lowered the barrier to entry for token creation, leading to a wave of speculative assets with little or no development backing. Many of these tokens never made it past a handful of trades before disappearing.

Sequent Microsystems Multichemistry Watchdog HAT Adds UPS Support for Raspberry Pi

Sequent Microsystems has unveiled a multichemistry Watchdog HAT designed to provide battery-backed power, system monitoring, and automatic recovery for Raspberry Pi boards. The HAT targets mission-critical and unattended deployments, combining a hardware watchdog timer with an integrated UPS and battery charger. The Watchdog HAT supports a wide range of rechargeable battery chemistries, including Lithium-Ion, Lithium-Polymer, […]

How Much Do AI Models Resemble a Brain?

At the AI safety site Foom, science journalist Mordechai Rorvig explores a paper presented at November’s Empirical Methods in Natural Language Processing conference:

[R]esearchers at the Swiss Federal Institute of Technology (EPFL), the Massachusetts Institute of Technology (MIT), and Georgia Tech revisited earlier findings that showed that language models, the engines of commercial AI chatbots, show strong signal correlations with the human language network, the region of the brain responsible for processing language… The results lend clarity to the surprising picture that has been emerging from the last decade of neuroscience research: That AI programs can show strong resemblances to large-scale brain regions — performing similar functions, and doing so using highly similar signal patterns.

Such resemblances have been exploited by neuroscientists to make much better models of cortical regions. Perhaps more importantly, the links between AI and cortex provide an interpretation of commercial AI technology as being profoundly brain-like, validating both its capabilities as well as the risks it might pose for society as the first synthetic braintech. “It is something we, as a community, need to think about a lot more,” said Badr AlKhamissi, doctoral student in computer science at EPFL and first author of the preprint, in an interview with Foom. “These models are getting better and better every day. And their similarity to the brain [or brain regions] is also getting better — probably. We’re not 100% sure about it….”

There are many known limitations with seeing AI programs as models of brain regions, even those that have high signal correlations. For example, such models lack any direct implementations of biochemical signalling, which is known to be important for the functioning of nervous systems.

However, if such comparisons are valid, then they would suggest, somewhat dramatically, that we are increasingly surrounded by a synthetic braintech. A technology not just as capable as the human brain, in some ways, but actually made up of similar components.

Thanks to Slashdot reader Gazelle Bay for sharing the article.

How to Install and Use Flutter on Linux

In this article, you will learn how to set up and configure Flutter with Android Studio on Linux and quickly run a Flutter app to confirm the setup.

The Metaverse At All Costs: How Mark Zuckerberg Fumbled Oculus VR

In 2014, Mark Zuckerberg bought Oculus VR for a couple billion dollars on the premise that virtual reality would become the foundation of personal computing.

In 2026, virtual reality is really starting to dig its roots into those foundations with OpenXR and Flatpaks. Operating systems based around VR headsets with eye tracking as a key feature are now receiving updates from Google, Valve and Apple.

New walled gardens are building up fast even as old ones fall down. Valve is coming for gaming, Google is relying on Android APKs, and Apple is building out a new kind of live sports and TV experience, all of it with VR as the display for the entire landscape.

Earlier this week many hundreds of people lost their jobs as Meta announced the most dramatic course-correction to its strategy yet. Even though VR’s future has never been brighter, the weight of Meta’s shift might lead some to believe “Oculus VR” here was a “grand misadventure” and virtual reality is dead, again.

That couldn’t be further from reality. If you care about the future you should have been reading UploadVR yesterday.

As I look across the last 10 years and try to piece together a picture of how Meta ended up here, I find one key technology conspicuously absent from almost all their headset and glasses designs, save for the failed Quest Pro.

Here’s a look at why the absence of eye tracking limits VR’s scale and how Zuckerberg’s ambition for a new social network clouded the Oculus vision.

Eye Tracking In 2017

In 2017 I attended a pair of eye tracking demos at GDC, one of them inside Valve’s booth. From these demos I started to realize “just how empowering eye tracking will be for VR software designers.”

“The additional information [eye tracking] provides will allow creators to make games that are fundamentally different from the current generation,” I wrote. “It was like I had been suddenly handed a superpower and I naturally started using it as such — because it was fun. It is up to designers to figure out how much skill will be involved in achieving a particular task when the game knows exactly what you’re interested in at any given moment.”

Architecting an entire VR platform over a decade without a solid plan for default implementation of eye tracking is a study in long-term vision meeting short-term execution.

“Apple’s eye tracking is really nice,” Zuckerberg noted on Instagram in 2024 after saying he tried Apple’s headset. “We actually had those sensors back in Quest Pro. We took them out for Quest 3, and we’re gonna bring them back in the future.”

You could see this tiny comment as one of the first public acknowledgments in which he is starting to realize something is deeply wrong with his current strategy.

When Facebook stopped selling Oculus Go it acknowledged the company wouldn’t ever make another 3dof headset. The same thing should have happened after the Quest Pro launched in 2022 with eye tracking. It didn’t. By the time Meta ships a second VR headset with eye tracking, roughly five years will have passed since the first. The company is probably all in on full-body codec avatars being their prize for drawing you into their vision of the metaverse now, after Apple stole their initial thunder with FaceTime and Personas powered by good eye tracking.

I believe we now have evidence that VR headsets that can’t see what you want by following the intent of your eyes aren’t serious contenders as platform plays. Valve, Google, and Apple are all centered on the technology in their latest headsets for slightly different reasons. When you pull back far, you can see that Steam Frame’s DK1 was the HTC Vive in 2016 and Valve Index was its DK2 from 2019.

Valve decided SteamOS in VR is ready for prime time in 2026 with Steam Frame’s consumer release, following Apple deciding 2024 was the time to launch Vision Pro. Both use eye tracking to do key things for users.

From Rift To Quest

For Zuckerberg’s organization, the ramping investments over the last decade would build the necessary technologies for a complete computing platform, starting with just a few billion to acquire the development team behind the Oculus Rift. Michael Abrash left Valve to found a modern Xerox PARC within Zuckerberg’s larger organization, drawn by the commitment to invest in costly long-term research and development.

Meta built those technologies in a fairly public way by showing work as it went, both in sharing research and selling products. Solid ideas like Oculus Medium during this early period were spun out and continued at places like Adobe.

Starting in 2020, Facebook tried forcing the linking of its accounts to the use of Quest headsets and, in early 2021, it tried advertising in virtual reality. VR users quickly rejected both efforts.

Facebook’s executives embarked on a rebranding effort to Meta alongside a new accounts system developed as a fresh start for Zuckerberg’s new computing platform in headsets and glasses. By the end of 2021, Facebook was Meta.

Quest 2 was selling well. There was a well-curated store, their hand tracking was quickly approaching state of the art, and there was no credible competition in the United States shipping a standalone VR headset. Any stink associated with Facebook was being put behind Meta with Zuckerberg’s bold new vision of the “Metaverse.”

And a high-end Quest Pro with eye tracking was still coming in late 2022.

A Grand Misadventure?

“Setting out to build the metaverse is not actually the best way to wind up with the metaverse,” warned technical guide John Carmack in 2021. “The metaverse is a honeypot trap for architecture astronauts… Mark Zuckerberg has decided now is the time to build the metaverse….my worry is we could spend years and thousands of people possibly and wind up with things that didn’t contribute all that much to the ways that people are actually using the devices and hardware today…we need to concentrate on actual products rather than technology, architecture, or initiatives.”

In 2022 Carmack left Meta as he “wearied of the fight” and, four years later, thousands have departed as leadership reshaped the company in the form of VR and AR technologies. Until the layoffs in 2026, Meta’s leadership and design failures didn’t reek of the failure Carmack specifically warned about. Now they do.

After laying off the vast majority of the game developers Meta hired and tasking the rest to “Horizon” initiatives, do we see Beat Saber and Population: One become a last ditch effort to keep Horizon Worlds alive? Meta’s latest move in December, as some of the first Steam Frame kits arrived with devs, was to delist Population: One from the Steam store, noting that it was a move to stop “unfair play” by cheaters using the openness of a PC to break the multiplayer experience.

The grand misadventure here was the entire Horizon Worlds effort, attempting to force a social network by brute force onto the wrong technology at the wrong time in the wrong way. 2026 represents a reset of Meta’s efforts, certainly, but the question is exactly how far back in this timeline Meta needs to go to figure out what went wrong, and which structural changes need to take place to fix it?

Gaming Studios Instead Of Eye Tracking

Meta acquired Beat Saber in November of 2019 and, over the next several years, doubled down multiple times by hiring dozens of developers skilled in the use of Oculus Touch controllers. Some of these decisions were set against unusual behavior patterns due to a generationally significant pandemic keeping people home near their headsets.

During the 2016-2018 period, NextVR streamed NBA games live to VR headsets, a startup called Spaces opened a walk-around Terminator VR attraction, and the first decent eye tracking was demonstrated in consumer VR hardware. In 2024, Apple released a headset that combined all of those technologies from 2017 in a single product.

At Meta, someone made decisions to ship headsets without eye tracking after shipping a single headset that tracks eyes. They have their reasons, but whatever they are may be the cause of Meta losing some of the lead in VR that was bought with Oculus in 2014. Whatever is going on with Meta’s decision-making process, leadership tried to rectify it by the end of 2025 with the hiring of a key executive from Apple.

Now Meta faces a world where it might increase production for its non-VR glasses products. Meanwhile, Apple, Google, Samsung, and Valve ship or plan to ship VR headsets with eye tracking.

From Real To Virtual And Virtual To Real

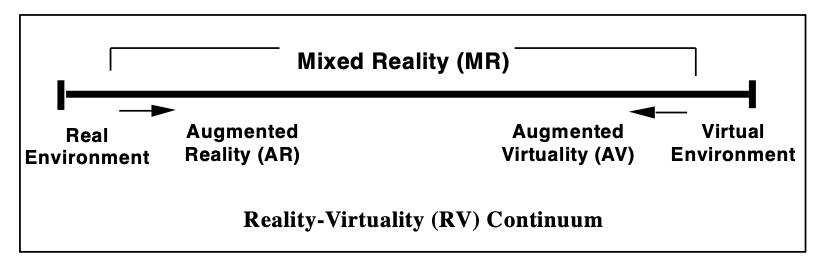

Imagine two types of eyewear at opposite ends of this particular continuum from Paul Milgram’s seminal 1994 paper. One at the right is a relatively heavy VR headset that is essentially all display. The other at left is a pair of ultra lightweight frames with no display. Today Meta ships Quest 3, 3S, and Ray-Ban glasses in each of these categories, and they all lack eye tracking.

Apple ships only the Vision Pro with eye tracking today and it is a $3,500 device not many people have tried. The headset does a little magic trick with this chart. It is rooted at the right edge of the chart, but software defaults to starting you at the left side. Turn the dial on the headset and the world can shift from your environment being fully “real” to fully “virtual” across the whole continuum.

Apple is surely readying something to secure the left side of the chart. When they launch, what features will they focus on and how might Apple and Meta eyewear differ?

Pointing Cameras In The Wrong Directions

If Vision Pro is a spatial computer I want Apple’s answer to the Meta Ray-Ban glasses to function more like a spatial mouse. No display and all input.

Apple could take the sensors for tracking hand movements and eye movements from Vision Pro and put that technology into slim frames with Bluetooth and battery. Thin clear glasses can gather the same eye and finger input as a big enclosed VR headset. It’s difficult, surely, but it’s more useful than putting in a display system for one eye. The differentiating feature would be a universal remote for everything that’s so impossibly advanced it could feel like magic almost everywhere.

- You should be able to operate an iPad or Apple TV, and maybe other Apple devices, the same way you do the menu of a Vision Pro. Just pinch and drag in the open air. Ex: While washing the dishes with your hands and watching a movie on your iPad, you look over and pause a movie without touching the tablet with your dirty hands.

- You should be able to run your finger along any flat surface as a virtual trackpad for any computer you’re looking at.

- You should be able to touch type on any surface.

At the end of 2024, Google told me touch typing on any surface would be a solved problem in a couple of years. In such a focused design, Apple could conceivably replace the mouse, trackpad and keyboard with eyewear at the opposite end of the spectrum from Vision Pro. I mean that literally because display-free means you only ever see the real environment through a pair of frames, and yet the glasses still track your eye movements the same as they do in Vision Pro.

In Apple Vision Pro, eye tracking is used to target what you’re looking at so that when you “mouse click” by pinching your fingers together the whole system responds to exactly what you want in that moment. It’s also used to drive the included Persona avatars and even the outward-facing display system showing recreated eyeballs to external viewers. That’s a lot of technology, weight and expense Apple introduced in Vision Pro to fully enclose a person in a focused virtual location represented as an Apple home environment, and then anywhere else along the mixed reality spectrum using a dial and software.

None of that seems like a mass market need unless you had an experience in 2017 that instantly made you feel like a superhero in a VR headset. Why do VR headsets need eye tracking? For the same reason a computer needs a mouse. It is how you tell the computer what you want in a graphical user interface, even if you still need something else to select what you’re looking at.

The camera Meta placed in multiple generations of glasses faces outward for photo capture, rather than inward at the eyes for intent detection. Meta moved fast, but it made the wrong decisions in the wrong order.

When I leave the house, I could grab a pair of glasses to listen to music, take calls through my phone, and control all my other computers more easily on the go. And when I get home, or I need to do real work, I enter my Apple Vision Pro (or future Meta headset or Steam Frame).

Apple doesn’t need to make camera glasses. It doesn’t need a social network. And it could kill the mouse and keyboard while fulfilling the exact words Michael Abrash at Meta said to me in 2022.

Meta is likely aiming to get back on track with an ultralight headset that does what Apple Vision Pro does with avatars, eye tracking, focus and movies. As Meta looks to the future and figures out which brands to leave in the past I have just one suggestion.

There is one brand in Meta’s arsenal with a very close association to both eyes and headsets that gamers love.

Just call the next headset Oculus.

It’s cleaner.

Synex Server: A New Debian Based Linux Distro With Native ZFS Installation Support

Synex is a Debian-based, minimalistic Linux distribution out of Argentina that has been around for some months and focuses on the needs of small and medium businesses. Making it a bit more intriguing for some now is that their new release, based on Debian 13, brings a server edition and adds native OpenZFS file-system support for new installations…

Origami Linux’s COSMIC Desktop on Fedora Atomic Almost Wins Me Over

Origami Linux pairs the COSMIC desktop with a Fedora Atomic base and a deliberately sparse default install, leaving most of the customization to you.

2026’s Breakthrough Technologies? MIT Technology Review Chooses Sodium-ion Batteries, Commercial Space Stations

As 2026 begins, MIT Technology Review publishes “educated guesses” on emerging technologies that will define the future, advances “we think will drive progress or incite the most change — for better or worse — in the years ahead.”

This year’s list includes next-gen nuclear, gene-editing drugs (as well as the “resurrection” of ancient genes from extinct creatures), and three AI-related developments: AI companions, AI coding tools, and “mechanistic interpretability” for revealing LLM decision-making.

But also on the list are sodium-ion batteries, “a cheaper, safer alternative to lithium.”

Backed by major players and public investment, they’re poised to power grids and affordable EVs worldwide. [Chinese battery giant CATL claims to have already started manufacturing sodium-ion batteries at scale, and BYD also plans a massive production facility for sodium-ion batteries.] The most significant impact of sodium-ion technology may be not on our roads but on our power grids. Storing clean energy generated by solar and wind has long been a challenge. Sodium-ion batteries, with their low cost, enhanced thermal stability, and long cycle life, are an attractive alternative. Peak Energy, a startup in the US, is already deploying grid-scale sodium-ion energy storage. Sodium-ion cells’ energy density is still lower than that of high-end lithium-ion ones, but it continues to improve each year — and it’s already sufficient for small passenger cars and logistics vehicles.

And another “breakthrough technology” on their list is commercial space stations:

Vast Space, from California, plans to launch its Haven-1 space station in May 2026 on a SpaceX Falcon 9 rocket. If all goes to plan, it will initially support crews of four people staying aboard the bus-size habitat for 10 days. Paying customers will be able to experience life in microgravity and conduct research such as growing plants and testing drugs. On its heels will be Axiom Space’s outpost, the Axiom Station, consisting of five modules (or rooms). It’s designed to look like a boutique hotel and is expected to launch in 2028. Voyager Space aims to launch its version, called Starlab, the same year, and Blue Origin’s Orbital Reef space station plans to follow in 2030.

Thanks to long-time Slashdot reader sandbagger for sharing the article.

Predator Spyware Turns Failed Attacks Into Intelligence For Future Exploits

In December 2024 the Google Threat Intelligence Group published research on the code of the commercial spyware “Predator”. But there’s now been new research by Jamf (the company behind a mobile device management solution) showing Predator is more dangerous and sophisticated than we realized, according to SecurityWeek.

Long-time Slashdot reader wiredmikey writes:

The new research reveals an error taxonomy that reports exactly why deployments fail, turning black boxes into diagnostic events for threat actors. Almost exclusively marketed to and used by national governments and intelligence agencies, the spyware also detects cybersecurity tools, suppresses forensics evidence, and has built-in geographic restrictions.

Subject: Oculus Strategy (LONG)

There’s a link on Reddit aging like fine wine. It carries the timestamp January 28, 2014 at 7:54:33 PM EST.

“So no way to confirm this, but my friend works in the same building as Oculus, and he ran into Mark Zuckerberg taking the elevator to Oculus’ floor,” Threewolfmtn posted. “Do you think he was just checking it out? Or is there something more devious going on?”

With whole teams shown the door from inside Meta’s VR and AR efforts in January 2026, you can put that time stamp in your mind relative to the one UploadVR published over half a decade ago. The important time stamp for the words we’re republishing from John Carmack to Oculus VR leaders is February 16, 2015.

Before you get to those words, in full below, here he is speaking directly to the public in 2021 before he “wearied of the fight” and exited near the end of 2022:

I reached out to Carmack earlier this week to invite fresh comment of any length. You can find it on UploadVR.com if he replies.

From: John Carmack

Date: February 16, 2015

Subject: Oculus Strategy (LONG)

In preparation for the executive retreat this week, I have tried to clarify some of my thoughts about the state and direction of Oculus. This is long, but I would appreciate it if everyone took the time to read it and consider the points for discussion. Are there people attending the meeting that aren’t on the ExecHQ list that I should forward this to?

Some of this reads as much more certain than I actually am; I recognize a lot of uncertainty in all the predictions, but I will defend them in more depth as needed.

Things are going OK. I am fairly happy with the current directions, and I think we are on a path that can succeed.

There are a number of things that I have been concerned about that seem to have worked out, but I remain a little wary of some of them metastasizing.

Oculus Box. Selling the world’s most expensive console would have both failed commercially and offended our PC base. Building it would have stolen resources from more important projects. Note that my objection is based on a high-end PC spec system. At some point in the future (or for some level of experiences), you start considering cheap, mobile based hardware, which is a different calculation.

Oculus OS. The argument goes something like “All important platforms have had their own OS. We want VR to be an important platform, therefore we need our own OS.” That is both confusing correlation with causation, and just wrong – Facebook is an important platform that doesn’t have its own OS. When you push hard enough, the question of “What, specifically, would we do with our own kernel that we can’t get from an existing platform?” turns out to be “Not much”. Supporting even a basic Linux distribution would be a huge albatross around our neck.

Indefinite innovator editions for Gear VR. We have been over this enough; I am happy with the resolution.

Major staff-up to “build the Metaverse”. Throw fifty new developers together and tell them to build a completely hand-wavey and abstract application. That was not going to go well. Oculus needs to learn how to deliver decent quality VR apps at a small scale before getting overly ambitious. I understand this choice wasn’t made willingly, but I am still happy with the outcome.

Write all new apps for CV1 in UE4. Would have been a recipe for failure this year, and would have unnecessarily divided efforts between mobile and PC. I recognize that my contention that we can build the current apps for both PC and mobile has not yet been demonstrated, and is in fact running quite a bit behind expectations.

Acceptance of non-interactive media. This is still grudging, as noted by the “interactive” bullet point in our official strategy presentation at the town hall, and Brendan’s derisive use of “viewmaster” when talking about Cardboard, but most now agree it has an important place. People like photos and video. You could go so far as to say it drives the consumer internet, and I think Oculus still underestimates this, which is why I am happy that Douglas Purdy’s VR Video team is outside the Oculus chain of command.

While it isn’t something I am directly involved in, I think the decision to push CV1 without controllers at a cheaper price point is a good one. Waiting for perfect is the wrong thing to do, and I am much less convinced of the necessity of novel controllers for VR’s success.

On to things with more room for improvement:

Platform under-delivery

I suspect that this was not given the focus it deserved because many people thought Gear VR wasn’t going to be “real”, so it may have felt like there was a whole year of cushion before CV1 was going to need a platform. Launching Gear VR without commerce sucked. Some steps have been taken here, but there are still hazards. I won’t argue passionately about platform strategy, because it really isn’t my field, but I have opinions based on general software development with some relevance.

We still have definitional problems with what exactly “platform” is, and who is responsible for what. I would like to see this made very clear. I am unsure about having the Apps team responsible for the client side interfaces. It may be pragmatic right now, but it doesn’t feel right.

I have heard Holtman explain how we couldn’t just use Facebook commerce infra because it wouldn’t allow us to do some things like region specific pricing that are important factors for Steam, but I remain unconvinced that it is sufficient reason to make our development more challenging. There is so much value in Facebook’s infra that I feel we should bend our strategies around using it as much as possible. A good strategy on world class infra has a very good chance of beating out an ideal strategy on virgin infra.

We should be a really damn good app/media store and IAP platform before we start working on providing gaming services. App positioning, auto updates / update notification, featured lists, recommendations, media rentals, etc.

When we do get around to providing gaming services, we should incrementally clone Steamworks as needed to satisfy key developers, rather than trying to design something theoretically improved that developers will have to adapt to.

The near term social VR push should be based strictly on the Facebook social graph. We can prove out our interaction models and experiences without waiting for the platform team to make an anonymized parallel implementation…

Consumer software culture

We need to become a consumer software shop.

The Oculus founders came from a tool company background, which has given us an “SDK and demos” development style that I don’t think best suits our goals. Oculus also plays to the press, rather than to the customers that have bought things from us, and it is going to be an adjustment to get there. Having an entire research division that is explicitly tasked with staying away from products is also challenging, and is probably going to get more so as product people crunch.

Talk of software at Oculus has been largely aspirational rather than practical. “What we want” versus “what we can deliver”. I was exasperated at the talk about “Oculus Quality”, as if it was a real thing instead of a vague goal. I do have concerns that at the top of the software chain of command, Nate and Brendan haven’t shipped consumer software.

Everyone knows that we aren’t going to run out of money and be laid off in a few months. That gives us the freedom to experiment and explore, looking for “compelling experiences”, and discarding things that don’t seem to be working out. In theory, that sounds ideal. In practice, it means we have a lot of people working on things that are never going to contribute any value to our customers.

Most people, given the choice, will continue to take the path that avoids being judged. Calling our products “developer kits”, “innovator editions”, and “beta” has been an explicit strategy along those lines. To avoid being judged on our software, we largely just don’t ship it.

For example, I am unhappy with Nate’s decision to not commit to any kind of social component for the consumer launch this year. I’m going to try to do something anyway, but it means swimming against the tide.

I would like to see us behave more like a scrappy web / mobile developer. Demos become products, and if they suck, people take responsibility. Move fast, watch our numbers, and react quickly. “What’s new” on our website should report new features added and bugs fixed on a weekly basis, not just the interviews we have given.

Get better value from partner companies

The most effective way to add value to our platform is to leverage the work of other successful companies, even if that means doing all the work for them and letting them take all the money. I contend that adding value to our platform to make more happy users is much more important at this point than maximizing revenue from a tiny pool. I think win-first, then optimize monetization, is an effective way to take advantage of our relatively safe position inside Facebook.

It is fine to shotgun dev kits out to lots of prominent developers, but the conversion rate to shipping products from top tier companies isn’t very good. A focused effort will yield better results.

My pursuit of Minecraft has been an explicitly strategic operation. We will benefit hugely if it exists on our platform, and if we close the deal on it, the time I spent coding on it will have been among the most valuable of my contributions.

We need a big video library streaming service, and I would be similarly willing to personally write a bunch of code to make sure it turned out great. Ideally it would be Netflix, but even a third tier company like M-Go would be far better than doing it ourselves. There is an argument along the lines of “We don’t need Netflix, we’ll cut our own content deals and be better off in the long run.” That makes the conscious (sometimes defensible) choice to suck in the near term for a long term advantage, but it also grossly underestimates the amount of work that all those companies have done. I have low confidence that a little ad-hoc team inside Oculus is going to deliver a better, or even comparable, movie / TV show watching system than the established players.

I know I don’t have broad buy-in on the value, but I feel strongly enough about the merits of demonstrating a “VR Store” that I think it is worth basically writing the app for Comixology. I look at it as a free compelling dataset for us, rather than us doing free work for them.

What other applications could be platform-defining for us with a modest VR reinterpretation?

Picking winners like this does clearly sacrifice platform impartiality, but I think it is a cost worth paying.

Even amongst the general application pool, we should be actively fixing 3rd party apps, and letting them drive the shape of SDK development. I am bothered by a lot of the text aliasing in VR apps, so I need to finish up my Unity-GUI-in-overlay-plane work and provide it to developers.

Abandon “Made for VR or go away” attitude

The iPhone was a phone. Many people would say it wasn’t actually a great phone, but it subsumed the functionality of something that everyone had and used, and that was important for adoption. If it had been delivered as the iPod Touch first, it would have been far less successful, and, one step farther, if it wasn’t also an iPod, it would have been another obscure PDA.

Oculus’ position has been hostile to apps that aren’t specifically designed for VR, and I think that is a mistake. We do not have a flood of AAA, or even A level content, and I don’t think it will magically appear as soon as we yell CV1 at the top of our lungs. The economics are just not very compelling to big studios, and developing to the solid 90 fps stereo CV1 spec is very challenging.

There are a number of things that can help:

Encourage limited VR modes for existing games. Even simple viewer or tourist modes, or mini-games that aren’t representative of the real gameplay would be of some value to VR users. Do we have a head mount sensor on CV1? We win if we can get our customers to think that when you put on your HMD, a good game should do SOMETHING.

Embrace Asynchronous Time Warp on PC, so developers have a fighting chance to get a decent VR experience out of their existing codebases. We are going to be forced to make this work eventually, but we have strategically squandered six months of lead time. This is directly attributable to Atman’s strong opinions on the issue.

We should make first class support for running conventional 2D apps in VR, and we should support net application streaming on mobile. It is going to be a long time before we have high quality VR applications for everything that people want to do; 2D applications floating in VR will fill a valuable role, especially as we move towards switching between multiple resident applications.

Even driver intercept applications 3D/VR-ifying naïve applications may eventually have a place. It is technically feasible to deliver the full comfortable-VR experience from a naïve application in some cases.

Abandon “Comfortable VR” as a dominant priority

Even aside from this almost killing Gear VR, our positioning on PC has been somewhat inconsistent. We talk about how critical Steamworks-like functionality is to our platform, because Steam gamers are our (PC) user base, but the intersection of stationary viewpoint game experiences and the games people play on Steam is actually quite small.

We should not support developers “doing it wrong”, like using an incorrect FOV for rendering, but “doing uncomfortable things”, like moving the viewpoint or playing panoramic video that can’t be positioned, are value decisions that will often be net positive. In fact, I believe that they will constitute the natural majority of hours spent in VR, and we do a disservice to our users by attempting to push against that natural position.

We have a problem here: it would be hard for the CEO of a sailboat company to be enthusiastic and genuine if they always got seasick whenever they went out, but Brendan is in exactly that position.

My Minecraft work is a good example. By its very nature, it is terrible from a comfort position — not only does it have navigation, but there is a lot of parabolic bounding up and down. Regardless, I have played more hours in it than any other VR experience except Cinema.

Brendan suggested there might be a better “Made for VR Minecraft” that was stationary and third person, like the HoloLens demo. This was frightening to hear, because it showed just how wide the gulf was between our views of what a great VR game should be. Playing with lego blocks can be fun, but running for your life while lost underground is moving.

Mobile expansion plan

It will not be that long until Note 4 class performance is available in much cheaper phones. Notably, being quad core (or octa-core on Exynos) does almost nothing for our VR performance, and neither does being able to burst to 2.5 GHz, both due to thermal reasons. A dual core Snapdragon that was only binned for 1.7 GHz CPU and 400 MHz GPU could run all the existing applications, and DK2 would argue that 1080p screens can still “Do VR”. This is still the most exciting vision for me – when everyone picks up a cheap Oculus headset holder for their phone when they walk out of the carrier store, just like grabbing a phone case.

I would rather push for cost reduction and model range expansion across all Samsung’s lines before going out to other vendors, but we are doing the right thing with Shaheen working towards building our own Android extensions to run Gear VR apps, so we have them on hand when we do need them.

The other major technical necessity is to engage with LCD panel manufacturers to see what the best non-OLED VR display can be, either with overclocked memory interfaces and global backlight controls, or custom building rolling portrait backlights. Once we have apps running on the custom dev kits with Shaheen’s work, we should be able to do experiments with this.

I am less enthusiastic about the dedicated LG headset that plugs into phones. It will require all the Android software engineering effort that Gear VR did for each headset it will be compatible with, as well as significant new hardware engineering, and the attach rate would be guaranteed to be a fraction of Gear VR due to a much higher price. It seems much more sensible to just make sure that CV2 is mobile friendly, rather than building a CV1.5 Mobile Edition. If you certify a phone for VR, you might as well have a drop-in holder for it as well as the plug in option; there would be little difference in the software, and the tradeoff between cost, position tracking, refresh rate, and resolution would be evaluated by the market.

If we want to allow mobile developers to prepare for eventual position tracking support, we could make a butchered DK2 / CV1 LED faceplate that attaches to a Gear VR so a PC could do the tracking and communicate positions back to the Gear VR over WiFi. I don’t feel any real urgency to do this; I doubt the apps people are developing today are going to be the killer apps of a somewhat distant position tracked mobile system.

The plan for a gaming-themed Atari hotel in Las Vegas has reportedly been scrapped

Six years after the announcement of plans to build Atari Hotels in eight cities across the US, including Las Vegas, only one now seems to be moving forward, in Phoenix, Arizona. The Las Vegas deal ultimately “didn’t come to fruition,” spokesperson Sara Collins told the Las Vegas Sun this week, and Atari Hotels is putting its focus into the Phoenix site “for the time being.”

Phoenix was always meant to be the first site, followed by other hotels in Austin, Chicago, Denver, Las Vegas, San Francisco, San Jose and Seattle. But Las Vegas is now apparently off the table, and there haven’t been any signs of life around the other planned locations. The FAQ on the Atari Hotels website notes, “Additional sites, including Denver, are being explored under separate development and licensing agreements.” The Atari Hotel project was announced in 2020 just before the onset of the COVID-19 pandemic and consequently experienced development delays. Construction on the Phoenix hotel, which was supposed to break ground in 2020, is expected to begin late this year, with its opening now planned for 2028.

But maybe don’t hold your breath. According to a December press release, the company is still trying to raise $35 million to $40 million to fund the “playable destination” for gamers in Phoenix.

This article originally appeared on Engadget at https://www.engadget.com/gaming/the-plan-for-a-gaming-themed-atari-hotel-in-las-vegas-has-reportedly-been-scrapped-214212269.html?src=rss

To Pressure Security Professionals, Mandiant Releases Database That Cracks Weak NTLM Passwords in 12 Hours

Ars Technica reports:

Security firm Mandiant [part of Google Cloud] has released a database that allows any administrative password protected by Microsoft’s NTLM.v1 hash algorithm to be hacked in an attempt to nudge users who continue using the deprecated function despite known weaknesses…. a precomputed table of hash values linked to their corresponding plaintext. These generic tables, which work against multiple hashing schemes, allow hackers to take over accounts by quickly mapping a stolen hash to its password counterpart… Mandiant said it had released an NTLMv1 rainbow table that will allow defenders and researchers (and, of course, malicious hackers, too) to recover passwords in under 12 hours using consumer hardware costing less than $600 USD. The table is hosted in Google Cloud. The database works against Net-NTLMv1 passwords, which are used in network authentication for accessing resources such as SMB network sharing.

Despite its long- and well-known susceptibility to easy cracking, NTLMv1 remains in use in some of the world’s more sensitive networks. One reason for the lack of action is that utilities and organizations in industries, including health care and industrial control, often rely on legacy apps that are incompatible with more recently released hashing algorithms. Another reason is that organizations relying on mission-critical systems can’t afford the downtime required to migrate. Of course, inertia and penny-pinching are also causes.

“By releasing these tables, Mandiant aims to lower the barrier for security professionals to demonstrate the insecurity of Net-NTLMv1,” Mandiant said. “While tools to exploit this protocol have existed for years, they often required uploading sensitive data to third-party services or expensive hardware to brute-force keys.”

“Organizations that rely on Windows networking aren’t the only laggards,” the article points out. “Microsoft only announced plans to deprecate NTLMv1 last August.”
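The precomputed-table idea in the excerpt above can be illustrated with a toy sketch. This is not Mandiant's table and not Net-NTLMv1: it uses SHA-256 from Python's standard library purely as a stand-in hash, and it is a plain lookup table rather than a true rainbow table (which stores hash chains to trade lookup time for space). The candidate list and function names are hypothetical.

```python
import hashlib


def build_lookup_table(candidates):
    """Precompute a hash -> plaintext mapping for every candidate password."""
    return {hashlib.sha256(p.encode("utf-8")).hexdigest(): p for p in candidates}


def recover(table, stolen_hash):
    """Return the plaintext for a stolen hash, or None if it was never precomputed."""
    return table.get(stolen_hash)


# Hypothetical candidate list, for illustration only.
CANDIDATES = ["password", "letmein", "hunter2"]
TABLE = build_lookup_table(CANDIDATES)
```

The expensive hashing happens once, when the table is built; recovering a password afterwards is a single dictionary lookup, which is why an unsalted, deprecated scheme is so exposed once someone publishes the table.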

Thanks to Slashdot reader joshuark for sharing the news.

Read more of this story at Slashdot.

Two More Offshore Wind Projects in the US Allowed to Continue Construction

Friday a federal judge “cleared U.S. power company Dominion Energy to resume work on its Virginia offshore wind project.” But a U.S. federal judge also ruled Thursday that another major offshore wind farm is allowed to resume construction, reports the Hill. “The project, which would supply power to New York, was one of five that were halted by the Trump administration in December….”

In fact, there were three different court rulings this week each allowing construction to continue on a U.S. wind project:

Judge Carl Nichols, a Trump appointee, granted a preliminary injunction allowing Empire Wind to keep building… Another, Revolution Wind, was also allowed to move forward in court this week… The project would provide enough power for up to 500,000 homes, according to its website. The court’s decision allows construction to resume while the underlying case against the Trump order plays out.

Meanwhile, power company Orsted “is also suing over the pause of its Sunrise Wind project for New York,” reports the Associated Press, “with a hearing still to be set.”

The fifth paused project is Vineyard Wind, under construction in Massachusetts. Vineyard Wind LLC, a joint venture between Avangrid and Copenhagen Infrastructure Partners, joined the rest of the developers in challenging the administration on Thursday.

CNN points out that the Vineyard Wind project “has been allowed to send power to the grid even amid Trump’s suspension, a spokesperson for regional grid operator ISO-New England told CNN in an email.”

Residential customers in the mid-Atlantic region, including Virginia, desperately need more energy to service the skyrocketing demand from data centers, and many are seeing spiking energy bills while they wait for new power to be brought online.

CNN notes that President Trump said last week “My goal is to not let any windmill be built; they’re losers.”

The Associated Press adds that “In contrast to the halted action in the US, the global offshore wind market is growing, with China leading the world in new installations. Nearly all of the new electricity added to the grid in 2024 was renewable. The British government said on Wednesday it had secured a record 8.4 gigawatts of offshore wind in Europe’s largest offshore wind auction, enough clean electricity to power more than 12m homes.”

Read more of this story at Slashdot.

Important AMDGPU & AMDKFD Driver Improvements Readied For Linux 6.20~7.0

On Friday AMD sent out another set of AMDGPU kernel graphics driver and AMDKFD kernel compute driver patches for queuing in DRM-Next ahead of the upcoming Linux 6.20~7.0 kernel cycle kicking off in February…