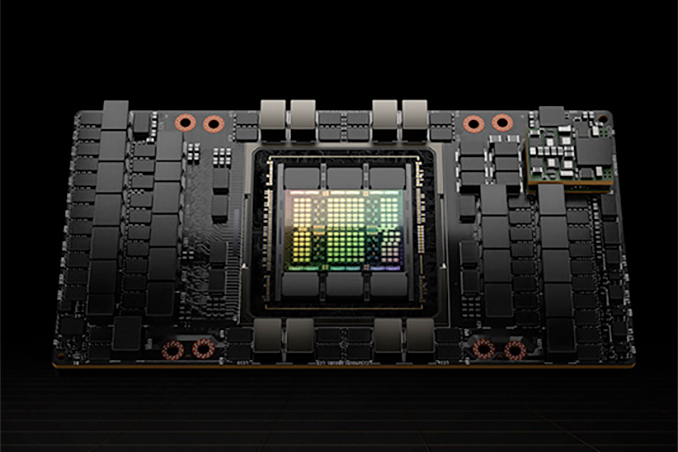

CoreWeave, an Nvidia-backed cloud service provider specializing in GPU-accelerated services, has secured a debt facility worth $2.3 billion using Nvidia’s H100-based hardware as collateral. The company intends to use the funds to procure more compute GPUs and systems from Nvidia, construct new data centers, and hire additional personnel to meet the growing demand for AI and HPC workloads.

CoreWeave has benefited enormously from the rise of generative AI thanks to its large-scale cloud infrastructure, its exclusive relationship with Nvidia, and its ability to procure the company’s H100 compute GPUs and HGX H100 supercomputing platforms amid shortages of AI and HPC hardware. Because many AI and HPC applications in use today were developed for Nvidia’s CUDA platform and API, they require Nvidia’s GPUs. Access to the H100 therefore gives CoreWeave a competitive edge over traditional CSPs such as AWS, Google, and Microsoft.

In addition to offering its customers access to advanced hardware, CoreWeave collaborates with AI startups and major CSPs, which are essentially its competitors, to build clusters that power AI workloads. These rivals, namely AWS and Google, have their own processors for AI workloads and continue to develop new ones. Still, given the dominance of CUDA, they have to offer Nvidia-powered instances to their clients and are currently grappling with Nvidia GPU supply limitations.

CoreWeave’s competitive advantage, which rests on its access to Nvidia’s latest hardware, is a key factor in the company’s ability to secure such substantial credit lines from firms like Magnetar Capital, Blackstone, Coatue, DigitalBridge, BlackRock, PIMCO, and Carlyle. CoreWeave had previously raised $421 million from Magnetar at a valuation exceeding $2 billion.

Notably, this is not the first example of an Nvidia-supported startup reaping substantial benefits from its association with the tech giant. Last month, Inflection AI built a supercomputer worth hundreds of millions of dollars powered by 22,000 Nvidia H100 compute GPUs.

However, this is the first time Nvidia’s H100-based hardware has been used as collateral, which underscores these processors’ importance in the capital-intensive AI and HPC cloud business. The size of the loan also points to a growing market for private asset-based financing secured by physical assets.

“We negotiated with them to find a schedule for how much collateral to go into it, what the depreciation schedule was going to be versus the payoff schedule,” said Michael Intrator, CoreWeave’s CEO. “For us to go out and to borrow money against the asset base is a very cost-effective way to access the debt markets.”

The company recently announced a $1.6 billion data center in Texas and plans to expand its presence to 14 locations within the U.S. by the end of 2023.

Source: Reuters

Source: AnandTech – Cloud Provider Gets $2.3 Billion Debt Using Nvidia’s H100 as Collateral