Alongside this morning’s announcement of the new Radeon RX 7800 XT and RX 7700 XT video cards, AMD is also using their Gamescom launch event to deliver an update on the state of their Radeon software stack. The company has been working on a couple of performance-improvement projects since the launch of the Radeon RX 7000 series in late 2022, including the highly anticipated FSR 3, and they’re finally offering a brief update on those projects ahead of their September launches.

FSR 3 Update: First Two Games Available in September

First and foremost, AMD is offering a bit of a teaser update on FidelityFX Super Resolution 3 (FSR 3), their frame interpolation (frame generation) technology and the company’s answer to NVIDIA’s DLSS 3 frame generation feature. When FSR 3 was first announced back at the Radeon RX 7900 series launch in 2022, AMD offered only the broadest of details on the technology, with the unspoken implication being that they had only recently started development.

At a high level, FSR 3 was (or rather, will be) AMD’s open source take on frame interpolation, similar to FSR 2 and the rest of the FidelityFX suite of game effects. With FSR 3, AMD is putting together a portable frame interpolation technique that they’re dubbing “AMD Fluid Motion Frames”, which, unlike DLSS 3, is not vendor proprietary and can work on a variety of cards from multiple vendors. Furthermore, the source code for FSR 3 will be freely available as part of AMD’s GPUOpen community.

Since that initial announcement, AMD has not had anything else of substance to say on the state of FSR 3 development. But at last, the first shipping version of FSR 3 is in sight, with AMD expecting to bring it to the first two games next month.

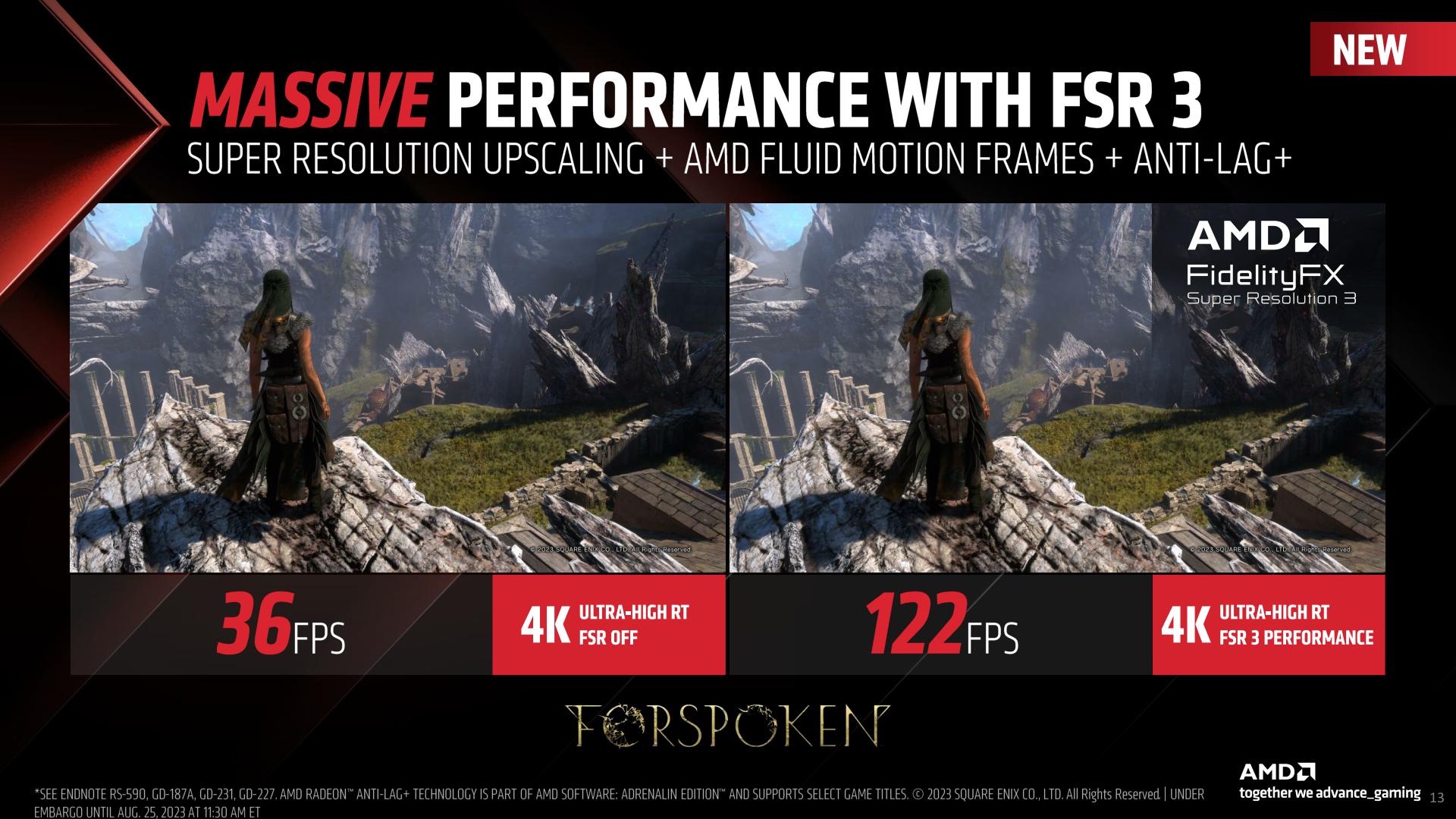

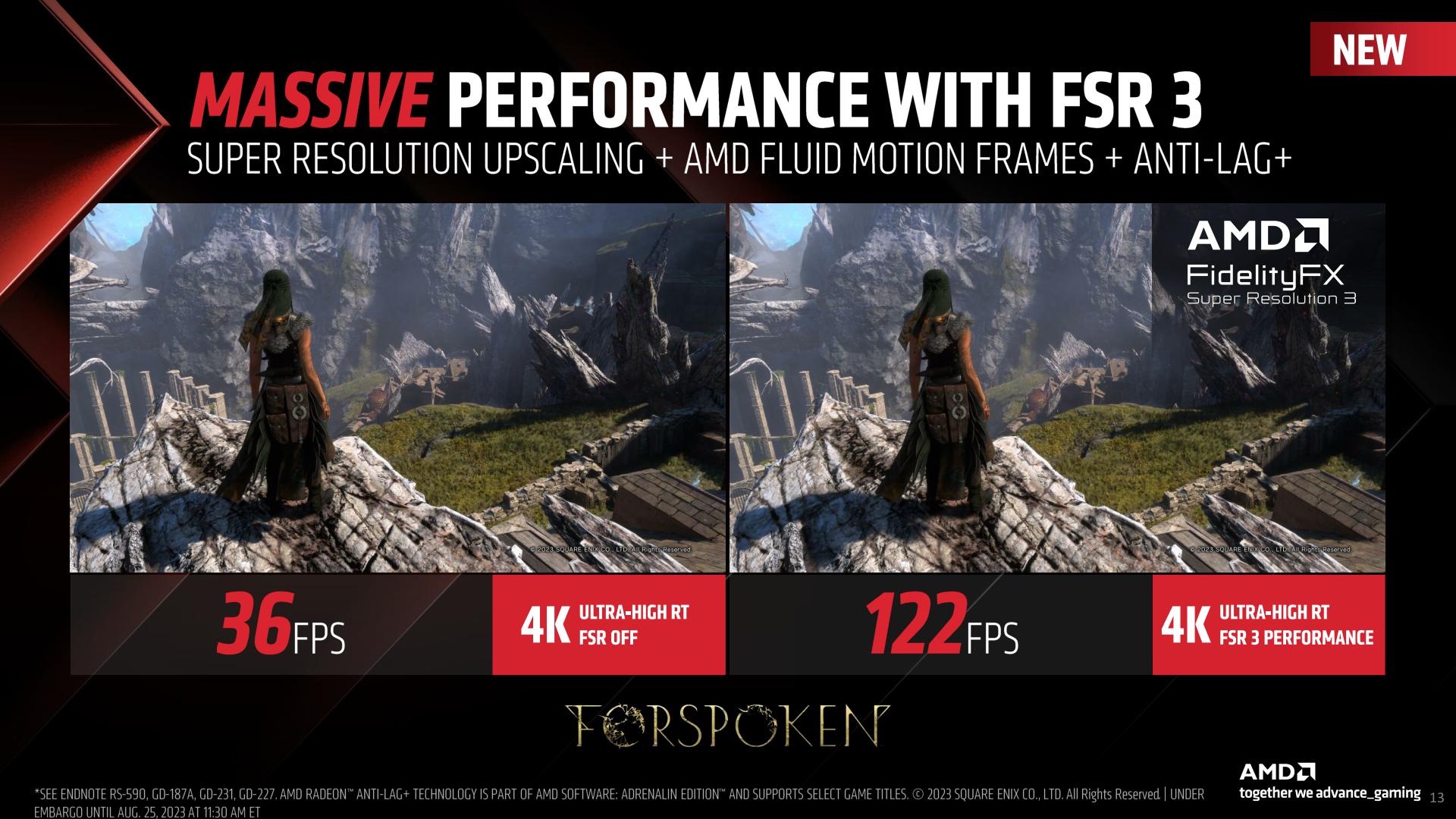

Ahead of that launch, they are offering a small taste of what’s to come, with some benchmark numbers for the technology in action on Forspoken. Using a combination of Fluid Motion Frames, Anti-Lag+, and temporal image upscaling, AMD was able to boost 4K performance on Forspoken from 36fps to 122fps.

Notably, AMD is using “Performance” mode here, which for temporal upscaling means rendering at half the desired resolution on each axis, or one-quarter of the output pixels; in this case, rendering at 1080p for a 4K/2160p output. So a good deal of the heavy lifting is being done by temporal upscaling, but not all of it.
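For reference, the arithmetic behind the 1080p-for-4K figure is simple. The sketch below assumes the per-axis scale factors AMD published for FSR 2’s quality modes (Quality 1.5x, Balanced 1.7x, Performance 2.0x, Ultra Performance 3.0x) and treats the new native anti-aliasing mode as a factor of 1.0; the function names are hypothetical and not part of any AMD API.

```python
# Per-axis scale factors for FSR 2's quality modes, per AMD's FSR 2 documentation.
# "Native AA" is assumed here to mean no scaling at all (factor 1.0).
SCALE_FACTORS = {
    "Native AA": 1.0,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Internal render resolution for a given output resolution and mode."""
    factor = SCALE_FACTORS[mode]
    # Each axis is divided by the factor, so the pixel count shrinks by factor**2.
    return round(out_w / factor), round(out_h / factor)


def pixel_fraction(mode: str) -> float:
    """Fraction of the output pixels that are actually rendered in a given mode."""
    return 1.0 / SCALE_FACTORS[mode] ** 2


for mode in SCALE_FACTORS:
    w, h = render_resolution(3840, 2160, mode)
    print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of 4K pixels)")
```

With these assumptions, `render_resolution(3840, 2160, "Performance")` returns `(1920, 1080)` and `pixel_fraction("Performance")` returns `0.25`, which is where the one-quarter figure comes from.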

AMD has also published a set of numbers without temporal upscaling. These use their new native anti-aliasing mode, which renders at the desired output resolution and then uses temporal techniques purely for anti-aliasing, combined with Fluid Motion Frames. In that case, performance at 1440p goes from 64fps to 106fps.
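AMD’s two data points also allow a rough sanity check on how much of each uplift frame interpolation can account for. The sketch assumes that Fluid Motion Frames inserts exactly one generated frame per rendered frame, so it can at most double the displayed frame rate; that assumption, and the helper names, are ours rather than AMD’s.

```python
# AMD's two published data points (displayed frames per second).
FORSPOKEN_4K = (36, 122)       # baseline -> FMF + Anti-Lag+ + "Performance" upscaling
NATIVE_AA_1440P = (64, 106)    # baseline -> FMF + native AA mode (no upscaling)

# Assumption: one generated frame per rendered frame, so at most a 2x gain.
MAX_FRAMEGEN_GAIN = 2.0


def speedup(before_fps: float, after_fps: float) -> float:
    """Overall frame rate multiplier."""
    return after_fps / before_fps


def min_gain_from_other_techniques(before_fps: float, after_fps: float) -> float:
    """Lower bound on the part of the gain that frame generation alone cannot explain."""
    return max(1.0, speedup(before_fps, after_fps) / MAX_FRAMEGEN_GAIN)


def implied_render_fps(displayed_fps: float) -> float:
    """Real (rendered) frame rate if every other displayed frame is generated."""
    return displayed_fps / MAX_FRAMEGEN_GAIN


for name, (before, after) in [("Forspoken 4K", FORSPOKEN_4K), ("Native AA 1440p", NATIVE_AA_1440P)]:
    print(
        f"{name}: {speedup(before, after):.2f}x overall, "
        f">= {min_gain_from_other_techniques(before, after):.2f}x from non-interpolation sources, "
        f"~{implied_render_fps(after):.0f} rendered fps"
    )
```

Under that assumption, the Forspoken result (about 3.39x) needs at least a 1.69x gain from something other than interpolation, consistent with upscaling doing much of the work. The native AA result (about 1.66x) implies the game was rendering roughly 53 real frames per second with interpolation enabled, versus the 64fps baseline; whether that drop comes from interpolation overhead, from the AA mode itself, or both, AMD hasn’t said.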

For the time being, these are the only two sets of data points AMD is providing. Otherwise, the screenshots included in their press deck are not nearly high enough in quality to make any kind of meaningful image quality comparisons, and AMD hasn’t published any videos of the technology in action. So convincing visual evidence of FSR 3 in action is, at least ahead of today’s big reveal, lacking. But it is a start nonetheless.

As for the technical underpinnings, AMD has answered a few questions relating to FSR3/Fluid Motion Frames, but the company is not doing a deep dive on the technology at this time. So there remains a litany of unanswered questions about its implementation.

As for compatibility, according to AMD, FSR 3 will work on any RDNA (1) architecture GPU or newer, or equivalent hardware. RDNA (1) is a DirectX Feature Level 12_1 architecture, which means equivalent hardware spans a pretty wide gamut of GPUs, potentially going back as far as NVIDIA’s Maxwell 2 (GTX 900) architecture. That said, I suspect there’s more to compatibility than just DirectX feature levels, but AMD isn’t saying much more about system requirements. What they are saying, at least, is that while it will work on RDNA (1) architecture GPUs, they recommend RDNA 2/RDNA 3 products for the best performance.

Along with targeting a wide range of PC video cards, AMD is also explicitly noting that they’re targeting game consoles. So in the future, game developers will be able to integrate FSR 3 and have it interpolate frames on the PlayStation 5 and Xbox Series X|S consoles, both of which are based on AMD RDNA 2 architecture GPUs.

As for how FSR 3 operates, AMD made it clear in our briefing that it requires motion vectors, just like FSR 2’s temporal upscaling and rival NVIDIA’s DLSS 3 frame generation. The use of motion vectors is a big part of why FSR 3 requires per-game integration, and a big part of delivering high quality interpolated frames. Throughout our call “optical flow” did not come up, but, frankly, it’s hard to envision AMD not also making use of optical flow fields as part of their implementation. If so, they may be relying on D3D12 motion estimation as a generic baseline implementation, since it wouldn’t require accessing each vendor’s proprietary integrated optical flow engine.
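To make the role of motion vectors concrete, here is a deliberately simplified sketch of motion-compensated interpolation on a single scanline. It is not AMD’s algorithm, which AMD hasn’t disclosed; it assumes a per-pixel motion field is already available (as a game would supply), and it ignores everything that makes real frame generation hard, such as occlusions, disoccluded regions, UI overlays, and 2D vectors. The function names are hypothetical.

```python
import math


def sample(line: list[float], pos: float) -> float:
    """Linearly interpolate a 1D scanline at a fractional position, clamped to the edges."""
    pos = min(max(pos, 0.0), len(line) - 1.0)
    i0 = math.floor(pos)
    i1 = min(i0 + 1, len(line) - 1)
    frac = pos - i0
    return line[i0] * (1.0 - frac) + line[i1] * frac


def interpolate_midpoint(prev: list[float], nxt: list[float], motion: list[float]) -> list[float]:
    """Build a frame halfway in time between prev and nxt.

    motion[x] is the displacement in pixels (prev -> nxt) of whatever lands on
    pixel x in the generated frame. We fetch from prev half a vector back and
    from nxt half a vector forward, then average the two samples.
    """
    out = []
    for x, v in enumerate(motion):
        a = sample(prev, x - 0.5 * v)
        b = sample(nxt, x + 0.5 * v)
        out.append(0.5 * (a + b))
    return out


prev = [0, 0, 9, 0, 0, 0]   # bright pixel at index 2
nxt = [0, 0, 0, 0, 9, 0]    # same pixel two positions to the right
print(interpolate_midpoint(prev, nxt, [2.0] * 6))  # motion-compensated
print(interpolate_midpoint(prev, nxt, [0.0] * 6))  # no motion info: plain blend
```

With a motion field of 2 everywhere, the bright pixel lands at index 3, halfway along its path. Passing an all-zero motion field instead degrades to a plain blend, `[0.0, 0.0, 4.5, 0.0, 4.5, 0.0]`, which shows up on screen as ghosting; avoiding that is what the motion vectors are for.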

What’s not necessary, however, is AI/machine learning hardware. Since AMD is targeting the consoles, they wouldn’t be able to rely on it anyhow.

AMD also says that FSR 3 includes further latency reduction technologies (which are needed to hide the latency of interpolation). It’s unclear if this is something equivalent to NVIDIA’s Reflex marker system, or if it’s something else entirely.
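Why interpolation adds latency in the first place can be seen with a simple pacing model. The sketch below is our own idealization, not a description of AMD’s pipeline: the generated frame between rendered frames N and N+1 can only be built once N+1 exists, so N+1 is held back by half a base frame interval (plus the cost of the generation pass) to keep the output evenly paced. The function names and the generation-cost parameter are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Interval between rendered frames, in milliseconds."""
    return 1000.0 / fps


def added_hold_latency_ms(base_fps: float, generation_cost_ms: float = 0.0) -> float:
    """Extra delay before a rendered frame is displayed when interpolation is on.

    Idealized assumption: the frame between N and N+1 needs N+1 to exist, is
    shown first, and N+1 is then held back by half a base frame interval so
    that real and generated frames alternate evenly. Generation time adds on top.
    """
    return 0.5 * frame_time_ms(base_fps) + generation_cost_ms


for fps in (30, 60, 120):
    print(f"{fps} fps base: ~{added_hold_latency_ms(fps):.1f} ms extra before generation cost")
```

At a base rate of 60fps, for example, this model gives about 8.3 ms of extra delay before any generation cost, and lower base frame rates make it worse, which is the kind of penalty latency-reduction technology would be expected to claw back elsewhere in the pipeline.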

The first two games to get FSR 3 support will be the aforementioned Forspoken, as well as the recently launched Immortals of Aveum. AMD expects FSR 3 to be patched into both games here in September, presumably towards the later part of the month.

Looking farther forward, AMD has lined up several other developers and games to support the technology, including RPG-turned-technology-showcase Cyberpunk 2077. Given that Forspoken and Immortals of Aveum are essentially going to be the prototypes for testing FSR 3, AMD isn’t saying when support for the technology will come to these other games. That said, with plans to make it available soon as an Unreal Engine plugin, the company certainly has its eyes on enabling wide-scale deployment of the technology over time.

For now, this is only a brief teaser. I expect that AMD will have a lot more to say and to disclose about FSR 3 once the patches for Forspoken and Immortals of Aveum are ready for release.

Hypr-RX: Launching September 6th

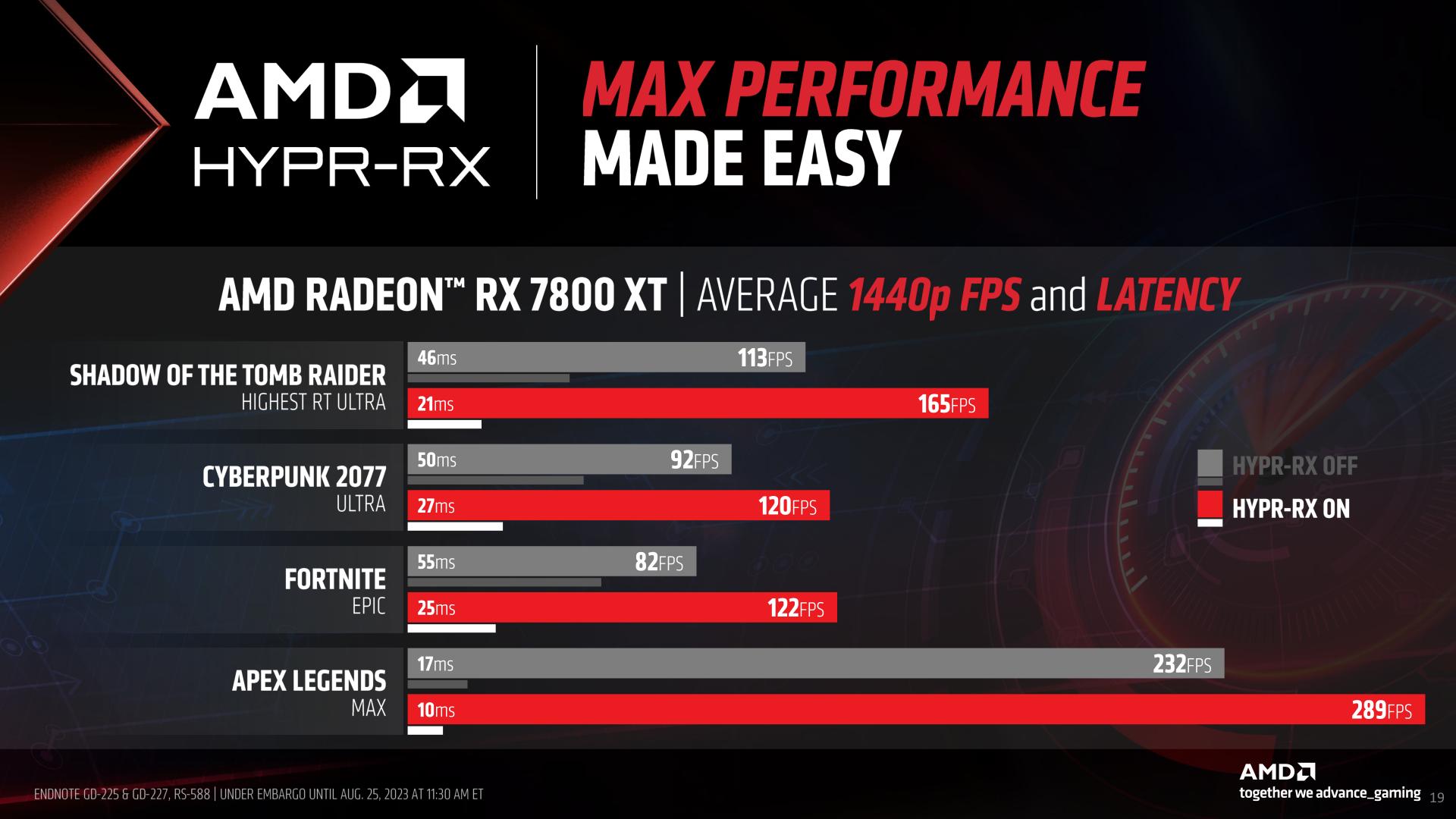

Second up, we have Hypr-RX. AMD’s smorgasbord feature combines the company’s Radeon Super Resolution (spatial upscaling), Radeon Anti-Lag+ (frame queue management), and Radeon Boost (dynamic resolution scaling). All three technologies are already available in AMD’s drivers today; however, they can’t currently all be used together. Super Resolution and Boost both touch the rendering resolution of a game, and Anti-Lag steps on the toes of Boost’s dynamic resolution adjustments.
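One way to see why these features collide is that Super Resolution and Boost both shrink the render resolution, and their reductions compound. The sketch below is purely illustrative: the class, its parameters, and the linear motion-to-scale mapping are hypothetical and are not AMD driver settings, and AMD hasn’t detailed how Hypr-RX arbitrates between the features.

```python
from dataclasses import dataclass


@dataclass
class ResolutionPolicy:
    """Hypothetical model of stacked resolution reductions (not AMD's settings)."""
    rsr_scale: float = 1 / 1.5        # fixed per-axis scale chosen for spatial upscaling
    boost_min_scale: float = 0.5      # lowest extra per-axis scale Boost may apply
    motion_threshold: float = 1.0     # camera speed (arbitrary units) where Boost bottoms out

    def boost_scale(self, camera_speed: float) -> float:
        """Full resolution when still, falling linearly to the floor as motion increases."""
        t = min(max(camera_speed / self.motion_threshold, 0.0), 1.0)
        return 1.0 - t * (1.0 - self.boost_min_scale)

    def render_size(self, out_w: int, out_h: int, camera_speed: float) -> tuple[int, int]:
        """Combined render resolution: the two per-axis scales multiply."""
        scale = self.rsr_scale * self.boost_scale(camera_speed)
        return max(1, round(out_w * scale)), max(1, round(out_h * scale))


policy = ResolutionPolicy(rsr_scale=0.5, boost_min_scale=0.5)
print(policy.render_size(3840, 2160, camera_speed=0.0))  # camera still
print(policy.render_size(3840, 2160, camera_speed=2.0))  # fast motion
```

With `rsr_scale=0.5` and `boost_min_scale=0.5`, a 4K output renders at 1920x1080 while the camera is still but drops to 960x540 during fast motion, a quarter of the pixels again. Without a single component coordinating the two, each feature would be making resolution decisions without accounting for the other.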

Hypr-RX, in turn, is designed to bring all three technologies together to make them compatible with one another, and to put the collective set of features behind a single toggle. In short, if you turn on Hypr-RX, AMD’s drivers will use all of the tricks available to improve game performance.

Hypr-RX was supposed to launch by the end of the first half of the year, a date that has since come and gone. But, although a few months late, AMD has finally finished pulling together the feature, just in time for the Radeon RX 7800 XT launch.

To that end, AMD will be shipping Hypr-RX in their next Radeon driver update, which is due on September 6th. This will be the launch driver set posted for the RX 7800 XT, bringing support for the new card and AMD’s newest software features all at once. On that note, it bears mentioning that Hypr-RX requires an RDNA 3 GPU, meaning it’s only available for Radeon RX 7000 video cards as well as the Ryzen Mobile 7040HS CPU family.

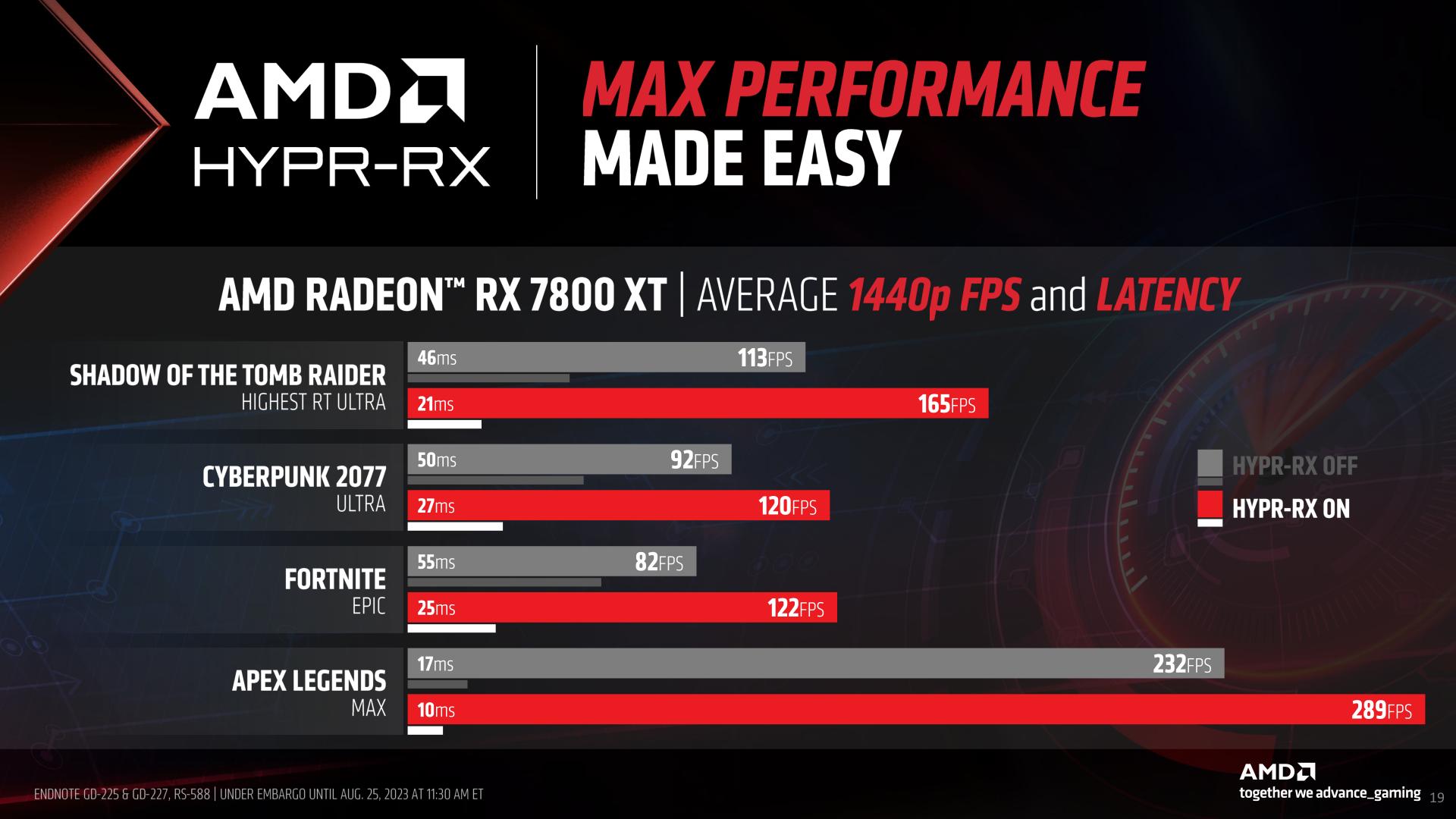

Taking a quick look at performance, AMD has released some benchmark numbers showing both the latency and frame rates for several games on the RX 7800 XT. In all cases latency is down and frame rates are up, by amounts that vary from game to game. As these individual technologies are already available today, there’s not much new to say about them, but given the overlap in their abilities and the resulting technical hurdles, it’s good to see that AMD finally has them playing nicely together.

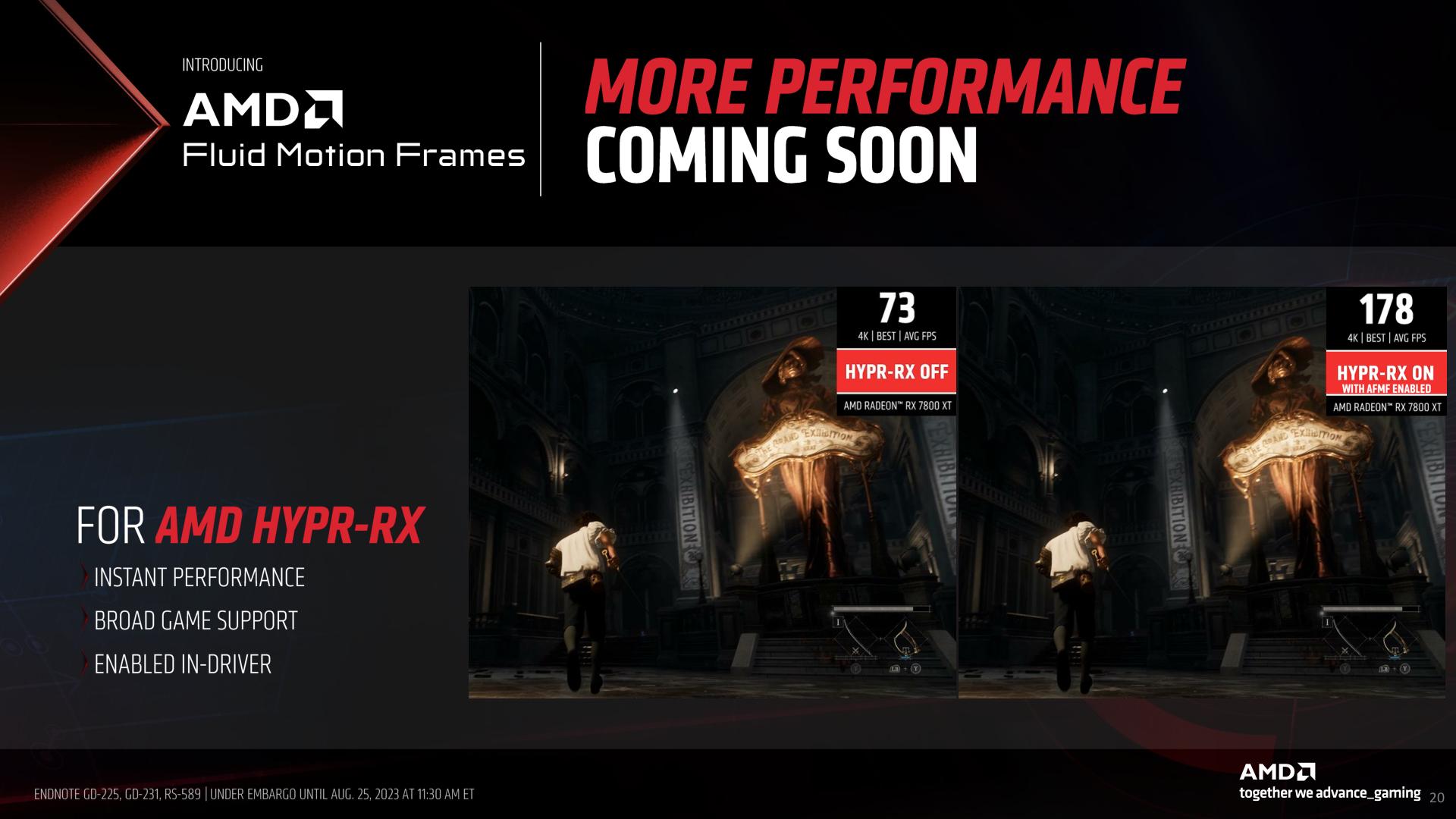

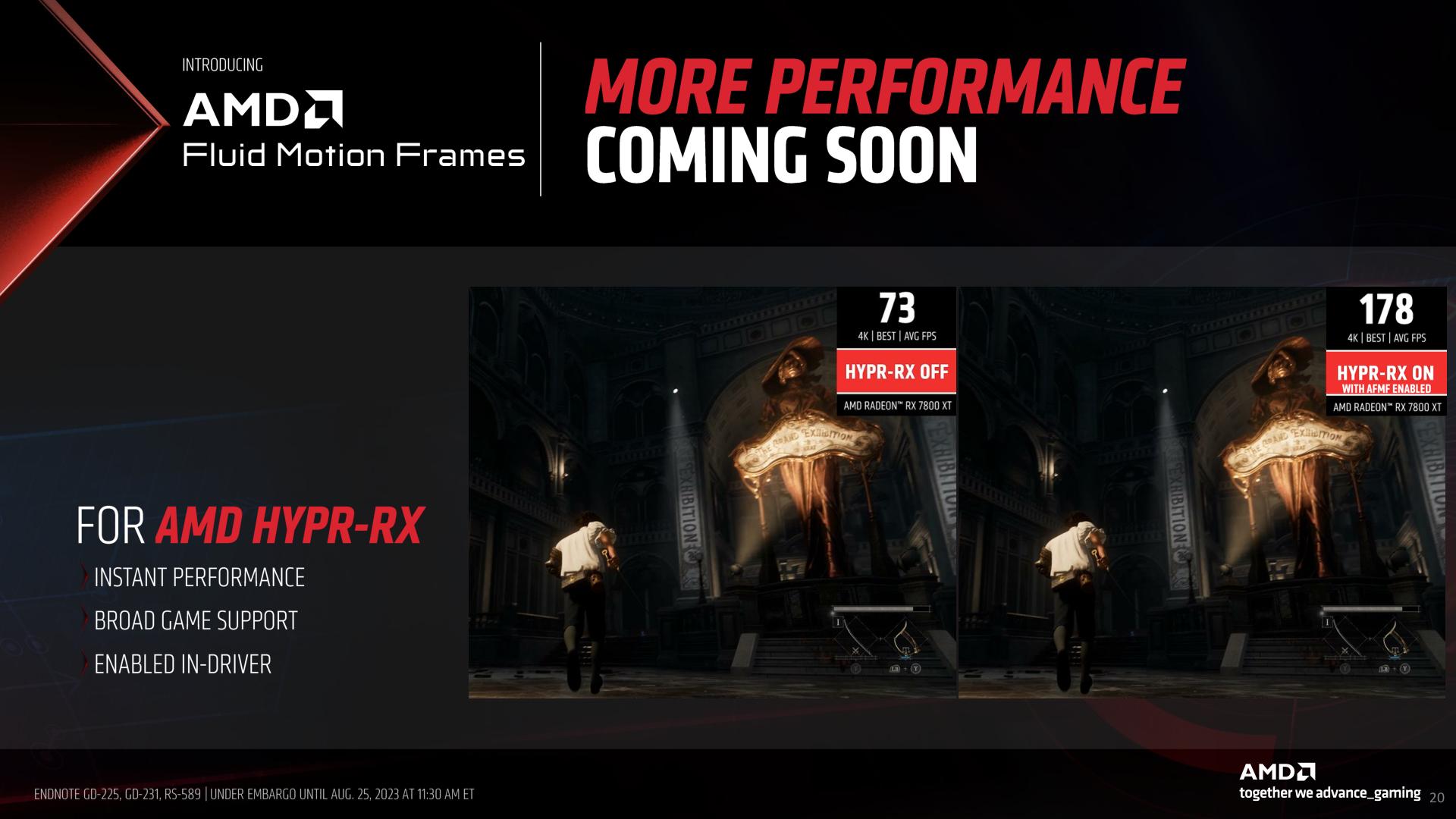

But with FSR 3 and its frame interpolation abilities soon to become available, AMD won’t be stopping there for Hypr-RX. The next item on AMD’s to-do list is to add Fluid Motion Frames support to Hypr-RX, allowing AMD’s drivers to use frame interpolation (frame generation) to further improve game performance.

This is a bigger and more interesting challenge than it may first appear, because AMD is essentially promising to (try to) bring frame interpolation to every game at the driver level. FSR 3 requires that the technology be built into each individual game, in part because it relies on motion vector data. That motion vector data is not available to driver-level overrides, which is why Hypr-RX’s Radeon Super Resolution ability is only a spatial upscaling technology.

Put another way: AMD apparently thinks they can do frame interpolation without motion vectors, and still achieve a good enough degree of image quality. It’s a rather audacious goal, and it will be interesting to see how it turns out in the future.
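Without game-supplied motion vectors, a driver would have to infer motion from the finished frames themselves, which is the job optical flow or motion estimation performs. The sketch below is a toy stand-in for that step, not anything AMD has described: it estimates a single global shift for a scanline by brute-force matching, then reuses that shift for midpoint interpolation. Real content moves in many directions at once, which is exactly why doing this well at the driver level is hard. All names here are hypothetical.

```python
import math


def clamp_index(i: int, n: int) -> int:
    """Clamp an index to [0, n - 1] (edge extension)."""
    return min(max(i, 0), n - 1)


def estimate_global_shift(prev: list[float], nxt: list[float], search: int) -> int:
    """Brute-force search for the shift s where prev[x] best matches nxt[x + s].

    Cost is the sum of absolute differences over the whole scanline, with
    out-of-range indices clamped to the edge. Ties keep the first shift tried
    (the most negative one).
    """
    n = len(prev)
    best_shift, best_cost = 0, float("inf")
    for s in range(-search, search + 1):
        cost = sum(abs(prev[x] - nxt[clamp_index(x + s, n)]) for x in range(n))
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def sample(line: list[float], pos: float) -> float:
    """Linearly interpolate a scanline at a fractional position, clamped to the edges."""
    pos = min(max(pos, 0.0), len(line) - 1.0)
    i0 = math.floor(pos)
    i1 = min(i0 + 1, len(line) - 1)
    frac = pos - i0
    return line[i0] * (1.0 - frac) + line[i1] * frac


def naive_blend(prev: list[float], nxt: list[float]) -> list[float]:
    """Average the two frames with no motion compensation (produces ghosting)."""
    return [0.5 * (a + b) for a, b in zip(prev, nxt)]


def interpolate_without_game_vectors(prev: list[float], nxt: list[float], search: int = 3) -> list[float]:
    """Estimate motion from the frames alone, then build the midpoint frame."""
    s = estimate_global_shift(prev, nxt, search)
    return [0.5 * (sample(prev, x - 0.5 * s) + sample(nxt, x + 0.5 * s)) for x in range(len(prev))]


prev = [0, 0, 9, 0, 0, 0]
nxt = [0, 0, 0, 0, 9, 0]
print(estimate_global_shift(prev, nxt, search=3))
print(interpolate_without_game_vectors(prev, nxt))
print(naive_blend(prev, nxt))
```

On this moving-pixel example, `estimate_global_shift` recovers a shift of 2 and the interpolated frame places the pixel at index 3, halfway along its path, while `naive_blend` leaves two half-bright copies. Real scenes would need a per-pixel or per-block search, which is where both the compute cost and the image quality risk come in.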

Wrapping things up, AMD’s current implementation of Hypr-RX will be available on September 6th as part of their new driver package. Meanwhile Hypr-RX with frame interpolation is a work in progress, and will be coming at a later date.

Source: AnandTech – AMD Teases FSR 3 and Hypr-RX: Updated Radeon Performance Technologies Available in September