Kioxia this morning is updating their BG series of M.2 2230 SSDs for OEMs with the addition of the new BG5 family of drives. The latest in the company’s lineup of postage stamp-sized SSDs, the BG5 series sees Kioxia reworking both the NAND and the underlying controller to use newer technologies. As a result, the latest iteration of the drive gains higher overall performance thanks to the combination of PCIe 4.0 support and the switch to Kioxia’s latest BiCS5 NAND. However, in an unexpected twist, the BG series is no longer a single-chip design; instead, the NAND and controller on the BG5 are now separate packages.

Long a fixture of pre-built systems, Kioxia’s BG series of SSDs has been a favorite of OEMs for the last several years due to its small size – typically M.2 2230 or smaller – as well as its low cost. In particular, the DRAMless design keeps overall component costs down, and it allowed Kioxia to simply stack the NAND dies on top of the controller, giving the SSDs their small footprint. The simplicity and tight thermal tolerances of such a stacked package also mean that power consumption has been kept quite low. The resulting performance is very much entry-level, and thus rarely noteworthy, but for a drive not much bigger than a postage stamp, it fills a small role.

Coming a bit over two years after the BG4 was introduced, the headline update in the BG5 is the addition of PCIe 4.0 support. Whereas the BG4 was a PCIe 3.0 x4 drive, the BG5 is PCIe 4.0 x4, which at this point gives the drive more bus bandwidth than it could ever hope to use. Truth be told, I was a bit surprised to see the BG5 move to PCIe 4.0, given the limited performance impact on an entry-level drive and the tight power limits, though PCIe 4.0 does bring some second-order benefits. In particular, any OEM that ends up allocating only two lanes to the drive (something that happens now and then) will still get the equivalent of PCIe 3.0 x4 bandwidth, which in turn is high enough to run the drive at almost full performance. This underscores one of the big improvements offered by higher PCIe speeds: for components that don’t need more bandwidth, integrators can instead cut down on the number of lanes.
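The lane-halving argument comes down to simple arithmetic: PCIe 4.0 doubles the per-lane transfer rate of PCIe 3.0 (16 GT/s versus 8 GT/s) while both use 128b/130b encoding, so a Gen4 x2 link matches a Gen3 x4 link. The sketch below computes only the post-encoding link rate; it deliberately ignores packet and protocol overhead, so real drives land somewhat below these ceilings.

```python
# Per-lane transfer rates in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}


def pcie_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Usable one-direction link bandwidth in GB/s after 128b/130b encoding.

    Ignores packet/protocol overhead (TLP headers, flow control), so this is
    an upper bound rather than what a drive will actually achieve.
    """
    gt_s = TRANSFER_RATE_GT_S[gen]
    bits_per_s = gt_s * 1e9 * 128 / 130  # strip the encoding overhead
    return bits_per_s * lanes / 8 / 1e9  # bits -> bytes, then bytes -> GB


for gen, lanes in [("3.0", 4), ("4.0", 2), ("4.0", 4)]:
    print(f"PCIe {gen} x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

This prints roughly 3.94 GB/s for both PCIe 3.0 x4 and PCIe 4.0 x2, and 7.88 GB/s for PCIe 4.0 x4. The BG5’s rated 3.5 GB/s sequential read fits under the two-lane ceiling, with only a thin margin once protocol overhead is counted, which is consistent with a two-lane hookup costing little performance.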

| Kioxia BG5 SSD Specifications | |
|---|---|
| Capacity | 256 GB / 512 GB / 1 TB |
| Form Factor | M.2 2230 or M.2 2280 |
| Interface | PCIe Gen4 x4, NVMe 1.4 |
| NAND Flash | 112L BiCS5 3D TLC |
| Sequential Read | 3500 MB/s |
| Sequential Write | 2900 MB/s |
| Random Read | 500K IOPS |
| Random Write | 450K IOPS |

Speaking of performance, the BG5 drives are rated for higher throughput than their predecessor. Kioxia’s official press release only offers a single set of figures, so these are almost certainly for the 1TB configuration; for that drive, Kioxia rates it at 3500MB/sec sequential reads and 2900MB/sec sequential writes, the former sitting right at the practical ceiling of PCIe 3.0 x4. Random reads and writes are rated at 500K IOPS and 450K IOPS respectively. As always, the write figures reflect writes into the drive’s SLC cache, so sustained write throughput eventually tapers off once that cache fills.
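To put the random IOPS ratings next to the sequential figures, they can be converted to throughput. Kioxia’s figures as reported here do not state a transfer size, so the sketch below assumes 4 KiB random I/O, a common basis for such ratings; the helper name is purely illustrative.

```python
# Assumption: random IOPS ratings are for 4 KiB transfers (block size not stated).
BLOCK_SIZE_BYTES = 4096


def iops_to_mb_s(iops: int, block_size: int = BLOCK_SIZE_BYTES) -> float:
    """Convert an IOPS rating to throughput in MB/s (10^6 bytes per second)."""
    return iops * block_size / 1e6


rated = {"random read": 500_000, "random write": 450_000}
for name, iops in rated.items():
    print(f"{name}: {iops_to_mb_s(iops):.0f} MB/s")
```

Under that 4 KiB assumption, 500K IOPS works out to about 2048 MB/s and 450K IOPS to about 1843 MB/s, comfortably below the drive’s sequential ratings.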

As this is a DRAMless drive, there is no significant on-package caching/buffer layer to talk about. Instead, like its predecessor, Kioxia is relying on Host Memory Buffer (HMB) tech to improve the performance of their drive. HMB isn’t used to cache user data, but instead is used to cache mapping information about the drive’s contents in order to speed up access. Along with the latest generation of this tech, Kioxia has also updated their controller to support NVMe 1.4.
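Kioxia does not describe how its controller uses the HMB, so the following is only a generic sketch of why mapping data is what gets cached. It assumes a flat logical-to-physical (L2P) table with a 4-byte entry per 4 KiB page, a common textbook FTL layout; that puts a full map for 1TB at roughly 1GB, which is why DRAM-equipped drives commonly carry about 1GB of DRAM per TB. A DRAMless controller can instead keep a smaller, recently used slice of the map in host memory. The class and parameter names below are hypothetical.

```python
from collections import OrderedDict

ENTRY_BYTES = 4  # assumed size of one L2P entry
PAGE_BYTES = 4096  # assumed mapping granularity: one entry per 4 KiB page


def full_l2p_table_bytes(capacity_bytes: int) -> int:
    """Size of a flat L2P table covering the whole drive."""
    return capacity_bytes // PAGE_BYTES * ENTRY_BYTES


class HmbMapCache:
    """Hypothetical LRU cache of L2P entries held in host memory (the HMB)."""

    def __init__(self, hmb_bytes: int, nand_map: dict):
        self.capacity = hmb_bytes // ENTRY_BYTES  # number of entries that fit
        self.entries = OrderedDict()  # logical page -> physical page, LRU order
        self.nand_map = nand_map  # stands in for the full map stored on NAND
        self.hits = 0
        self.misses = 0

    def lookup(self, lba: int) -> int:
        """Return the physical page for a logical page, caching the entry."""
        if lba in self.entries:
            self.entries.move_to_end(lba)  # mark as most recently used
            self.hits += 1
            return self.entries[lba]
        self.misses += 1  # miss: the map entry must be fetched from NAND
        ppa = self.nand_map[lba]
        self.entries[lba] = ppa
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return ppa


print(full_l2p_table_bytes(10**12) / 1e9, "GB for a flat 1 TB map")
cache = HmbMapCache(hmb_bytes=16, nand_map={lba: lba + 1000 for lba in range(10)})
for lba in [1, 2, 1, 3, 4, 5, 1]:
    cache.lookup(lba)
print(cache.hits, cache.misses)
```

With room for only four entries, the access pattern above yields 2 hits and 5 misses. Each miss stands in for an extra NAND read that a larger host-memory cache would avoid, which is the performance lever HMB provides.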

Backing the new PCIe 4.0 controller is Kioxia’s BiCS5 generation of TLC NAND, which is a 112L design. BiCS5 has been shipping for a while now, so it’s very much a known quantity, but the time has finally come for it to trickle down into the BG series of drives. BiCS5 was a relatively modest increase in density over BiCS4, so it’s not too surprising here that Kioxia is keeping the largest BG5 configuration at 1TB, which would mean stacking 8 of the 1Tbit dies.
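The die count follows from bits-to-bytes arithmetic. The sketch below assumes every BG5 capacity uses the same 1Tbit die, which Kioxia has not confirmed for the smaller models. It also treats the nominal capacities as binary raw flash (1TB as 1024GB) and ignores spare area.

```python
DIE_GBIT = 1024  # assumed: one 1 Tbit BiCS5 TLC die, used for every capacity


def dies_needed(capacity_gb: int, die_gbit: int = DIE_GBIT) -> int:
    """Dies needed for a nominal capacity, treating GB as binary, no spare area."""
    total_gbit = capacity_gb * 8  # 8 bits per byte
    dies, remainder = divmod(total_gbit, die_gbit)
    return dies + (1 if remainder else 0)  # round up to whole dies


for label, gb in [("256 GB", 256), ("512 GB", 512), ("1 TB", 1024)]:
    print(f"{label}: {dies_needed(gb)} dies")
```

Under those assumptions, the 256GB, 512GB, and 1TB models would need 2, 4, and 8 dies respectively.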

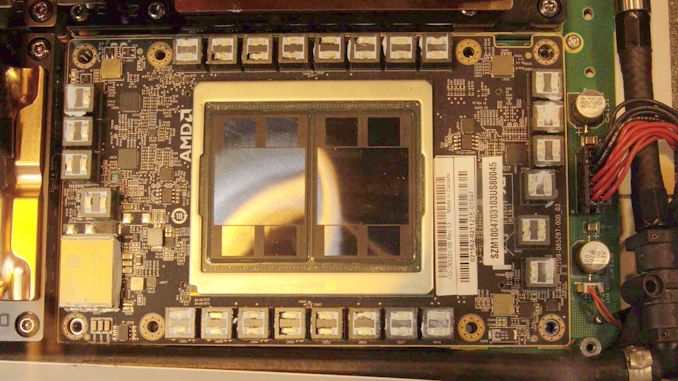

But perhaps the biggest change with the BG5 isn’t the specifications of the controller or the NAND on their own, but rather the fact that the two parts are separate to begin with. A staple of the BG series design has been the compact footprint enabled by stacking the memory and controller together in a single package. But from Kioxia’s supplied product photo, we can clearly see that the NAND and the controller are now separate packages. Kioxia made no mention of this change, so we can only speculate about whether it’s for simplicity of construction (no TSVs to the controller) or perhaps to cope with the heat put off by a PCIe 4.0 controller. Either way, it’s a big change in how the small drive is assembled.

As a result of this change, the BGA M.2 1620 form factor, which offered the single-chip drive as a solder-down package, has gone away. Instead, the smallest form factor is now the removable M.2 2230 version. The postage stamp-sized M.2 2230 form factor has long been the staple of the lineup, as it’s what we’ve seen in Microsoft’s Surface products and other thin-and-light designs over the years. Since that form factor isn’t changing, the use of multiple packages shouldn’t alter much for many OEMs. And for OEMs that need physically larger drives for compatibility reasons, Kioxia is also formally offering a 2280 version. A simple two-chip layout on such a large PCB is unremarkable, but it allows the BG5 to be easily dropped into systems that are designed to take (and typically use) 2280 drives.

As these are OEM drives, no pricing information is available. The drives are currently sampling to Kioxia’s customers, so expect to see them land in commercial products in 2022.

Source: AnandTech – Kioxia Updates M.2 2230 SSD Lineup With BG5 Series: Adding PCIe 4.0 and BiCS5 NAND