December is here, and with it come several technical summits ahead of the holiday break. The most notable of these this week is the annual RISC-V Summit, which is being put on by the Linux Foundation and brings together the numerous (and ever-increasing) parties involved in the open-source ISA to talk about the latest products and advancements in the RISC-V ecosystem. The summit always tends to feature some new product announcements, and this year is no different: Imagination Technologies is at the show to provide details on their first RISC-V CPU cores, along with announcing their intention to develop a full suite of CPU cores over the next few years.

The company, currently best known for their PowerVR GPU lineup, has been dipping their toes into the RISC-V ecosystem for the last couple of years with projects like RVfpga. More recently, this past summer the company revealed in an earnings call that they would be designing RISC-V CPU cores, with more details to come. Now at the RISC-V summit they’re providing those details and more, with the formal announcement of their Catapult family of RISC-V cores, as well as outlining a heterogeneous computing-centric roadmap for future development.

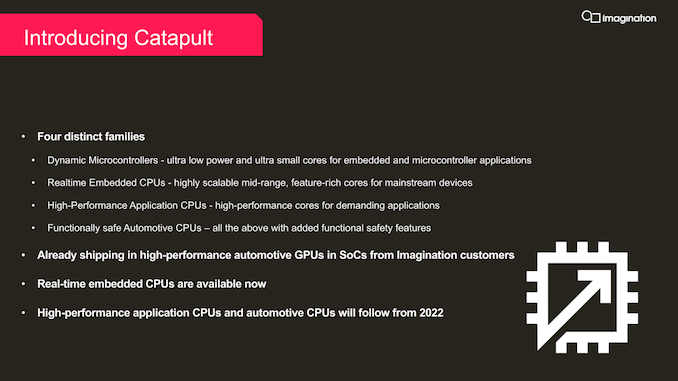

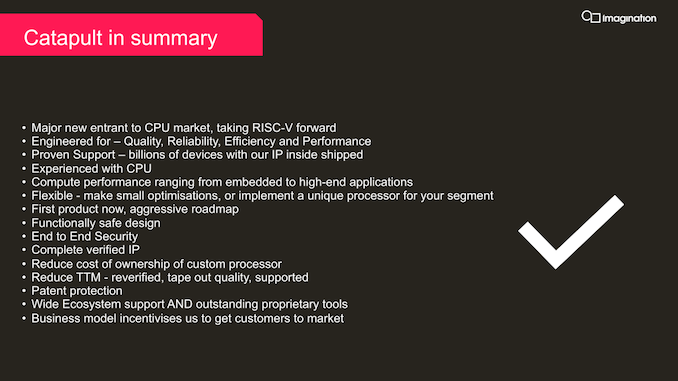

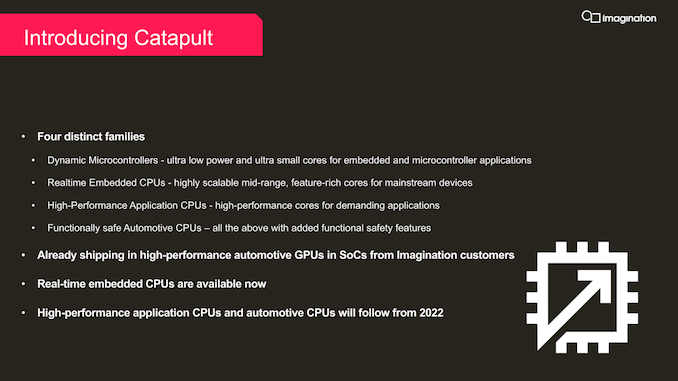

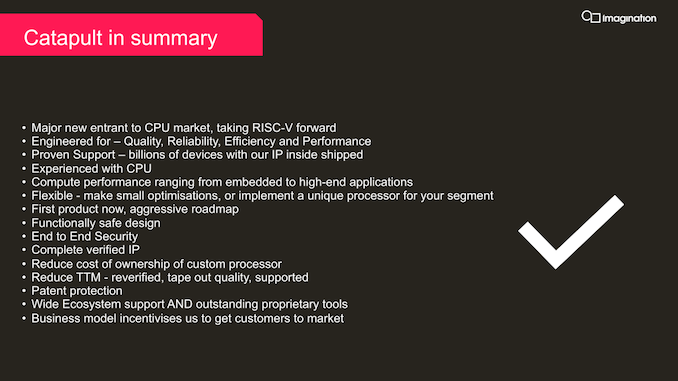

Starting from the top, Catapult is Imagination’s overarching name for a complete family of RISC-V CPU cores, the first of which are launching today. Imagination has designed (and is still designing) multiple microarchitectures in order to cover a broad range of performance/power/area (PPA) needs, and the Catapult family is slated to encompass everything from microcontroller-grade processors to high-performance application processors. All told, Imagination’s plans for the fully fleshed-out Catapult family look a lot like Arm’s Cortex family, with Imagination preparing CPU core designs for microcontrollers (Cortex-M), real-time CPUs (Cortex-R), high-performance application processors (Cortex-A), and functionally safe CPUs (Cortex-AE). Arm remains the player to beat in this space, so mirroring its product structure should help Imagination smooth the transition for any clients that opt to jump ship to Catapult.

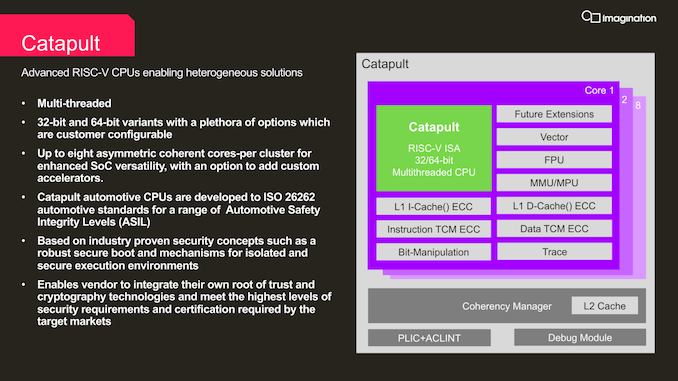

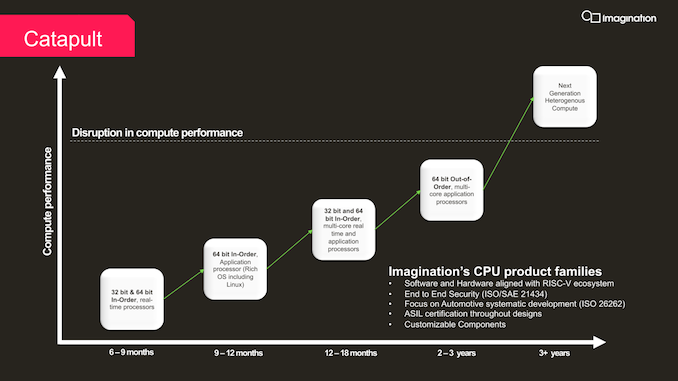

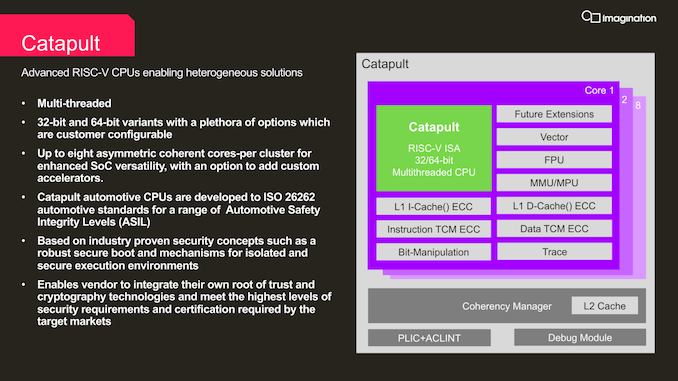

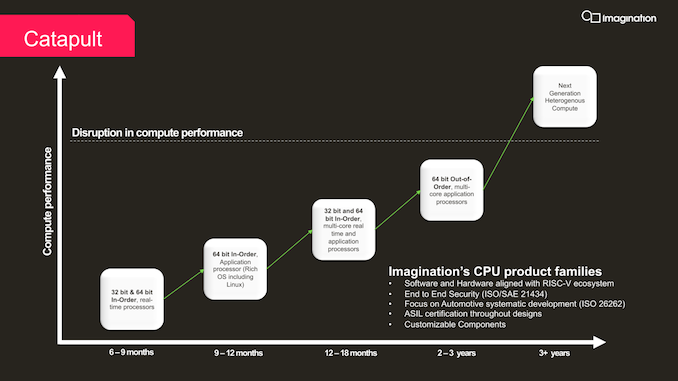

At present, Imagination has finished their first CPU core design, which is a simple, in-order core for 32-bit and 64-bit systems. The in-order Catapult core is being used for microcontrollers as well as real-time CPUs, and according to the company, Catapult microcontrollers are already shipping in silicon as part of automotive products. Meanwhile the real-time core is available to customers as well, though it’s not yet in any shipping silicon.

The current in-order core design supports up to 8 cores in a single cluster. The company didn’t quote any performance figures, though bear in mind that this is a simple processor meant for microcontrollers and other very low-power devices. Meanwhile, the core is available with ECC protection for both its L1 caches and its tightly coupled memory (TCM), and it supports some of RISC-V’s brand-new extensions, such as the Vector extension, with other extensions potentially added should customers ask for them.

Following the current in-order core, Imagination has essentially three more core designs on their immediate roadmap. For 2022 the company is planning to release an enhanced version of the in-order core as an application processor-grade design, complete with support for “rich” OSes like Linux. In 2023 that will be followed by another, even higher-performing in-order core for the real-time and application processor markets. Finally, the company is also developing a much more complex out-of-order RISC-V core design, which is expected in the 2023-2024 timeframe. The out-of-order Catapult would essentially be Imagination’s first take on delivering a high-performance RISC-V application processor, and, much like high-performance cores in the Arm space today, it has the potential to become the most visible member of the Catapult family.

Farther out still are the company’s plans for “next generation heterogeneous compute” designs. These would be CPU designs that go beyond current heterogeneous offerings – namely, just placing CPU, GPU, and NPU blocks within a single SoC – by more deeply combining these technologies. At this point Imagination isn’t saying much more, but they are making it clear that they aren’t just going to stop with a fast CPU core.

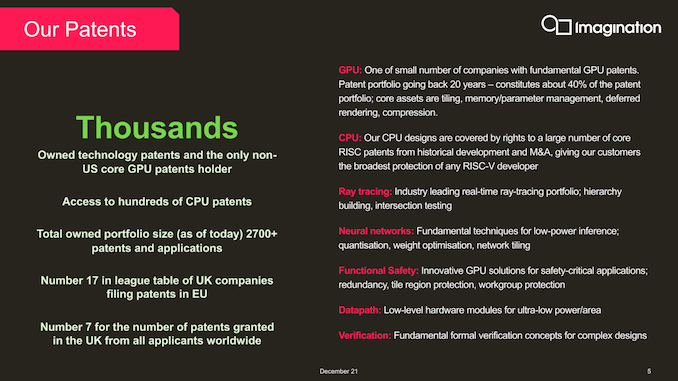

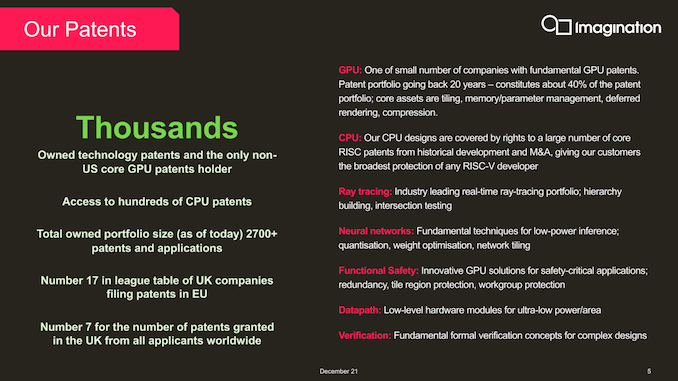

Overall, these are all clean-room designs for Imagination. While the company has long since sold off its Meta and MIPS CPU divisions, it still retains a lot of the engineering talent from those efforts, along with ownership of or access to a large number of patents in the field. So although they aren’t reusing anything directly from earlier designs, they are hoping to leverage their previous experience to build better IP sooner.

Of course, CPU cores are just one part of what it will take to succeed in the IP space; besides incumbent Arm, there are also multiple other players in the RISC-V space, such as SiFive, who are all vying for much of the same market. So Imagination needs to both differentiate themselves from the competition, and offer some kind of market edge to customers.

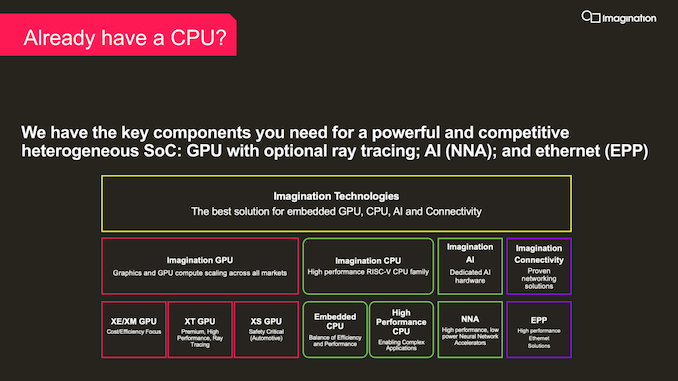

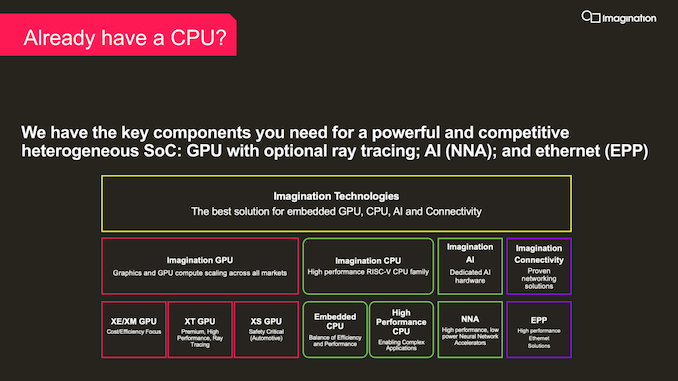

To that end, Imagination is going to be heavily promoting the possibilities for heterogeneous computing designs with their IP. Compared to some of the other RISC-V CPU core vendors, Imagination already has well-established GPU and NPU IP, so customers looking to put together something more than just a straight CPU will be able to tap into Imagination’s larger library of IP. This does put the company in more direct competition with Arm (which already has all of these things as well), but that very much seems to be Imagination’s goal here.

Otherwise, Imagination believes that their other big advantage in this space is the company’s history and location. As previously mentioned, Imagination has access to a significant number of patents, so clients who want to avoid extra patent licensing can take advantage of the fact that Imagination’s IP already comes indemnified against those patents. Meanwhile, for chip designers who are based outside of the US and are wary of geopolitical issues affecting ongoing access to IP, Imagination is naturally positioned as an alternative, since they aren’t based in the US either – and thus access to their IP can’t be cut off by the US.

Wrapping things up, with the launch of their Catapult family of RISC-V CPU IP, Imagination is laying out a fairly ambitious plan for the next few years. By leveraging both their previous experience building CPUs and their current complementary IP like GPUs and NPUs, Imagination has their sights set on becoming a major player in the RISC-V IP space – particularly when it comes to heterogeneous compute. Ultimately a lot will need to go right for the company before they can get there, but if they succeed, their diverse collection of IP would put them in a rather unique position among RISC-V vendors.

Source: AnandTech – Imagination Launches Catapult Family of RISC-V CPU Cores: Breaking Into Heterogeneous SoCs