Khronos this morning is taking the wraps off of Vulkan 1.3, the newest iteration of the group’s open and cross-platform API for graphics programming.

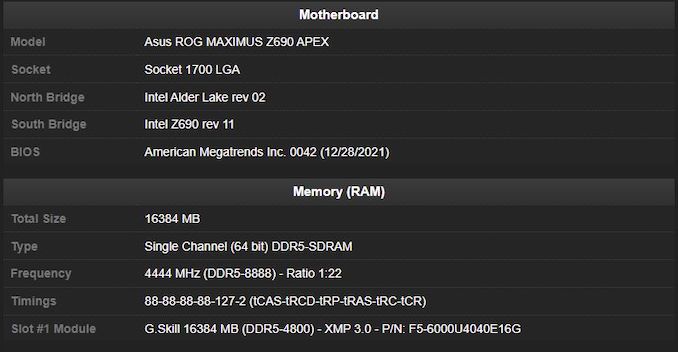

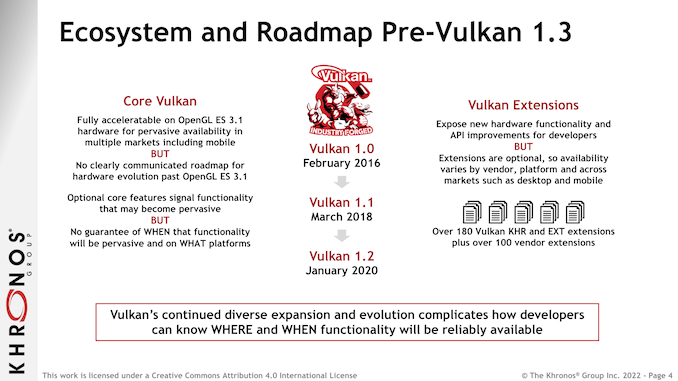

Vulkan 1.3 follows Khronos’s usual two-year release cadence for the API, and it comes at a critical juncture for the API and its future development. Vulkan has been a full and official specification since 2016, turning 6 years old this year. This has given the API plenty of time to mature and have its kinks worked out, as well as to be adopted by software and hardware developers alike. But it also means that with the core aspects of the API having been hammered out, where to go next has become less obvious and less harmonious. And with the API in use for everything from smartphones to high-end PCs, Vulkan is beginning to fragment at points thanks to the wide range of capabilities in devices.

As a result, for Vulkan 1.3, Khronos and its consortium members are taking aim at the future of the API, particularly from a development standpoint. Vulkan is still in a healthy place now, but in order to keep it that way, Khronos needs to ensure that Vulkan has room to grow with new features and functionality, all without leaving behind a bunch of perfectly good hardware in the process. Thankfully, this isn’t a new problem for the consortium – it’s something virtually every standard faces if it lives long enough to become widely used – so Khronos is hitting the ground running with some further refinements to Vulkan.

Vulkan 1.3 Core

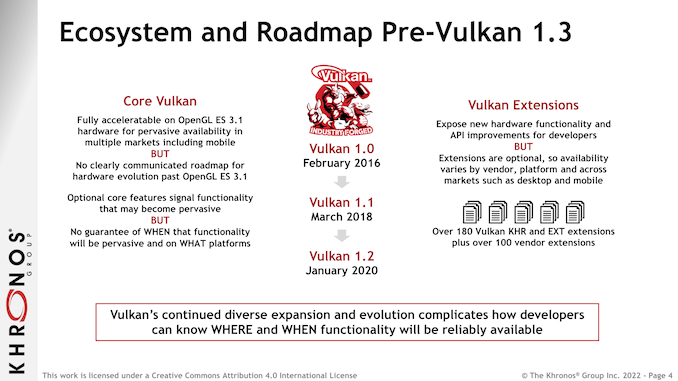

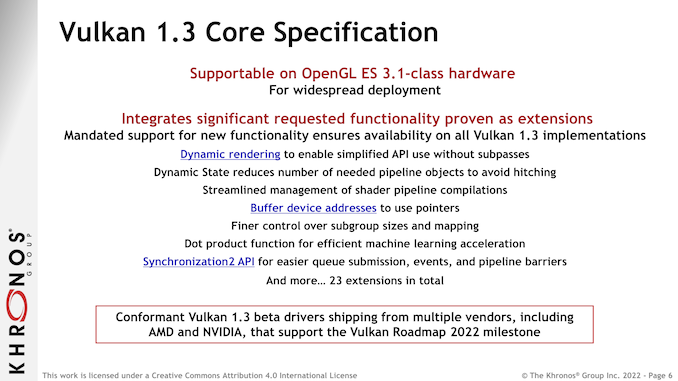

But before we get into Khronos’s fragmentation-fighting efforts, let’s first talk about what’s coming to the Vulkan 1.3 core specification. The core spec covers all of the features a Vulkan implementation is required to support, from the most basic smartphone to the most powerful workstation. As a result it has a somewhat narrow scope in terms of graphical features, but as the name implies, it’s the common core of the API.

As with previous versions of the spec, Khronos is targeting this to work on existing Vulkan-compliant hardware. Specifically, Vulkan 1.3 is designed to work on OpenGL ES 3.1 hardware, meaning that of the new features being rolled into the core spec, none of them can be beyond what ES 3.1 hardware can do.

Consequently, Vulkan 1.3’s core spec isn’t focused on adding new graphical features or the like. By design, graphical feature additions are handled by extensions. Instead, the 1.3 core spec additions are largely a quality-of-life update for Vulkan developers, with a focus on adding features that simplify some aspect of the rendering process or add more control over it.

Altogether, Khronos is moving 23 existing extensions into the Vulkan 1.3 core spec. Most of these extensions are very much inside-baseball fodder for graphics programmers, but there are a couple of highlights. These include the integer dot product function, which is already widely used for machine learning inference on GPUs, as well as support for dynamic rendering. These functions already exist as extensions – so many developers can use them and already do – but by moving them into the core spec, they are now required for all Vulkan 1.3 implementations, opening them up to a wider array of developers.
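To give a sense of why an integer dot product matters for inference workloads, here is a minimal Python sketch of the arithmetic involved: four signed 8-bit values are packed into each 32-bit word, multiplied lane by lane, and summed into an accumulator. This only illustrates the math, not the Vulkan or SPIR-V interface; the function names (`pack_int8x4`, `unpack_int8x4`, `dot_int8x4_acc`) and the lowest-byte-first packing order are assumptions made for this example.

```python
def pack_int8x4(lanes):
    """Pack four signed 8-bit integers into one 32-bit word, lowest byte first."""
    assert len(lanes) == 4 and all(-128 <= v <= 127 for v in lanes)
    word = 0
    for i, value in enumerate(lanes):
        word |= (value & 0xFF) << (8 * i)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for i in range(4):
        byte = (word >> (8 * i)) & 0xFF
        # Reinterpret the raw byte as a two's-complement signed value.
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dot_int8x4_acc(a, b, acc=0):
    """Dot product of two packed int8x4 words, added to an accumulator."""
    products = (x * y for x, y in zip(unpack_int8x4(a), unpack_int8x4(b)))
    return acc + sum(products)


# Hypothetical quantized weights and inputs, purely for illustration.
weights = pack_int8x4([-1, 2, -3, 4])
inputs = pack_int8x4([5, 6, 7, 8])
result = dot_int8x4_acc(weights, inputs, acc=10)
print(result)  # (-5 + 12 - 21 + 32) + 10 = 28
```

On a GPU the appeal is that the whole multiply-and-accumulate step can map to a single hardware instruction working on packed data, rather than the per-lane loop shown here.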

But arguably the single most important addition coming to Vulkan isn’t an extension being promoted into the core specification. Rather, it’s entirely new functionality, in the form of feature profiles.

Vulkan Profiles: Simplifying Feature Sets and Roadmaps

Up until now, Vulkan has not offered a concept of feature levels or other organizational grouping for additional feature sets. Beyond the core specification, everything in Vulkan is optional, all 280+ extensions. This means that for developers who are building applications that tap into features that go beyond the core spec – which has quickly become almost everything not written for a smartphone – there hasn’t been good guidance available on what extensions are supported on what platforms, or even what extensions are meant to go together.

The freedom to easily add extensions to Vulkan is one of the standard’s greatest strengths, but it’s also a liability if it’s not done in an organized fashion. And with the core spec essentially locked at the ES 3.1 level for the time being, this means that the number of possible and optional extensions has continued to bloom over the last 6 years.

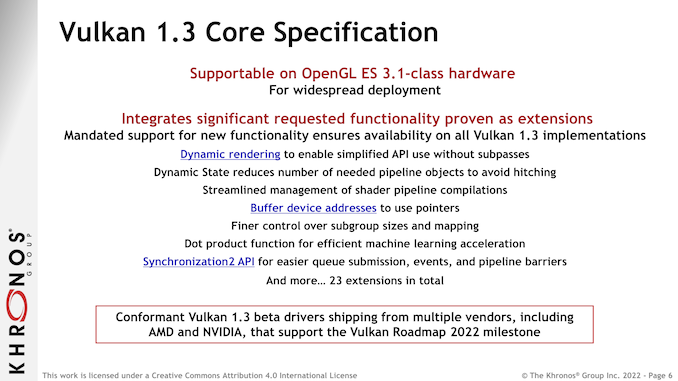

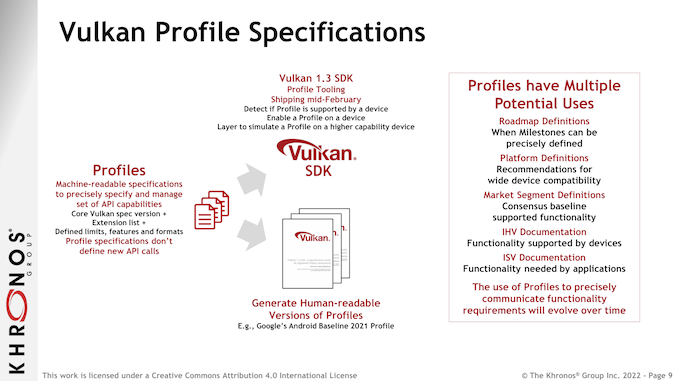

So in an effort to bring order to the potential chaos, as well as to create a framework for planning future updates, Khronos is adding profiles to the Vulkan standard.

Profiles, in a nutshell, are precisely defined lists of supported features and formats. Profiles don’t define any new API calls (that’s done by creating new extensions outright), so they are very simple conceptually. But, absent any other way to define feature sets, they are very important going forward for Vulkan.

The power of profiles is that they allow for 280+ extensions to be organized into a much smaller number of overlapping profiles. Rather than needing to check to see if a specific PC video card supports a given extension, for example, a developer can just code against a (theoretical) “Modern Windows PC” profile, which in turn would contain all of the extensions commonly supported by current-generation PCs. Or alternatively, a mobile developer could stick to an Android-friendly profile, and quickly see what features they can use that will be supported by most devices.

At a high level, profiles are the solution to the widening gap between baseline ES 3.1 hardware, and what current and future hardware can do. Rather than risk fragmenting the Vulkan specification itself (and thus ending up with an OpenGL vs. OpenGL ES redux), profiles allow Vulkan to remain whole while giving various classes and generations of hardware their own common feature sets.

In line with the open and laissez-faire nature of the Khronos consortium, profiles are not centrally controlled and can be defined by anyone, be it hardware devs, software devs, potato enthusiasts, or even Khronos itself. Similarly, whether a hardware/platform vendor wants to support a given profile is up to them; if they do, then they will need to make sure they expose the required extensions and formats. So this won’t be as neat and tidy as, say, Direct3D feature levels, but it will still be functional while offering the flexibility the sometimes loose consortium needs.

That said, Khronos’s expectation is that we should only see a limited number of widely used profiles, many of which they’ll be involved with in some fashion. So 280 extensions should not become 280 profiles, at least as long as the hardware vendors can find some common ground across their respective platforms.

Finally, on a technical level, it’s worth noting that profiles aren’t just a loose list of features; they have technical requirements of their own. Specifically, profiles are built as JSON lists, which along with providing a means to check profile compatibility, also open the door to things like generating human-readable versions of profiles. It’s a small distinction, but it will help developers quickly implement profile support in a generic fashion, relying on the specific JSON lists to guide their programs the rest of the way.

Profiles are also not limited to being built upon Vulkan 1.3. Despite being introduced at the same time as 1.3, they are actually a super-feature of sorts that can work with previous Vulkan versions, as all of the heavy lifting is being done at the application and SDK level. So it will be possible to have a profile that only calls for a Vulkan 1.0 implementation, for example.
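As a rough illustration of what an application-side compatibility check against a JSON-defined profile could look like, here is a minimal Python sketch. The JSON layout, the profile name `EXAMPLE_mobile_baseline`, its contents, and the `missing_requirements` helper are all invented for this example; this is not the schema Khronos uses, and the device descriptions are hard-coded stand-ins for what an application would query from a real driver. Note that the example profile only asks for Vulkan 1.0, in line with profiles not being tied to 1.3.

```python
import json

# Hypothetical profile definition; the layout is illustrative only.
PROFILE_JSON = """
{
  "name": "EXAMPLE_mobile_baseline",
  "min_api_version": [1, 0, 0],
  "extensions": ["VK_KHR_swapchain", "VK_KHR_maintenance1"],
  "formats": ["VK_FORMAT_ETC2_R8G8B8_UNORM_BLOCK",
              "VK_FORMAT_ASTC_4x4_UNORM_BLOCK"]
}
"""


def missing_requirements(profile, device):
    """Return everything the profile requires that the device lacks."""
    missing = []
    minimum = tuple(profile["min_api_version"])
    if tuple(device["api_version"]) < minimum:
        missing.append("api_version >= " + ".".join(map(str, minimum)))
    # Set differences give the required items the device does not expose.
    missing += sorted(set(profile["extensions"]) - set(device["extensions"]))
    missing += sorted(set(profile["formats"]) - set(device["formats"]))
    return missing


profile = json.loads(PROFILE_JSON)

# Stand-in device descriptions (assumed values, not real hardware data).
newer_device = {
    "api_version": [1, 1, 0],
    "extensions": ["VK_KHR_swapchain", "VK_KHR_maintenance1"],
    "formats": ["VK_FORMAT_ETC2_R8G8B8_UNORM_BLOCK",
                "VK_FORMAT_ASTC_4x4_UNORM_BLOCK"],
}
older_device = {
    "api_version": [1, 0, 0],
    "extensions": ["VK_KHR_swapchain"],
    "formats": ["VK_FORMAT_ETC2_R8G8B8_UNORM_BLOCK"],
}

for label, device in (("newer", newer_device), ("older", older_device)):
    gaps = missing_requirements(profile, device)
    status = "supported" if not gaps else "missing " + ", ".join(gaps)
    print(f"{label}: {profile['name']} {status}")
```

Because the check runs entirely in the application, nothing in it depends on the driver knowing what a profile is; the device only has to expose the individual extensions and formats.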

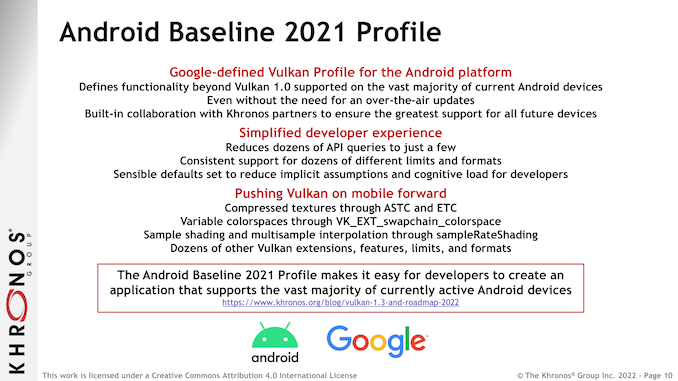

Google’s Android Baseline 2021 Profile

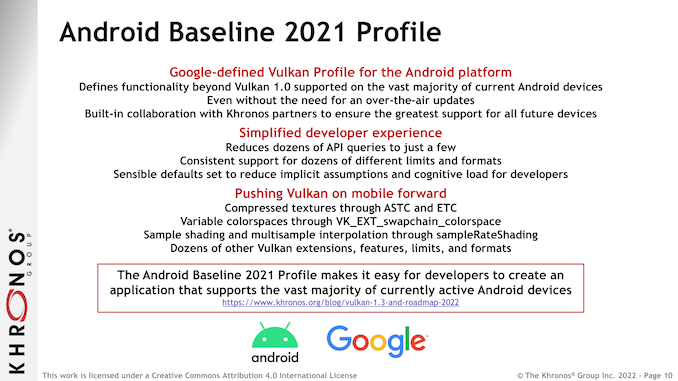

The first profile out the door, in turn, comes from Google. The Android author is defining a Vulkan profile for their market that, at a high level, will help to better define and standardize what features are available on most Android devices.

Interestingly, Google’s profile is built upon Vulkan 1.0, and not a newer version of Vulkan. From what we’re told, there are features in the Vulkan 1.1 core specification that are still not widely supported by mobile devices (even with the ES 3.1 hardware compatibility goal), and as a result, any kind of common progression with Vulkan on Android has become stalled. So since Google can’t get Vulkan 1.1/1.2/1.3 more widely supported across Android devices, the company is doing the next best thing and using a profile to define a bunch of common post-1.0 extensions that are supported by the current crop of devices.

The net result of this is the Android Baseline 2021 Profile. By establishing a baseline profile for the ecosystem, Google is aiming to not only make newer functionality more accessible to developers, but to simplify graphics programming in the process. Essentially, the Baseline 2021 Profile is a fix for existing fragmentation within the Android ecosystem by establishing a reasonable set of commonly supported features and formats.

Of particular note, Google’s profile calls for support for both ETC and ASTC texture compression formats. Sample shading and multi-sample interpolation are on the list as well. Given that this is a baseline specification, there aren’t any high-concept next-generation features contained within the profile. But over time, that will change. Google has already indicated that they will be developing a 2022 profile for later this year, and will continue to keep adding further baseline profiles as the situation warrants.

Finally, Google’s use of profiles is also a solid example of taking advantage of the application-centric nature of profiles. According to Google, developers will be able to use profiles on the “vast majority” of Android devices without the need for over-the-air updates for those devices. Since profiles are handled at the application/SDK level, all the device itself needs to present are the necessary Vulkan extensions, which in accordance with a baseline specification are already present and supported in the bulk of Android devices.

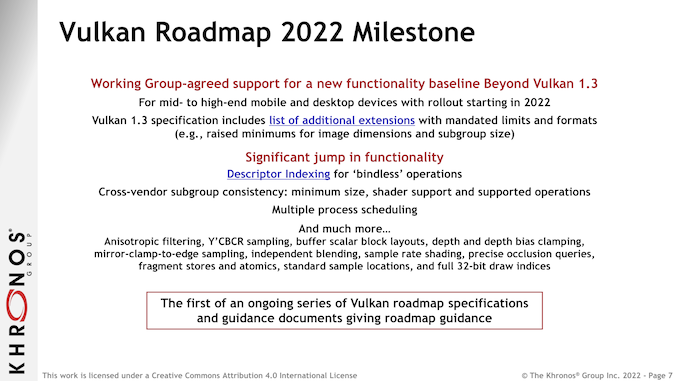

Vulkan Roadmap 2022: Making Next-Generation Features Common Features

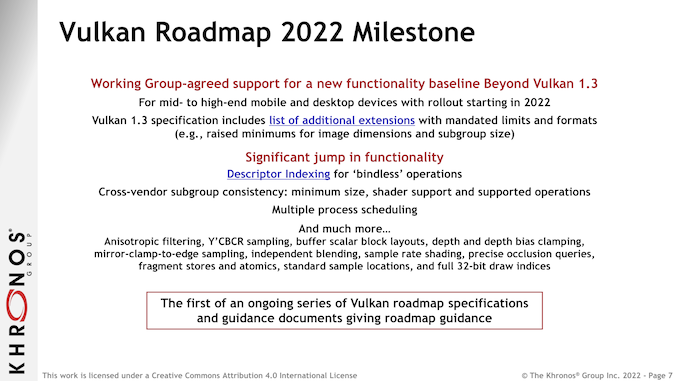

Last but certainly not least, the other big development to stem from the addition of profiles is a renewed path forward for developing and adopting new features for next-generation hardware. As mentioned previously, Vulkan has until now lacked a way to define feature sets for more advanced (non-core) features, which profiles are finally resolving. As a result, Khronos and the hardware vendors finally have the tools they need to establish baselines for not just low-end hardware, but high-end hardware as well.

In other words, profiles will provide the means to finally create some common standards that incorporate next-generation hardware and the latest programming features.

Because of Vulkan core’s ES 3.1 hardware requirements, there is a significant number of advanced features that have remained optional extensions. This includes everything from ray tracing and sample rate shading to more basic features like anisotropic filtering, multiple processor scheduling, and bindless resources (descriptor indexing). To be sure, these are all features that developers have had access to for years as extensions, but lacking profiles, there has been no assurance for developers that a given feature is going to be in all the platforms they want to target.

To that end, Khronos and its members have developed the Vulkan Roadmap 2022, which is both a roadmap of features they want to become common and a matching profile to go with the roadmap. Conceptually, the Vulkan Roadmap 2022 feature set can be thought of as the inverse of Google’s baseline profile; instead of basing a profile around low-end devices, Roadmap 2022 excises low-end devices entirely in order to focus on common features found in newer hardware.

Roadmap 2022 is being based around features found in mid-end and high-end devices, mobile and PC alike. So while it significantly raises the bar in terms of features supported, it’s still not leaving mobile devices behind entirely – nor would it necessarily be ideal to do so. In practice, this means that Roadmap 2022 is slated to become the common Vulkan feature set for mid-end and better devices across the hardware spectrum.

Meanwhile, adoption of Roadmap 2022 should come very quickly since it’s based around features and formats already supported in existing hardware. AMD and NVIDIA have already committed to enabling support for the necessary features in their Vulkan 1.3 drivers, which are out today in beta and should reach maturity in a couple of months. In fact, the biggest hold-up to using profiles is Khronos itself – the Vulkan SDK won’t get profile support until next month.

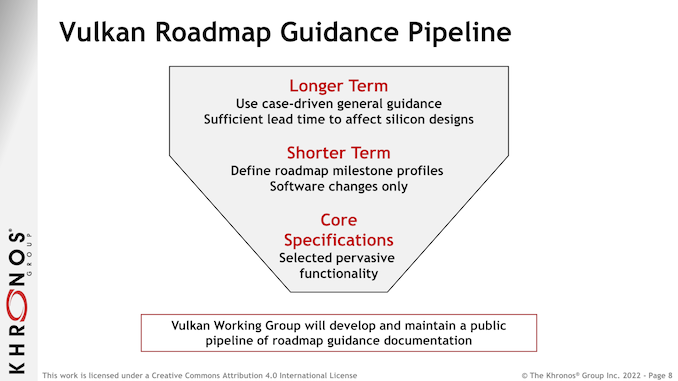

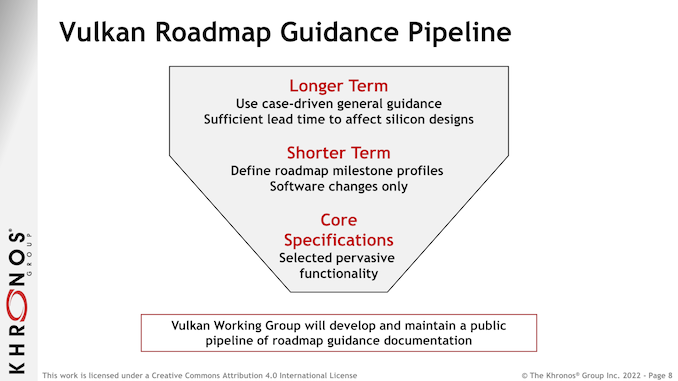

Finally, according to Khronos, Roadmap 2022 is just the start of the roadmapping process for the group. After getting caught up with current-generation hardware with this year’s profile, the group will be developing longer-term roadmaps for Vulkan profiles. Specifically, the group wants to get far enough ahead of the process that profiles are being planned out years in advance, when the next generation of hardware is still under development. This would enable Khronos to have a complete pipeline of profiles in the works, giving hardware and software developers a roadmap for the next couple of years of Vulkan features.

Ultimately, having a roadmap will serve to help keep the development of advanced features for Vulkan on track. Freed from having to support the oldest of hardware, the Vulkan group members will be able to focus on developing and implementing new features, knowing exactly when support is expected/planned/desired to arrive. Up until now the planning process has been weighed down by the lack of a timeline for making new features a requirement (de jure or otherwise), so having a formal process to standardize advanced features will go a long way towards speeding up and simplifying that process.

Source: AnandTech – Vulkan 1.3 Specification Released: Fighting Fragmentation with Profiles