Intel has announced two new x86-64 instruction set extensions aimed at improving both general-purpose and AVX-based vector performance across its hybrid architecture of performance (P) and efficiency (E) cores. The first is Intel Advanced Performance Extensions, or Intel APX. It is designed to bring generational, instruction set-driven improvements to load, store, and compare instructions without significantly increasing power consumption or the silicon die area of the CPU cores.

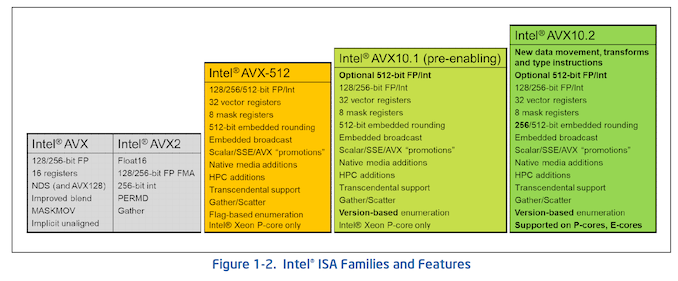

Intel has also published a technical paper detailing AVX10, which enables both performance (P) and efficiency (E) cores to support a converged AVX10/256-bit instruction set going forward. This means that Intel’s future hybrid desktop, server, and workstation chips will be able to support multiple vector lengths (128, 256, and, where the core allows, 512-bit) with a common instruction set across all of their cores.

Intel Advanced Performance Extensions (APX): Going Beyond AVX and AMX

Intel has published details of its new Advanced Performance Extensions, or APX for short. The idea behind APX is to give software access to more registers and to improve general-purpose performance and efficiency on x86. Doubling the general-purpose registers (GPRs) from 16 to 32 lets compilers keep more values in registers, with Intel claiming 10% fewer loads and 20% fewer stores when code is compiled for APX versus the same code compiled for Intel 64, which is Intel’s implementation of the x86-64 architecture.

The idea behind doubling the number of GPRs from the 16 of x86-64 to the 32 available with Intel APX is that more data can be held in registers, avoiding the need to read and write out to the different levels of cache and memory. Having more GPRs should also, in theory, mean fewer accesses to slower storage such as DRAM, which takes longer and uses more power.

Although Intel has effectively abandoned MPX (Memory Protection Extensions), APX puts the space MPX left behind to use. The new APX general-purpose registers are XSAVE-enabled, which means they can be saved and restored automatically by XSAVE and XRSTOR sequences during context switches. Intel also states that, by default, this doesn’t change the size or layout of the XSAVE area, because the new registers occupy the space left behind by the now-defunct MPX registers.
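
The arithmetic behind that reuse can be sketched in a few lines of C. The offsets and sizes below are assumptions for illustration: they are the values CPUID leaf 0DH typically reports for the two MPX components in the standard (non-compacted) XSAVE layout, and the helper names are hypothetical. Real software should always query CPUID rather than hard-code them.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed layout of the two MPX state components in the standard
 * (non-compacted) XSAVE area. Illustrative values only; real code
 * should read them from CPUID leaf 0DH. */
enum {
    MPX_BNDREGS_OFFSET = 960,  /* BND0-BND3 bound registers */
    MPX_BNDREGS_SIZE   = 64,   /* 4 registers x 16 bytes */
    MPX_BNDCSR_OFFSET  = 1024, /* MPX configuration and status */
    MPX_BNDCSR_SIZE    = 64,
    APX_EGPR_COUNT     = 16,   /* the 16 additional GPRs, R16-R31 */
    APX_EGPR_BYTES     = 8     /* each GPR is 64 bits wide */
};

/* Bytes needed to save the 16 additional APX registers. */
static size_t apx_egpr_state_size(void) {
    return (size_t)APX_EGPR_COUNT * APX_EGPR_BYTES;
}

/* Bytes occupied by the two MPX components combined. */
static size_t mpx_state_size(void) {
    return (size_t)MPX_BNDREGS_SIZE + MPX_BNDCSR_SIZE;
}

/* Returns 1 when the new registers fit in the space MPX used. */
static int apx_fits_in_mpx_area(void) {
    /* The two MPX components must be contiguous to be reused as one block. */
    assert(MPX_BNDREGS_OFFSET + MPX_BNDREGS_SIZE == MPX_BNDCSR_OFFSET);
    return apx_egpr_state_size() <= mpx_state_size();
}
```

Under these assumptions, sixteen 8-byte registers need 128 bytes, which is exactly what the two MPX components occupied, so the rest of the XSAVE layout does not need to move.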

Another essential feature of Intel’s APX is its support for three-operand instruction formats, in which the destination register is specified separately from the two source operands. Traditional two-operand x86 integer instructions overwrite one of their sources with the result, so a compiler has to insert an extra register move whenever that source is still needed afterward. APX provides the three-operand forms by allowing legacy integer instructions to use EVEX, the 4-byte prefix that previously extended VEX for AVX-512, which reduces the need for those additional move instructions. APX also introduces new instructions, including predicated (conditional) loads and a novel 64-bit absolute jump instruction. As a result, Intel claims APX-compiled code requires 10% fewer instructions than code compiled for previous ISAs.
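
To see why a separate destination operand saves instructions, consider a rough counting model in C. This is a sketch under simplifying assumptions, not a description of any real compiler: the `alu_op` type and `count_instructions` helper are hypothetical, and the assembly in the comments is illustrative pseudo-assembly rather than exact APX syntax.

```c
#include <stddef.h>

/* One integer ALU operation of the form dst = a OP b.
 * first_source_live_after is nonzero when the value in `a`
 * is still needed after the operation. */
typedef struct {
    int first_source_live_after;
} alu_op;

/* Count the instructions needed for a sequence of operations.
 *
 * Two-operand form overwrites its first operand, so a live
 * source has to be copied first:
 *     mov dst, a      ; preserve a
 *     add dst, b      ; dst = dst + b
 *
 * Three-operand form names the destination separately:
 *     add dst, a, b   ; dst = a + b, a is left untouched
 */
static unsigned count_instructions(const alu_op *ops, size_t n,
                                   int three_operand) {
    unsigned count = 0;
    for (size_t i = 0; i < n; i++) {
        count += 1; /* the operation itself */
        if (!three_operand && ops[i].first_source_live_after) {
            count += 1; /* extra mov to keep the source alive */
        }
    }
    return count;
}
```

In this model, a sequence of three operations in which two of the first sources stay live costs five instructions in two-operand form and three in three-operand form. Real savings depend on the code and on how well the register allocator can already avoid the copies.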

Intel AVX10: Pushing AVX-512 through 256-bit and 512-bit Vectors

One of the most significant updates to Intel’s consumer-focused instruction sets since the introduction of AVX-512 is Intel’s Advanced Vector Extension 10 (AVX10). On the surface, it looks to bring forward AVX-512 support across all cores featured in their heterogeneous processor designs.

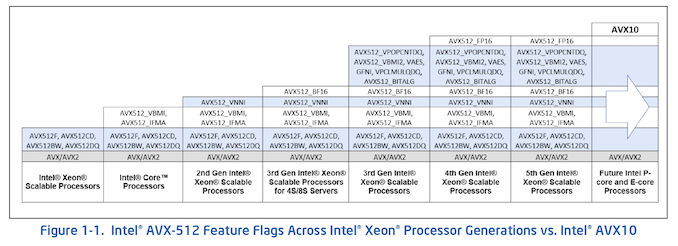

The most significant change AVX10 brings compared to AVX-512 is that it carries the AVX-512 instructions over to future heterogeneous core designs. In current hybrid processors, such as the Core i9-12900K and the Core i9-13900K, AVX-512 is disabled. Currently, AVX-512 is exclusively supported on Intel Xeon performance (P) cores.

At its core, AVX10 means that consumer desktop chips will gain support for the full AVX-512 feature set, albeit at a vector length of up to 256 bits. Although performance (P) cores could in theory support 512-bit wide vectors if Intel desires (Intel has so far only confirmed support for up to 256-bit vectors), efficiency (E) cores are restricted to 256-bit vectors. Nevertheless, the chip as a whole will be able to run the complete set of AVX-512 instructions across all of its cores, whether they are fully-fledged performance cores or lower-powered efficiency cores.

Touching on performance, within the AVX10 technical paper, Intel states the following:

- Intel AVX2-compiled applications, re-compiled to Intel AVX10, should realize performance gains without the need for additional software tuning.

- Intel AVX2 applications sensitive to vector register pressure will gain the most performance due to the 16 additional vector registers and new instructions.

- Highly-threaded vectorizable applications are likely to achieve higher aggregate throughput when running on E-core-based Intel Xeon processors or on Intel® products with performance hybrid architecture.

Intel further claims that applications already using 256-bit vectors, as an example, will maintain similar performance when recompiled for AVX10 at the same (iso) 256-bit vector length. However, the true potential of AVX10 comes to light with the larger 512-bit vector length, which promises the best AVX10 performance attainable. This goes hand in hand with new AVX10 libraries and enhanced tool support, enabling application developers to compile newer AI and scientific-focused codes for optimal benefit. Additionally, preexisting libraries can be recompiled for AVX10/256 compatibility and, where possible, further optimized to exploit the larger vector units for better throughput.

The first phase of AVX10 (AVX10.1) will be introduced for early software enablement and will support the subset of Intel’s AVX-512 instruction sets, with Granite Rapids (6th Gen Xeon) performance (P) cores being the first cores to be forward compatible with AVX10. It is worth noting that AVX10.1 will not enable 256-bit embedded rounding. As such, AVX10.1 will serve as an introduction to AVX10, enabling forward compatibility and implementing the new versioning and enumeration scheme.
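
The versioning scheme replaces the long list of individual AVX-512 feature flags with a version number plus a set of supported vector lengths. A minimal sketch of how software might decode it is shown below. The bit positions are an assumption based on our reading of Intel’s AVX10 documentation (a support bit in CPUID leaf 07H, sub-leaf 1, EDX bit 19, and version and vector-length fields in EBX of leaf 24H) and should be verified against the current specification. The `avx10_info` type and `decode_avx10` function are hypothetical names, and the raw register values are passed in so the logic stays portable.

```c
#include <stdint.h>

/* Decoded view of the AVX10 enumeration. Hypothetical helper type. */
typedef struct {
    int      supported;       /* nonzero if AVX10 is enumerated */
    unsigned version;         /* e.g. 1 for AVX10.1, 2 for AVX10.2 */
    unsigned max_vector_bits; /* 128, 256, or 512; 0 if unknown */
} avx10_info;

/* Decode raw CPUID register values.
 * leaf7_sub1_edx: EDX from CPUID leaf 07H, sub-leaf 1.
 * leaf24_ebx:     EBX from CPUID leaf 24H, sub-leaf 0.
 * Bit positions are assumptions; check them against the spec. */
static avx10_info decode_avx10(uint32_t leaf7_sub1_edx,
                               uint32_t leaf24_ebx) {
    avx10_info info = {0, 0, 0};

    /* Assumed: bit 19 signals that AVX10 is supported at all. */
    if (((leaf7_sub1_edx >> 19) & 1u) == 0) {
        return info;
    }
    info.supported = 1;

    /* Assumed: low byte of EBX holds the AVX10 version number. */
    info.version = leaf24_ebx & 0xFFu;

    /* Assumed: bits 16, 17, 18 flag 128-, 256-, 512-bit support. */
    if (leaf24_ebx & (1u << 18)) {
        info.max_vector_bits = 512;
    } else if (leaf24_ebx & (1u << 17)) {
        info.max_vector_bits = 256;
    } else if (leaf24_ebx & (1u << 16)) {
        info.max_vector_bits = 128;
    }
    return info;
}
```

With this layout, a single version comparison plus a vector-length check would be enough for a dispatcher to choose a code path, instead of testing a separate flag for each AVX-512 sub-extension.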

Intel’s 6th Gen Xeons, codenamed Granite Rapids, will enable AVX10.1, and future chips will bring fully-fledged AVX10.2 support, with AVX-512 also being supported to maintain compatibility with legacy instruction sets and the applications compiled for them. It is worth noting that despite Intel AVX10/512 including all of Intel’s AVX-512 instructions, applications compiled for Intel AVX-512 with vector lengths limited to 256-bit are not guaranteed to work on an AVX10/256 processor due to differences in the supported mask register width.
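
The mask width difference follows from simple arithmetic: a mask register needs one bit per vector element. The short C sketch below works this out. The function name is hypothetical, and the link to specific processors is our interpretation rather than something Intel spells out in this form.

```c
#include <assert.h>

/* Number of mask bits needed to cover every element of a vector:
 * one bit per element. */
static unsigned mask_bits_needed(unsigned vector_bits,
                                 unsigned element_bits) {
    /* The vector must divide evenly into elements. */
    assert(element_bits != 0 && vector_bits % element_bits == 0);
    return vector_bits / element_bits;
}
```

For byte-sized elements, a 256-bit vector needs a 32-bit mask, while a 512-bit vector needs a 64-bit mask. An AVX-512 binary that restricts itself to 256-bit vectors could plausibly still contain instructions that operate on 64-bit masks, which is one way such a binary might fail on a processor that only implements the narrower mask width.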

While AVX10.1 is more of a transitional phase for the instruction set, AVX10.2 is where AVX10 should start to show its effect on performance and efficiency, at least for code that uses the instructions associated with it. Developers will need to recompile their preexisting code to work with AVX10, as new AVX10/256 processors are not guaranteed to run AVX-512 binaries as previous AVX-512 processors would have. Intel is finally looking toward the future.

The introduction of AVX10 effectively supersedes AVX-512. Once AVX10 is widely available through Intel’s future product releases, there’s technically no need to target AVX-512 going forward. One challenge this presents is that software developers who have compiled libraries specifically for 512-bit wide vectors will need to recompile that code, as previously mentioned, to work properly with the 256-bit wide vectors that AVX10 supports across all cores.

While AVX-512 isn’t going anywhere as an instruction set, it’s worth highlighting that AVX10 is backward compatible, which is an essential aspect of supporting instruction sets with various vector widths such as 128, 256, and 512-bit where applicable. Developers can recompile code and libraries for the broader transition and convergence to the AVX10 unified instruction set going forward.

Intel is committing to supporting a maximum vector size of at least 256-bit on all Intel processors in the future. Still, it remains to be seen which SKUs (if any) and the underlying architecture will support full 512-bit vector sizes in the future, as this is something Intel hasn’t officially confirmed at any point.

The meat and veg of Intel’s new AVX10 instruction set will come into play when AVX10.2 is phased in, officially bringing 256-bit vector instruction support across all cores, whether performance or efficiency. This also marks the inclusion of 128-bit, 256-bit, and 512-bit integer divisions across both the performance and efficiency cores, and as such, each core will support vector extensions up to the width its specification allows.

Source: AnandTech – Intel Unveils AVX10 and APX Instruction Sets: Unifying AVX-512 For Hybrid Architectures