Researchers at OpenAI have created a neural network called DALL-E (named after Pixar’s WALL-E and artist Salvador Dalí) that creates images from text captions. As long as the concept is expressible in natural language, the AI can spit something out.

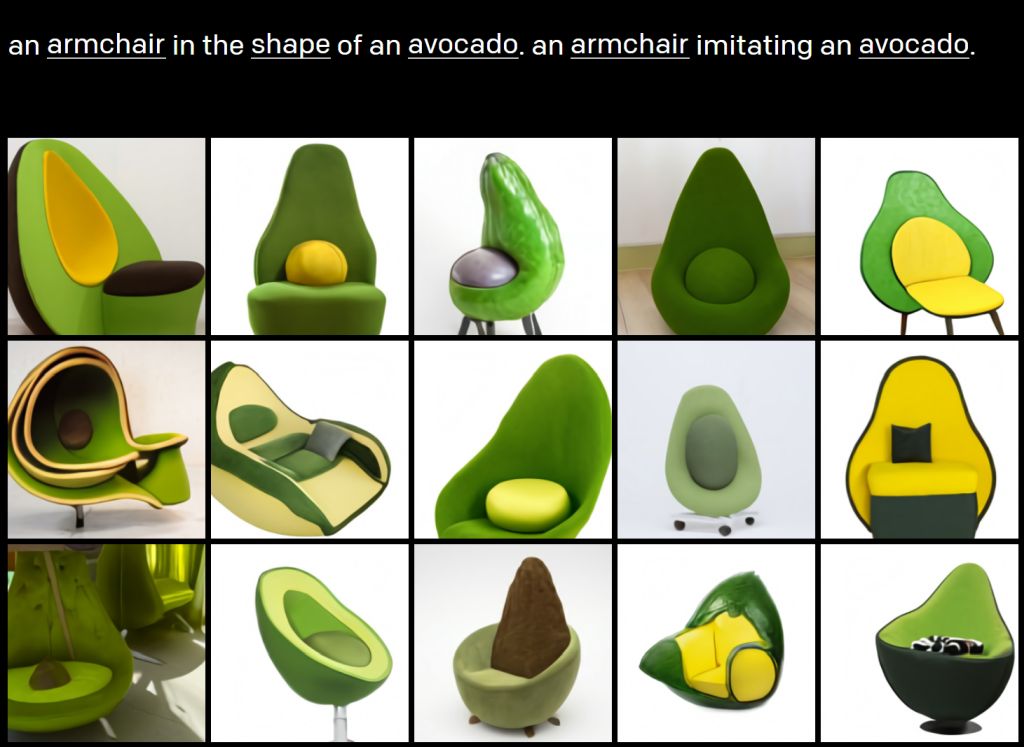

In OpenAI’s own words: “DALL-E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text-image pairs. We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.”

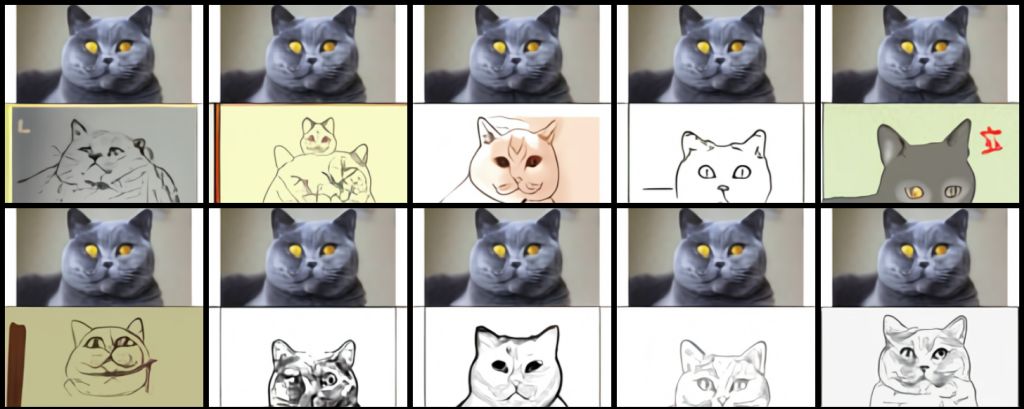

It’s worth taking a look at OpenAI’s site and checking out their examples because this is impressive and insane. You basically throw in a prompt like “A crocodile sitting in a car drinking boba” and the neural network will generate exactly that. Or at least an approximation of that. It’s obviously not perfect, but the generated images are amazingly accurate. If I told you to picture “the exact same cat on the top as a sketch on the bottom” you’d be a little confused, but then you see what DALL-E spits out and it seems obvious.

This damn thing is better at creating images from the prompts than my own imagination. I don’t even know what the intended purpose of this technology is, but I guarantee within 24 hours of its release it’ll be used for porn.

Source: Geekologie – DALL-E is a neural network that generates images from text captions