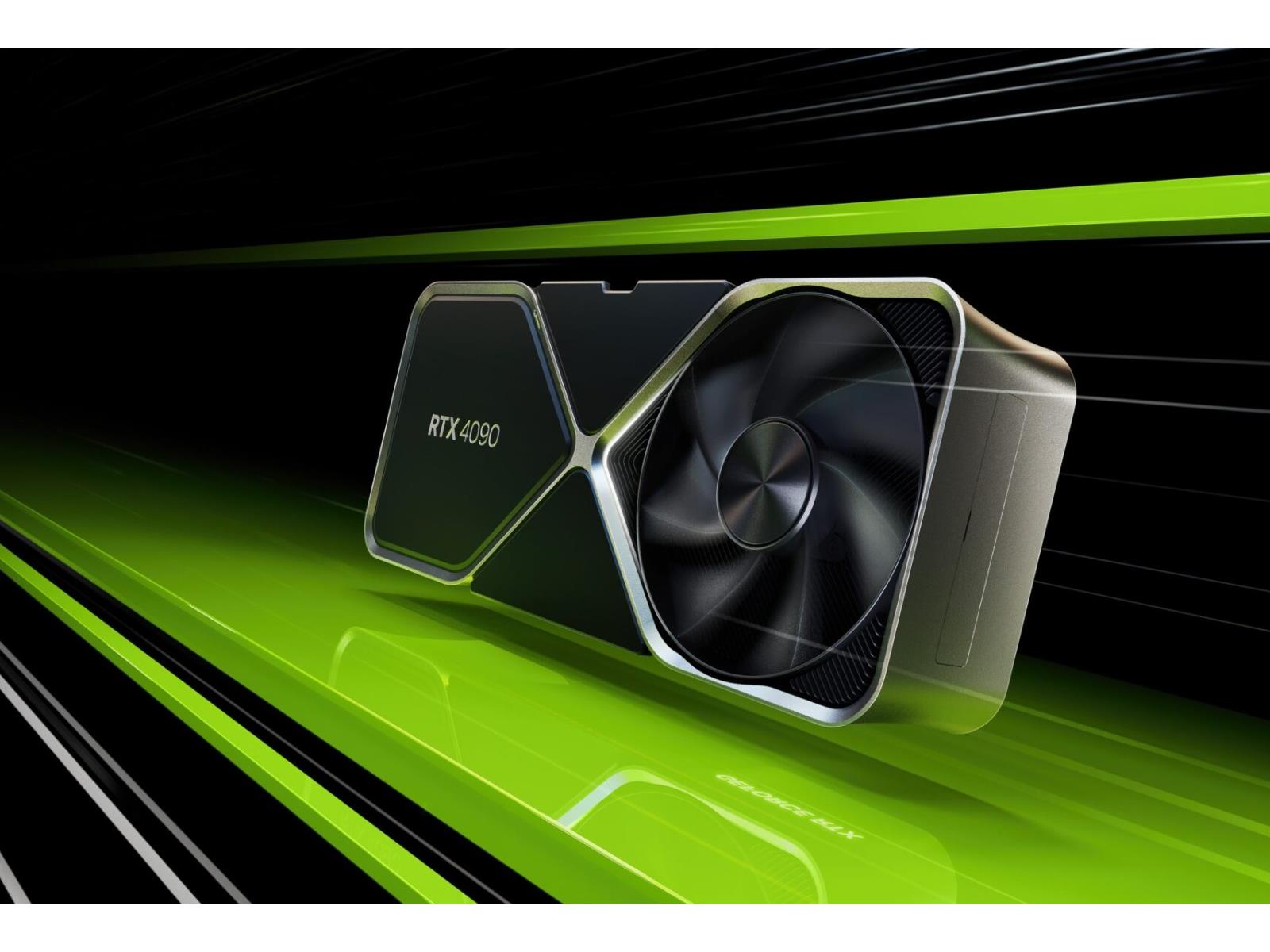

NVIDIA will be releasing an update to TensorRT-LLM, its open-source software for AI inference, that will allow desktops and laptops with RTX GPUs carrying at least 8GB of VRAM to run it. The update will also add support for more large language models. However, only users running Windows 11 will be able to take advantage of it.

Source: Hot Hardware – NVIDIA’s TensorRT AI Model Now Runs On All GeForce RTX 30 And 40 GPUs With 8GB+ Of RAM

Prime-WoW